As one of the top 20 universities in the UK, the University of Sussex offers undergraduate courses that could be pivotal to the future of VR. Its teaching stretches across neuro, cognitive and biomedical sciences, among other areas, and we could well see a new generation of VR recruits come out of its campus. But I’m not visiting the grounds to talk about that; I’m here to talk about tech.

Tucked away in a quiet corner of the campus is the Sussex Innovation Centre. Quieter still is the office that facial expression tracking company Emteq occupies inside that building. CEO Graeme Cox tells me the small team has the “Friday feeling” as he closes the office door behind me, though it’s difficult to imagine the friendly engineers and developers who greet me being in any other frame of mind. For a company working in one of VR’s most vital areas, Emteq makes for a remarkably calm environment.

Perhaps that’s a reflection of the confidence the team has in faceteq, the platform it’s building for emotion tracking in VR headsets and other devices. Rather than using cameras to monitor and replicate your facial expressions, faceteq uses a sensor-based system that lines the area of a headset that touches your face. The system reads electrical muscle activity, heart rate, skin response and other indicators, then translates them into the corresponding expression.

This is an important area of VR, especially as the industry begins to shift toward social experiences. Facebook was on stage at Oculus Connect 3 earlier in the month to demonstrate cartoonish avatars with animated faces that try to replicate the user’s emotions and reactions. It’s also an area still in its very early stages; an hour after that demo, Oculus Chief Scientist Michael Abrash told the audience that perfect virtual humans, in which emotion tracking will play a big part, were a long way off.

Emteq’s work is early too. The current iteration of the tech is just over a year old, but it already shows plenty of promise, and a much-improved version is right around the corner.

“Understanding human context, emotional context, and physical context and how they’re formed [and having] VR systems, augmented reality systems, AI systems that can do it with the human is really important,” Cox says. “Moving through VR in AR and having that emotional response built into it, that’s our mission.”

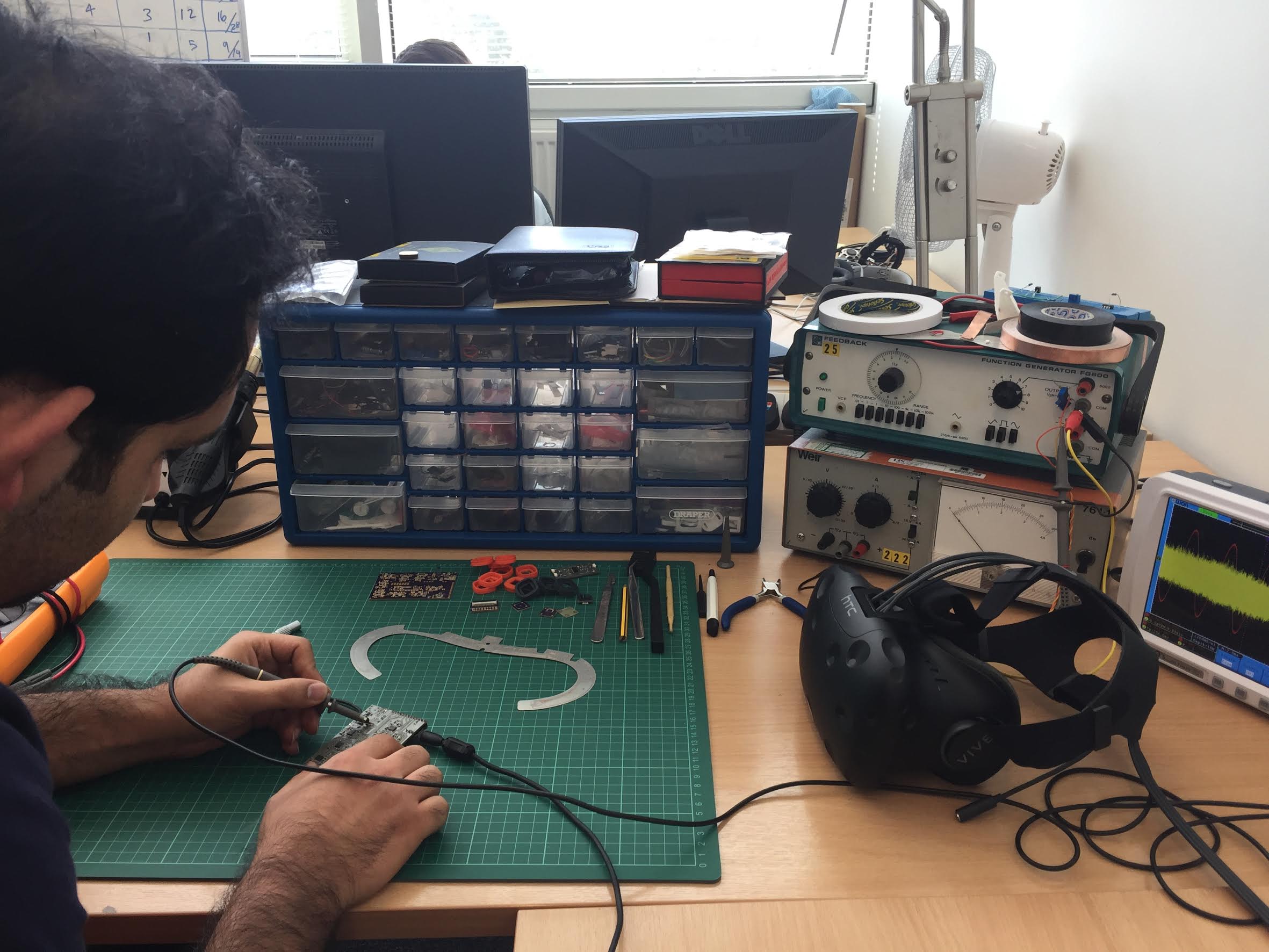

Today the company has a rough demo to show me, both hands-on and hands-off. The team is showing the system on the Oculus Rift DK2, though behind me is Electrical Engineer Dr Mohsen Fatoorechi’s desk, where he’s currently piecing together a prototype for the CV1 that will be completed very soon.

“Once it’s finished it will just be a plug-in insert,” Cox explains.

He also plans a Vive version: a simple lining that replaces the Velcro-attached foam insert that comes with the kit. The company is looking into Daydream as well.

I first watch Fatoorechi use the faceteq-modified DK2 with an avatar that’s been pre-calibrated to his expressions. He starts off in a basic environment. To the right, a debug menu of sorts gives real-time feedback on the sensors’ readings. To the left is a large set of facial expressions, which the user selects and calibrates one at a time. The process involves performing a specific action, such as winking with the right or left eye, three times over so that the system can memorize the resulting sensor feedback with machine learning.

Each expression takes about 30 seconds to calibrate, but the team is working to streamline the process.

Fatoorechi uses winks to select options on a menu, which does away with the seconds spent staring at an option when using gaze controls. In the first demonstration, he sits in front of a virtual woman who mirrors his expressions. It’s not the most refined showcase; every time the woman blinks, her jagged face looks as if she’s in agony, but polish isn’t the point of the demonstration at this stage. Though buggy, the system responds well to Fatoorechi’s reactions, at least as far as I can tell when much of his face is obscured by a headset.

The two other demos I see present some unique use cases for facial tracking that I hadn’t yet thought of. One is a cinema screening room. Here, Chief Scientific Officer Charles Nduka asks me to imagine a Hollywood preview screening in which movie studios gather audience feedback data from the sensors. It’s one example of what Cox calls “objective emotional analytics”, which could also be used in research projects or game testing. Or you could watch content with your friends and see how they react. Imagine laughing together at a comedy or both jolting in shock at a jump scare.

The other demo is a little more unusual. Emteq has created its own version of a Facebook wall in which, just by looking at posts, you can assign emojis based on your expression. Facebook has been heading toward more specific emotional responses for a while, and this system presents a more honest and immediate way of relaying that information, even if that might not always be ideal for maintaining friendships.

Following that demo, I get to try the tech for myself. It’s worth noting that I have long hair, which causes a little interference with this early prototype. I first calibrate a right wink, a fairly smooth and pain-free process, though the system gives me a little trouble with a shocked, eyebrows-raised expression, and I have to abandon calibrating an open-mouthed smile because it simply won’t register. Again, this is early prototype tech.

With what I have calibrated, I try the demo with the woman. Impressively, she quickly replicates my winks, as well as the position of my eyebrows and forehead wrinkles, to imitate some of my expressions. The system is recalling the impulses I gave off while making certain expressions during calibration, then producing the relevant emotion or action.

Ideally, faceteq would reach a stage where it recognizes impulses and replicates expressions on the fly, without the calibration process. Cox points out that the company’s method of tracking can pick up on minute muscle movements that camera tracking can’t detect, but if you need to calibrate expressions that small, where do you stop? How many variations of muscle impulses and reactions are enough for a flawless virtual human?

Hopefully Emteq can refine the process to the point where this isn’t an issue, which is exactly what the company is working toward.

“It’s a system built on data,” Nduka says. “The more data it gets, the more intelligent it becomes.”

I’m looking forward to seeing the system improve in the coming months. The group is also working on a form of eye tracking using electrooculography, which picks up changes in the eye’s natural electrical potential as it moves and which, according to Cox, isn’t a “high-precision” solution. It won’t be precise enough to enable important techniques like foveated rendering, but it will be able to read both small and large eye movements and the general direction of your gaze. That could add another compelling layer to what’s already here.

But Emteq is already putting its current tech to use. It’s being used in a treatment app for people with facial palsy, a form of facial paralysis. Faceteq can also be paired with glasses to give you key information in everyday scenarios, such as registering your weariness when you’re driving on too little sleep.

The company is currently in the process of raising seed money, though Cox says it’s “pretty well funded” from a research standpoint. For a possible consumer release of its VR tech, it sees a range of options, including the aforementioned inserts for headsets.

“We’ve got an exciting road ahead,” Cox says. “There’s still plenty to do, obviously, but we’re able to demonstrate some pretty interesting stuff already, and by the time we get [to] Q1 next year, we’re going to have a fully working system that can do some really quite surprising stuff.”