NOTE: the images in this article were pulled from a third-party unlisted video that surfaced online, despite SIGGRAPH’s no-recording policy during the talks. UploadVR does not take responsibility for the video and is only sharing the data now that it has leaked. At SIGGRAPH’s request, we have not included a link to the video in this report.

An informative SIGGRAPH talk with several VR pioneers, called “The Renaissance of VR: Are We Going to Do it Right This Time?”, brought to light the many outstanding issues associated with the proliferation of VR into the mainstream. It also revealed a new project that Jaron Lanier and a group of research students are working on.

During that discussion, Lanier showed a surprise documentation video of a social experience he is leading called Comradery, for which his team ‘hacked’ together a mixed reality headset codenamed “Reality Mashers.” The project looks to solve productivity problems through networked group collaboration in three dimensions.

The team consists primarily of doctoral candidate interns who developed Comradery at Microsoft Research in the summer of 2015. They studied social, multi-user applications of mixed reality/augmented reality and demonstrated the potential of rapidly prototyping equipment for mixed reality. In keeping with the hacker/maker mentality, the entire lab where the project emerged was started from scratch and put together in only two months, so the project’s rapid prototyping extends to the lab itself.

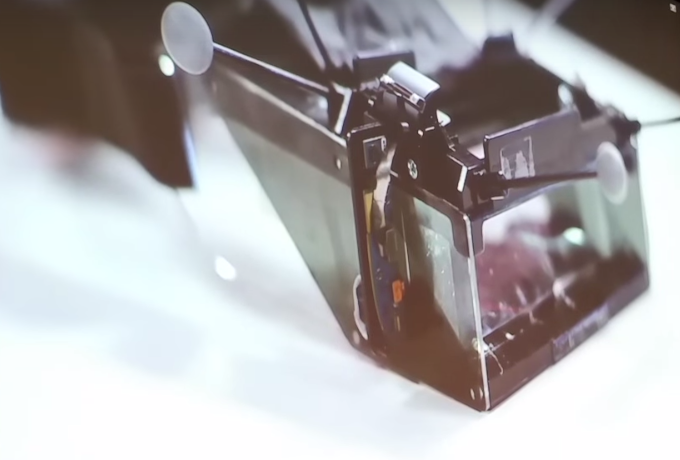

The headsets are known as “Reality Mashers” and currently exist in many forms. The requirements for this multi-user mixed reality experience included a low-latency wireless coordination architecture and a field of view wide enough for people to see augmentations. The underlying technology is reminiscent of another Microsoft product that needs no introduction, the HoloLens, except that the field of view (FOV) of Lanier’s project is much larger. The FOV of these “Reality Mashers,” as Lanier says in the documentation video, “exceeds 60 degrees in all cases.” We are assuming he means 60 degrees horizontally. We are not sure what the vertical FOV is, but it is reportedly greater than the HoloLens’ regardless.

The Reality Mashers’ display is described as sometimes being a smartphone and at other times a display attached to a gaming laptop in a backpack. The headset is always untethered, which makes it a mobile solution. Lanier states that the trackers are sometimes OptiTrack systems but that the team uses other platforms as well. Some of the Reality Mashers also have Leap Motion sensors attached, most likely for gesture recognition.

The optics were created by Josh Hudman, Senior Optical Engineer at Microsoft Research. Lanier also gave a shout-out to the person who designed the 3D-printed frame and provided mechanical engineering for the project. It is hard to tell exactly who Lanier mentions, but after a bit of digging, a likely candidate is Patrick Therien, Hardware Lab Manager and Principal Mechanical Engineer at Microsoft Research.

Other members of the team include (but are not limited to):

- 1) Andrea Stevenson Won – Stanford University

- 2) Victor Mateevitsi – University of Illinois at Chicago

- 3) Gheric Speiginer – Georgia Institute of Technology

- 4) Kishore Rathinavel – University of North Carolina at Chapel Hill

- 5) Andrzej Banburski – Perimeter Institute for Theoretical Physics

- 6) Judith Amores – MIT Media Lab

- 7) Xavier Benavides – MIT Media Lab

- 8) Lior Shapira

In the video, the smartphone displays can be seen projecting images onto some sort of reflective material that looks like glass. This suggests that the phones are attached to the top of the headsets and angled down. Virtual content is streamed at a low resolution instead of being rendered in the headset, which keeps the augmentations dynamic. Interestingly, the sides of the “glasses” remain open, allowing external light to enter.

Another requirement for this system was real-time volumetric and feature sensing of the room’s contents and the participants’ bodies. Real-time volumetric and skeletal data is generated by an array of Kinect sensors, which accurately track each user’s presence in the space. The microphone arrays in the Kinect sensors also generate a spatial map of sound energy in the room. This information is further processed by a propagation simulation algorithm that takes the volumetric room data into account. This allows the system to augment the environment based on audio signals, which makes for a spectacular visual depiction of sound waves.

The video documentation also shows some experiments with input. For instance, a tracked physical 3D cube is combined with an additional controller to manipulate a 4D hypercube. The experience shows many 3D interpretations of mathematical objects, which could potentially lead to collaborative learning applications in the future.
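For readers curious how a 4D hypercube can be shown in a 3D space at all, here is a minimal Python sketch of one common approach: rotate the hypercube's vertices in a plane that involves the fourth axis, then perspective-project them down to 3D. The video does not explain how Lanier's team renders the hypercube, so everything here is an assumption for illustration, including the function names, the choice of rotation plane, and the `viewer_w` distance.

```python
import itertools
import math


def tesseract_vertices():
    """Return the 16 vertices of a hypercube with coordinates in {-1, +1}."""
    return [tuple(v) for v in itertools.product((-1.0, 1.0), repeat=4)]


def tesseract_edges(vertices):
    """Return index pairs of vertices that differ in exactly one coordinate."""
    edges = []
    for i, j in itertools.combinations(range(len(vertices)), 2):
        differing = sum(1 for a, b in zip(vertices[i], vertices[j]) if a != b)
        if differing == 1:
            edges.append((i, j))
    return edges


def rotate(point, axis_a, axis_b, angle):
    """Rotate a 4D point in the plane spanned by two coordinate axes."""
    p = list(point)
    c, s = math.cos(angle), math.sin(angle)
    a, b = p[axis_a], p[axis_b]
    p[axis_a] = c * a - s * b
    p[axis_b] = s * a + c * b
    return tuple(p)


def project_to_3d(point, viewer_w=3.0):
    """Perspective-project a 4D point to 3D along the w axis.

    viewer_w is a hypothetical viewer position on the w axis; it must be
    larger than any w coordinate of the rotated shape (at most 2 here).
    """
    x, y, z, w = point
    scale = viewer_w / (viewer_w - w)
    return (x * scale, y * scale, z * scale)
```

In a setup like the one described, the angle passed to `rotate` for the x-w plane (axes 0 and 3) could come from the additional controller, while the tracked physical cube could drive the ordinary 3D orientation. That mapping is a guess, not something the video confirms.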

Obviously, this is only a prototype, but it shows the early potential of a socially networked experience. With an industrial AR headset about to hit the market in the form of Microsoft’s HoloLens, more and more people are going to become acclimated to AR on a larger scale.

However, much will still be left to be desired. For example, the HoloLens has already been criticized for its small FOV, so seeing a system with a much larger FOV within Microsoft Research is a relief. The technology will only get better from here. Consider this a proof of concept for now, and watch Microsoft closely to see what it does next.