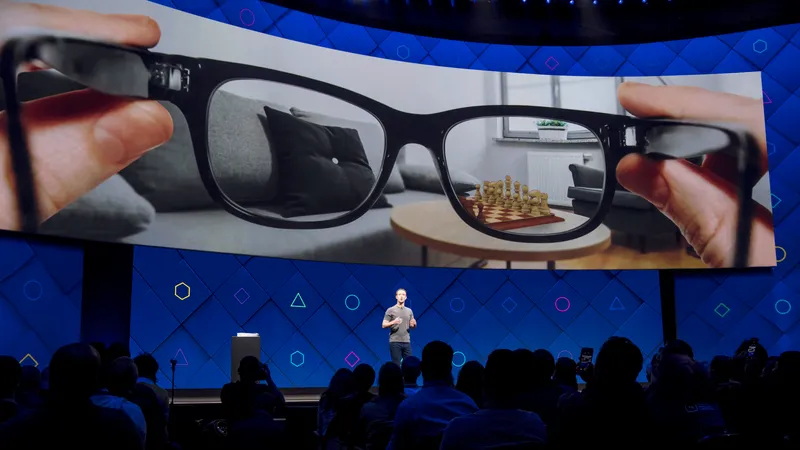

Meta's latest AI research system lets headsets and glasses understand your room's layout and furniture.

Meta says the system, called SceneScript, uses the same underlying technique as large language models (LLMs). But instead of predicting the next language fragment, it predicts architectural and furniture elements within a 3D point cloud, the same kind of capture that headsets already generate to enable standalone positional tracking.

The output is a series of primitive 3D shapes that represent the basic boundaries of the given furniture or element.
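As a rough illustration of what "a series of primitive 3D shapes" could look like once a model has produced it, here is a minimal sketch that decodes a flat token sequence into labeled boxes. The token layout (`box`, a label, then six numbers for center and size in meters) and the names `Box` and `decode` are assumptions made up for this example, not Meta's actual output format.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Hypothetical primitive: a labeled box with center and full size in meters."""
    label: str
    center: Vec3
    size: Vec3


def decode(tokens: List[str]) -> List[Box]:
    """Turn a flat token sequence into boxes.

    Assumed layout per shape: 'box', a label, then six numbers
    (center x, y, z followed by size x, y, z).
    """
    boxes = []
    i = 0
    while i < len(tokens):
        if tokens[i] != "box":
            raise ValueError(f"unexpected token {tokens[i]!r} at position {i}")
        fields = tokens[i + 1 : i + 8]
        if len(fields) != 7:
            raise ValueError("truncated box description")
        nums = [float(x) for x in fields[1:]]
        boxes.append(Box(fields[0], tuple(nums[:3]), tuple(nums[3:])))
        i += 8
    return boxes


# A made-up "predicted" sequence describing a table and a sofa.
predicted = "box table 1.0 0.375 2.0 1.6 0.75 0.8 box sofa 3.0 0.4 0.5 2.0 0.8 0.9".split()
scene = decode(predicted)
```

The point of the sketch is only that a sequence model can describe geometry the way it describes text: one token at a time, with a small grammar on top that turns the tokens back into shapes.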

Meta's SceneScript in action

Quest 3 is capable of generating a raw 3D mesh of your room, and can infer the positions of your walls, floor, and ceiling from this 3D mesh. But the headset doesn't currently know which shapes within this mesh represent more specific elements like doors, windows, tables, chairs, and sofas. Quest headsets do let users manually mark out these objects as simple rectangular cuboids if they want, but since this is optional and arduous, developers can't rely on users having done so.

If a technology like SceneScript were integrated into Quest 3's mixed reality scene setup, developers could automatically place virtual content on or around specific furniture elements. They could replace just your windows with portals, or your chair with the seat of a vehicle, or change your sofa into a sandbagged barricade. These things are possible today by asking the user to mark the furniture out manually, or sometimes with handcrafted algorithms interpreting the scene mesh, but SceneScript could make it seamless and automatic.
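To make the developer side concrete, here is a minimal sketch of how an app might use labeled boxes to decide where virtual content goes. The `LabeledBox` type, the label strings, and the y-up coordinate convention are all assumptions for illustration; this is not the Quest scene API.

```python
from typing import List, NamedTuple, Tuple

Vec3 = Tuple[float, float, float]


class LabeledBox(NamedTuple):
    """Hypothetical scene element: label, box center, and full size in meters (y is up)."""
    label: str
    center: Vec3
    size: Vec3


def top_anchors(boxes: List[LabeledBox], label: str) -> List[Vec3]:
    """Return the top-center point of every box carrying the given label."""
    return [
        (b.center[0], b.center[1] + b.size[1] / 2, b.center[2])
        for b in boxes
        if b.label == label
    ]


room = [
    LabeledBox("table", (1.0, 0.375, 2.0), (1.5, 0.75, 0.75)),
    LabeledBox("window", (0.0, 1.5, 2.0), (0.125, 1.0, 1.5)),
    LabeledBox("sofa", (3.0, 0.5, 0.5), (2.0, 1.0, 1.0)),
]

# Where to spawn a virtual object so it rests on the table surface.
table_spots = top_anchors(room, "table")

# Which boxes to swap for portals.
windows = [b for b in room if b.label == "window"]
```

With labels available automatically, the same two lines of filtering would work in any room without asking the user to mark anything out first.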

Apple Vision Pro already gives developers a series of crude 2D rectangles representing the surface of seats and tables, but doesn't yet provide a 3D bounding box. Interestingly, the RoomPlan API on iPhone Pro can do 3D bounds, but that API isn't available in visionOS.

Technology like SceneScript could also have huge potential for AI assistants in future headsets and Meta's eventual AR glasses. The company gives the example of questions like “Will this desk fit in my bedroom?” or “How many pots of paint would it take to paint this room?”, as well as commands like “Place the [AR/MR app] on the large table”.
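Questions like these reduce to simple arithmetic once the room's dimensions are known. The sketch below shows one way an assistant might answer them; the function names, the 25 m² coverage per pot, and the two-coat default are illustrative assumptions, not figures from Meta.

```python
import math
from typing import Tuple


def fits(item: Tuple[float, float], free_space: Tuple[float, float]) -> bool:
    """True if an item's footprint (width, depth) fits a free floor rectangle.

    Allows rotating the item by 90 degrees. All values are in meters.
    """
    item_w, item_d = item
    free_w, free_d = free_space
    return (item_w <= free_w and item_d <= free_d) or (
        item_d <= free_w and item_w <= free_d
    )


def paint_pots(
    width: float,
    depth: float,
    height: float,
    opening_area: float = 0.0,      # doors and windows to skip, in square meters
    coverage_per_pot: float = 25.0,  # assumed square meters covered by one pot
    coats: int = 2,                  # assumed number of coats
) -> int:
    """Estimate how many pots are needed to paint the four walls of a box-shaped room."""
    wall_area = 2 * (width + depth) * height - opening_area
    return math.ceil(wall_area * coats / coverage_per_pot)


# "Will this desk fit in my bedroom?" for a 1.4 m x 0.7 m desk and a 1.0 m x 1.5 m gap.
desk_fits = fits((1.4, 0.7), (1.0, 1.5))

# "How many pots of paint?" for a 4 m x 3 m room, 2.5 m high, with 3 square meters of openings.
pots = paint_pots(4.0, 3.0, 2.5, opening_area=3.0)
```

The hard part is not this calculation but obtaining reliable wall, door, and window dimensions in the first place, which is the piece a scene understanding system would supply.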

For now, though, Meta is describing SceneScript purely as research, not a near-term product feature update. While this technology may eventually come to Quest 3, there's no suggestion of that happening any time soon.