Mixed reality is one of the most impressive uses of virtual reality technology we’ve seen so far. By using a green screen and an inventive camera setup, users can composite the game world around footage of the player, as if they were actually standing inside the VR experience itself. This not only looks great in video, but also does a wonderful job of communicating what it’s like inside the headset.

Back in October, Owlchemy Labs, the developers behind the critically acclaimed and commercially successful Job Simulator and the upcoming Rick and Morty VR game, announced their plans for iterating and improving on mixed reality capture technology. Today, they’ve announced a follow-up to that work, which includes dynamic lighting, automatic green screen bounding, and transparency features.

UploadVR reached out to Owlchemy Labs CEO Alex Schwartz about these new features and how they will work with non-Owlchemy games and applications. “It can be dropped into any app with zero integration and will work for any 3D content in Unity,” Schwartz said. “At this moment we’re looking for people to try it out in a closed beta and the licensing model is yet to be announced.”

You can apply for the Owlchemy Labs mixed reality private beta and find more information in the company’s full blog post.

[gfycat data_id=”LastDifferentAtlanticblackgoby”]

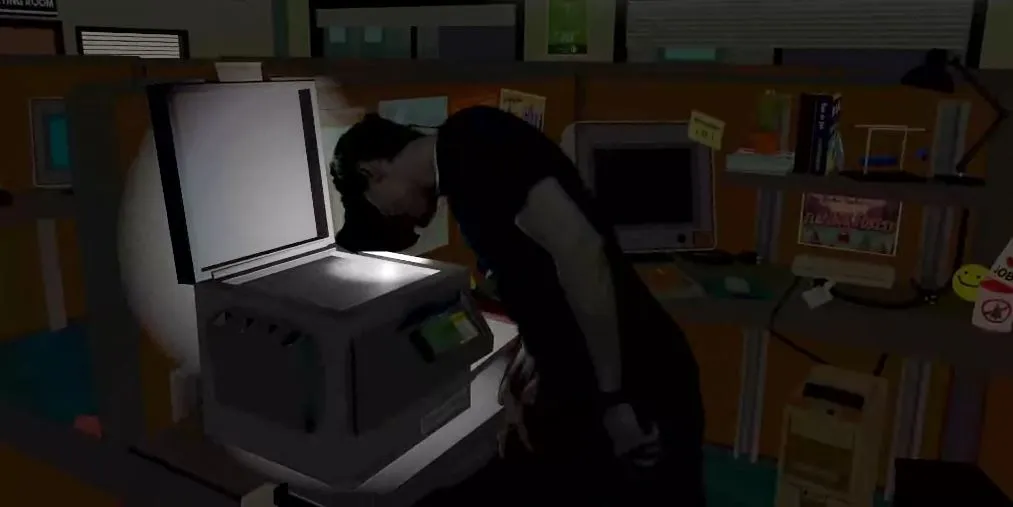

Regarding the dynamic lighting feature, the team writes in its blog post that, “Using the same lights you’re using in your game to light mixed reality footage allows for a seamless blend of both realities. From muzzle flashes to exploring dark caves to casting magic spells, we’re excited to see what interesting effects can be accomplished.” You can see it in action in the GIF above.

Perhaps most impressive is the new automatic green screen bounding effect, which is especially useful for users with smaller studio spaces, for example if you can’t cover the ceiling or have uncovered patches in your room.

[gfycat data_id=”CoordinatedPerkyHalibut”]

And finally, there is actually decent transparency support.

“This is the first time transparencies have been able to be drawn and sorted at proper depth in mixed reality,” Owlchemy writes in its blog post. “It finally just works how it should. The limitations of prior implementations of mixed reality have prevented transparencies from functioning but now that we’re in-engine, we can tackle this issue (anyone who has tried this before will tell you it is extremely complex to pull this off, let alone real-time). With proper alpha blending, we can also achieve amazing effects with particles such as having someone stand behind a wall of smoke, in the rain, snow, or any complicated transparent scene.”
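To make the idea of transparencies being “drawn and sorted at proper depth” more concrete, here is a minimal Python sketch of back-to-front alpha blending. It is only an illustration of the general technique, not Owlchemy’s implementation: the `Layer` tuple, the `composite` function, and the sample depths and colors are all hypothetical.

```python
from typing import List, Tuple

# Hypothetical layer format: (depth, (r, g, b), alpha).
# Depth is distance from the camera; larger means farther away.
Layer = Tuple[float, Tuple[float, float, float], float]


def composite(layers: List[Layer], background=(0.0, 0.0, 0.0)):
    """Blend layers from farthest to nearest with the standard 'over' operator."""
    color = background
    for _, rgb, alpha in sorted(layers, key=lambda layer: layer[0], reverse=True):
        # Each nearer layer covers what is behind it in proportion to its alpha.
        color = tuple(alpha * c + (1.0 - alpha) * b for c, b in zip(rgb, color))
    return color


# Opaque footage of the player, 2 units from the camera (illustrative values).
player = (2.0, (1.0, 0.0, 0.0), 1.0)
# Half-transparent grey smoke, 1 unit from the camera, so in front of the player.
smoke_in_front = (1.0, (0.5, 0.5, 0.5), 0.5)
# The same smoke placed 3 units away, so behind the player.
smoke_behind = (3.0, (0.5, 0.5, 0.5), 0.5)

print(composite([smoke_in_front, player]))  # smoke tints the player
print(composite([smoke_behind, player]))    # player fully hides the smoke
```

The point of the sketch is that the result depends on where the player sits in depth relative to the transparent layer, which is why footage of a real person has to be placed at the right depth in the scene for smoke, rain, or snow to look correct.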

[gfycat data_id=”HardtofindUnequaledKitten”]

For more details about the process and new features, head over to the Owlchemy Labs website.