In a surprise “announcement” at a SIGGRAPH Pixar afterparty, Google software engineer Mach Kobayashi presented a way to render graphics into a virtual reality format using Pixar’s proprietary in-house software. With a few segments of code and an understanding of how omnidirectional stereo imagery and ray tracing work, those developing on the RenderMan platform can begin exporting animations for virtual reality.

The innovative approach surfaced during a popular segment dubbed “Stupid RenderMan Tricks” on a night of presentations. For the past few years the folks at Pixar have been organizing User Group Meetings during SIGGRAPH where RenderMan professionals and enthusiasts meet to learn about the latest developments from the RenderMan community. This was one of those nights.

This year, following a talk about how to model 3D objects in RenderMan, Mach Kobayashi got on stage and introduced himself as someone who works at Google. Kobayashi also let the audience know that he previously worked at Pixar as an Effects Animator on a few popular movies, making him a perfect fit for a presentation related to VR, Pixar and Google.

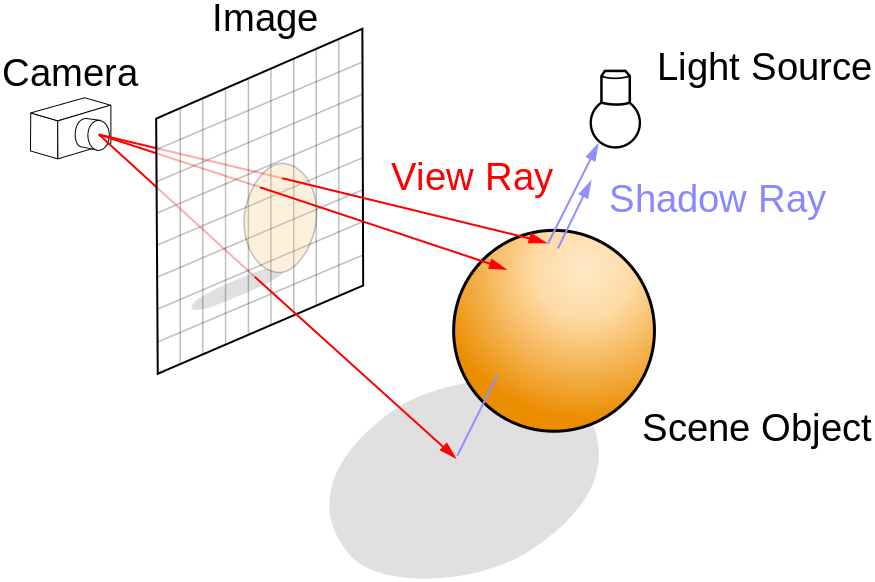

To understand what the trick does, one must get familiar with ray tracing, a technique for generating an image by tracing the path of light through pixels in an image plane and simulating the effects of its encounters with virtual objects. This type of rendering technique is capable of producing a very high degree of visual realism.
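To make the idea concrete, here is a minimal Python sketch of ray tracing, not RenderMan code. It assumes a pinhole camera at the origin looking down the negative z axis and a scene containing a single sphere. The function names, field of view, and image size are all illustrative choices rather than anything from the presentation.

```python
import math

def primary_ray(px, py, width, height, fov_deg=60.0):
    """Unit direction of the ray from a pinhole camera at the origin
    through the center of pixel (px, py) on the image plane."""
    aspect = width / height
    scale = math.tan(math.radians(fov_deg) / 2.0)
    x = (2.0 * (px + 0.5) / width - 1.0) * aspect * scale
    y = (1.0 - 2.0 * (py + 0.5) / height) * scale
    length = math.sqrt(x * x + y * y + 1.0)
    return (x / length, y / length, -1.0 / length)

def hit_sphere(origin, direction, center, radius):
    """Nearest positive distance along a unit-length ray to a sphere, or None."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * c  # quadratic with a == 1 (direction is normalized)
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in ((-b - root) / 2.0, (-b + root) / 2.0):
        if t > 1e-9:
            return t
    return None

def render(width=16, height=8):
    """Trace one ray per pixel; '#' marks a hit on the sphere, '.' a miss."""
    rows = []
    for py in range(height):
        row = ""
        for px in range(width):
            d = primary_ray(px, py, width, height)
            hit = hit_sphere((0.0, 0.0, 0.0), d, (0.0, 0.0, -3.0), 1.0)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows

if __name__ == "__main__":
    print("\n".join(render()))
```

A production renderer would go on to shade each hit point and spawn secondary rays for reflection and refraction. The sketch stops at the first intersection to keep the core loop visible.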

The goal for this VR-enabled rendering trick was to produce a panoramic 360-degree view in stereo. Traditionally, a standard 2D render starts with a single camera and then the developer will ray trace everything. For stereo, you pretty much do the same thing, but with two cameras instead of one. This gives a 3D effect. However, it is not quite what the VR community is looking for.

To get closer to something more immersive, omnidirectional rendering must occur. This is where a person takes the “camera” and puts it in the middle of a scene. Then, the developer ray traces in a sphere all the way around the environment, making the viewer feel like they are inside. This method is still flat, though, so it is not stereo and has no depth. To make it stereo, one must position one camera and ray trace it in a circle from two points per section. See the diagram below, shown by Mach Kobayashi during his presentation.
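The "two points per section" idea can be sketched in a few lines of Python. This is an illustration of the commonly used omnidirectional stereo model, not Kobayashi's actual code. It assumes an equirectangular image, a y-up coordinate system with the viewer looking down negative z, and an interpupillary distance of 0.064 scene units. The function name `ods_ray` and its parameters are hypothetical.

```python
import math

def ods_ray(u, v, eye, ipd=0.064):
    """Return (origin, direction) of the ray for normalized image
    coordinates u, v in [0, 1] of an equirectangular panorama.

    Each eye's ray starts on a horizontal circle of radius ipd / 2 and
    leaves it tangentially, so the two eyes see slightly different
    views in every direction around the viewer.
    """
    if eye not in ("left", "right"):
        raise ValueError("eye must be 'left' or 'right'")
    theta = (u - 0.5) * 2.0 * math.pi  # longitude, -pi .. pi
    phi = (0.5 - v) * math.pi          # latitude, +pi/2 (top) .. -pi/2
    direction = (
        math.sin(theta) * math.cos(phi),
        math.sin(phi),
        -math.cos(theta) * math.cos(phi),
    )
    # Offset along the "right" vector for this longitude, negated for the left eye.
    half = (-0.5 if eye == "left" else 0.5) * ipd
    origin = (half * math.cos(theta), 0.0, half * math.sin(theta))
    return origin, direction

if __name__ == "__main__":
    for eye in ("left", "right"):
        print(eye, ods_ray(0.5, 0.5, eye))
```

Tracing one such ray per pixel for each eye yields two panoramas, one per eye, which a headset can then display as a stereo pair.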

To make this concept work in RenderMan, a developer takes the scene and places the camera outside of it, rather than inside. Then, the developer puts a square plane with a shader attached in front of the scene. From there, the shader on the plane does the ray tracing.
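The article does not give the shader itself, so the following Python sketch only illustrates what a shader on such a plane might compute. It assumes surface coordinates `s, t` in [0, 1] and an over-under layout, with the left eye in the top half and the right eye in the bottom half. It also assumes the same circular two-eye ray model as omnidirectional stereo. The layout, the function name `plane_shader_ray`, and the 0.064 eye separation are all assumptions.

```python
import math

def plane_shader_ray(s, t, ipd=0.064):
    """Hypothetical per-point logic for the shader on the square plane.

    Maps a point (s, t) on the plane to the eye it belongs to and the
    ray that eye should trace into the scene. Assumed layout: top half
    (t < 0.5) is the left-eye panorama, bottom half the right-eye one.
    """
    if t < 0.5:
        eye, sign, v = "left", -1.0, t * 2.0
    else:
        eye, sign, v = "right", 1.0, (t - 0.5) * 2.0
    theta = (s - 0.5) * 2.0 * math.pi  # longitude across the plane's width
    phi = (0.5 - v) * math.pi          # latitude within this eye's half
    direction = (
        math.sin(theta) * math.cos(phi),
        math.sin(phi),
        -math.cos(theta) * math.cos(phi),
    )
    half = sign * ipd / 2.0
    origin = (half * math.cos(theta), 0.0, half * math.sin(theta))
    return eye, origin, direction

if __name__ == "__main__":
    print(plane_shader_ray(0.5, 0.25))  # middle of the left-eye half
    print(plane_shader_ray(0.5, 0.75))  # middle of the right-eye half
```

Because the camera only ever sees the plane, the final image is whatever the plane's shader returns for each point. That is how an ordinary render can come out as a stereo panorama.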

This may not seem like much to a non-developer, but this is a brilliant way to get Pixar animators inspired enough to create virtual reality content. Kobayashi’s presentation, despite being only about 6 minutes long, was the highlight of the night (in my opinion). His charisma and passion for the trick were felt through the entire talk, which was capped off nicely when Kobayashi threw a Google Cardboard into the audience and yelled “Have fun!”

What’s nice is that this technique allows for higher levels of realism than other rendering methods. Still, it must be noted that this comes at a greater computational cost. The technique is also poorly suited for real-time applications like video games, where speed is critical. Ray tracing is best suited for applications where scenes can be rendered slowly ahead of time, such as still images and film and television visual effects. Given that time, it can simulate a wide variety of optical effects, such as reflection and refraction, scattering, and dispersion phenomena (such as chromatic aberration), adding a greater sense of presence.

This trick gives those already using the RenderMan platform the ability to experiment with VR. New users can also download a free non-commercial software package online. In addition, Mach Kobayashi recently published documentation of this trick on the RenderMan community forum, linking to a Google Drive, so that anyone interested in VR can learn from it. The spark has been lit. Further innovation will surely come next.