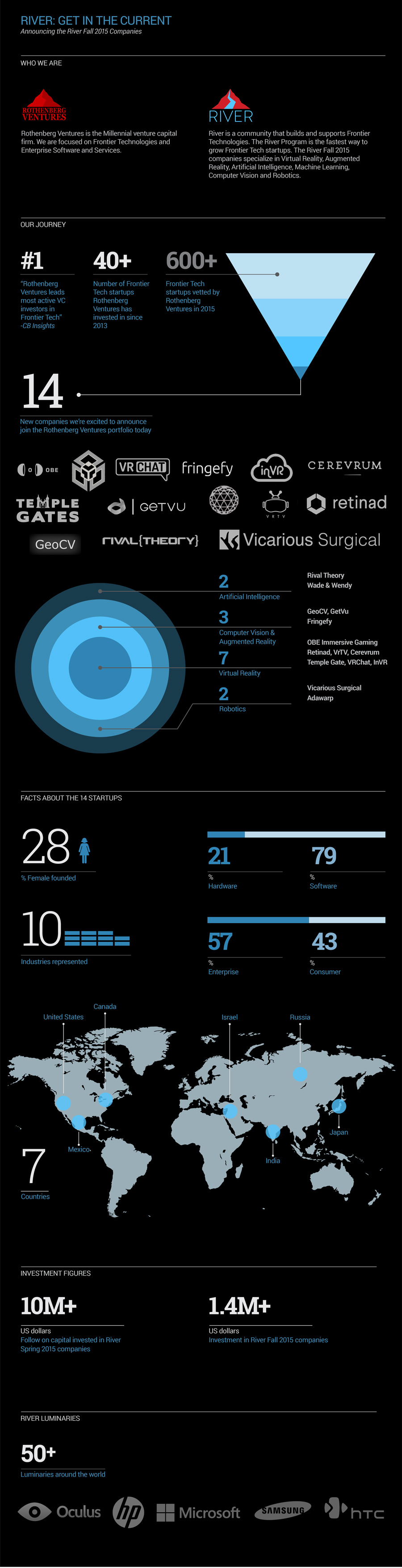

Today Rothenberg Ventures announced that River, their program originally founded exclusively for VR startups, will be opening its doors to “frontier technologies,” starting with this fall’s class. The class of fourteen consists of companies from the worlds of augmented reality, artificial intelligence, robotics, machine learning, computer vision, and of course VR. This marks the first of a number of big changes coming to the program.

Starting with next year’s spring class the firm will be significantly increasing River’s scope, promising to commit $10 million to the program over the next year and raising their investments to a minimum of $200k per company in the program. This will coincide with virtual reality’s (hopeful) consumer debut and (hopefully) the beginning of its rise towards being a mainstream technology.

See More: An in-depth look at River’s inaugural class

Right now virtual reality is on the precipice of becoming a reality for consumers, but we wouldn’t be where we are if it weren’t for the convergence of many different technologies. The rise of smartphones led to rapid advancements in mobile processing power and display quality, while also driving down the cost and improving the accuracy of accelerometers, gyroscopes and other sensors. At the same time, rising interest in video games and big-budget CGI summer blockbusters, along with ticks on Moore’s clock, led to major improvements in both the quality and capabilities of computer graphics. These technologies converged to enable the virtual reality future of our present.

This same kind of convergence is something that Mike Rothenberg, the firm’s CEO, sees with these frontier technologies. “A lot of these companies have technologies that really work well together,” he said in a phone interview, “there is a lot of overlap.” And it’s true: many of the projects already represent a convergence of two or more of the “frontier technology” categories, with a heavy emphasis still on VR.

We spent a lot of time with the previous River class, and its members all fondly remember the program’s collaborative atmosphere, one the Rothenberg team is looking to continue fostering with this class. Spending time with each of the companies yesterday at the River offices, I witnessed two companies from different fields, VR and computer vision, collaborating on the first day. It will be especially interesting to follow what projects arise from these companies all sharing the same space.

Speaking of those companies…

Meet the 14 companies in the fall 2015 River class

Adawarp

https://www.youtube.com/watch?t=11&v=IAglu2G2aUE

Categories: Robotics, VR

Nope, that’s not Ted, that’s Adawarp’s “tele-bear” – a VR-connected telepresence robot in the form of a teddy bear. The tele-bear is the product of the combined efforts of Adawarp’s co-founders Tatsuki Adaniya and Fantom Shotaro. Tele-bear looks to bring a friendly edge to telepresence, masking the robot in an approachable and cute form. Using a pair of cameras with fisheye lenses embedded in the eyes, a user can embody the robotic bear, seeing from its perspective. As you move your head around the bear moves with you, and there even seem to be ways to control its other limbs. Adawarp hopes its solution will help to humanize telecommunication.

Cerevrum

https://www.youtube.com/watch?v=T9qBbfDJDL0

Categories: VR, Machine Learning

Cerevrum is looking to combine virtual reality and machine learning to help perfect brain training and solve the “transfer problem.” The transfer problem refers to a common issue with brain training games: the skills learned in the game don’t typically transfer in a meaningful way to the real world. The team at Cerevrum believes that combining a fully immersive environment with player-specific gameplay, which adapts the difficulty to each user’s unique cognitive skills (tuned with the company’s machine learning technology), will allow them to “fix learning” and solve the transfer problem.

The company is the brainchild (pun most definitely intended) of a group of neuroscience PhDs and geeks. Cerevrum is planning to bring its platform to all of the major headsets, and will launch first on the Gear VR “hopefully by the end of the year.”
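To make the idea of difficulty that adapts to each player more concrete, here is a minimal sketch. It is not Cerevrum’s method, which has not been published; in place of machine learning it uses a simple weighted staircase rule, and the class name, the 75% target success rate and the step size are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveDifficulty:
    """Hypothetical per-player difficulty tracker (illustrative only)."""

    level: float = 0.5            # current difficulty, clamped to [0, 1]
    target_success: float = 0.75  # assumed success rate we want the player to hover around
    step: float = 0.05            # assumed base adjustment per trial

    def update(self, succeeded: bool) -> float:
        # Weighted staircase: a success nudges difficulty up, a failure pulls it down.
        # The two step sizes are weighted so that the level stops drifting once the
        # player's success rate equals target_success.
        if succeeded:
            self.level += self.step * (1 - self.target_success)
        else:
            self.level -= self.step * self.target_success
        self.level = min(1.0, max(0.0, self.level))
        return self.level


tracker = AdaptiveDifficulty()
for outcome in (True, True, False, True):
    tracker.update(outcome)
print(round(tracker.level, 4))
```

A real system would presumably track several cognitive skills at once and learn the adjustment from data, but the feedback loop of measuring performance and then adjusting the challenge would look broadly similar.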

Fringefy

Categories: AR, Computer Vision, Machine Learning

Fringefy is focused on using a combination of computer vision and machine learning to change the way we search for things and to lay the groundwork for some amazingly useful augmented reality technology. Using the company’s proprietary landmark and urban recognition technology, Fringefy is able to pull data about a location from an image alone. The company’s founders, Amir Adamov, Assif Ziv, and Stav Yagav, envision this technology as a core piece of augmented reality’s future. Imagine being able to don your pair of AR glasses, look at a restaurant and instantly see all of its Yelp reviews, or look at a landmark and learn all about its history – that is what Fringefy is looking to enable in the long term.

“We see ourselves as the missing link that will finally enable casual AR experiences in the urban outdoors,” says Ziv.

Currently the company has released its technology in a smartphone app called “Alice Who?” which is available on iOS and Android.

https://www.youtube.com/watch?v=sDj7NlgktGM

GeoCV

Category: Computer Vision

GeoCV is a computer vision company focused on 3D scanning technology for mobile, utilizing depth cameras like Google’s Project Tango and Intel’s RealSense. The team consists of a group of computer vision veterans with ten years of industry experience who are looking to create photorealistic, accurate captures in near real-time using consumer devices. GeoCV was one of the two companies I saw already collaborating with each other at River. Working with VRChat, GeoCV imported the living room environment you see below into a social VR environment, which I was able to walk around. In VR the scans are really convincing, aside from a few errors here and there. The couch in particular is really impressive looking. If you are using a MozVR or Chromium browser you can take a look at the scan in VR yourself.

GetVu

Category: AR

Utilizing AR headsets like ODG and Microsoft HoloLens, GetVu is looking to change the way workers interact with their surroundings in a warehouse setting. For example, in an Amazon shipping facility a worker may be tasked with grabbing certain items from certain bins off a checklist. As they pick up each item they have to scan it in and then place it in their bin. GetVu wants to use augmented reality to streamline this process and others by displaying all the relevant information in a heads-up display as well as scanning the objects, thus making the whole process hands-free. Products like this are among the earliest viable use cases for AR, and Microsoft is currently focusing HoloLens exclusively on enterprise technologies such as this for the first year.

inVR

Category: VR

Virtual reality needs content, that much is a fact, but making that content is far from easy. For starters there is a barrier to entry for those who don’t know how to code (and aren’t looking to do immersive video) and for many that’s where it ends. But VR has the power to truly empower artists and other creatives who don’t fall under the game developer umbrella to create amazing content, and inVR gives them the ability to do that without having to mess around with any complex code. inVR enables artists to easily drop in creations from programs like Maya into inVR’s platform and instantly create versions viewable across all the different VR headsets.

Currently inVR has a phone viewer application where users can view creations that have been uploaded to inVR’s platform. The app is available now on iOS and Android.

OBE Immersive Gaming (Machina)

https://www.youtube.com/watch?v=vUtKdG5Y8dY

Category: VR

When you think of virtual reality peripherals, “fashionable” is typically the last word that comes to mind. OBE Immersive Gaming is looking to change that. Using a hooded jacket designed for everyday wear (it looks like a nice North Face jacket), OBE combines haptic feedback, motion capture, biometrics, and “haptic game controls” into one peripheral. The system works using the company’s own hardware, dubbed KER, which is the first open source hardware platform designed for the fashion industry. OBE believes that its focus on making the product fashionable as well as functional will give it a unique advantage in reaching the image-conscious consumer market.

OBE will be demonstrating this tech at TechCrunch Disrupt in San Francisco next week.

Temple Gate Games

Category: VR

Temple Gate is the Oculus VR Jam award-winning studio behind Bazaar, a gorgeously designed, procedurally generated puzzle exploration game for VR. The game uses an aesthetic that removes black completely from the color palette, giving way to a vividly surreal environment that absolutely pops in VR. As far as stylized games go, Bazaar is among the best looking we have played in VR.

Other than being beautiful, Bazaar also manages to bring some pretty interesting ideas to the table, including a monoscopic view in which one eye is blacked out to look through a telescope that reveals secrets on the map. The game also makes use of an environmentally integrated UI which displays inventory items as constellations in the sky. The sky can also act as a way to navigate through the map.

Temple Gate Games utilizes a custom built engine which “gives [their] game a unique flavor,” as well as giving them tight control of its development. The studio is comprised of a team of industry veterans including Tom Semple, the creator of Plants vs. Zombies, Theresa Duringer, creator of Cannon Brawl, Jeff Gates of Spore, Patrick Benjamin of SimCity, and Ben Rosaschi, from Zynga. Currently they are aiming to have Bazaar be a launch title for all major headset platforms.

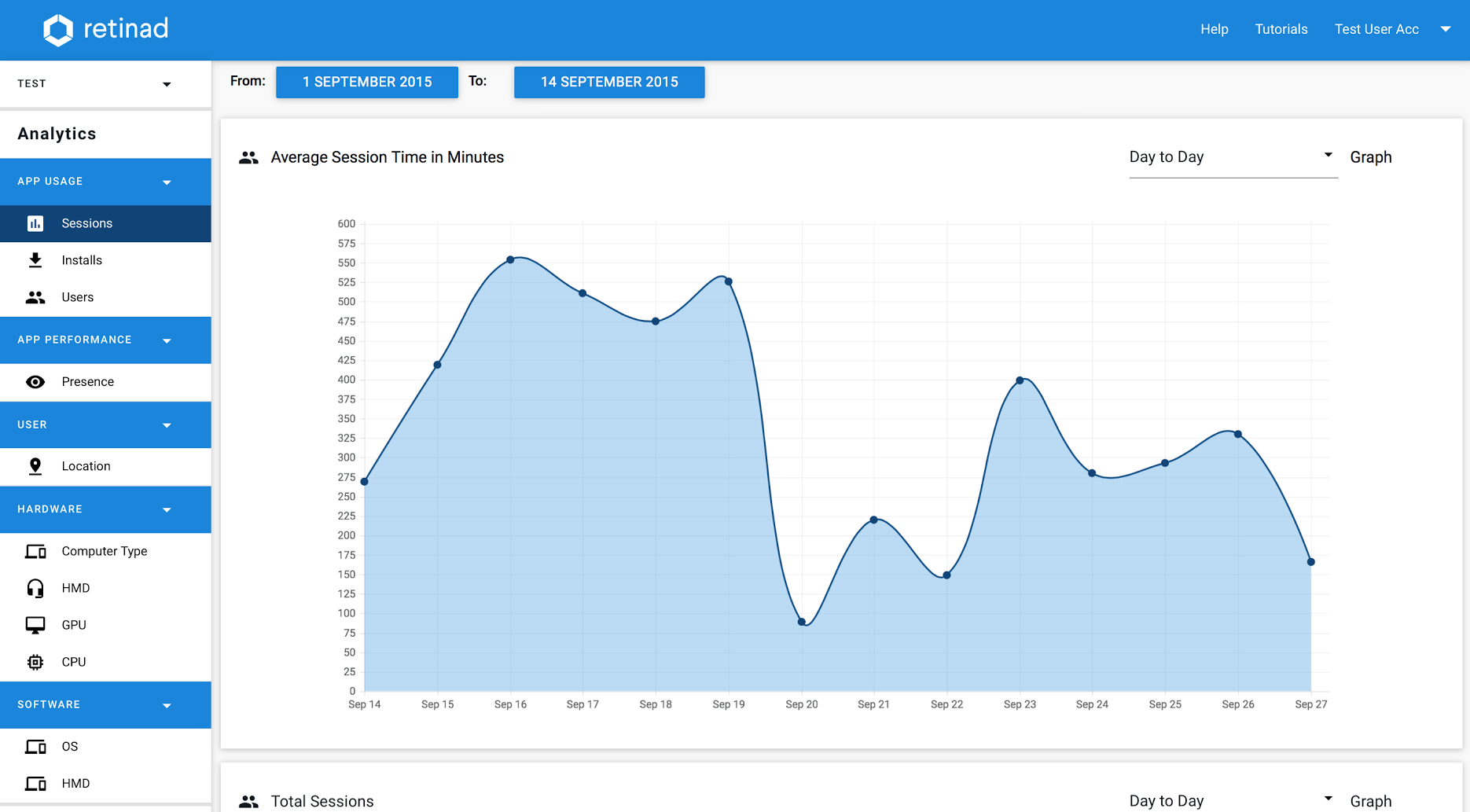

Retinad

Category: VR

Retinad is an advertising and analytics platform built for VR. While that may make a number of you cringe and bring up images of the time the Futurama gang entered the VR internet, I encourage you to put down the pitchforks for a moment and listen. VR ads are an inevitability. They are one of the easiest and most direct paths to monetization for developers, and bringing money into this young industry is vital. Retinad’s approach appears to be focused on the developer first, allowing them to collect vital data about user experiences within the application. Data like that will prove to be instrumental as we continue to uncover the as-yet-unwritten “rules” of VR content.

On the actual ad side of things, Retinad wants ads to be as unobtrusive as possible, opting to go with immersive ads that can be set to load at a number of different times, like before the experience or during a loading screen. The advertisers will have access to the same set of analytics, giving them even more finely tuned data about the customer’s experience.

Rival Theory

Categories: AI, VR

Rival Theory began as one of the most widely used artificial intelligence engines in the world, with over 40,000 projects utilizing it, but now the company is looking to take that engine and put it to new uses in VR, namely enabling advanced interaction with characters.

“Entertainment in VR sits at the intersection of movies and games,” says Bill Klein, Rival Theory’s President and Co-Founder. “In both movies and games, characters are the heart of the experience, driving story and providing meaning and connection with the audience.” Utilizing their technology, Rival Theory is creating narrative experiences in Virtual Reality that focus on the interaction between human participants and AI-based characters.

“We’re attempting to realize a massive goal in human imagination – the ability to meet, talk to, and form relationships with the characters from our stories and our dreams,” says Klein, “we are no longer just passive viewers of the narrative – we can be fully engaged participants.” Klein emphasized that you will be able to converse with the AI using conversational language, not simply a set of commands.

That level of simulated communication would be a game changer for VR, bringing characters to life in new ways. Rival Theory wants to utilize this technology to change the way we tell stories in VR, bringing a unique level of user controlled interactivity to a narrative. They are looking to release their pilot episode utilizing the technology in Q1 2016, likely with the launch of the Rift.

Vicarious Surgical

Categories: VR, Robotics

Vicarious Surgical is currently operating in somewhat of a stealth mode. The company is working on a solution that combines virtual reality with surgical products.

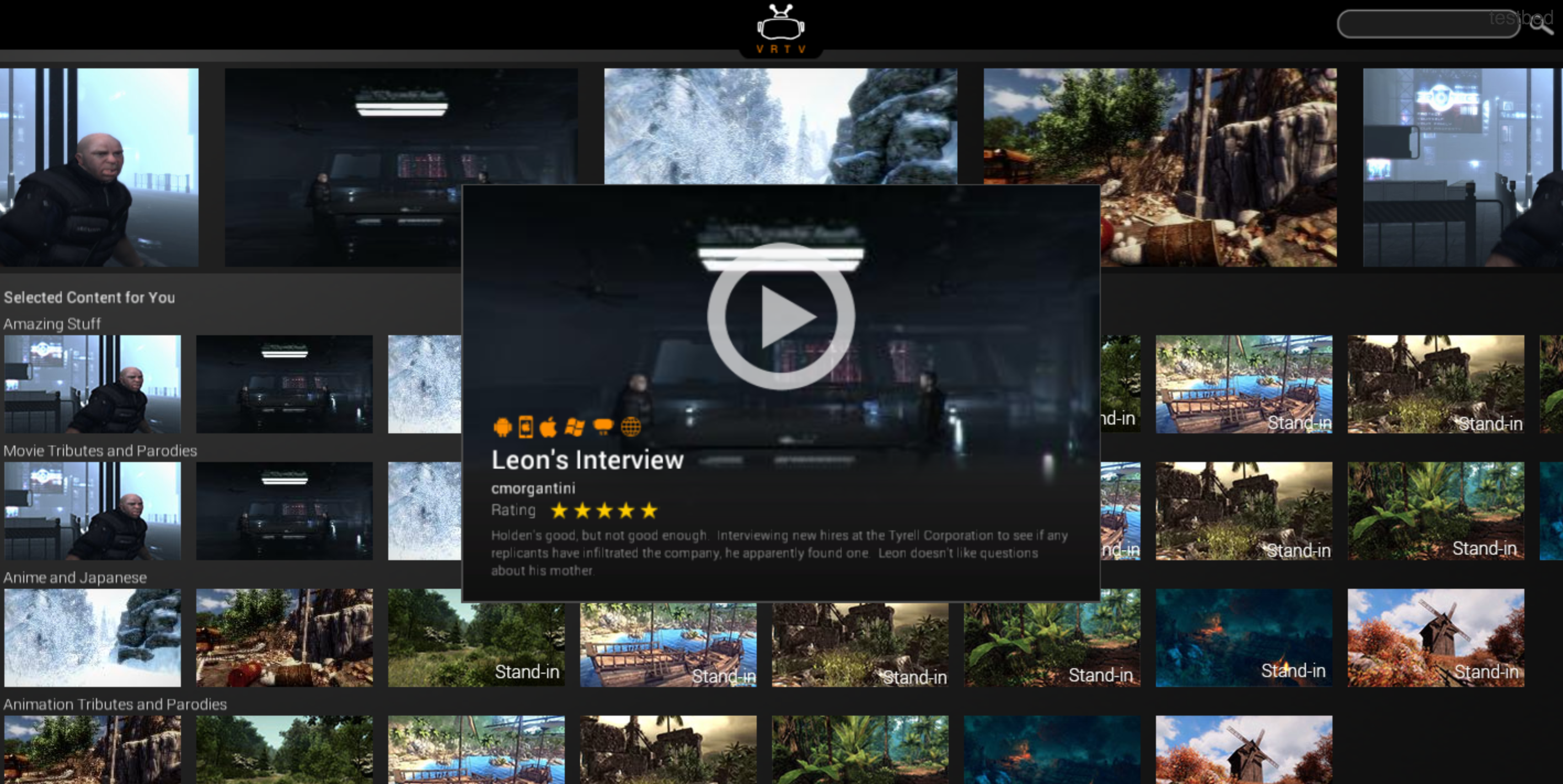

VRTV

Category: VR

On the surface VRTV is a web portal, as well as a mobile and desktop VR app, that allows users to view, create and publish VR experiences. But looking further, the company is tackling a rather lofty goal: full 3D interactive VR experiences that are streamed, not downloaded.

The company is using a proprietary streaming engine that it claims can stream “very large (practically infinite) data to reach all devices over a variety of bandwidths with a consistent Level of Quality (LOQ) with no pauses and no popping.” This is done by predictively loading the experience as the player moves through it, allowing an experience to launch almost instantly. In an age where everyone expects things instantly, technology like VRTV could prove to be vital for the mass success of the industry.
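For readers curious what predictive loading can look like in practice, here is a rough sketch. VRTV’s engine is proprietary, so this is not its algorithm; it assumes a world divided into square chunks and a player whose movement can be extrapolated in a straight line, and the function name and every parameter are hypothetical.

```python
import math


def chunks_to_prefetch(position, velocity, lookahead_s=2.0, chunk_size=10.0, radius=1):
    """Return the set of (x, y) chunk coordinates to load ahead of the player.

    Illustrative only: assumes a flat 2D grid of square chunks and linear motion.
    """
    # Predict where the player will be after `lookahead_s` seconds.
    predicted_x = position[0] + velocity[0] * lookahead_s
    predicted_y = position[1] + velocity[1] * lookahead_s

    # Find the chunk that contains the predicted position.
    center_x = math.floor(predicted_x / chunk_size)
    center_y = math.floor(predicted_y / chunk_size)

    # Request that chunk plus its neighbors within `radius` chunks.
    return {
        (center_x + dx, center_y + dy)
        for dx in range(-radius, radius + 1)
        for dy in range(-radius, radius + 1)
    }


# A player at the origin moving along the x axis at 5 units per second.
needed = chunks_to_prefetch(position=(0.0, 0.0), velocity=(5.0, 0.0))
print(sorted(needed))
```

A streaming client would fetch whichever of these chunks it does not already hold, so that the data arrives before the player does.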

VRChat

Category: VR

VRChat has long been a darling of the VR enthusiast community. As an open VR social platform, VRChat allows users to create their own worlds and avatars using Unity. As a rule, VRChat does not limit what the community is able to create (for better or worse; massive non-optimized avatars can ruin everyone’s time). The result is a bustling creative community that is actively experimenting with everything from VR talk shows to kart racing in the social sphere. VRChat is also a natural destination for realtors, who can easily import 3D scans, like the ones from GeoCV, and walk clients through them in a social VR space.

VRChat is currently available as an alpha build.

Wade & Wendy

Wade & Wendy is an AI-powered platform for the recruiting space. Wade is an AI career assistant, helping people navigate their careers and discreetly learn about new job opportunities. Wendy is an AI hiring assistant for companies, alleviating some of the recruiting pain points for hiring managers and helping them intelligently identify talent for their open roles from a community-sourced applicant pool.