So, unless you’ve been living under an out-of-focus rock, you’re probably aware of Lytro — the company that makes refocusing cameras. But you may not have noticed their latest move: they raised $50 million to work on virtual reality.

Wait, what? A manufacturer of refocusable cameras is going to go into VR, a medium that’s always in focus? How, why, WTF? We’ll try to answer all those questions here — or at least speculate wildly. Buckle up tight, here we go.

(Full disclosure: I briefly consulted with Lytro about using their technology in the professional cinema market, circa 2009, and a good friend still works there. But I have no stake in the company, received nothing from Lytro to write this article, and never expect to consult for them again. And I have no inside knowledge at all — everything I’m writing about Lytro here is either gleaned from publicly available docs or is wild, crazy, inaccurate speculation. You’ve been warned.)

4D > 3D, or: what the heck is a light field, anyway?

You may have heard Lytro’s technology referred to as ‘lightfield.’ But what does that mean? Well, it most definitely doesn’t mean “3D,” though you can do some optical tricks and infer 3D from a light field. But 3D is lame, by comparison. No, a ‘lightfield’ really just means all the light passing through a given area:

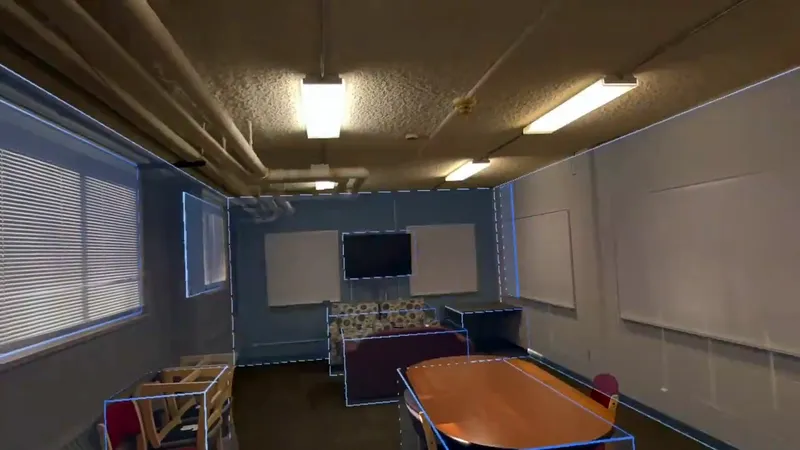

Think of a window: every point on that window has light hitting it from every direction. If you could capture the light from each direction that strikes the window at every point, and keep those rays separate from each other (or at least, don’t downsample too aggressively), you’d have a lightfield representation of the light striking that window. Reproduce that light, and you’d have … a hologram. Really.

Now, Lytro’s cameras take a hologram behind a lens, where light doesn’t really behave the way we expect. A hologram inside a camera looks, well, kind of weird:

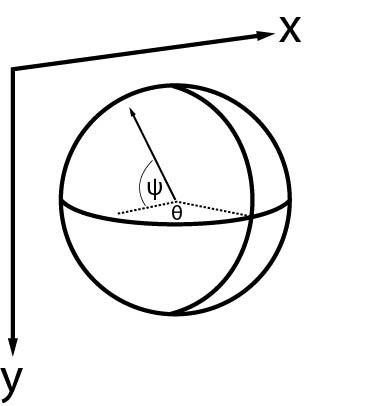

Each of those ‘cells’ is the image from underneath one of thousands of microlenses on the camera’s sensor. The individual pixels within the little cells show the light intensity and color coming from a particular direction that struck that microlens. So each cell records its location (x,y) and the intensity of light coming from each direction (let’s say, what the heck — θ, ψ).

So that’s your four-dimensional image: two dimensions of location, and two dimensions of direction. Lytro and other lightfield cameras (no really, there have been a few) produce a traditional 2D image by ‘slicing’ the 4D lightfield. If you can just imagine a 2D surface curled up inside a 4D manifold, you can understand how Lytro cameras work. Also, you’re probably a genius or insane or both.
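If the 4D idea feels slippery, here is a toy sketch in Python. It assumes an idealized sensor where every microlens covers an exact square block of pixels (real cameras need calibration to get anywhere near that). The names `decode_lenslet` and `sub_aperture` are made up for illustration and have nothing to do with Lytro's actual software. The direction indices are called `u` and `v` here, standing in for θ and ψ.

```python
def decode_lenslet(raw, cell):
    """Split a raw sensor image (a list of pixel rows) into a 4D light field.

    Assumption: each microlens covers exactly cell x cell pixels.
    Returns lf[y][x][v][u], where (x, y) is the microlens position and
    (u, v) is the pixel under that microlens, i.e. the ray direction.
    """
    ny, nx = len(raw) // cell, len(raw[0]) // cell
    return [[[[raw[y * cell + v][x * cell + u] for u in range(cell)]
              for v in range(cell)]
             for x in range(nx)]
            for y in range(ny)]


def sub_aperture(lf, u, v):
    """One simple 2D slice: fix the direction (u, v), keep every position."""
    return [[cell[v][u] for cell in row] for row in lf]
```

Fixing the direction and keeping every position gives you the scene as seen through one small patch of the main lens. Other slices mix position and direction, which is where refocusing comes from.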

Okay, but what does this have to do with VR?

Readers who work in computer graphics may have already jumped to the conclusion, but bear with me here. Lytro didn’t invent light field cameras, and they weren’t even the first lightfield camera company — they were almost 100 years too late: the first light field patent dates back to 1908. (No, that’s not a typo.)

So how did they raise (gulp) $140 million? (Also not a typo.) Well, the first $90 million traces back to Dr. Ren Ng’s dissertation, and it’s all about speed. Now, I’ll be honest, I can’t personally follow the math in it, but it won some fancy awards, so it must be correct. The dissertation takes a hideously slow calculation and makes it considerably faster. That calculation is the 2D slicing we talked about earlier. This allows Lytro cameras to refocus on-camera, using a mobile chip, so the user can experience the refocusing live. Pretty cool, and important for a consumer lightfield camera.
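For a feel of what the slow version of that calculation looks like, here is a minimal shift-and-add refocus in plain Python. To be clear, this is the brute-force textbook approach, not the faster method from the dissertation, and it is not Lytro's code. It assumes a light field stored as nested lists `lf[y][x][v][u]` (position, then direction), and the `shift` parameter and its sign convention are choices made for this sketch.

```python
def refocus(lf, shift):
    """Naive shift-and-add refocus of a 4D light field lf[y][x][v][u].

    Each directional view is shifted in proportion to its offset from
    the central direction, then all views are averaged. shift = 0
    reproduces the focal plane the light field was captured at.
    Samples that fall outside the image are clamped to the border.
    """
    ny, nx = len(lf), len(lf[0])
    nv, nu = len(lf[0][0]), len(lf[0][0][0])
    cu, cv = (nu - 1) / 2, (nv - 1) / 2  # central direction
    out = [[0.0] * nx for _ in range(ny)]
    for y in range(ny):
        for x in range(nx):
            total = 0.0
            for v in range(nv):
                for u in range(nu):
                    sx = min(max(x + round(shift * (u - cu)), 0), nx - 1)
                    sy = min(max(y + round(shift * (v - cv)), 0), ny - 1)
                    total += lf[sy][sx][v][u]
            out[y][x] = total / (nu * nv)
    return out
```

Four nested loops for every output image: that is a lot of work to repeat every time the user drags a focus slider, which is why a faster route matters on a mobile chip.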

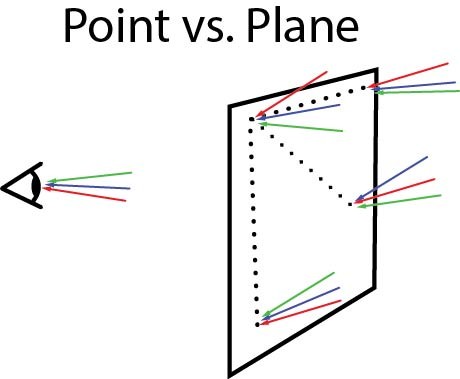

But, VR. Right. Well, there are different kinds of 2D slices you can take out of that 4D manifold. A ‘flat’ one corresponds to a lightfield that has moved in space: to first order, ‘focusing’ is just moving the image plane back and forth. Moving it closer to the lens focuses further away, and moving it further from the lens focuses closer. This is how macro extension tubes work: by pushing the lens further away from the camera, you can focus closer than the lens would ordinarily allow (gotta throw out some love for the camera nerds here, too).

But no one in VR cares about changing focus; we need perspective. Specifically, a two-eye perspective that relates to the location of the viewer’s eyes in virtual space. But of course, we can’t know ahead of time where the viewer is going to look, so we need to be able to calculate the viewer’s perspective on the fly. In other words, you want to know what a viewer would see in all directions (θ, ψ) if they were at a particular position (x,y). That’s just another 2D slice of a different shape. (Another way to understand it: imagine tracing the light rays backwards or forwards as though they hadn’t hit a sensor, and you can calculate which light rays would strike a virtual image plane, or a virtual viewer’s eye.)
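The ray-tracing picture can be sketched in a few lines of Python. This is an illustration under stated assumptions, not anyone's production method: the light field is a hypothetical callable `light_field(x, y, a, b)` giving the light crossing a capture plane at z = 0, directions are written as slopes (`a` = dx/dz, `b` = dy/dz) rather than angles, and the rays travel through empty space. The names `ray_at_plane` and `eye_view` are invented for this example.

```python
def ray_at_plane(x, y, a, b, d):
    """Slide a ray a distance d along the z axis through empty space.

    The slopes (a, b) stay the same; only the position changes.
    """
    return (x + d * a, y + d * b, a, b)


def eye_view(light_field, eye, slopes):
    """What a pinhole 'eye' at (ex, ey, ez) sees, one value per direction.

    light_field: callable (x, y, a, b) -> value on the capture plane z = 0.
    slopes: the viewing directions to sample along each axis.
    """
    ex, ey, ez = eye
    view = []
    for b in slopes:
        row = []
        for a in slopes:
            # Trace the ray from the eye back to the capture plane.
            x0, y0, _, _ = ray_at_plane(ex, ey, a, b, -ez)
            row.append(light_field(x0, y0, a, b))
        view.append(row)
    return view
```

With the eye sitting on the capture plane (ez = 0), this reduces to "all directions at one position." Move the eye off the plane and the slice tilts through the 4D data. Call it twice with two eye positions and you have a stereo pair.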

The ability to do that calculation fast — like, headset refresh-rate fast — might be the key to Lytro’s VR play. (Assuming it’s not just ‘get acquired by Magic Leap.’)

Lytro Wins because Live Action VR is Hard

All you VR devs are probably yawning right now: you get on-the-fly perspective calculation for free in your favorite game engine. But think for a moment about the problem of shooting live action VR: you’re stuck with the perspective of the actual, physical lenses on the actual, physical cameras you’re using to shoot the scene. You can distort them later, infer brand-new pixels with machine vision techniques, stitch and infill-paint, etc., but at the end of the day you only have the perspective of your taking lenses, period. Everything else is fiction. You can make convincing fiction, but it takes work. And fiction often has seams, artifacts, crippling limitations or other ugliness. (How many dolly shots have you seen in live-action VR? Yeah, that’s what I thought.)

I’ll leave it as an exercise for the reader to understand that there is no physical camera rig that will give you seamless 360 degree, stereo 3D images. Can’t be done. And none of them will give you head-tracking. (If you think you have a general solution to live-action VR, email me. I’m dubious but can suspend a surprising amount of disbelief.) So all major approaches to live-action VR are crippled to varying degrees.

Except possibly Lytro’s.

Now, this is where the speculation gets totally woolly: imagine Lytro produces a ‘camera’ that’s essentially a holographic box; it captures all the light rays entering a defined space. Using creative 2D slices, you could synthesize any perspective from within that box (and perhaps some beyond the box, looking back through it, with limited field of view). It wouldn’t even matter if the user tipped his head sideways. Try that with any of today’s pre-rendered VR content — CG, live action, A-list or otherwise — and the stereo illusion will break immediately.
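Here is the head-tilt point as a small Python sketch. The function name `eye_positions`, the coordinate convention (x to the right, y up, roll about the viewing axis), and the 0.064 m eye separation (a ballpark adult figure used as a placeholder) are all assumptions for illustration.

```python
import math


def eye_positions(head, ipd=0.064, roll_deg=0.0):
    """Left and right eye positions for a head at `head` = (x, y, z).

    ipd is the eye separation in meters (placeholder value) and
    roll_deg tilts the head about the viewing (z) axis.
    """
    hx, hy, hz = head
    r = math.radians(roll_deg)
    dx = math.cos(r) * ipd / 2
    dy = math.sin(r) * ipd / 2
    return (hx - dx, hy - dy, hz), (hx + dx, hy + dy, hz)
```

With no roll, the eyes differ only in x, which is the single baseline that pre-rendered stereo bakes in. Roll the head 90 degrees and the offset is entirely vertical. A renderer that can synthesize a view from any position inside the box just takes the two new eye positions and carries on.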

And a holographic camera would be superior to ‘3D’ techniques, even some that are being touted as ‘lightfield’ but aren’t (ahem, NextVR — love your stuff, but wireframe != lightfield). Why? Imagine a character shining a flashlight in the viewer’s eyes: with a plenoptic, Lytro-style lightfield array, the viewer could slide to the side and avoid the beam of light, see beyond it. But with fake lightfield, the machine vision inference techniques will break badly.

And this is not a trivial issue; just ask any cinematographer worth her salt about the importance of specular reflections to our perception of reality. Doing away with highlights, kicks, the little glints of light — it’s like going back to CG animation before Pixar. What about footage that has water in it? Try telling a director she can’t film the ocean at sunset, because the reflections on the water don’t compute correctly in post, or it’ll require an army of animators in Bangalore. Forget it, and without those specular reflections you’re back in the Uncanny Valley. Some 3D-ish live action will no doubt look great anyway, but if Lytro can pull off a holographic camera it’ll eat every other camera array on the market for breakfast, because we’ll just be able to shoot what we see without apologies.

Unless … it Fails Completely.

So, the cold water to the face: none of this is going to be easy. First, there’s the issue of sensor size. The reason Lytro took holographic images inside of cameras is that’s where the sensors are. And sensors are teeny, tiny, expensive slabs of melted beach sand. Imagine the size of the CMOS chip you’d have to fabricate to build a box around a user’s head, just to give them limited lookaround, and you can see the problem with this approach. Now, that just means the naive approach won’t work, and they’re no doubt cooking up something interesting over there in Mountain View. For example, Jon Karafin, Lytro’s Head of Light Field Video, was recently spotted on Cinematographer’s Mailing List asking about some rather … unusual lenses:

“I’m hunting for some monster lenses to purchase and wanted to see if anyone either has, or knows someone, with any of the following lenses:

– Nikon Apo-Nikkor 1780 mm F/14

– Goerz Red Dot Artar 1780 mm F/16

– Rodenstock APO-Ronar 1800 mm F/16

Hopefully someone can help track these down? If you have something similar, I’m interested as well.”

For those of you who aren’t hip to ultra-large-format lenses, ‘monstrous’ is probably an understatement. Behold:

I have no idea if Karafin’s planning to use those beasts for lightfield VR video, regular old lightfield video, or crushing walnuts. But the point is, there’s a bunch of smart people over there, with another $50 million in the tank, and they’re working on something interesting.

Of course, every technological advance requires timing; that lightfield camera from 1908 didn’t exactly set the world on fire. Even the smartest minds can’t crack a problem if it’s too early, and the kind of resolution and processing power required by a holographic camera is daunting. Now, lightfields outside of cameras are very, very redundant: just slide your head right and left, and see how little your scenery changes. But you can’t just throw that redundancy away without aliasing (missing those glints and sparkles), so you need a large capture area and high resolution. Last I checked that means mega-bucks. (That’s assuming Lytro is doing anything remotely like what I’ve discussed, of course.) And if Lytro’s holographic camera requires massive advances in silicon fabrication and processing speeds, they might not stick around long enough to see this through to fruition, $50 million notwithstanding.
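To put a rough shape on why the numbers get scary, here is a back-of-envelope calculation in Python. Every figure plugged in below is a made-up placeholder, not a spec for anything Lytro has announced. The only point is that sample counts multiply across all four dimensions, and the function name `raw_data_rate` is invented for this sketch.

```python
def raw_data_rate(positions_per_axis, directions_per_axis,
                  bytes_per_sample, frames_per_second):
    """Uncompressed bytes per second for a square 4D light field video.

    Rays per frame = (positions per axis)^2 * (directions per axis)^2.
    """
    rays = positions_per_axis ** 2 * directions_per_axis ** 2
    return rays * bytes_per_sample * frames_per_second


# Placeholder example: 500 x 500 positions, 100 x 100 directions,
# 3 bytes per sample, 30 frames per second.
rate = raw_data_rate(500, 100, 3, 30)
print(f"{rate / 1e9:.0f} GB/s uncompressed")
```

Double the resolution along every axis and the rate goes up sixteen-fold. The redundancy mentioned above is what any practical compression scheme would have to exploit, without losing the glints.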

But I’m hoping they are working on this, and I’m hoping they succeed. Because we all deserve beautiful live-action VR — I don’t want to live in a virtual world with seams any longer.