The VR spotlight might be on Oculus right now after last week’s developer conference, but we haven’t forgotten about Google’s Daydream ecosystem. In fact, with the first headset, Daydream View, due to launch next month, we’re getting pretty excited about the company’s more prominent arrival on the scene.

You may have already seen a snippet of our chat with VP of VR Clay Bavor from last week’s Made By Google event, but he had plenty more to share during our in-depth interview. Here are five things we learned from Google’s VR figurehead last week.

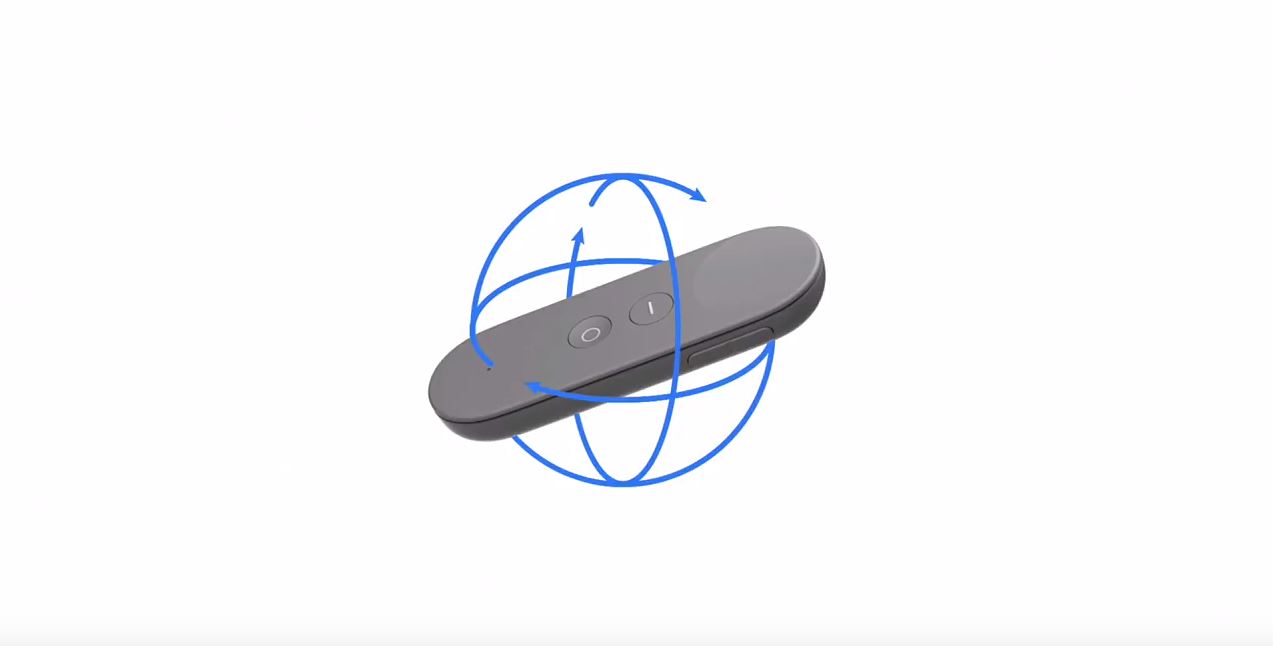

The Controller Doesn’t Have Positional Tracking, But Google Isn’t Worried About That

From what we’ve seen, the Daydream controller has more in common with a Wii Remote than it does an Oculus Touch or a Vive wand. According to Bavor, it uses “a bunch of different sensors: IMUs, accelerometers, magnetometers”. You can use it to move your hand left and right and rotate it, but you won’t be able to move it forward and backward.

The trick, according to Bavor, is to do some “clever things” with the software. He talked about how the headset uses parallax to simulate a small bit of positional tracking. “We do something similar with the controller,” he said. “So we actually have a wrist and arm model. You can actually set your hand in the setting, left hand, right hand. And from that we can actually create a far more realistic sense of the controller moving in space about you than if we were just kind of treating it as a thing rotating independently in space.”

Hopefully his comments hold true; Daydream needs to control as smoothly as possible.
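To get a rough feel for what a wrist and arm model might involve, here is a toy Python sketch. It is not Google's implementation: the function name, the body measurements, and the single fixed elbow joint are all assumptions made for illustration. The idea is only that a controller which reports orientation alone can be given a plausible position by assuming it sits at the end of a forearm pivoting about an elbow on the chosen side of the body.

```python
import math

# Illustrative guesses in metres, NOT values from Google.
# Axes relative to the head: x = right, y = up, z = forward.
SHOULDER_FROM_HEAD = (0.19, -0.19, 0.0)
ELBOW_FROM_SHOULDER = (0.0, -0.26, 0.08)
FOREARM_LENGTH = 0.25


def estimate_controller_position(yaw, pitch, right_handed=True):
    """Guess a controller position from its orientation alone.

    yaw and pitch are in radians; yaw = 0, pitch = 0 points straight ahead.
    The handedness setting mirrors the shoulder to the other side.
    """
    side = 1.0 if right_handed else -1.0

    # Hypothetical fixed elbow: shoulder offset plus upper-arm offset.
    elbow = (
        side * SHOULDER_FROM_HEAD[0] + ELBOW_FROM_SHOULDER[0],
        SHOULDER_FROM_HEAD[1] + ELBOW_FROM_SHOULDER[1],
        SHOULDER_FROM_HEAD[2] + ELBOW_FROM_SHOULDER[2],
    )

    # Unit vector in the direction the controller is pointing.
    direction = (
        math.cos(pitch) * math.sin(yaw),
        math.sin(pitch),
        math.cos(pitch) * math.cos(yaw),
    )

    # The controller sits at the far end of the forearm.
    return tuple(e + FOREARM_LENGTH * d for e, d in zip(elbow, direction))


if __name__ == "__main__":
    print(estimate_controller_position(0.0, 0.0))
    print(estimate_controller_position(0.0, math.radians(45), right_handed=False))
```

Because the hand swings around the elbow rather than spinning in place, tilting the controller up also lifts it in the virtual scene, which is roughly the effect Bavor contrasts with “a thing rotating independently in space.”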

Social VR Won’t Be Realistic For a Long Time, But That Doesn’t Matter to Google

Google has done some really interesting work with social VR already. We were big fans of the company’s anti-trolling techniques that were shown off back at I/O, and Bavor calls its Google Expeditions app one of the original social VR experiences. When it comes to Daydream, the company knows it’s not going to achieve anything realistic soon, but that might not matter.

“But what we’ve found is even just giving people the sense that someone else is there and giving them a place fixed in space where I can look and see you there, I can hear you there when you talk, you’re not alone,” Bavor said. “You’re there with someone.

“Do you look like you? No. But do I register you as you? Yes. Think about like cartoon caricatures of people. Like you can glance at a well done cartoon and know ‘Oh, that’s Ronald Reagan’ or ‘Oh that’s Marilyn Monroe’. You just know. So actually that kind of avatar representations can be very powerful but we’ve got a long way to go.”

The VR Team Did Not Build The VR Apps. All of Google Did.

With Google’s VR team expanding, you might assume it would be the one to handle VR support for popular apps like YouTube and Street View. That isn’t the case; these apps were actually built by the original teams behind each.

“One of the few things people have asked me like ‘Oh, so YouTube VR is a part of your team. Oh your team built Photo–‘ No, the YouTube team built YouTube VR,” Bavor revealed. “The Photos team built Photos VR. The Maps team built StreetView VR.

“And it’s been one of the really special things about doing this at Google. We had a vision for what more comfortable, friendlier, accessible VR could look like. We knew we had something at this intersection of really high quality, low latency, the tracking, the controller, the headset. And we went to our friends around the campus and said like ‘Hey, Android team? Let’s make this a thing. Hey, YouTube team? Let’s build YouTube VR.’ And just kind of mostly organically, we built this stuff.”

The result is an entire company that knows how to work with VR, not just a small slice of it. That can only be a good thing.

Accessibility And Content Will Keep Headsets On Heads

It’s a sad fact of life that there are some VR headsets out there gathering dust. The truth is some hardware just doesn’t have the content to keep bringing people back, while other hardware is too complicated for some people to use regularly. Those are two areas Google is specifically targeting to make sure Daydream is used and used often.

“It’s the menu that gets you back again and again and again,” Bavor said. “And for us, literally the first project that we started after Cardboard was JUMP. Why did we do that? Because we knew that the future of VR was going to be mobile. It was going to be something you had with you. We knew that creating VR experiences is hard. Super hard if you’re trying to create a novel 3D world from scratch. But it’s also hard if you’re going to do something with real-world capture, with video. We wanted to make it easy.”

He also talked about the importance of comfort to the VR experience, which will be essential for Daydream to be accepted by millions. That’s very true, though we have issues with Daydream’s comfort right now.

We Can’t Say “Okay Google!” Yet, But One Day We Might

While VR was a big focus at the event, machine learning and AI played an even larger role. But could the two ever converge? Bavor doesn’t think it’s due to happen any time soon, but he could see some of Google’s more immediate tech, like voice commands, being implemented in the near future.

“That’s achievable, for some definition of current,” he said. “But it’s something we’re all excited about, right? ‘Hey, let’s go to the aquarium tonight! Okay Google, take me to the aquarium.'”

As for something a little more ambitious, Bavor was vague but did provide an exciting tease.

“A computer you can interact with as naturally as I can with this coffee cup or I can talk to a person over there, but one which is informed and driven by and helped by an agent which is itself very smart, can reason about what I’m doing in the world and help me navigate my information, my computing experience far more effectively. I think that’s a long way out, but boy am I excited.”