As always, one of the highlights of this year’s Oculus Connect developer conference was the staggeringly detailed keynote talk from Michael Abrash, Chief Scientist at the newly-renamed Facebook Reality Labs. Abrash’s team is paving the future of VR and AR for Oculus, developing breakthrough technologies that could someday make headsets even more immersive than they are right now. For this year’s talk, he provided an update on just how far the team has come in the past two years.

Specifically, Abrash revisited predictions he made for VR in 2021 back at Oculus Connect 3 in 2016. His talk then included a look at the future of aspects like display, audio and haptics alongside estimations of when we’d get our hands on improved versions of each. Last week, he went over each of those estimations and assessed how they were holding up. It was a lot to process, so we’ve run down five main takeaways from his insightful talk.

Displays, Foveated Rendering And Virtual Humans Are Developing Quicker Than Expected

Abrash summed up his talk by suggesting that his predictions were pretty much “on track”, though some areas have made much more progress than others. Reassuringly, he stuck by most of them.

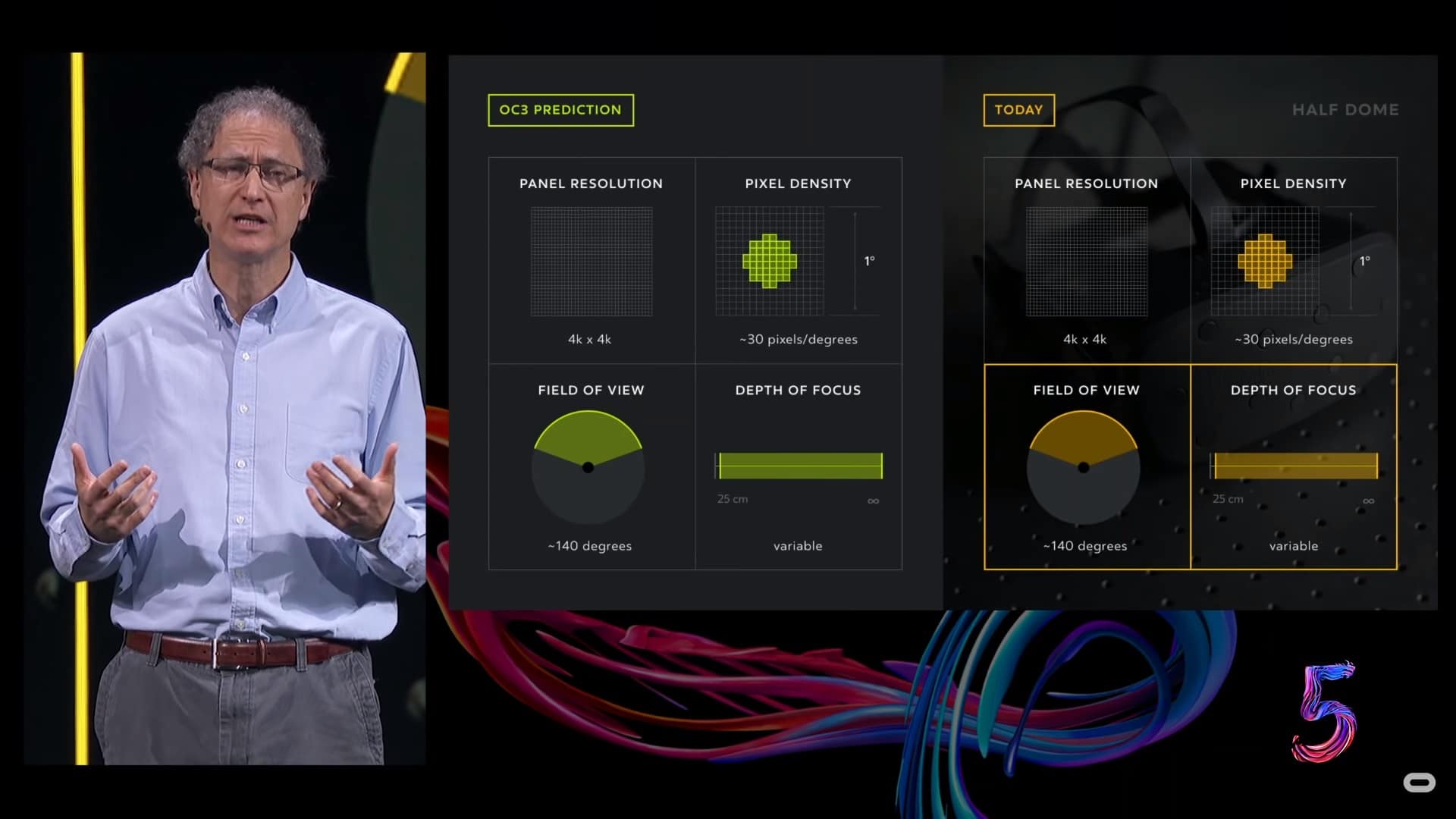

For example, one of the most intriguing segments of Abrash’s OC3 predictions concerned displays. He estimated that, five years from 2016, we would see a VR headset with a 140-degree field of view (FOV), variable depth of focus, and a 4K x 4K panel resolution with a density of 30 pixels per degree. Well, things are looking good in this area.
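As a rough sanity check on how those display numbers fit together, here is a minimal sketch that divides horizontal pixels by horizontal FOV. It assumes pixels are spread evenly across the field of view (real headset lenses do not do this), and since Abrash's "4K" could mean 4,000 or 4,096 pixels across, it tries both. The function name is ours, not anything from Oculus.

```python
def pixels_per_degree(horizontal_pixels: int, fov_degrees: float) -> float:
    """Average angular pixel density.

    Assumption: pixels are distributed uniformly across the field of view,
    which is a simplification of how real VR optics behave.
    """
    if fov_degrees <= 0:
        raise ValueError("fov_degrees must be positive")
    return horizontal_pixels / fov_degrees


# Two readings of "4K" across a 140-degree field of view.
ppd_4000 = pixels_per_degree(4000, 140)  # roughly 28.6
ppd_4096 = pixels_per_degree(4096, 140)  # roughly 29.3

print(f"4000 px over 140 degrees: {ppd_4000:.1f} pixels per degree")
print(f"4096 px over 140 degrees: {ppd_4096:.1f} pixels per degree")
```

Either way the result lands just under 30 pixels per degree, so the resolution and FOV targets are broadly consistent with the stated density.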

At F8 earlier this year, Facebook introduced its Half Dome headset prototype. Though still very much a work-in-progress, the kit featured varifocal displays and a 140-degree FOV. “Half Dome achieved two of my three display predictions three years early,” Abrash admitted, further adding that implementing 4K displays with 30 pixels per degree would be “straightforward”.

Going into more detail, he explained that Reality Labs had made “significant progress” in varifocal displays (which provide accurate blur based on the proximity of virtual objects to your eyes) using an AI-driven renderer called Deep Focus.

Work in foveated rendering, which uses eye-tracking to fully render only the area of the display the user is looking at and thus save on computational power, is also on track thanks to the help of a deep learning tool that fills in missing pixels.
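To give a feel for why foveated rendering saves so much work, here is a minimal sketch that lowers the fraction of pixels rendered as you move away from the gaze point. It is not Facebook's renderer and leaves out the deep learning step that fills in missing pixels. The 5-degree fovea, 20-degree falloff and 10 percent floor are hypothetical values chosen purely for illustration.

```python
import math


def eccentricity_deg(px, py, gaze_x, gaze_y, pixels_per_degree):
    """Angular distance of a pixel from the gaze point (flat, small-angle approximation)."""
    return math.hypot(px - gaze_x, py - gaze_y) / pixels_per_degree


def shading_fraction(ecc_deg, fovea_deg=5.0, falloff_deg=20.0, floor=0.1):
    """Fraction of pixels to render at a given eccentricity.

    All three parameters are hypothetical: full detail inside the fovea,
    then a linear drop to `floor` over the next `falloff_deg` degrees.
    """
    if ecc_deg <= fovea_deg:
        return 1.0
    t = min((ecc_deg - fovea_deg) / falloff_deg, 1.0)
    return 1.0 - t * (1.0 - floor)


def average_shading_cost(width, height, gaze_x, gaze_y, pixels_per_degree, step=8):
    """Estimate the share of full-resolution work by sampling a coarse pixel grid."""
    total = 0.0
    count = 0
    for y in range(0, height, step):
        for x in range(0, width, step):
            ecc = eccentricity_deg(x, y, gaze_x, gaze_y, pixels_per_degree)
            total += shading_fraction(ecc)
            count += 1
    return total / count


# A toy 400 x 400 view at 10 pixels per degree, with the user looking at the center.
cost = average_shading_cost(400, 400, 200, 200, 10.0)
print(f"Estimated share of full-resolution work: {cost:.0%}")
```

The wider the field of view, the more of the image sits in the low-detail periphery, which is why the technique matters more as headsets approach the FOV figures Abrash described.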

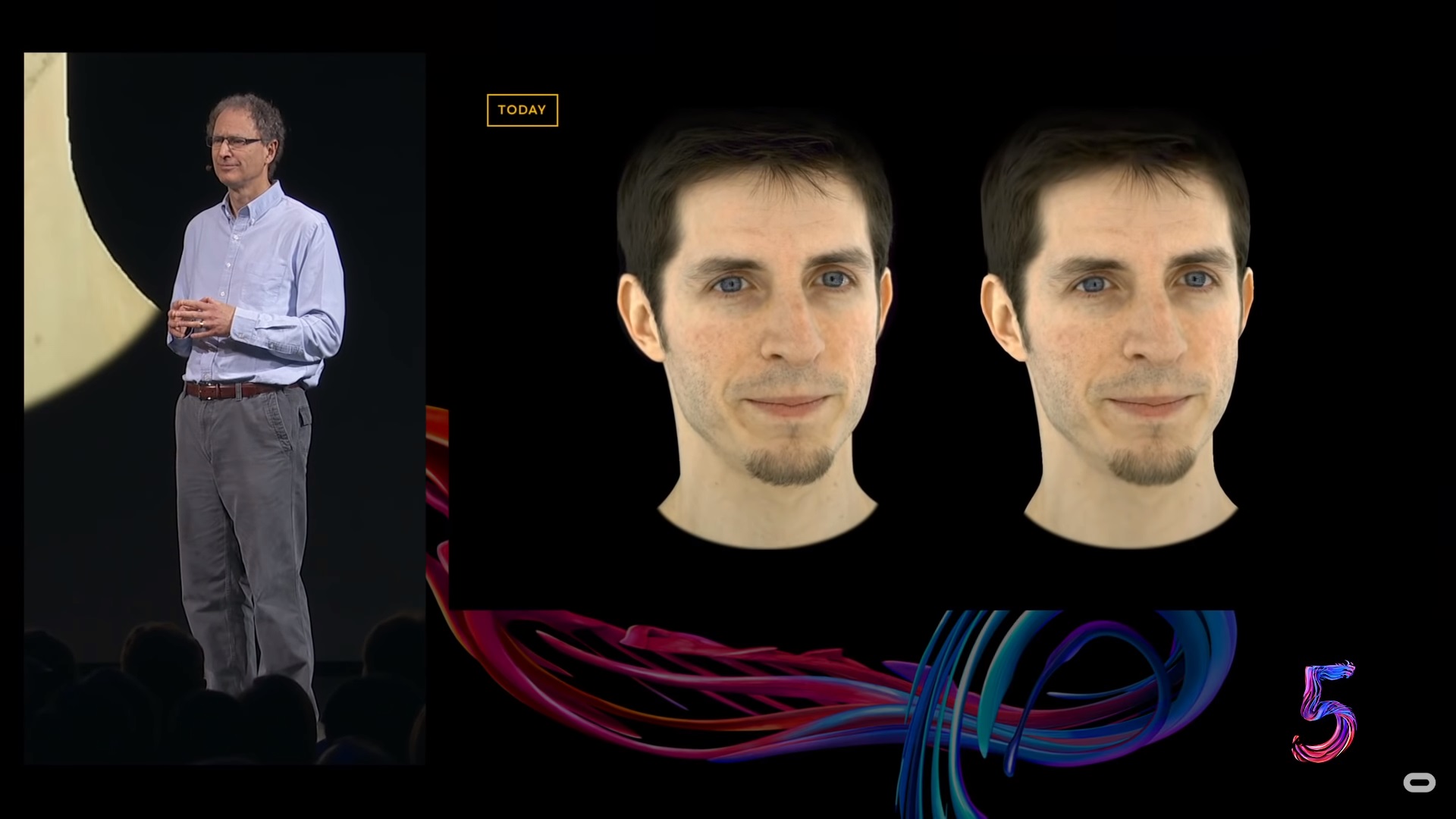

Virtually real humans have also come some way using an early system known as codec avatars, a “novel machine-based learning approach” that could one day “revolutionize how we communicate and collaborate.”

There are other technologies pushing past Half Dome, too. Abrash spoke of pancake lenses, which will allow much sharper images at resolutions that go beyond 4K, and could even support a FOV of around 200 degrees and more compact headsets (though he did note the latter two couldn’t be achieved in the same device). Even these, however, are likely to be surpassed by waveguide displays currently in development for AR devices (more on that in a bit).

But Eye-Tracking, Realistic Audio And Other Features Might Take Just A Bit Longer

While many of Abrash’s predictions had positive outlooks, he did also have to adjust his timeline slightly on some aspects. He now expects competent eye-tracking and high-quality mixed reality (which includes photorealistic replication of real world environments) to be here around 2022 rather than 2021, for example.

He also acknowledged that, despite recently experiencing an incredibly compelling demo of personalized head-related transfer function (HRTF) audio, it may take longer to get here than he thought. Personalized HRTF (the Oculus SDK already uses a generalized version) is able to replicate the distance and position of audio within a VR environment with incredible accuracy, though Abrash’s most recent demonstration required him to have his ears scanned for 30 minutes. Not ideal.

“Overall my two-year predictions are looking pretty good, but I was perhaps a little more specific than I should have been, not about the technologies themselves but about their timing. I think most of what I talked about will be in consumers’ hands a year later than I thought; four years from now rather than three,” Abrash noted. “But, apart from that, not only are the predictions on track, it’s actually starting to look like I underestimated in some areas.”

There Were New Predictions Too

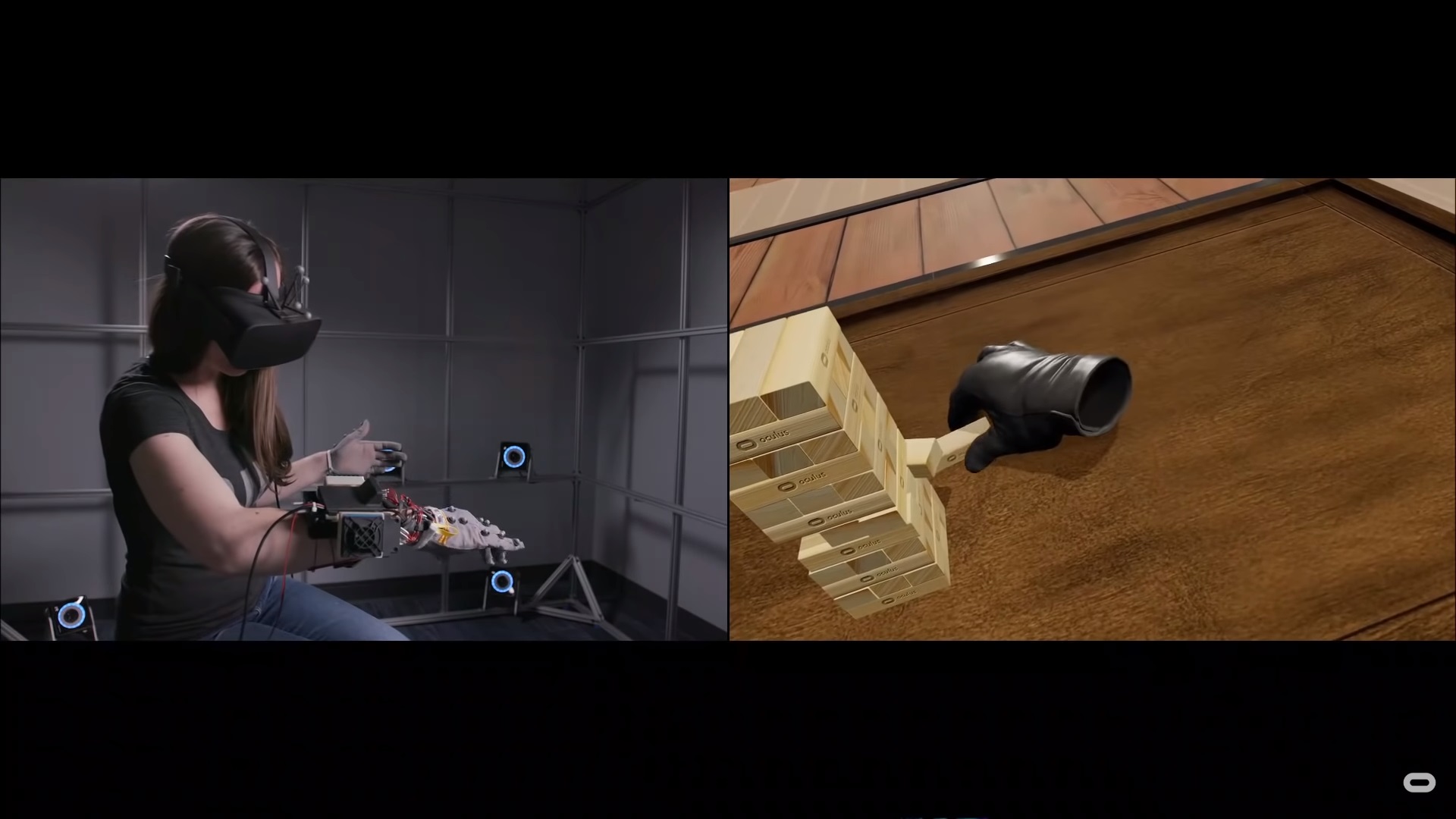

One of the best bits of Abrash’s talk was a video showcasing Reality Labs’ very early work with haptic gloves that can provide realistic resistance when grabbing objects. A VR user played a game of Jenga with startling accuracy, including full hand-tracking to allow her to grab individual blocks and even place a virtual rubber duck atop the tower. It looked incredibly promising, though Abrash stressed that this was very early work, predicting that a similar form of haptic control would reach consumers’ hands within 10 years. As he said himself, this is the farthest-out VR prediction he’s yet made, but it’s an important one.

To recap: Abrash believes that by 2028 our sense of touch could be simulated realistically by a glove-like VR attachment.

“I predict the first time you get to use your hands with haptics in VR it will be as much of a revelation as the first time that you put on a VR headset,” he said.

Facebook’s Work In AR Has ‘Ramped Up’ And It’s Helping VR Too

Oculus may have started out as a VR company first and foremost, but continued progress in augmented reality has made the field increasingly hard to ignore. Indeed, Facebook’s hiring spree over the past few years has made it obvious that Oculus is now working in AR, and Abrash confirmed that Facebook Reality Labs’ work in the field “has ramped up a great deal over the last two years.”

Does this mean we’ll see an AR headset from Oculus one day? It’s very possible, but Abrash also noted that this progress in AR is helping its work in VR as the two share a “great deal of overlap.”

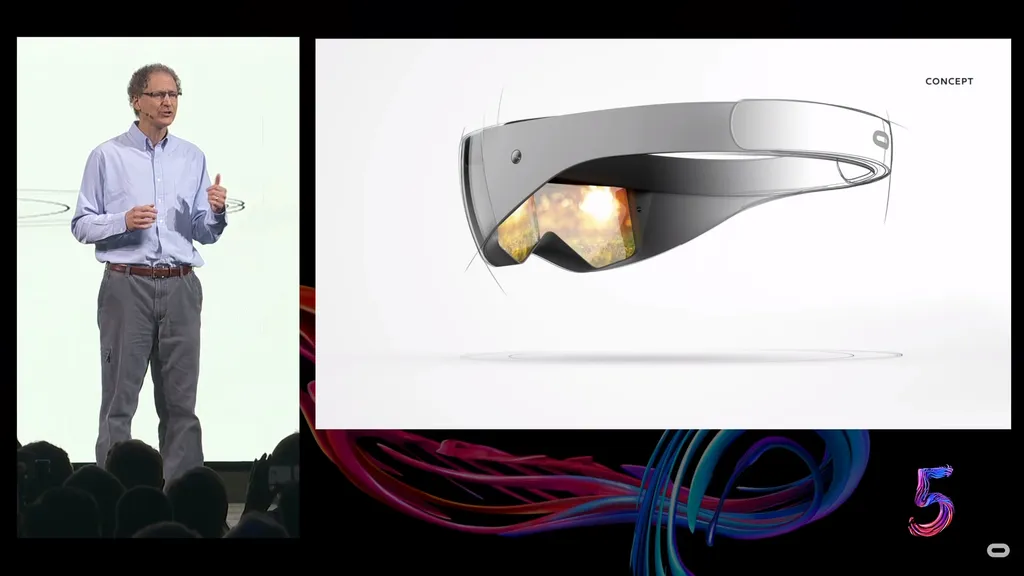

“VR can advance further and faster by leveraging AR technology,” Abrash noted. He explained that Reality Labs had to invent an entirely new display system for its work in AR, which could in turn “take VR to a different level.” The company’s work with AR waveguide displays, which utilize light injected into a thin lens no bigger than a few millimeters, is helping push work in VR to the point where Abrash even showcased concept art (seen above) of a strictly hypothetical Oculus headset that utilizes them.

Ergonomics is another big area of overlap, as the demands on a socially acceptable AR headset are far greater than those on a VR headset. By applying those same demands to VR, we could one day have much sleeker headsets. “Applying AR technology to VR, especially display, silicon, audio and computer vision, will make it genuinely possible to build something like the visor I showed you earlier,” Abrash claimed.

In Fact, VR May Be The Best Place For AR In The Next Few Years

Ergonomics aside, though, Abrash claimed that VR headsets may even be able to offer better ‘mixed reality’ experiences than AR devices in the coming years. Whereas devices like Magic Leap One and HoloLens use AR displays with see-through optics upon which digital elements are overlaid, Abrash argued that VR’s more controlled environment, with an opaque display showing camera and sensor-based reconstructions of the real world, might offer a more compelling package for mixing two realities in real time.

“Mixed reality in VR is inherently more powerful than AR glasses because there’s full control of every pixel, rather than additive blending,” he said. “VR can also provide a richer experience than AR because the display doesn’t have to be see-through, the form factor is much less constrained, and it doesn’t have to run off of a battery for an entire day. So the truth is that VR will not only be the first place that mixed reality is genuinely useful, it will also be the best mixed reality for a long time.”
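The difference between "additive blending" and "full control of every pixel" can be sketched with a toy color model. This is a simplified illustration and not how any particular headset composites images: colors are RGB values between 0 and 1, a see-through display is assumed to only add light on top of the real world, and an opaque display is assumed to replace the camera image using an ordinary alpha mix. The function names are ours.

```python
def additive_blend(real, virtual):
    """See-through AR model: the display can only add light to what the eye already sees."""
    return tuple(min(r + v, 1.0) for r, v in zip(real, virtual))


def opaque_composite(real, virtual, alpha):
    """VR passthrough model: each pixel is an alpha mix of camera image and virtual content."""
    return tuple(alpha * v + (1.0 - alpha) * r for r, v in zip(real, virtual))


bright_wall = (0.8, 0.8, 0.8)   # the real world behind the virtual object
black_object = (0.0, 0.0, 0.0)  # a virtual object that should look black

# Additive display: adding "black" adds no light, so the wall shows through untouched.
print(additive_blend(bright_wall, black_object))

# Opaque display: a fully opaque virtual pixel replaces the wall entirely.
print(opaque_composite(bright_wall, black_object, alpha=1.0))
```

In this model the see-through display can never draw anything darker than the scene behind it, which is one way to read Abrash's point about VR having the more capable canvas for mixed reality.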

Abrash does say these experiences will eventually converge, though that’s a long way off.

The VR/AR Takeover Is Inevitable

A personal favorite moment of Abrash’s talk came towards the start, when he spoke about Steve Jobs’ early visits to see the first-ever personal computer, the Xerox Alto, in 1979. He lifted a key quote from the Apple icon about how the technology was so powerful it would inevitably become a part of our lives.

The quote reads: “And within… ten minutes, it was obvious to me that all computers would work like this some day. It was obvious. You could argue about how many years it would take. You could argue about who the winners and losers might be. You couldn’t argue about the inevitability, it was so obvious.”

It’s a quote that seems very appropriate to the current state of the VR and AR industries, where early, expensive hardware is fueling doubt about their future as a market. Abrash then updated it a bit, saying: “Imagine a VR headset that’s a sleek, stylish, lightweight visor with a 200 degree field of view, retinal resolution, high dynamic range and proper depth of focus, with audio that’s so real you can’t believe it’s computer generated, that lets you mix real and virtual freely, that lets you meet, share and collaborate with people regardless of distance and that lets you use your hands to interact with the virtual world. If that existed, we’d be playing, working and connecting in it every day.”

“Imagine AR glasses that are socially acceptable and all-day wearable, that give you useful virtual objects like your phone, your TV and virtual work spaces, that give you perceptual super powers, a context-aware personal assistant, and above all the ability to connect, share and collaborate with others anywhere, any time. If those glasses existed today, we’d all be wearing them right now.”

Crucially, Abrash reaffirmed that “while it may be hard to believe, it’s all doable.”