For the tech, film, and gaming exhibits at SXSW, VR and 360-degree media will be leaving a major imprint. From promotional work for various brands to VR roller coasters, you’ll be hard-pressed not to run into some sort of immersive content. Graphics chip giant AMD will be represented in many of the sessions taking place, and they’ll also have some new technology on display that could change journalism in a big way.

Journalists have started taking advantage of 360-degree media, some even producing dedicated shows that use the format exclusively. Cameras are becoming more accessible and powerful, but there are still strides to be made in the production, editing, and streaming of such content. AMD is trying to move things forward with its Radeon Loom 360 stitching technology, which reduces the complexity of stitching together the footage from the many cameras a 360-degree video requires, to the point where stitching can happen in real time. UploadVR discussed the new technology over email with AMD’s head of software and VR marketing, Sasa Marinkovic, ahead of AMD’s SXSW panel “Virtual Reality: The Next News Experience,” taking place on March 14.

UploadVR: How will real-time stitching impact 360-degree production crews the most?

Sasa Marinkovic: Previous hardware and software limitations meant that it would take many hours or days to stitch a high-resolution 360-degree video, which clearly posed a major challenge for 360-degree production crews. While some stories can wait, many cannot, especially those we consider “breaking news” events.

Radeon Loom, AMD’s open-source 360-degree video-stitching framework, helps to resolve this issue, empowering production crews to capture and stitch content in real time and produce immersive news stories with almost the same immediacy as other digital or broadcast mediums.
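To put “real time” in concrete terms: a stitcher keeps pace with live capture only if it finishes each output frame within one frame interval. The sketch below is a back-of-the-envelope check, not anything taken from Radeon Loom; the 60 fps rate, the 10-minute clip, and the per-frame stitch times are hypothetical values chosen for illustration.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to stitch one frame if stitching is to keep pace with capture."""
    return 1000.0 / fps


def is_real_time(stitch_ms_per_frame: float, fps: float) -> bool:
    """True if a (hypothetical) per-frame stitch time fits inside the frame budget."""
    return stitch_ms_per_frame <= frame_budget_ms(fps)


def offline_hours(clip_minutes: float, fps: float, stitch_s_per_frame: float) -> float:
    """Wall-clock hours needed to stitch a whole clip at a given per-frame cost."""
    frames = clip_minutes * 60 * fps
    return frames * stitch_s_per_frame / 3600


if __name__ == "__main__":
    fps = 60
    print(f"Budget per frame at {fps} fps: {frame_budget_ms(fps):.1f} ms")
    # Hypothetical slow offline stitcher: 2 s per frame on a 10-minute clip.
    print(f"Offline stitch time: {offline_hours(10, fps, 2.0):.0f} h")
    # Hypothetical fast stitcher: 12 ms per frame.
    print("Real time at 12 ms/frame?", is_real_time(12, fps))
```

At 60 fps the budget is about 16.7 ms per frame. A stitcher needing 2 s per frame would spend 20 hours on a 10-minute clip, which illustrates the gap between “hours or days” and live output.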

There are still a few challenges to resolve, both in the kind of equipment being used and in how it is used. There are various types of journalism, from news reporting (short to intermediate time frames) to investigative storytelling and documentaries (intermediate to long term). The type of story dictates how quickly it needs to be published, and therefore the kind of editing that needs to be applied.

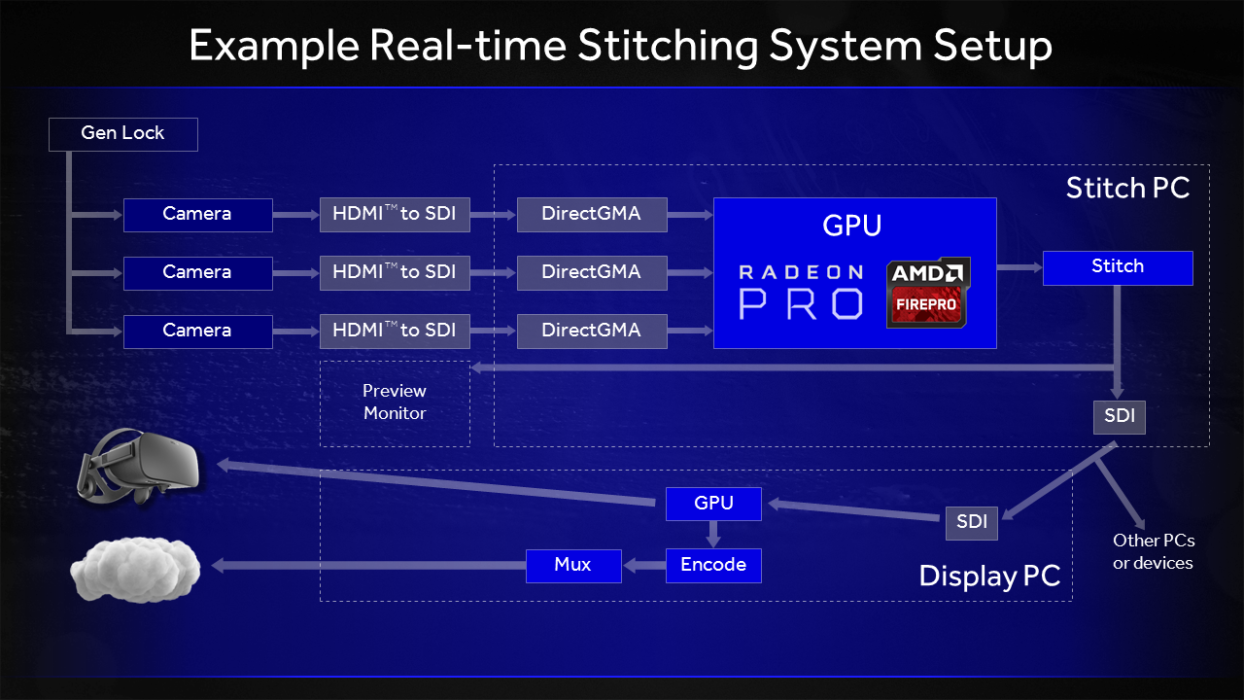

For a real-time setup, the placement of all the equipment also needs to be considered. Each situation is unique, but you can imagine several different scenarios, such as filming an interview with a single rig or broadcasting a live concert with multiple camera rigs. With 360-degree cameras, you generally can’t have a camera operator behind the camera, since they would be in the shot. So you probably want to locate the stitching and/or viewing PCs far away, or behind a wall or green screen, for example.

UploadVR: Could this be the type of technology that could help cable news networks break into the 360-degree platform for their live showings?

Sasa Marinkovic: As previously mentioned, hardware and software limitations meant that it would take many hours or days to stitch a high-resolution 360-degree video, so 360-degree content was often reserved for longer-lead formats, like investigative reporting and documentary journalism.

Radeon Loom, in combination with our Radeon GPUs and the fastest CPUs, enables both real-time live stitching and fast offline stitching of 360-degree videos. This means that even breaking news stories can be shown in 360-degree video, creating the ultimate immersive experience of events as they unfold.

This has the potential to invigorate journalism, and really all storytelling, by adding immediacy to immersion. Just imagine how impactful it would be to watch street demonstrations live in 360 degrees, such as those that have taken place in Cairo’s Tahrir Square.

UploadVR: What are the immediate benefits of using VR for journalism? Any perceived obstacles that must be overcome?

Sasa Marinkovic: The innate human desire to immerse ourselves in 360-degree images, stories and experiences is not new to our generation. In VR, however, for the first time ever, the spectator can become part of the action, whether that means recreating a historic moment or attending a live sporting event, from anywhere in the world. The VR experience is like nothing we have had before, aside from real life. However, there are still some basic problems that need to be worked on, such as parallax; camera count vs. seam count; exposure differences between sensors; and storage.

- Parallax: First, the parallax problem. Very simply, two cameras in different positions see the same object from different perspectives, just as a finger held close to your nose appears against different backgrounds when viewed with each eye in turn. Ironically, this disparity is what our brain uses to determine depth when it combines the two images. At the same time, it causes problems when we try to merge two images so that your eyes are fooled into seeing them as one.

- The number of cameras vs. the number of seams: Second, using more cameras creates higher-resolution images with better optical quality (narrower lenses introduce less distortion than fisheye lenses), but it also means more seams, and therefore more opportunities for artifacts. As people and objects move across the seams, the parallax problem is repeatedly exposed with small angular differences. It’s also more difficult to align all the images when there are more cameras, and misalignment leads to ghosting. More seams also mean more processing time.

- Exposure variances: Third, each camera sensor observes different lighting conditions. For example, when filming a sunset, a west-facing camera looks at the sun while an east-facing camera views a much darker region. Although clever algorithms exist to adjust and blend exposure variations across images, they come at the cost of lighting and color accuracy, as well as overall dynamic range. The problem is amplified in low-light conditions, potentially limiting artistic expression.

- Storage: Finally, the amount of data that 360-degree video rigs generate is enormous. If you’re stitching 24 HD cameras at 60 fps, you generate around 450 GB per minute.
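The parallax point can be made concrete with a little geometry. Two cameras separated by a baseline b see a point at distance Z from directions that differ by 2·atan(b / 2Z), so nearby objects produce large disagreements at a seam and distant ones almost none. The sketch below assumes an idealized camera pair with the point centered between them; the 10 cm baseline, 90° lens, and 1920-pixel sensor width are illustrative assumptions, not the specs of any particular rig.

```python
import math


def parallax_deg(baseline_m: float, distance_m: float) -> float:
    """Angle between two cameras' lines of sight to a point centered between them."""
    return math.degrees(2 * math.atan(baseline_m / (2 * distance_m)))


def parallax_px(baseline_m: float, distance_m: float,
                hfov_deg: float, width_px: int) -> float:
    """Approximate seam misalignment in pixels, assuming uniform pixels per degree."""
    return parallax_deg(baseline_m, distance_m) * width_px / hfov_deg


if __name__ == "__main__":
    baseline = 0.10  # hypothetical 10 cm spacing between adjacent cameras
    for distance in (0.5, 1.0, 3.0, 10.0, 50.0):
        deg = parallax_deg(baseline, distance)
        px = parallax_px(baseline, distance, hfov_deg=90.0, width_px=1920)
        print(f"{distance:5.1f} m: {deg:6.3f} deg ~ {px:7.1f} px")
```

The disparity falls off roughly as 1/Z, which is why stitching artifacts tend to appear on subjects close to the rig, such as an interviewee, rather than on the background.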
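The cameras-versus-seams trade-off can be sketched for the simplest layout, a single horizontal ring of identical cameras. This is an assumed geometry (real rigs often add cameras pointing up and down), and the 20° stitching overlap and 1920-pixel sensor width are hypothetical. In a closed ring each adjacent pair shares one seam, so seams grow one-for-one with cameras, while each lens can be narrower and spend more pixels per degree of the scene.

```python
from dataclasses import dataclass


@dataclass
class RingLayout:
    cameras: int
    seams: int
    lens_hfov_deg: float
    px_per_deg: float


def ring_layout(cameras: int, overlap_deg: float, sensor_width_px: int) -> RingLayout:
    """Idealized single-ring rig: each camera covers 360/N degrees plus stitching overlap."""
    lens_hfov = 360.0 / cameras + overlap_deg
    return RingLayout(
        cameras=cameras,
        seams=cameras,  # closed ring: one seam between each adjacent pair
        lens_hfov_deg=lens_hfov,
        px_per_deg=sensor_width_px / lens_hfov,
    )


if __name__ == "__main__":
    for n in (4, 6, 8, 12):
        r = ring_layout(n, overlap_deg=20.0, sensor_width_px=1920)
        print(f"{r.cameras:2d} cameras: {r.seams:2d} seams, "
              f"{r.lens_hfov_deg:5.1f} deg lenses, {r.px_per_deg:5.1f} px/deg")
```

In this model, going from 4 to 12 cameras more than doubles the angular resolution (about 17.5 to 38.4 px/deg) but triples the number of seams where parallax and alignment errors can show up.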
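One simple form of the exposure adjustment described in the exposure bullet is gain compensation: scale one camera’s pixel values so that the average brightness in its overlap with a neighbor matches that neighbor. The sketch below uses 8-bit grayscale values and a single global gain, a deliberate simplification (real stitchers may correct each color channel separately and vary the correction across the frame); the pixel values are made up to mimic the sunset example. It also shows the cost mentioned above: brightening the darker east-facing camera pushes its brightest pixels past the 8-bit ceiling, where they clip.

```python
def mean(values: list[int]) -> float:
    return sum(values) / len(values)


def gain_compensate(ref_overlap: list[int], other_overlap: list[int],
                    other_pixels: list[int],
                    max_value: int = 255) -> tuple[float, list[int]]:
    """Scale `other_pixels` so its overlap region's mean matches the reference camera.

    Returns the gain and the adjusted pixels, clipped to the sensor's range.
    """
    gain = mean(ref_overlap) / mean(other_overlap)
    adjusted = [min(max_value, round(p * gain)) for p in other_pixels]
    return gain, adjusted


if __name__ == "__main__":
    # Hypothetical overlap samples of the same strip of sky: the west-facing camera
    # exposes it brightly, the east-facing camera much darker.
    west_overlap = [190, 200, 210]
    east_overlap = [90, 100, 110]
    east_frame = [20, 80, 150]  # shadow, midtone, highlight from the east camera

    gain, corrected = gain_compensate(west_overlap, east_overlap, east_frame)
    print(f"gain = {gain:.2f}, corrected = {corrected}")
```

Here the gain is 2.0 and the highlight at 150 would need a value of 300 to keep its relative brightness, so it clips to 255 and detail is lost. In low light the same gain would also amplify sensor noise, one reason the problem gets worse in dark scenes.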
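The storage figure can be sanity-checked with simple arithmetic. The interview doesn’t say what format the cameras record, so the sketch below assumes uncompressed 1920×1080 frames and tries a few common bytes-per-pixel values. Under those assumptions, 10-bit 4:2:2 video (2.5 bytes per pixel) comes out at roughly 448 GB per minute, in line with the quoted ~450 GB, while 8-bit 4:2:2 and 8-bit RGB bracket it at about 358 and 537 GB.

```python
# Uncompressed bytes per pixel for a few common formats (assumed, not stated in the interview).
BYTES_PER_PIXEL = {
    "8-bit 4:2:2": 2.0,   # 8-bit luma + 8 bits of shared chroma per pixel
    "10-bit 4:2:2": 2.5,  # 10-bit luma + 10 bits of shared chroma per pixel
    "8-bit RGB": 3.0,     # three 8-bit channels per pixel
}


def raw_gb_per_minute(cameras: int, width: int, height: int, fps: float,
                      bytes_per_pixel: float) -> float:
    """Uncompressed data rate of a multi-camera rig, in decimal gigabytes per minute."""
    bytes_per_second = cameras * width * height * fps * bytes_per_pixel
    return bytes_per_second * 60 / 1e9


if __name__ == "__main__":
    for name, bpp in BYTES_PER_PIXEL.items():
        rate = raw_gb_per_minute(24, 1920, 1080, 60, bpp)
        print(f"{name:>12}: {rate:6.1f} GB/min")
```

Compressed recording formats reduce these numbers substantially, so treat the output as a rough estimate for raw capture rather than a measurement of any specific rig.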

We want to unleash the industry’s creativity for cinematic VR video experiences and make the mechanics of creating high-quality 360-degree video commonplace. We’re going to do everything we can to make it as easy as possible for developers and storytellers to create great content in real time.