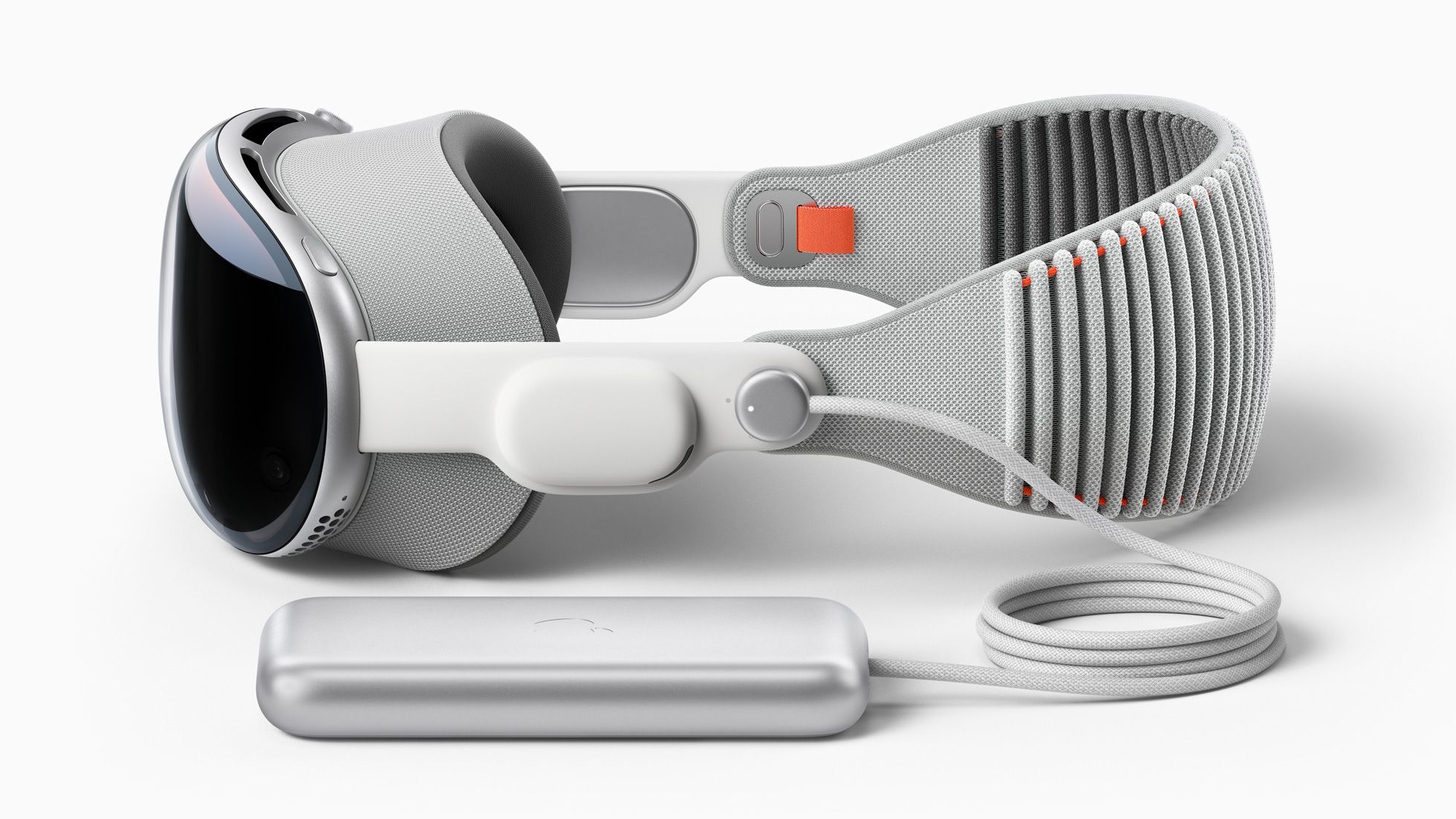

We went hands-on with Apple Vision Pro at Apple Park in California during WWDC23 and were blown away by the best headset demo ever made. We still need to learn many things about the device, but read on for our initial impressions.

Personas are what Apple calls the human scans performed by the Vision Pro headset, which are locked to the scanned individual to prevent others from wearing them. Meta calls its comparable research-stage technology Codec Avatars, and we saw the latest version of them at Meta's offices late last year.

Whether from Apple or Meta, the idea is perhaps the ultimate killer app of virtual reality. It's the key to unlocking the sense that someone is actually in a room with you even though they're physically distant. Meta's research leader overseeing the project described a handful of miracles needed to deliver the concept at scale. Namely, that means scanning a person accurately and quickly with a phone or headset, combined with headset-based sensors capable of watching eye movements and facial expressions to drive the avatar believably.

Meta last year showed two relevant demos, one with an avatar scanned from a phone and another with a person scanned in Meta's "Sociopticon" by 220 high-resolution cameras over the course of hours, with processing time for the resulting virtual human measured in days or weeks. The phone-scanned avatar was deeply unsettling and I didn't want to look at it anymore after a few seconds. The ultra-high-fidelity one, meanwhile, was driven by a PC-powered headset and I felt like it surpassed the so-called "uncanny valley". With that version of the Codec Avatar, it truly felt like he was conversing with me and present there, making eye contact. I felt like I could talk to a person thousands of miles away from me as comfortably as if we were in the same room together. I felt like I had seen the north star guiding the future of spatial computing.

Apple's demo, meanwhile, featured a live FaceTime call with another person also wearing a Vision Pro, who conveyed the idea that we could collaborate on a Freeform whiteboard together in this format. Their "Persona" had been scanned by the headset, Apple said, and the quality level felt like it fell squarely between the two demos from Meta last year. This was a representation of a person I'd feel uncomfortable talking to after a few minutes, and it certainly didn't feel like they were there with me while constrained within a floating window.

I'm starting here with Personas because this was the only part of Apple's demo where I felt disappointed. While the highest quality version of Meta's avatar tech impressed on PC, it was nowhere to be found in standalone on Quest Pro. Apple, meanwhile, is planning it as a core aspect of its first-generation Vision Pro and showed it to me directly in a standalone spatial computer.

Put another way, both Meta and Apple are reaching a bit into the future with this particular piece of their technologies and neither hyper-real visage is both perfectly believable and easily created.

When it comes to everything else? Vision Pro outclassed Meta Quest Pro and every other headset I've ever tried to a degree that is utterly show-stopping. I could see the weight of the headset still being a bit straining, and Apple wouldn't talk about the field of view, but it felt at least competitive with, if not wider than, existing headsets. Overall, Vision Pro provided easily the best headset demo I've ever tried, by a wide margin.

Vision Pro's Passthrough Is So Good It Questions Transparent AR Optics

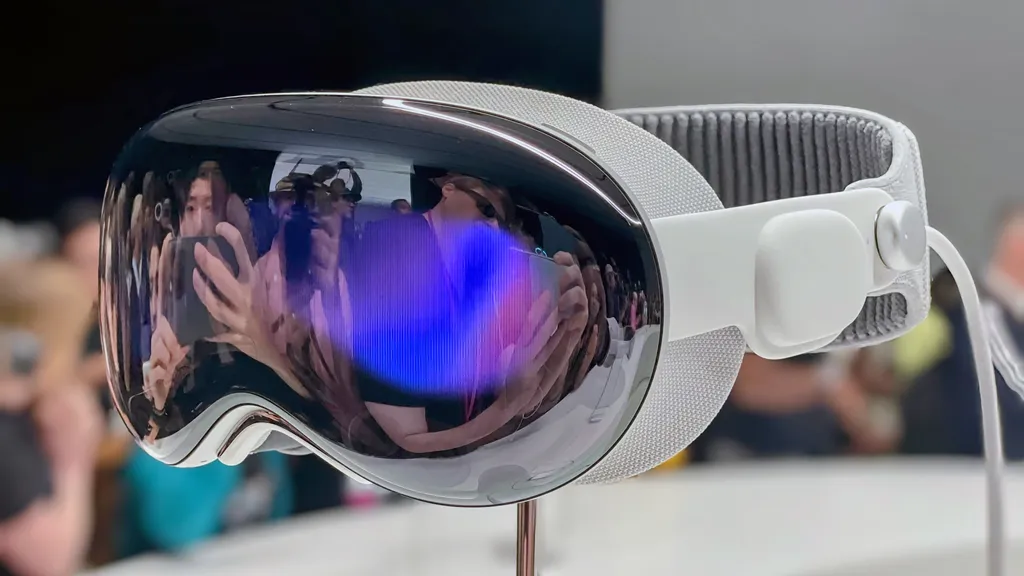

In my first moment with Vision Pro, seeing the physical room through the headset's display in passthrough, I looked down at my own hands and it felt as if I was looking at them directly. This was a powerful moment, more powerful than any previous "first" I'd experienced in VR. I feel the need to reiterate: I was looking at my own hands reconstructed by a headset's sensors and it felt as if I was looking at them directly.

For the last decade, VR developers struggled with a number of tough design questions. Should they only show tracked controller models? Should they attach cartoonish hands? What about connecting those hands to arms? Sure, those are all interesting design questions, but they should be secondary to a person simply looking down and feeling like their hands are their own. Vision Pro did this right out of the gate for the first time in VR hardware. It worked so well that I question how transparent optics, like those in use by HoloLens 2 or Magic Leap 2, will ever hope to match Apple's version of passthrough augmented reality in an opaque headset.

Passthrough was by no means perfect – I could still see a kind of jittery visual artifact with fast head or hand movements. Also, in some of Apple's software, I could see a thin outline around my fingertips. But these are incredibly minor critiques relative to the idea that this was night-and-day better than every other passthrough experience I've ever seen. From now on, every time I look through Quest Pro passthrough, I'll be frustrated that I'm not using Vision Pro.

Is the difference between the $1000 Quest Pro and $3500 Vision Pro worth it?

I'll frame my answer to this question this way – can you afford to pay $2500 extra for a better sense of sight?

The difference is like using sunglasses for reading instead of having your prescription-corrected vision. You can argue the price difference is so large as to make these gadgets incomparable, or you could argue that seeing more clearly is worth thousands of dollars to some people.

For me, I already dread looking through passthrough in Quest Pro. I just want to spend more time with Vision Pro. Notably, Quest Pro will be more than a year old by the time Vision Pro ships and Quest 3 reportedly features better passthrough.

Input & Multitasking

I try to stay objective and balanced. Sometimes I fail, and the moment in Vision Pro when I had three apps running side by side in panes in front of me – repositioning them and resizing them effortlessly with eye-tracked pinches while sitting on a couch? Well, somewhere in Apple's user feedback reports there might be a few cuss words I uttered about Meta Quest's user interface. It just felt so good to see Apple get this so right. Pinch and drag to navigate menus, or look at what you want to select and pinch to select it. That's it.

I left my Vision Pro demo convinced that eye-tracked pinches will indeed be the mouse click of AR and VR. Meta's wristband technology also showcased a route to this in all-day AR, and Quest Pro should hypothetically be capable of the same input paradigm with both eye and hand tracking. But how exactly does Meta support that experience on Pro parallel to Quest 2 and Quest 3? The cheaper Quests both have hand tracking but they lack the critical eye tracking technology that's key to targeting what you're looking at. It's what made Apple's hand tracking feel like it "just works" while Meta's, well, doesn't.

Zero Artificial Locomotion & Immersive Media

Apple's demo featured zero artificial locomotion. No analog stick to teleport or make you sick. I sat on a couch and viewed and resized panoramic photos as well as 3D movies like Avatar 2. Spatial photos and videos captured by the headset featured a nice sense of depth, and so did the 180-degree "immersive video" Apple showed that looked like the fruits of Apple's NextVR acquisition. You can't move around this content positionally, but it still looks great on Vision Pro.

The last portion of my demo opened a wall-sized portal through which a dinosaur stomped and made eye contact with me as I walked around it in the room. The Apple employees reminded me the wall was still physically there – possibly indicating that not many people request to stand up in their demos. I laughed and assured them I wouldn't be the first person in a Vision Pro to try and make a beeline through a solid wall.

We'll have more as we digest Apple's overwhelming information dump at WWDC23. For now, we can't provide more in-depth recommendations or advice about this headset, but we highly recommend you see Vision Pro whenever you get the chance.