Google’s ARCore is getting a new feature that will enable full occlusion of virtual objects in real scenes.

ARCore is Google’s Android augmented reality runtime and SDK. It provides positional tracking, surface detection, and lighting estimation so developers can easily create AR apps for high-end Android phones.

But currently, virtual objects are always drawn in front of the real scene, because ARCore has no good understanding of the depth of the real objects in it. Positional tracking works as it does on VR headsets: it tracks high-contrast features in the scene, not the entire scene.

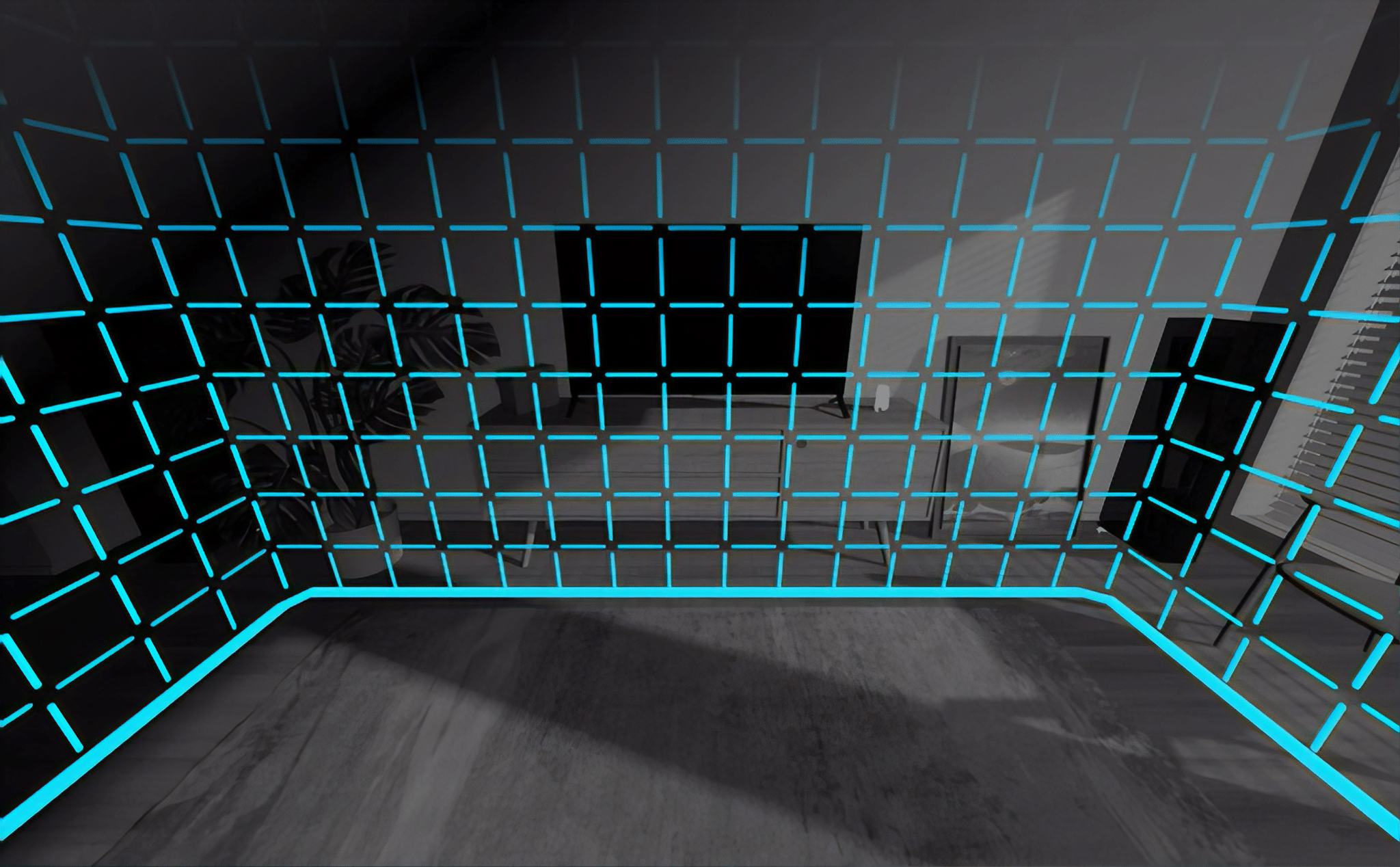

The ARCore Depth API estimates the depth of everything the camera sees. This allows for occlusion: a virtual object will now appear behind a real object if the real object is closer to the camera.
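To make the idea concrete, here is a minimal sketch of the per-pixel depth test that occlusion comes down to. It is not ARCore's API or Google's implementation: the function name, the list-of-rows image layout, and the convention that `None` means "no virtual content at this pixel" are all assumptions made for illustration.

```python
from typing import Any, List, Optional

Image = List[List[Any]]                        # rows of pixels (any pixel type)
DepthMap = List[List[float]]                   # rows of distances in meters
VirtualDepth = List[List[Optional[float]]]     # None = no virtual content here


def composite_with_occlusion(
    camera: Image,
    virtual: Image,
    real_depth: DepthMap,
    virtual_depth: VirtualDepth,
) -> Image:
    """Hypothetical helper: keep a virtual pixel only where it is closer
    to the camera than the real surface estimated at that pixel."""
    out: Image = []
    for cam_row, virt_row, rd_row, vd_row in zip(
        camera, virtual, real_depth, virtual_depth
    ):
        out_row = []
        for cam_px, virt_px, rd, vd in zip(cam_row, virt_row, rd_row, vd_row):
            if vd is not None and vd < rd:
                out_row.append(virt_px)   # virtual object is in front
            else:
                out_row.append(cam_px)    # real object hides it, or nothing to draw
        out.append(out_row)
    return out
```

Without a depth estimate for the real scene, the comparison has nothing to test against, which is why virtual objects have so far always been drawn on top. A real renderer would run this test on the GPU rather than in a Python loop.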

Occlusion is arguably as important to AR as positional tracking is to VR. Without it, the AR view will often “break the illusion” through depth conflicts.

Apple’s ARKit for iOS and iPadOS doesn’t have full depth occlusion yet. However, on the most recent, most powerful devices it does now have occlusion for human bodies, including hands. ARCore and ARKit have stayed roughly equivalent until now, so it’s interesting to see them start to diverge. This will make life harder for developers, but allow for specific innovation on each platform.

Real-time understanding of scene depth could also be hugely beneficial for virtual reality. Current VR headsets keep you aware of your real surroundings by having you draw out your playspace during setup. If you come close to this boundary, it is shown. But this technology could make boundaries automatic and three-dimensional: walking too close to your couch could make it appear in the headset.
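As a rough illustration of how such an automatic boundary might be triggered, the sketch below checks a depth map for anything closer than a warning distance. No headset is known to work this way; the function names, the 0.5 m default, and the convention that non-positive values mean "no depth estimate" are assumptions for the example.

```python
from typing import List, Optional


def nearest_obstacle_m(depth_map: List[List[float]]) -> Optional[float]:
    """Return the smallest valid distance in meters, or None if there is none.

    Assumption: values <= 0 mark pixels with no depth estimate.
    """
    valid = [d for row in depth_map for d in row if d > 0.0]
    return min(valid) if valid else None


def should_show_surroundings(
    depth_map: List[List[float]], warn_distance_m: float = 0.5
) -> bool:
    """Hypothetical trigger: reveal the real world when anything is too close."""
    nearest = nearest_obstacle_m(depth_map)
    return nearest is not None and nearest < warn_distance_m
```

A full system would go further and reveal only the nearby object, such as the couch, rather than toggling the whole view.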

Facebook, which owns the Oculus brand, has shown off real-time mobile depth mapping too, but hasn’t shipped it in a product yet.

ARCore’s Depth API isn’t publicly available and Google hasn’t given any release window, but developers can sign up for approval to try it out.