Everyone says that augmented reality (AR) will be bigger than virtual reality (VR): analysts, investors, technologists, and futurists alike. But because you’re much more likely to hear about VR today, especially this month with the launches of PlayStation VR, Google Daydream, and Oculus Touch, AR can feel distant and overhyped.

This gigantic list of future AR use cases should set your mind reeling with the possibilities. Although most of these applications don’t exist yet, I expect them to arrive within the next 10 to 15 years. Let’s make this a living document: if I missed a major use of AR, comment below and I’ll add it.

Three quick notes before we start. First, let’s clarify the difference between AR and VR.

VR blocks out the real world and immerses the user in a digital experience.

If you’re putting on a headset in your living room and are suddenly transported to a zombie attack scenario, that’s VR.

AR adds digital elements on top of the real world.

If you’re walking down the street in real life and a Dragonite pops up on the sidewalk, that’s AR.

Second, most use cases for VR prioritize interaction with the virtual world over the physical one. Because you’re fully immersed in a screen, you aren’t interacting much with the real world. Many of VR’s limitations today stem from awkward run-ins with the real world, like bumping into walls in room-scale setups or the social awkwardness of immersing yourself in a mobile headset in a public place like a train. Because users generally stay in a single spot or small area in VR, the medium lends itself well to uses like gaming, watching videos, and education.

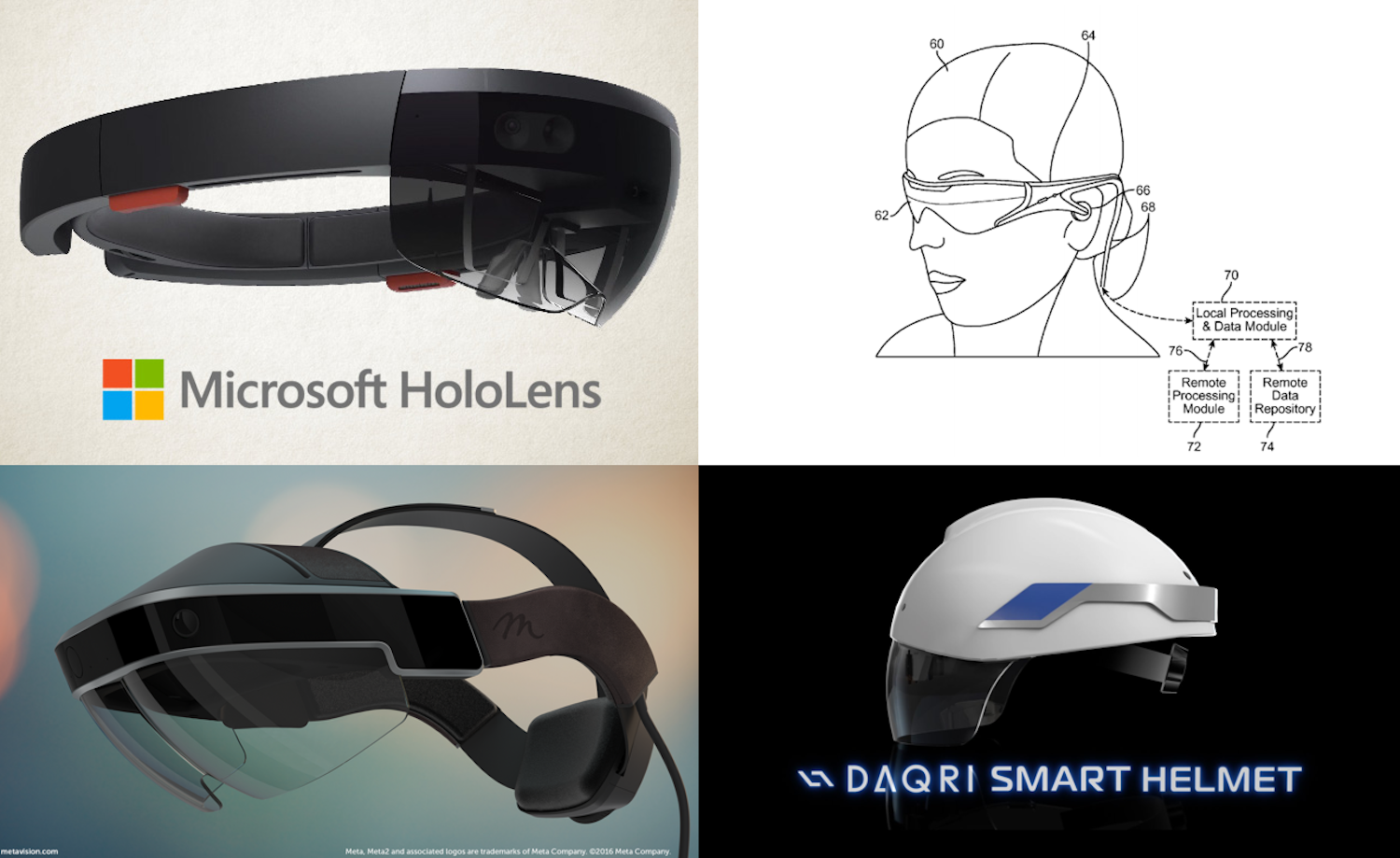

AR is more focused on bridging digital and physical spaces. It accompanies you as you move through the world and augments your activities with information, whereas you typically step out of that world to immerse yourself in VR. Today’s AR headsets are big, helmet-like visors, but they’re heading toward normal-looking glasses and, ultimately, contact lenses.

Third, AR and VR are converging into the same thing. As graphics improve, it will get harder to tell what’s physical and what’s digital. An AR sign outside a building could be as real and as significant as a physical one if everyone is using AR tech, everyone sees it when they walk by, and it persists in that location. Ultimately, we’ll have hardware that lets us switch between AR and VR modes, adjusting opacity to suit the context: more see-through for AR, fully opaque for VR. We’re treating AR and VR as distinct for now because they’re developing separately and on different timelines.

6 quick (and key) AR concepts

These underpin most of the AR use cases in the list, so it’s helpful to understand them:

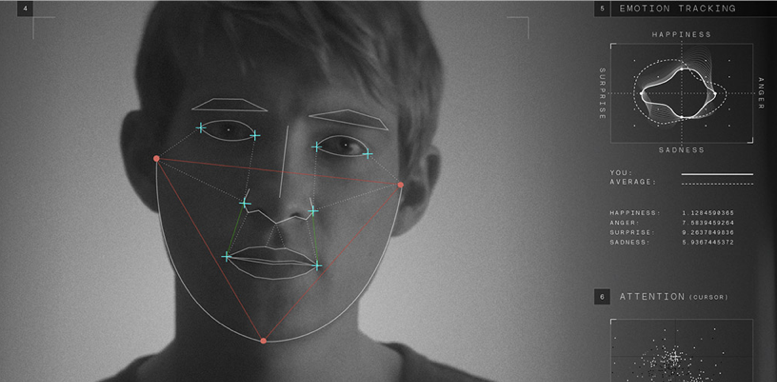

- Sentiment analysis: scan a person, or a group of people, and run apps to analyze body language, micro-expressions, language, and behavior. Get real-time feedback on how that person or group appears to be feeling or reacting, and adjust accordingly.

- Facial recognition: scan a face and match it to an existing identity database to learn a person’s name and background information just by looking at him or her.

- Object identification: use computer vision to detect and identify objects and track their physical location. This includes tracking the AR user’s location in relation to objects.

- Information augmentation and display: once an object or person is identified, automatically search for information about it and display it to the AR user.

- Porting from mobile phones to AR headsets: generally, AR shifts a user’s attention from looking down at a phone to looking ahead through a headset. Almost anything that can be done on a phone (or any display device, really) will be done in AR.

- Processing, sensing, and scanning: AR devices will contain their own processing units. At first, these will be big and clunky and may be housed in a separate unit connected to the headset, but they’ll shrink until they’re an indistinguishable part of the AR glasses. AR devices will also track wearers’ movements and locations and take rapid 3D scans of wearers and environments to project them elsewhere.

And now onto the list:

Productivity and work

Screen creation: project high-resolution screens anywhere at any time. If you need a monitor, simply pull one up in your field of view and adjust the size. This will reduce reliance on display devices like TVs, computers, phones, and laptops.

Conference calls: call participants see AR projections of each other as they talk, increasing engagement and attention.

Real-time background checks: upon meeting a person, pull up their online footprint. Today, that would include things like LinkedIn, their tweets, Instagram photos, significant Google search results, and more. Get instant context on a person’s background.

3D modeling and design: mock up the real world. Create and view 3D models in physical space. View data and designs in new, interactive ways, like walking through a scatter plot in three dimensions.

Browsing, note taking, writing, project management: all day-to-day productivity tools will be available in and adapted to AR formats.

Personal assistant: view your schedule in real time as you move through your day, with alerts for changes, travel time, and other relevant items.

Collaboration: point-of-view (POV) sharing is the next generation of screen sharing, letting collaborators see exactly what you see.

Measurement: measure lengths and the distances between objects using AR’s tracking capabilities.

Monitoring: customize a feed of anything you’d want updates on, like social media, your house, your kids, work dashboards, and the weather. Visualize your updates however you like, such as a data control room with lots of screens.

AR UI/UX: because it is free of physical screens, AR makes greater use of controls through voice, hands and hand tracking, and brain-computer interfaces.

Past interaction recall: using facial recognition tied to productivity tools like CRM and email, quickly recall the last times you interacted with a person, what you talked about, and any follow-ups. This is the AR equivalent of being a people person who always remembers the details about those in your life.

Research: conduct real-time searches and get immediate answers. If you’re in a meeting and someone references a name you don’t know, use AR to look it up stealthily and display the results in your field of vision.
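Once a headset is tracking positions, the Measurement item above comes down to simple geometry. Here is a minimal sketch, assuming the device already reports tracked points as (x, y, z) coordinates in meters within one shared world frame; `Point3D`, `distance`, and `path_length` are hypothetical names for illustration, not part of any AR SDK.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Point3D:
    """A tracked position in the device's world frame, in meters (assumed)."""
    x: float
    y: float
    z: float


def distance(a: Point3D, b: Point3D) -> float:
    """Straight-line (Euclidean) distance between two tracked points."""
    return math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))


def path_length(points: list[Point3D]) -> float:
    """Total length along a sequence of tapped points, e.g. around a table's edge."""
    return sum(distance(p, q) for p, q in zip(points, points[1:]))


corner_a = Point3D(0.0, 0.0, 0.0)
corner_b = Point3D(3.0, 0.0, 4.0)
print(f"{distance(corner_a, corner_b):.2f} m")  # 5.00 m
```

In practice, the hard part is the tracking accuracy behind those coordinates; the arithmetic itself stays this small.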

Psychology

Public speaking: gauge a crowd’s emotional response to tell whether they’re reacting well to a speech and which parts they like best.

Meetings: tell how the person across from you feels about you and the subject matter.

User testing: gain objective feedback from user and product testing, psychological experiments, and more, using sentiment analysis.

EQ training: use training exercises and real-time feedback to establish baselines for appropriate social interaction, such as how much eye contact to make, when to make jokes, and how close to stand to another person. Especially useful for people on the autism spectrum.

Empathy training: put people in each other’s shoes to increase empathy. A man can see himself in AR as a woman, or a person can see herself as a different race. Sighted people can perceive the world the way that someone with cataracts or macular degeneration does.

Home

Interior design: visualize changes to your living space before making them permanent, like different colored walls, art placement, or furniture layouts.

Utility monitoring and control: control IoT devices and monitor power consumption and current utility bills. Increase energy efficiency and have a better sense of what your monthly usage and costs will be.

Cleaning: visualize areas to be cleaned and get reminders and percentage completion bars. An un-vacuumed carpet displays as red but changes to blue as you pass over areas.

Baby-proofing: scan your living space to highlight hazardous objects and get recommendations on how to make them safe for young children.
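The Cleaning item above (a carpet overlay that turns from red to blue, plus a completion bar) can be modeled as a coverage grid over the floor. This is a minimal sketch, assuming the headset reports the vacuum head’s floor position in meters; the `CoverageGrid` class and its 0.25 m cell size are hypothetical choices for illustration.

```python
class CoverageGrid:
    """Floor area split into square cells; each cell is either cleaned or not."""

    def __init__(self, width: int, depth: int, cell_size: float = 0.25) -> None:
        self.width = width          # number of cells along the x axis
        self.depth = depth          # number of cells along the z axis
        self.cell_size = cell_size  # meters per cell (assumed value)
        self.cleaned = [[False] * width for _ in range(depth)]

    def mark_pass(self, x: float, z: float) -> None:
        """Mark the cell under the tracked vacuum head (position in meters) as cleaned."""
        col, row = int(x // self.cell_size), int(z // self.cell_size)
        if 0 <= row < self.depth and 0 <= col < self.width:  # ignore positions off the grid
            self.cleaned[row][col] = True

    def percent_complete(self) -> float:
        """Share of cells cleaned so far, for the completion bar."""
        done = sum(cell for row in self.cleaned for cell in row)
        return 100.0 * done / (self.width * self.depth)

    def color_at(self, row: int, col: int) -> str:
        """Overlay color for one cell: red until vacuumed, then blue."""
        return "blue" if self.cleaned[row][col] else "red"


grid = CoverageGrid(width=4, depth=2)  # a 1 m x 0.5 m patch of carpet
grid.mark_pass(0.1, 0.1)
grid.mark_pass(0.3, 0.1)
print(f"{grid.percent_complete():.0f}% vacuumed")  # 25% vacuumed
```

A finer grid gives a smoother overlay at the cost of more cells to track and render.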

Transportation

GPS directions: navigation superimposed on your field of view. Getting instructions while looking up at the road should reduce distracted driving accidents caused by looking down at a phone.

Collision avoidance: whether used by autonomous vehicles or AR-assisted drivers, computer vision detects and reacts to potential hazards more quickly than humans alone.

Hazard flagging: drivers and passengers flag dangers or slowdowns that will be visible to others using AR. Think Waze applied on top of the physical road.

In-building navigation: overlay interior maps and directions in spaces like malls, schools, convention centers, and more.

Accident recording and analysis: AR users who enable point-of-view (POV) recording will have more evidence for determining fault in accidents.

Education

Instruction and repair: visualize the steps to assemble and repair things, from cars to furniture to malfunctioning components on a space station. Replaces owner’s manuals and setup guides.

Astronomy: look up at the night sky to identify planets, constellations, meteors, and other celestial bodies.

Job training: trainees can onboard and pick up new job skills faster with AR instructions and remote supervision.

Classroom enhancements: use AR course materials to delve deeper into study topics. Teachers can quiz students live and see their answers displayed above them. Students can work remotely on group projects and homework. Teachers can detect which students are not engaged or paying attention and focus on them. Students can simulate chemical reactions by combining digital elements, without the dangers of real chemicals.

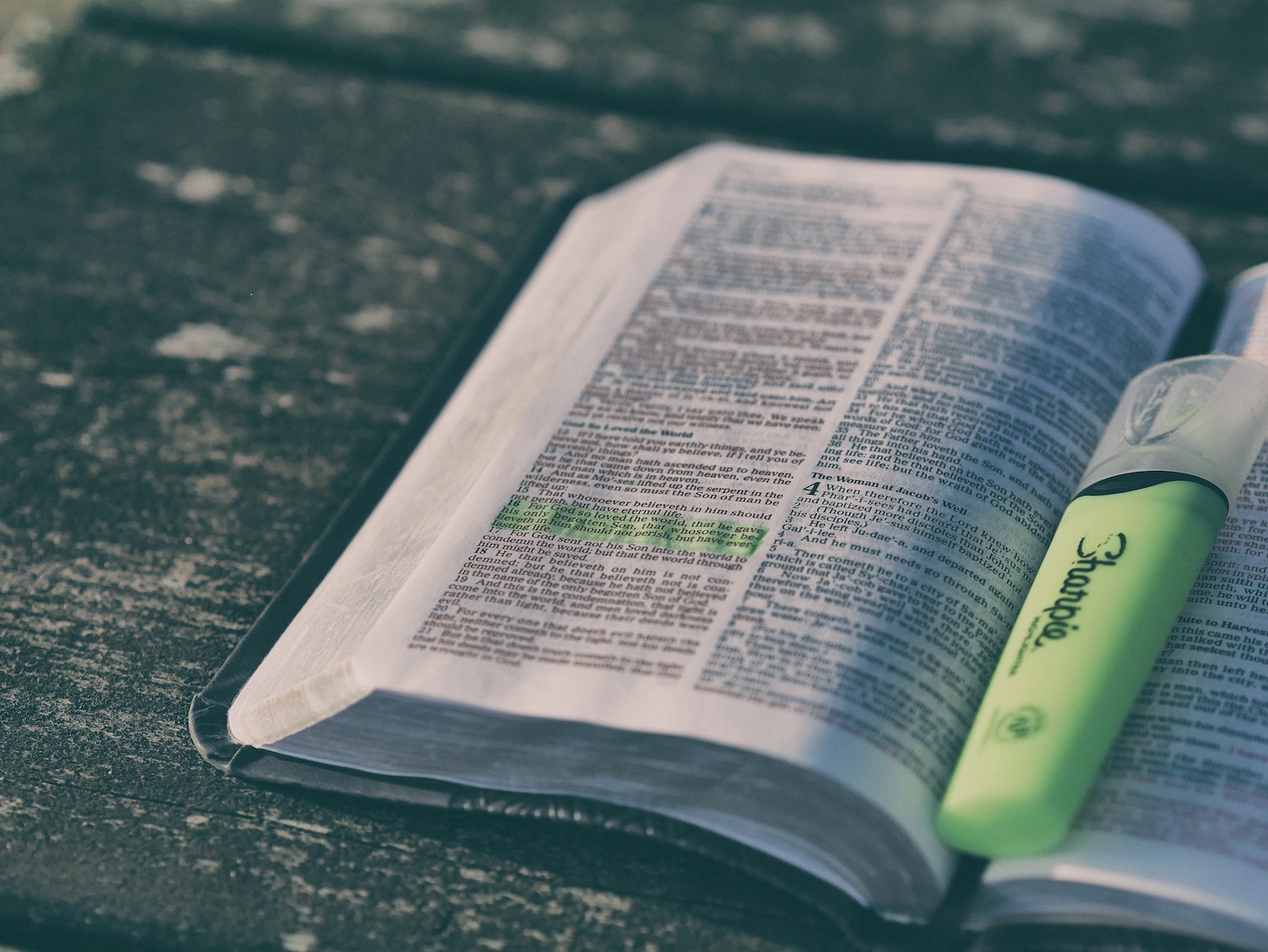

Books: read a physical book with AR properties, like pop-out figures, optional links to extra information, or word definitions. Also read fully digital AR books on virtual screens.

Law enforcement and legal

Lie detection: analyze whether a person is likely to be telling the truth from micro-expressions, speech, and body language. Has broad implications in politics, negotiations, and relationships as well.

Sobriety testing: assess sobriety from visual indicators like pupil dilation and body sway, faster and more efficiently than with traditional field sobriety tests.

Suspect searches: find and identify suspects from facial scans on the street; may combine with other identifiers like gait analysis. Especially powerful if it’s crowdsourced among civilians’ AR equipment, but poses major privacy concerns.

Weapon or contraband detection: scan for abnormal bulges under clothing and highlight them for law enforcement to inspect more closely.

Jury selection and monitoring: gain deeper insight into jurors through sentiment analysis and attention tracking, and use it to craft legal strategy.

Crime scenes: detect and highlight important areas of crime scenes for better analysis.

Depositions and testimony: record depositions easily with AR equipment. Automatically compare statements given during testimony to those from depositions. Analyze witness behavior to glean insights into truthfulness and motivation.

Contracts: receive and sign documents in AR. Add verification with biometrics like voice or retina scans for greater security.

Accessibility

Blind assistance: AR’s object recognition and voice interfaces can give blind users real-time information on what’s present and happening around them.

Deaf assistance: users can receive auditory information translated into text cues in their AR devices, like a loudspeaker announcement on a plane or the screech of brakes.

Real-time translation: AR can convert foreign text or audio and display it back in the user’s native language.

Wheelchair maps: receive AR maps that highlight wheelchair-friendly routes.

Magnification: zoom and enhance small or far-away text so it’s legible.

Medical

Surgery assistance: identify organs and tissues during surgery and get instructions, whether from doctors observing your POV or knowledge-base applications you’re running.

Medication identification: helps medical staff identify the right medications even if they aren’t trained to do so. Eases demand on pharmacists and doctors and reduces mistakes in administering medications and filling prescriptions.

IV insertion: lets medical staff find veins for IV insertion even if they aren’t easily visible to the naked eye, making success on the first attempt more likely.

More access to doctors: AR gives less experienced medical staff boosted abilities if a remote doctor views their POV stream and assists. Gives doctors more flexibility to work from varied locations and scales their expertise.

Symptom checking: lets patients check visual symptoms against medical databases or share them in real time with doctors.

Second opinions: make collaboration with other doctors faster and easier, like looking at an X-ray, sharing your POV with another doctor, and talking through the results.

Politics

Real-time fact-checking: lets audiences and politicians validate statements’ truthfulness.

AR teleprompters: politicians can read prepared speeches off AR displays without looking forced or unnatural. They can also pull up and read statements on issues during debates without necessarily understanding those issues.

Crowd polling: gauge how a crowd is reacting to a politician via sentiment analysis, including their likelihood of supporting that candidate and shifts in their support.

Donor recognition: lets politicians better support and engage with their donors by pointing them out in groups and providing background information like name and affiliation.

Military

Dead drops: agents can leave objects for each other and flag them with AR signals visible only to a qualified recipient.

Friendly detection: exaggerated AR overlays for friendlies and civilians to minimize casualties. Friendlies and civilians could have bright blue auras surrounding them, while enemy combatants and vehicles have bright red auras.

Enhanced pilot vision: identify targets, avoid obstacles, navigate, and aim with greater precision.

Roadside bomb detection: bomb detection scan data is fed back via AR, and locations are highlighted to make avoidance easier.

Weapon operations: real-time instructions on how to use weapons, vehicles, UAVs, and other resources.

Status heads-up display: display remaining ammo, food and water, personal and squad health, mission details, and other key information.

Art and culture

Museum and city tours: learn about a museum’s exhibits and a city’s landmarks at your own pace as you walk through them.

Art analysis: see previous iterations of paintings superimposed on the final product and learn about the techniques the artist used.

Art instruction: follow step-by-step AR cues to learn to paint, draw, sculpt, etc.

Historical recreations: see historical sites as they were before aging or destruction.

Books: anchor a book in your field of vision so you can read while you’re walking or running. Read together with someone remote, like your child or a book club.

Nature trips: identify species of plants and animals and learn about them. See where you’re most likely to spot different animals based on past recorded sightings.

Song and artist recognition: display names of artists and song titles for music you hear.

Shopping

Clothes shopping: try on and buy clothes without leaving your house. Superimpose clothing on yourself and try different sizes and colors.

Furniture shopping: put AR models of furniture in your living space to see how they fit and which colors, finishes, and fabrics look best.

Smart grocery shopping: set shopping goals for things like nutrition, couponing, and recipes, and your AR system highlights what to buy as you move through the aisles and gives you a path to follow.

Personal styling: try out digital hair and makeup options.

Body modifications: see how tattoos, piercings, and plastic surgeries would look. Visualize yourself gaining or losing weight or muscle, or going up or down in age.

Street buys: if you see something you like in the real world, get instant information about it and the option to buy. If someone walks by wearing sneakers you like, you can learn the brand, price, size and color options, and order instantly.

Showrooming: easier, faster price comparisons across retailers for products you’re looking at in brick-and-mortar stores.

Social

Private clubs: mark otherwise nondescript entrances to physical spaces where private groups meet up. Someone who’s not a member of the group sees nothing; members see a sign or illuminated door, like an AR speakeasy.

Date evaluation: analyze a date as it’s happening, including how attracted your date is to you. Pull up background information on him or her to get to know interests and likes.

Self-branding: choose short bits of biographic information to display about yourself to other AR users, like a virtual profile as you move through the world. Switch it to more personal and social on a weekend at a bar, or more career-oriented at a conference or meeting.

POV vlogging: AR will make life-logging much more seamless. Broadcast what you’re seeing as you’re seeing it.

Status posts: users can choose to set statuses around themselves that are visible to other people. Set “do not disturb” if you don’t want to be bothered, or “available” if you’re open to conversations. Display the song you’re listening to or a status update.

Putting names to faces: scan faces to retrieve names. AR will be extremely useful to anyone who’s bad with names.

AR hangouts: project a friend into a hangout space using AR. If you’re both sitting on your couches in different cities, she’d see you on hers in Boston and you’d see her on yours in NYC. Friends can come together from different physical locations to watch movies, play games, and just hang out.
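The Private clubs item above (a door sign that only members see) is at heart a visibility filter over location-pinned content. Here is a minimal sketch, assuming each virtual sign stores a position in meters within a local map frame plus an optional set of groups allowed to see it; `ARAnchor`, `visible_anchors`, and the 50 m default radius are hypothetical, and real anchor persistence and sharing are out of scope.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ARAnchor:
    """A virtual sign pinned to a spot in a local map frame (hypothetical data model)."""
    label: str
    x: float  # meters east of a local origin (assumed coordinate frame)
    y: float  # meters north of the same origin
    allowed_groups: frozenset = frozenset()  # empty means visible to everyone


def visible_anchors(anchors, user_groups, user_x, user_y, radius_m=50.0):
    """Return labels of nearby anchors this user is permitted to see."""
    labels = []
    for anchor in anchors:
        in_range = math.hypot(anchor.x - user_x, anchor.y - user_y) <= radius_m
        permitted = not anchor.allowed_groups or bool(anchor.allowed_groups & user_groups)
        if in_range and permitted:
            labels.append(anchor.label)
    return labels


anchors = [
    ARAnchor("Cafe specials", 10.0, 0.0),
    ARAnchor("Members entrance", 20.0, 5.0, frozenset({"speakeasy"})),
    ARAnchor("Distant billboard", 500.0, 0.0),
]
print(visible_anchors(anchors, {"speakeasy"}, 0.0, 0.0))  # ['Cafe specials', 'Members entrance']
print(visible_anchors(anchors, set(), 0.0, 0.0))          # ['Cafe specials']
```

The same filter fits the military Dead drops item: restrict a marker’s `allowed_groups` to the intended recipient.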

Food and restaurants

Restaurant reviews: see a restaurant’s rating and customer reviews as you pass by. Restaurants can also broadcast specials or events.

Restaurant reservations and availability: learn by glancing if a restaurant has open tables or how long the wait is.

Allergy flags: choose allergies to display in food-related contexts. This way, restaurant staff can adhere to allergy restrictions without having to ask each guest.

Food calorie scanning: get fat, protein, carbohydrate, calorie, and nutritional information by looking at and scanning food.

Diet auto-tracking: passively record everything you eat and drink to keep a running calorie and macro count for the day. Get notified when you’re over your caloric limit, and get recommendations on healthier options to swap in for what you’re about to eat.

Cooking instructions: how-to’s on making recipes you’ve chosen, including visual or other cues to help with measuring ingredients. Enables amateur cooks to step in for more experienced ones and scale production.

Recipes based on inventory: scan a fridge and kitchen and learn what you can make with the materials and equipment you have.
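The bookkeeping behind the Diet auto-tracking item above is a running total with a threshold check. The sketch below assumes food recognition has already produced per-item nutrition estimates; `FoodEntry` and `DietTracker` are hypothetical names. It covers only the running totals and the over-limit notification, not the healthier-swap recommendations.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FoodEntry:
    """One recognized food item with its estimated nutrition (assumed to come from a scan)."""
    name: str
    calories: float
    protein_g: float
    carbs_g: float
    fat_g: float


class DietTracker:
    """Keeps a running daily total and flags when the calorie limit is exceeded."""

    def __init__(self, calorie_limit: float) -> None:
        self.calorie_limit = calorie_limit
        self.entries: list[FoodEntry] = []

    def total(self, nutrient: str) -> float:
        """Sum one field (e.g. "calories" or "protein_g") across today's entries."""
        return sum(getattr(entry, nutrient) for entry in self.entries)

    def log(self, entry: FoodEntry) -> Optional[str]:
        """Record an item; return a warning if the day's calories now exceed the limit."""
        self.entries.append(entry)
        over = self.total("calories") - self.calorie_limit
        if over > 0:
            return f"Over your daily limit by {over:.0f} kcal"
        return None


tracker = DietTracker(calorie_limit=2000)
print(tracker.log(FoodEntry("oatmeal", 350, 12, 60, 6)))    # None
print(tracker.log(FoodEntry("burger", 1800, 40, 120, 90)))  # Over your daily limit by 150 kcal
print(tracker.total("protein_g"))                           # 52
```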

Fitness

Holographic personal trainers: instead of watching workout videos or following instructions on an app, project a virtual personal trainer in your workout space. Walk around this trainer to see how good exercise form looks from all angles. Work out alongside the trainer to stay on pace.

Personalized workouts: view content through the AR device and get real-time workout adjustments based on heart rate, fatigue, user feedback, goals, and more.

Gamification of workouts: turn runs, walks, or bike rides into quests where you’re getting XP or unlocking features by exercising. Do jump squats to hit an AR goalpost or wall sits against a backdrop of a digital ski slope. Visualize a path up a climbing wall as a colorful line and get points for making it up quickly. See leaderboards of your friends and compete against them.

Fitness heads-up display: bring up personal records and historical workout information when relevant. When you’re about to bench press, see your max and scan through past sets. Record current sets, weight, exertion, and more.

Construction and architecture

Project management: scan projects to see if they’re following the model’s specifications, and if not, where the aberrations are.

Blueprints and models: much easier visualization and sharing with 3D AR projections than with paper or CAD models.

Project visualization: walk through a project site and see what the finished building will look like.

Project instruction: follow AR instructions to increase skills, like a carpenter seeing where to insert a nail or how to attach a roof shingle.

Gaming

Location-based games: overlay a game layer on top of real-world maps and places. Get players experiencing the real world in new ways and meeting each other.

Gamification of real life: apply game dynamics to real-life activities and make anything a competition.

Tabletop gaming: animate traditional board games and play fully digital ones.

Dynamic avatars: animate an avatar to reflect a user’s real emotions or characteristics as they occur.

AR pets: have a digital pet that follows you and see and interact with other people’s.

Enhanced PC and console games: while TVs and monitors are still heavily used, add an AR layer to display additional information. Once AR reaches a level where users can create screens anywhere, console and PC gaming becomes portable.

Esports data: overlay game and player information on top of broadcasts.

Sports

Player stat overlay: see player stats and info as a data overlay while watching a game. This has special utility for fantasy sports.

Enhanced analytics: get extra info on any data captured about that sport as you watch, like the speed of a fastball, the force of a tackle, or the angle of a golf ball.

Player enhancements: improve athletes’ detection and reaction abilities by expanding their field of vision, like alerting them that a tennis ball’s trajectory is headed to a specific spot on the court.

Play visualization: take the coach’s plays and map them to players’ AR vision for easier execution.

Film and TV

Actor info: overlay an IMDb-like layer to get actors’ names, biographical info, and past roles.

Simultaneous viewing: watch something remotely with another person or group at the same time.

Subtitles: add optional subtitles to anything you’re watching.

Mobile watching: watch while you’re running, walking, or commuting.

Advertising

Personalization and targeting: everyone sees unique AR ads directed to them, using their name, interests, emotional state, gaze, location, and more. One AR ad space can serve different ads to every person walking by.

New ad creatives: advertisers will experiment with more aggressive AR ad tactics to capture attention. AR ads can pop out in front of people. Objects can roll out of videos and onto a viewer’s floor. Ads can follow viewers’ eyes or location and make avoidance difficult.

AR ad space competing with physical: AR ads appearing on top of physical ones will create friction between new and old technologies and compete for attention.

Interactive product placements: viewers can select products in ads or content to research or buy them, like a more effective QR code.

Eye tracking: ads can detect if you’re looking at them and move to your center of vision. If you’re required to watch an ad before accessing content, ads will detect if you’re looking or if your eyes are closed. Let’s hope this doesn’t happen.

What did I miss? Comment below, email me at sarah(at)accomplice(dot)co, or tweet me @SarahADowney. Sarah Downey is a principal at Accomplice, which is a sponsor of Upload.