Serious tech observers generally agree that our mixed-reality future will be amazing, but we’re not there yet. Beyond technical hurdles like frame rate and positional tracking, mixed reality (MR) presents unique challenges for the designers, artists, and storytellers who craft the narratives that give meaning to digital experiences. Today’s developers must recognize that the paradigm for personal technology is shifting. From Rift to Apple Watch to Snap Spectacles, the trend is toward wearable computing. But this poses a tricky problem for MR headsets: how should users interact with a machine they’re wearing on their faces?

An illuminating parallel can be found in the evolution of the PC industry. Electronic computers have existed since the 1940s, but it took key insights regarding the graphical user interface (GUI) to make computing personal, practical, and intuitive. The point-and-click GUI proved so effective that it remains the dominant computing interface more than thirty years after the 1984 Macintosh. Today’s MR hardware seems a bit like a 1970s-era IBM: corporations see the potential, and hardcore hobbyists are feverishly experimenting, but the core technology remains inaccessible to the everyday user. Mixed reality needs a GUI-like revolution in user interaction.

One thought leader on this subject is neuroscientist and entrepreneur Meron Gribetz, CEO of Meta. Meta is developing an AR headset, which puts it in the same league as Microsoft and Magic Leap. Gribetz often speaks of a “zero-learning-curve” computer: a machine so intuitive that it feels as though you’ve always known how to use it. Implicit in this idea is that such a machine must function as an extension of your brain. And therein lies the opportunity for brain-computer interfaces to redefine how humans interact with the world around us.

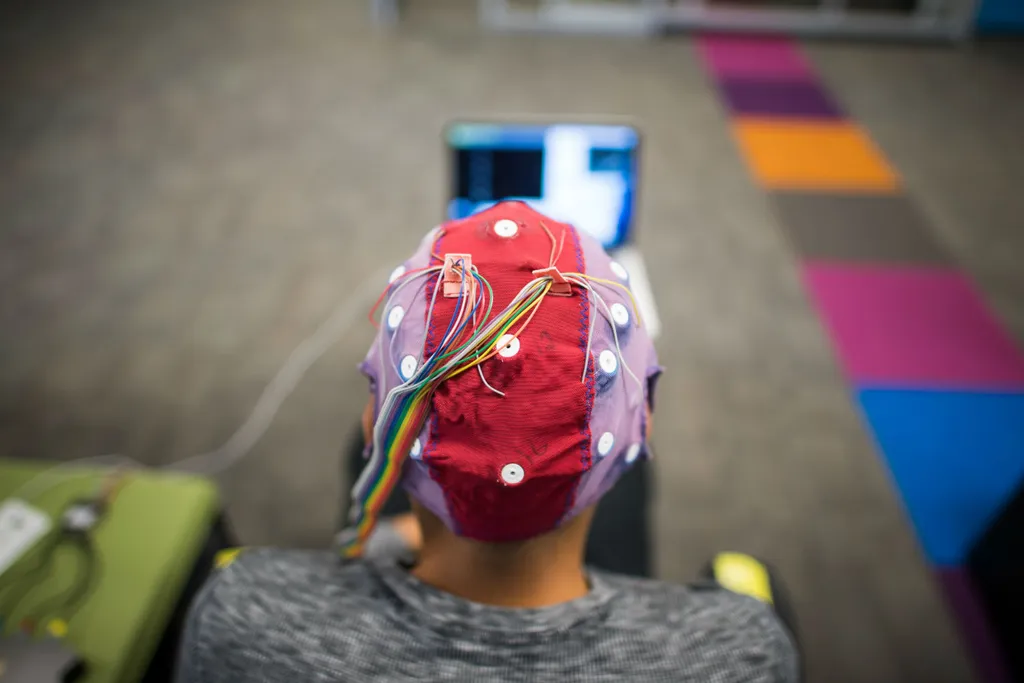

Researchers have been working on this subject for a long time. Brainwaves were first recorded in humans in 1924. In the 1970s, scientists at the University of California popularized the term “brain-computer interface” (BCI), referring to a hardware and software system that uses brainwaves to control devices. BCIs are already used in medical devices, but only now, with the advent of wearable computing, is their full potential within reach.

Current Limits

Consider the use cases most commonly listed for augmented reality: work and productivity, construction, industrial assembly, transportation, sports, the military, and law enforcement. All of these involve dynamic environments that place heavy demands on user attention. Do current AR devices simplify these environments or complicate them? For example, how will a construction worker operate a “smart helmet” while simultaneously using tools or operating heavy machinery? How will a soldier quickly navigate an AR display while carrying equipment and speaking on a radio?

These scenarios reveal the limitations of existing interaction methods. Gesture controls preclude hands-free operation. Voice commands are awkward in public and fail in noisy environments. While each method has its advantages, their cumulative effect is to create a new language of inputs for the user to master. We should expect this barrier to slow AR adoption dramatically. The best solution is a brain-computer interface that allows users to scroll menus, select icons, launch applications, and even input text using only their brain activity. Imagine the productivity revolution that a high-performance, non-invasive, intuitive BCI would unleash in AR.

Cognitive Overload

Beyond simple command inputs, BCIs will solve another problem looming on MR’s horizon: the inevitability of cognitive overload. MR is potentially an incredibly feature-rich environment; imagine a 3D internet with no ad blockers.

Users could easily be overwhelmed by too much information. BCIs can reduce cognitive overload by enabling co-adaptive interfaces: software that responds to user biometrics by displaying only the most relevant information. Researchers in Finland are experimenting with brainwave analysis as a method for content curation.
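The co-adaptive idea can be illustrated in a few lines. This is a minimal sketch under stated assumptions, not the Finnish researchers’ method or any product’s design: it assumes a hypothetical `load` score between 0 and 1 standing in for a cognitive-load estimate that a real system would derive from EEG or other biometrics, plus a hypothetical per-item `relevance` score supplied by the application. The helper `items_to_show` and the `Item` type are likewise invented for illustration. As estimated load rises, the interface shows fewer, higher-priority items.

```python
from dataclasses import dataclass


@dataclass
class Item:
    label: str
    relevance: float  # hypothetical app-assigned priority in [0, 1]


def items_to_show(items, load, max_items=8):
    """Return the most relevant items, showing fewer as estimated load rises.

    `load` is a hypothetical cognitive-load estimate in [0, 1]; deriving it
    from brain activity is out of scope for this sketch.
    """
    load = min(max(load, 0.0), 1.0)  # clamp out-of-range estimates
    # Linear display budget: up to max_items at zero load, one item at maximum load.
    budget = max(1, round(max_items * (1.0 - load)))
    ranked = sorted(items, key=lambda item: item.relevance, reverse=True)
    return ranked[:budget]


# Usage: a hypothetical construction-site display under high estimated load.
hud = [
    Item("hazard alert", 0.95),
    Item("task checklist", 0.70),
    Item("email", 0.20),
    Item("weather", 0.10),
]
print([item.label for item in items_to_show(hud, load=0.75)])
# prints ['hazard alert', 'task checklist']
```

The linear budget is an arbitrary choice made for readability; a real co-adaptive interface would need a validated load estimate and a carefully tuned policy for what to hide.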

VR also stands to benefit from BCIs, which could dramatically lower the barrier to true “presence.” Consider two gamers: one who creates magic and casts spells using her mind, and another who performs the same actions using analog buttons on a handheld controller. The VR environment does not feel equally authentic to both. We all want to live our Jedi dreams, but the Oculus Touch controllers are not quite “the Force.” BCIs are necessary to deliver the transformative experiences that VR promised us.

Despite growing interest from big tech companies, commercial applications of BCIs have been hamstrung by prohibitively high costs and impractical hardware. For example, one popular class of BCI is based on electroencephalography (EEG), which records the brain’s electrical activity through electrodes on the scalp for clues about user intention. Research-grade EEG systems can cost tens of thousands of dollars and often require conductive gel applied through the hair to improve signal quality. You won’t see this tech on the shelf at Best Buy anytime soon.

Next Steps

However, pioneering companies such as Emotiv and Interaxon have brought low-cost headsets to market that offer “mindfulness” training to improve your meditation skills or enhance concentration at work. Other startups, such as Halo Neuroscience, are targeting professional sports teams with neurostimulation headsets intended to enhance athletic training. While these applications are somewhat narrow in scope, they reveal that consumer-facing neurotechnology is becoming more practical and affordable. BCIs for the masses may not be as far off as we think.

It’s not difficult to see the evolutionary trajectory for the many devices that clutter our homes and offices: extinction. Eventually our smartphones, tablets, laptops, TVs, and so on will likely be replaced by a single wearable device. Form factors will shrink steadily, progressing from the current generation of cumbersome head-mounted displays to lightweight glasses, and perhaps eventually to contact lenses that project light directly onto your retina. But as these devices become smaller and more mobile, interaction becomes increasingly difficult, and brain-computer interfaces emerge as the best solution.

Where AR and VR headsets have broken new ground, BCIs constitute the next evolutionary milestone in transformative technology. Their arrival will radically reshape our relationship with personal technology. While 2016 witnessed great strides in visual immersion, 2017 needs a revolution in user interaction; otherwise, mixed reality will remain on society’s fringes for some time.