Facebook has published a research paper on its machine learning-based reconstruction technique for foveated rendering, which the company first teased at Oculus Connect 5 in late 2018.

The paper is titled DeepFovea: Neural Reconstruction for Foveated Rendering and Video Compression using Learned Statistics of Natural Videos.

Foveated Rendering: The Key To Next Generation VR

The human eye only sees in high resolution at the very center of its field of view. Notice as you look around your room that only what you’re directly looking at is in high detail. You aren’t able to read text that you aren’t pointing your eyes at directly. In fact, that “foveal area” is just 3 degrees wide.

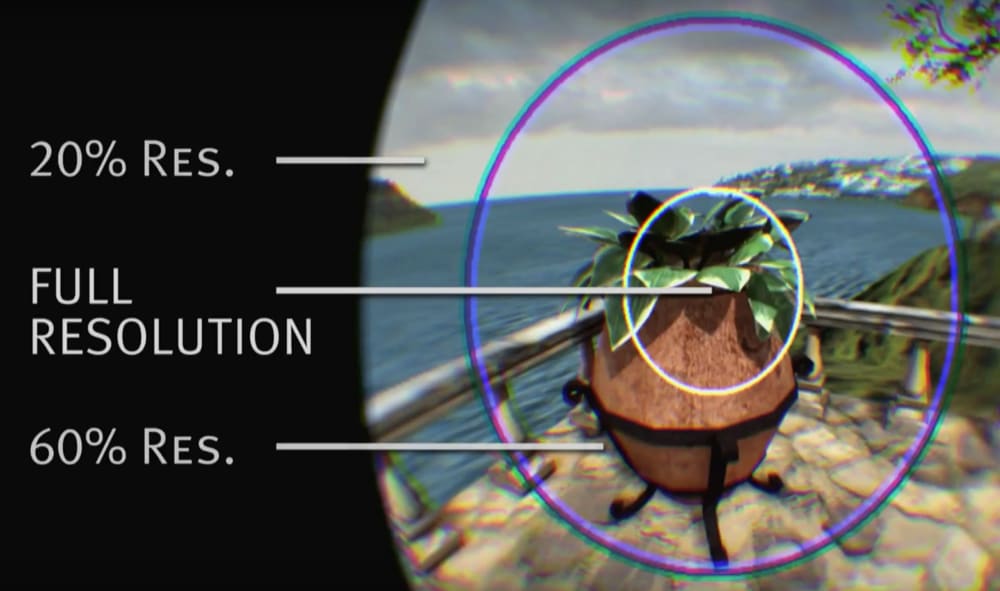

Future VR headsets can take advantage of this by rendering only the area you’re directly looking at (the foveal area) in high resolution. Everything else (the peripheral area) can be rendered at a much lower resolution. This is called foveated rendering, and it is what will make much higher resolution displays practical to drive. Those displays, in turn, may enable a significantly wider field of view.
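To make the potential savings concrete, here is a rough back-of-the-envelope sketch in Python. It assumes a simplified two-zone model: a square field of view, a square full-resolution region around the gaze point, and a uniform number of pixels per degree. The function name and the example numbers (a 100 degree field of view, a 20 degree full-resolution region, a periphery rendered at a quarter of the resolution on each axis) are illustrative assumptions, not figures from Facebook's paper or from any shipping headset.

```python
def foveated_pixel_fraction(fov_deg, foveal_deg, periphery_scale):
    """Fraction of the full pixel count shaded by a two-zone foveated renderer.

    Simplifying assumptions (hypothetical model, for illustration only):
      - the field of view and the full-resolution region are both squares
      - pixel density per degree is uniform across the display
      - periphery_scale is the per-axis resolution scale of the periphery,
        so the peripheral pixel count scales with periphery_scale squared
    """
    if not 0 < foveal_deg <= fov_deg:
        raise ValueError("foveal_deg must be in (0, fov_deg]")
    if not 0 < periphery_scale <= 1:
        raise ValueError("periphery_scale must be in (0, 1]")

    # Share of the image covered by the full-resolution region.
    foveal_area = (foveal_deg / fov_deg) ** 2
    # Everything else is rendered at reduced resolution.
    peripheral_area = 1.0 - foveal_area
    return foveal_area + peripheral_area * periphery_scale ** 2


# Illustrative numbers only: 100 degree FOV, 20 degree full-resolution
# region, periphery at 1/4 resolution per axis.
fraction = foveated_pixel_fraction(fov_deg=100, foveal_deg=20, periphery_scale=0.25)
print(f"{fraction:.1%} of the full pixel count, roughly {1 / fraction:.0f}x fewer pixels")
```

Under these assumptions most of the saving comes from the periphery: shrinking the full-resolution region helps only if the peripheral resolution can also drop without the viewer noticing.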

It’s Not That Simple

Foveated rendering already exists in the Vive Pro Eye, a refresh of the Vive Pro with eye tracking. However, its foveal area is still relatively large and its peripheral area is still rendered at a relatively high resolution. The display itself is the same as on the regular Vive Pro (and in the Oculus Quest). On the Vive Pro Eye, foveated rendering is used to allow for supersampling with no performance loss, rather than to significantly lower the rendering cost of ultra-high-resolution displays.

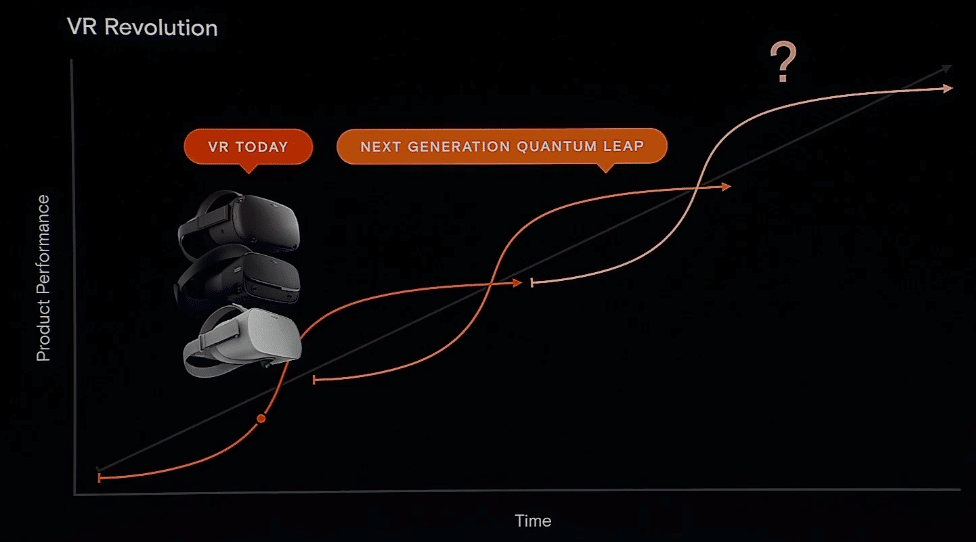

Facebook appears to be looking for a way to reduce the number of pixels that need to be rendered by an order of magnitude or more. This could allow even a future mobile-powered Oculus Quest headset to make a significant jump in resolution or graphical detail.

At Oculus Connect 6 back in September, John Carmack briefly revealed that these efforts were not going as well as expected, because the lower resolution periphery is noticeable:

And it’s also kind of the issue that the foveated rendering that we’ve got… when it falls down the sparkling and shimmering going on in the rest of the periphery is more objectionable than we might have hoped it would be. So it becomes a trade off then.

DeepFovea: A Generative Adversarial Network

DeepFovea is a machine learning algorithm, a deep neural network, which “hallucinates” the missing peripheral detail in each frame so that the viewer should not perceive the periphery as being lower resolution.

Specifically, DeepFovea is a Generative Adversarial Network (GAN). GANs were invented in 2014 by a group of researchers led by Ian Goodfellow.
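In a GAN, two networks are trained against each other: a generator produces images, and a discriminator tries to tell generated images from real ones. The snippet below is a minimal Python sketch of the two loss functions from the original 2014 formulation, evaluated for a single sample. It is not DeepFovea's training objective, which this article does not detail, and the function names are hypothetical.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Loss the discriminator minimizes for one real and one generated sample.

    d_real: discriminator's estimated probability that a real image is real
    d_fake: discriminator's estimated probability that a generated image is real
    Both must lie strictly between 0 and 1.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator is fooled."""
    return -math.log(d_fake)


# A discriminator that cannot tell real from generated outputs 0.5 for both.
print(discriminator_loss(d_real=0.5, d_fake=0.5))
# The generator is rewarded as the discriminator's belief in its output rises.
print(generator_loss(d_fake=0.1), generator_loss(d_fake=0.9))
```

Training alternates between lowering the first loss by updating the discriminator and lowering the second by updating the generator, which is what pushes the generated detail toward looking plausible.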

GANs are one of the most significant inventions of the 21st century so far, enabling some astonishing algorithms that almost defy belief. GANs power “AI upscaling”, DeepFakes, FaceApp, NVIDIA’s AI-generated realtime city, and Facebook’s own room reconstruction and VR codec avatars. In 2016, Facebook’s Chief AI Scientist, a machine learning veteran himself, described GANs as “the coolest idea in machine learning in the last twenty years”.

DeepFovea is designed and trained to essentially trick the human visual system. Facebook claims that DeepFovea can reduce the pixel count by as much as 14x while still keeping the reduction in peripheral rendering “imperceptible to the human eye”.

Too Computationally Expensive

Don’t expect this to arrive in a virtual reality headset any time soon. The paper mentions that DeepFovea itself currently requires four NVIDIA Tesla V100 GPUs to generate this detail.

As recently as September, Facebook’s top VR researcher specifically warned that next-generation VR will not arrive “any time soon”.

For this to ship in a product, Facebook would have to find a way to significantly reduce the computational cost, which isn’t unheard of in the machine learning world. The computational requirement of the algorithm behind Google Assistant’s current voice was reduced by a factor of 1,000 before shipping.

Alternatively, Facebook could use, or develop, a neural network accelerator chip optimized for this kind of task. A report last month indicated that Facebook was developing a custom chip for AR glasses tracking. Perhaps the same could be done to allow foveated rendering in a next-generation Oculus Quest.