David Pio, VR Software Engineer at Facebook, presented methods for compressing, warping, and viewing VR-ready footage during Oculus Connect 2 this year. The talk provided a glimpse into the technical research and development that the social networking giant is working on.

The discussion was divided into three areas of interest, starting with compression. Pio began by describing several ways to condense video information for wireless streaming. The problem to overcome is that VR video needs more bandwidth than the average consumer connection provides, which hovers around 5 megabytes per second in the U.S. Realistically, 360 video without compression needs approximately 30 megabytes per second to play back at an acceptable quality, according to Pio.
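To make the size of that gap concrete, here is a minimal sketch of the arithmetic using only the two figures quoted above. The function name `required_compression_ratio` is made up for illustration, and both rates are assumed to be in the same unit.

```python
def required_compression_ratio(source_rate: float, link_rate: float) -> float:
    """Return how many times smaller the stream must become to fit the link."""
    if link_rate <= 0:
        raise ValueError("link_rate must be positive")
    return source_rate / link_rate


# Figures quoted in the article, both expressed in the same unit.
uncompressed_rate = 30.0  # approximate rate for acceptable 360 playback
consumer_rate = 5.0       # approximate average U.S. consumer speed

ratio = required_compression_ratio(uncompressed_rate, consumer_rate)
reduction = 1.0 - 1.0 / ratio  # share of the original data that must go

print(f"stream must shrink {ratio:.1f}x ({reduction:.1%} reduction)")
```

Seen this way, every technique in the rest of the talk is an attempt to claim part of that overall reduction.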

One way to narrow the bandwidth gap is to move the color depth from 8-bit to 10-bit. This removes color banding and lets the data compress better: moving to 10-bit reduces the bit rate by 5-6%, according to Pio. That helps, but issues remain, as there are not many readily available software solutions that decode 10-bit color in today’s market.

Another method of reduction relies on emerging compression algorithms rather than standard ones like H.264. These newer tools are very slow, though, so they aren’t feasible without substantial computing power. Multi-view coding can improve compression efficiency, however, by splitting the left- and right-eye images into two separate streams that reference each other. Independent streams fit better into hardware decoders, making them easier to manage. In contrast, packed layouts like side-by-side (‘SBS’) or ‘Over Under’ produce very wide or very tall frames, putting a lot of strain on hardware decoders.

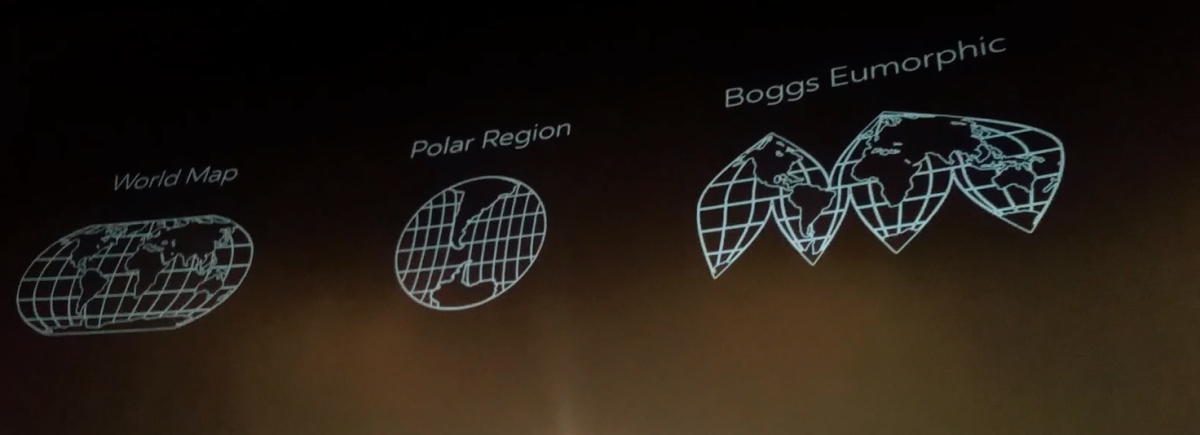
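The frame-size argument can be sketched with a few lines of arithmetic. The per-eye resolution and the decoder limit below are assumptions chosen for illustration, not numbers from the talk, and the check only looks at the dimensions of each individual stream, not at total decoding throughput.

```python
from typing import NamedTuple


class Frame(NamedTuple):
    width: int
    height: int


def packed_frame(eye: Frame, layout: str) -> Frame:
    """Return the frame size a decoder sees for a given stereo layout."""
    if layout == "sbs":          # both eyes next to each other
        return Frame(eye.width * 2, eye.height)
    if layout == "over_under":   # both eyes stacked vertically
        return Frame(eye.width, eye.height * 2)
    if layout == "multiview":    # one stream per eye, each at eye size
        return eye
    raise ValueError(f"unknown layout: {layout}")


def fits(frame: Frame, limit: Frame) -> bool:
    """True if the frame is within the decoder's maximum dimensions."""
    return frame.width <= limit.width and frame.height <= limit.height


EYE = Frame(3840, 1920)            # hypothetical per-eye resolution
DECODER_LIMIT = Frame(4096, 2304)  # hypothetical hardware decoder limit

for layout in ("sbs", "over_under", "multiview"):
    frame = packed_frame(EYE, layout)
    print(layout, frame, "fits" if fits(frame, DECODER_LIMIT) else "too large")
```

With these assumed numbers, only the per-eye streams stay inside the decoder's limits, which is the practical appeal of keeping the two views separate.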

From there, the second topic Pio discussed was warping and alternatives to equirectangular projection. In a regular equirectangular projection, the areas near the poles of the video sphere are heavily stretched and distorted. Other projection methods, like the Boggs eumorphic projection, attempt to solve this, but they leave gaps and wasted space.

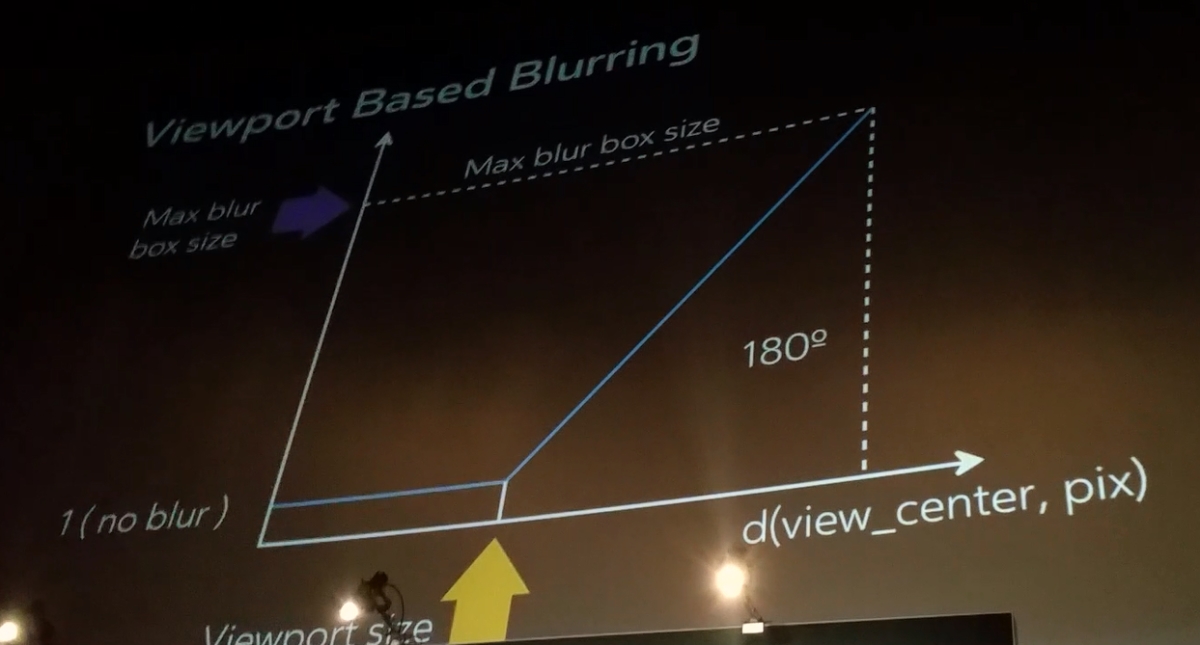
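The stretching near the poles follows from the geometry: an equirectangular frame gives every row the same number of pixels, even though circles of latitude shrink with the cosine of the latitude. The sketch below illustrates that relationship; the function names are made up for this example and nothing here comes from Facebook's code.

```python
import math


def horizontal_stretch(latitude_deg: float) -> float:
    """How many times wider than at the equator content is drawn.

    Valid for latitudes strictly between the poles (-90 < lat < 90).
    """
    return 1.0 / math.cos(math.radians(latitude_deg))


def useful_pixel_fraction(rows: int) -> float:
    """Share of pixels needed if every row used equator sampling density."""
    total = 0.0
    for row in range(rows):
        # Latitude at the centre of this row, from -pi/2 to +pi/2.
        latitude = math.pi * ((row + 0.5) / rows - 0.5)
        total += math.cos(latitude)
    return total / rows


for lat in (0, 30, 60, 80):
    print(f"latitude {lat:2d} deg: stretched {horizontal_stretch(lat):.2f}x")
print(f"useful pixel fraction: {useful_pixel_fraction(1024):.3f}")
```

The fraction converges to 2/π, the ratio of the sphere's surface area to the area of the rectangle it is unrolled into, so a little over a third of an equirectangular frame is redundant sampling concentrated near the poles.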

Another interesting technique Pio described involves modifying parts of the footage depending on where the user is looking. Since the field of view covers only a small portion of the video, the remaining areas that the user is not seeing can be blurred, resulting in better compression. This is where real-time transcoding comes into play. As a server transmits video data to a head-mounted display, the viewer’s computer simultaneously streams the user’s head position back to the server. The server then re-transcodes the video for that view and sends it back, minimizing wasted bandwidth. This requires a lot of computing power, though: every person streaming from the server sends a different head position at the same time, so the platform must re-transcode on the fly for each viewer.

To reduce the processing cost, another method encodes a predetermined number of viewports offline, allowing users to switch between them as they move their heads. The portion of the frame where the user is looking is in high resolution, while the other areas are reduced in quality, which reduces network latency. Combining the two techniques produces equirectangular projections with portions of the frame blurred out.
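Switching between pre-encoded viewports amounts to a nearest-neighbor lookup on the head direction. The sketch below assumes a hypothetical set of six viewports centered on the faces of a cube. The talk summary does not say how many viewports are used or how the player chooses among them, so treat the names and the selection rule as illustrative only.

```python
import math


def direction(yaw_deg: float, pitch_deg: float) -> tuple:
    """Convert yaw and pitch angles into a unit vector."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (
        math.cos(pitch) * math.cos(yaw),
        math.cos(pitch) * math.sin(yaw),
        math.sin(pitch),
    )


# Hypothetical pre-encoded viewports: name -> (yaw, pitch) of the center.
VIEWPORTS = {
    "front": (0, 0),
    "right": (90, 0),
    "back": (180, 0),
    "left": (270, 0),
    "up": (0, 90),
    "down": (0, -90),
}


def pick_viewport(yaw_deg: float, pitch_deg: float) -> str:
    """Return the viewport whose center is closest to the head direction."""
    head = direction(yaw_deg, pitch_deg)

    def alignment(name: str) -> float:
        center = direction(*VIEWPORTS[name])
        # Dot product of unit vectors: larger means a smaller angle apart.
        return sum(h * c for h, c in zip(head, center))

    return max(VIEWPORTS, key=alignment)


print(pick_viewport(10, 5))
print(pick_viewport(100, -10))
print(pick_viewport(0, 80))
```

Because the viewports are encoded ahead of time, the per-viewer work on the server is reduced to choosing which existing stream to send rather than re-transcoding.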

Facebook engineers spent a lot of time experimenting with various blur strengths to find an optimal level. Interestingly, increasing the blur strength doesn’t reduce the bit rate that much; decreasing the viewport size makes a bigger impact. At the same time, lowering the resolution of the areas outside the view yields about the same bit rate as blurring (around a 30% reduction), but the visual results are far better.

Furthermore, changing the projection geometry cuts the bit rate down as well. Rather than using a cube, a pyramid shape naturally reduces resolution along the sides as it wraps around to a single point behind the user. The triangular sides are then resampled and repacked so that there’s no wasted space in the frame. The areas directly in the field of view are high resolution, while the other sections are not, keeping the size of the data to a minimum.

Applying the techniques described by Pio produces video files (including spatially downgraded pyramid 4K and 8K videos) that can be streamed efficiently over standard WiFi connections today. As more techniques emerge, the streaming capabilities of 360 video will get even better, finally allowing large numbers of people to watch VR content without having to download entire files.

Featured image pulled from Norebbo.com