Researchers at Facebook have figured out how to add natural-looking eyes to their photorealistic avatar research.

Facebook, which owns the Oculus brand of VR products, first showed off this ‘Codec Avatars’ project back in March 2019. The avatars are generated using a specialized capture rig with 132 cameras. Once generated, they can be driven by a prototype VR headset with three cameras, facing the left eye, the right eye, and the mouth. All of this is powered by machine learning.

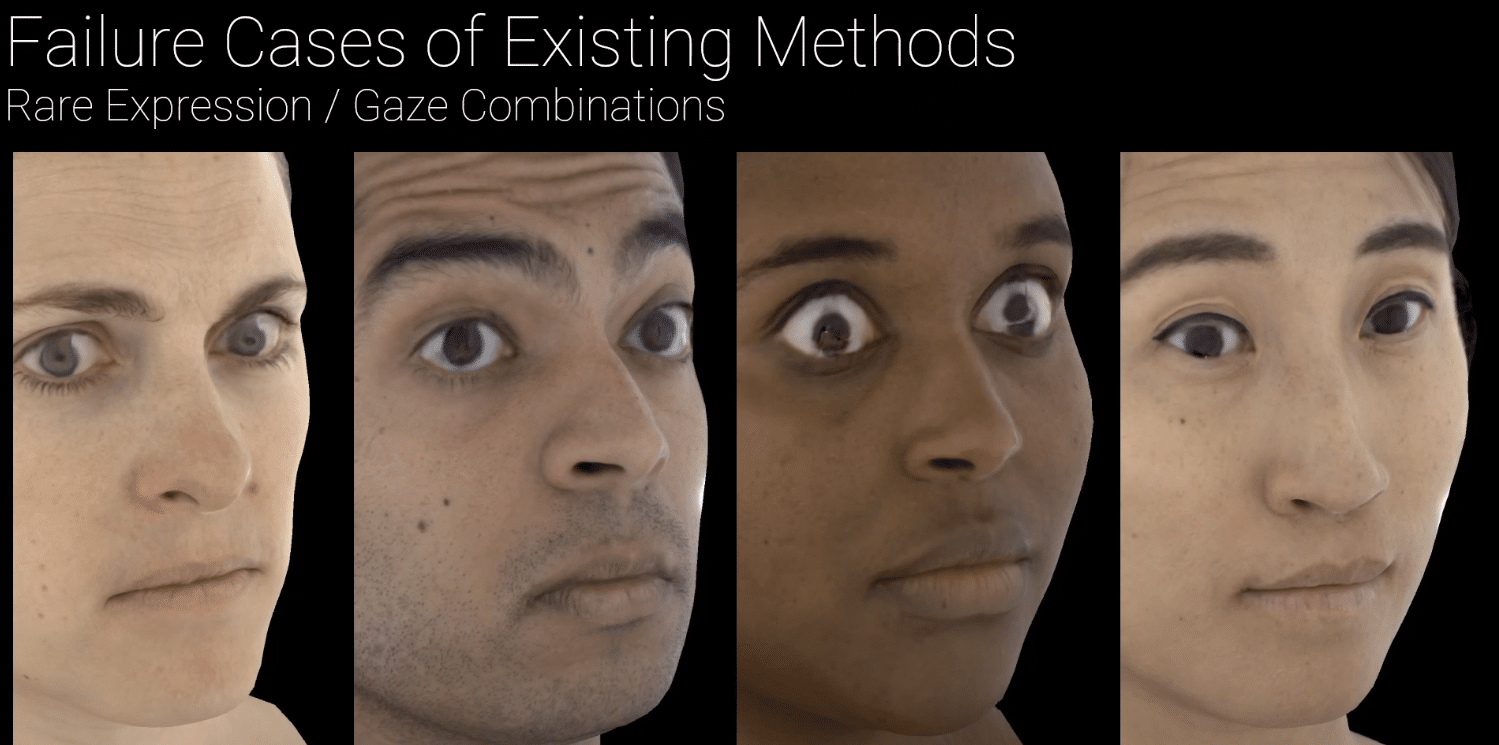

While the graphics and face tracking of these avatars are impressive, the eyes have tended to feel uncanny, with odd distortions and gaze directions that didn’t make sense.

In a paper titled ‘The Eyes Have It: An Integrated Eye and Face Model for Photorealistic Facial Animation’, the researchers present a solution to this problem. Part of the previous pipeline involved a “style transfer” neural network. If you’ve used a smartphone AR filter that makes the world look like a painting, you’ve already seen one in action.

But while this transfer was previously applied at the image stage, it’s now applied to the resulting texture itself. The eye direction is also now taken explicitly from the eye tracking system, rather than being estimated by the algorithm.

The result, judging by the images and videos Facebook provided, is significantly more natural-looking eyes. The researchers claim eye contact is critical to achieving social presence, something you won’t get in a Zoom call.

> Our goal is to build a system to enable virtual telepresence, using photorealistic avatars, at scale, with a level of fidelity sufficient to achieve eye-contact.

Don’t get too excited just yet: this kind of technology won’t be on your head any time soon. When first presenting Codec Avatars, Facebook warned the technology was still “years away” from consumer products.

Once it can be realized, however, such technology has tremendous potential. For most people, telepresence today is still limited to grids of webcams on a 2D monitor. The ability to see photorealistic representations of others at true scale, fully tracked from real motion, and to make eye contact with them could fundamentally change the need for face-to-face interaction.