Google may one day make our virtual avatars more expressive by tracking our eyes.

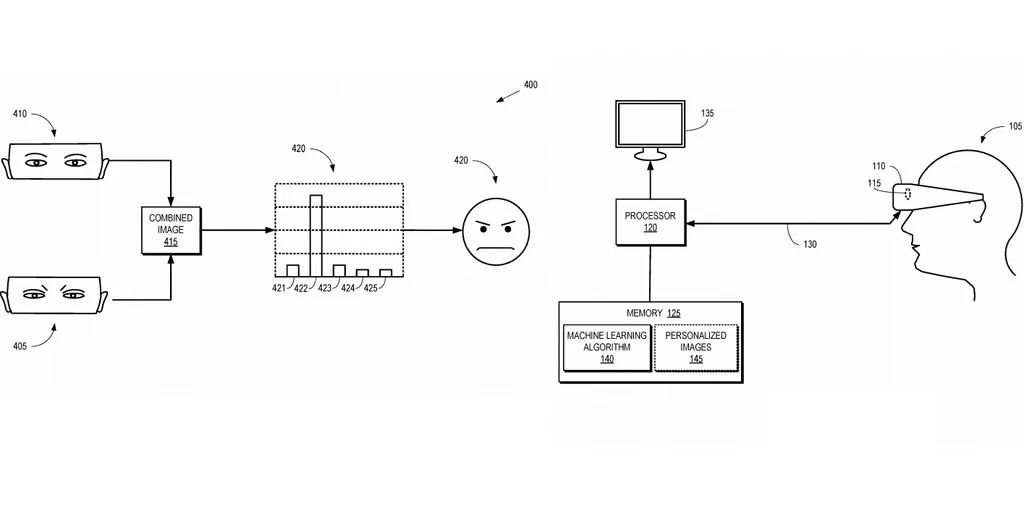

That's according to a patent published last week, in which the company details a system for ‘Classifying Facial Expressions Using Eye-Tracking Cameras’. The description says the method uses “one or more eye tracking sensors implemented into one or more head mounted devices”.

Eye-tracking is thought to be one of the next big things for VR, though that’s largely because an accurate, reliable system would enable foveated rendering, which renders full detail only in the area of the screen the user is looking at directly and reduces detail in the periphery. Google, however, wants to use an algorithm to read a user’s expression in real time and translate it into a matching expression on their virtual avatar.

The patent also notes that these expressions could be personalized, which we’d guess means developers would be able to make reactions work with their style of avatar. The cheery little diagram below shows you how slight changes in the size and shape of an eye could change what others see when they look at your virtual avatar.

If accurate, such a system could be revolutionary for social VR applications, which, notably, Google doesn’t have yet. Of course, it also doesn’t have a VR headset with a built-in eye-tracking sensor yet, but if it did, you’d have to imagine this would be just one of many potential uses for the tech.

Given that Google and Lenovo only just released the Lenovo Mirage Solo Daydream headset (and Google is busy bringing 6DOF controllers to it), we wouldn’t expect to see anything come of this patent for a good while yet.