Leaked images are nothing new in the smartphone world, but a recent set purportedly showing the larger of the two Google Nexus phones due for release later this year gave us pause. The leaks, which come from the Nexus-focused site iNexus.co, show the back of the new phone with an odd protruding camera design.

The photos show a design that differs from many smartphone cameras, which usually sit flush with the device itself. Google has yet to comment on the reason for this design, giving us plenty of room to speculate rather wildly.

The device appears to have the standard things you would expect from a smartphone camera: the LED flash and the camera itself, positioned next to each other. But then there is the third sensor outlined by the case. Other outlets have already speculated that this is a laser autofocus sensor, which would make sense for a camera, but it would not explain the bump.

Another possibility is that the bump results from the addition of a sort of depth camera for smartphones, something that would make a lot of sense given Google’s recent direction with both Cardboard and Project Tango. Last week, in fact, Google and Intel showed off a phone with the Tango technology embedded, but that phone appeared to have significantly more sensors. Its sensors were also aligned along the landscape edge, rather than along the top as in these leaked photos, so it seems unlikely that it is the same setup. That said, this doesn’t seem to be the only configuration they are going with, as another phone-based Tango project with Qualcomm shows the sensors aligned in the upper left corner. But those still appear to be more robust than what we are seeing here, so again, it remains to be seen.

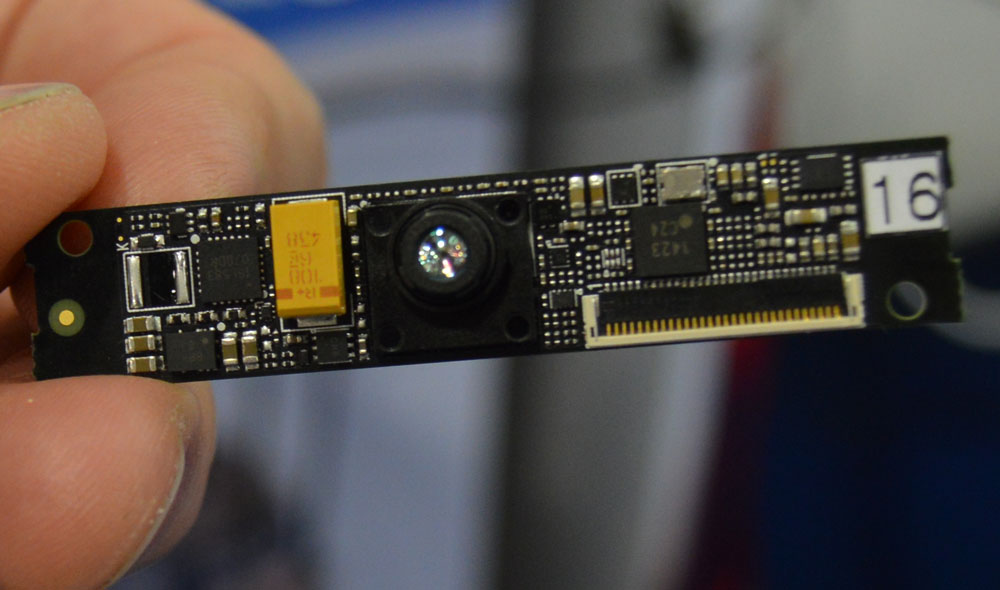

But perhaps what most fuels the speculative fire are the sensors currently being developed by Softkinetic, a leader in gesture recognition technology. Earlier this year at GDC, the company’s co-founder, Eric Krzeslo, told me he was “100 percent” confident that his company would tackle the issues associated with mobile positional tracking “by the end of the year.” It was obviously a bold claim, but could it be telling?

Softkinetic provided the technology behind the first generation of Intel RealSense cameras, and given Intel’s continued relationship with Google in this field, it wouldn’t be outside the realm of possibility that Softkinetic and Google have some kind of relationship, at least by proxy. Furthermore, the prototype depth camera we were shown at GDC looks like it would fit rather well on the top side of a phone, perhaps bringing with it a bit of additional bulk due to the protrusion of the depth camera. This is far from anything confirmed, and could very well end up being wildly off base, but the stars seem to be aligned for some kind of positional tracking breakthrough on smartphones. Google is pushing its mobile-based VR platform and seems like a likely candidate to emerge with an embedded solution.

The biggest argument against all this may be within the pictures themselves. The long black bar at the top, which might be hiding an IR sensor of some sort (think mini Leap Motion), is completely covered by the case, which only reveals what appears to be a standard camera, an LED flash, and the aforementioned third sensor (as well as a circle on the back that many are guessing is a fingerprint scanner). So it seems almost more likely that the bulge is associated with a heavily improved camera in general, perhaps like that on the Nokia 808 PureView. A big increase in resolution would prove effective in more than just taking pretty pictures. It would also allow for better monocular SLAM.

SLAM stands for simultaneous localization and mapping, a computer vision technique that builds a continuously updating 3D map of an environment while tracking the camera’s position within it. This technology can be used in VR for inside-out positional tracking. Many SLAM techniques rely on multiple cameras, but there is also research focused on single-camera solutions, called monocular SLAM. This is the technique being used by, for example, Univrses, who are currently trying to perfect it as a solution for VR.

As you can see from the video, the technique isn’t yet perfect. There are still issues with jitter, for example. But a higher resolution camera (or the addition of extra sensors) could definitely play a role in helping to make approaches like these possible.
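To put a rough number on why resolution might matter here, below is a back-of-the-envelope sketch in Python. It uses the standard two-view triangulation approximation, where depth uncertainty grows as z² · δd / (f · b): z is the distance to a point, b is how far the camera moved between two frames, f is the focal length in pixels, and δd is the feature matching error in pixels. Every value in it is an assumption chosen for illustration (a 70 degree field of view, 5 cm of camera motion, a point 2 m away, a one-pixel matching error), and the function names are our own. None of it describes the leaked phone or any real SLAM system.

```python
import math


def focal_length_px(image_width_px, horizontal_fov_deg):
    """Pinhole-camera focal length expressed in pixels."""
    half_fov = math.radians(horizontal_fov_deg) / 2.0
    return (image_width_px / 2.0) / math.tan(half_fov)


def depth_error_m(depth_m, baseline_m, focal_px, pixel_error=1.0):
    """Approximate depth uncertainty when triangulating from two views.

    Uses dz ~= z^2 * dd / (f * b). In monocular SLAM the "baseline" is
    the distance the single camera moved between the two frames.
    """
    return (depth_m ** 2) * pixel_error / (focal_px * baseline_m)


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    fov_deg = 70.0      # assumed horizontal field of view
    depth_m = 2.0       # assumed distance to the tracked point
    baseline_m = 0.05   # assumed camera motion between frames

    for width_px in (1920, 3840, 7680):
        f_px = focal_length_px(width_px, fov_deg)
        err_m = depth_error_m(depth_m, baseline_m, f_px)
        print(f"{width_px:>5} px wide: depth error ~ {err_m * 100:.1f} cm")
```

Under these assumptions, doubling the horizontal pixel count doubles the focal length in pixels and so halves the estimated depth error. In practice the gain would be smaller, since lens quality, sensor noise, and motion blur all limit how precisely features can be matched.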

With Oculus and Samsung potentially collaborating on a phone built for VR, it might make sense for Google to do the same with its Nexus line. Time will tell, but in the meantime we are happy to speculate.