On a table in the “Main Expo” hall at the Maker Faire, a DIY event celebrating its 10th anniversary, sits a machine spinning invisible lasers from two cylindrical chambers. It perches atop a tiny black tripod as thousands of people wander past, searching for something interesting.

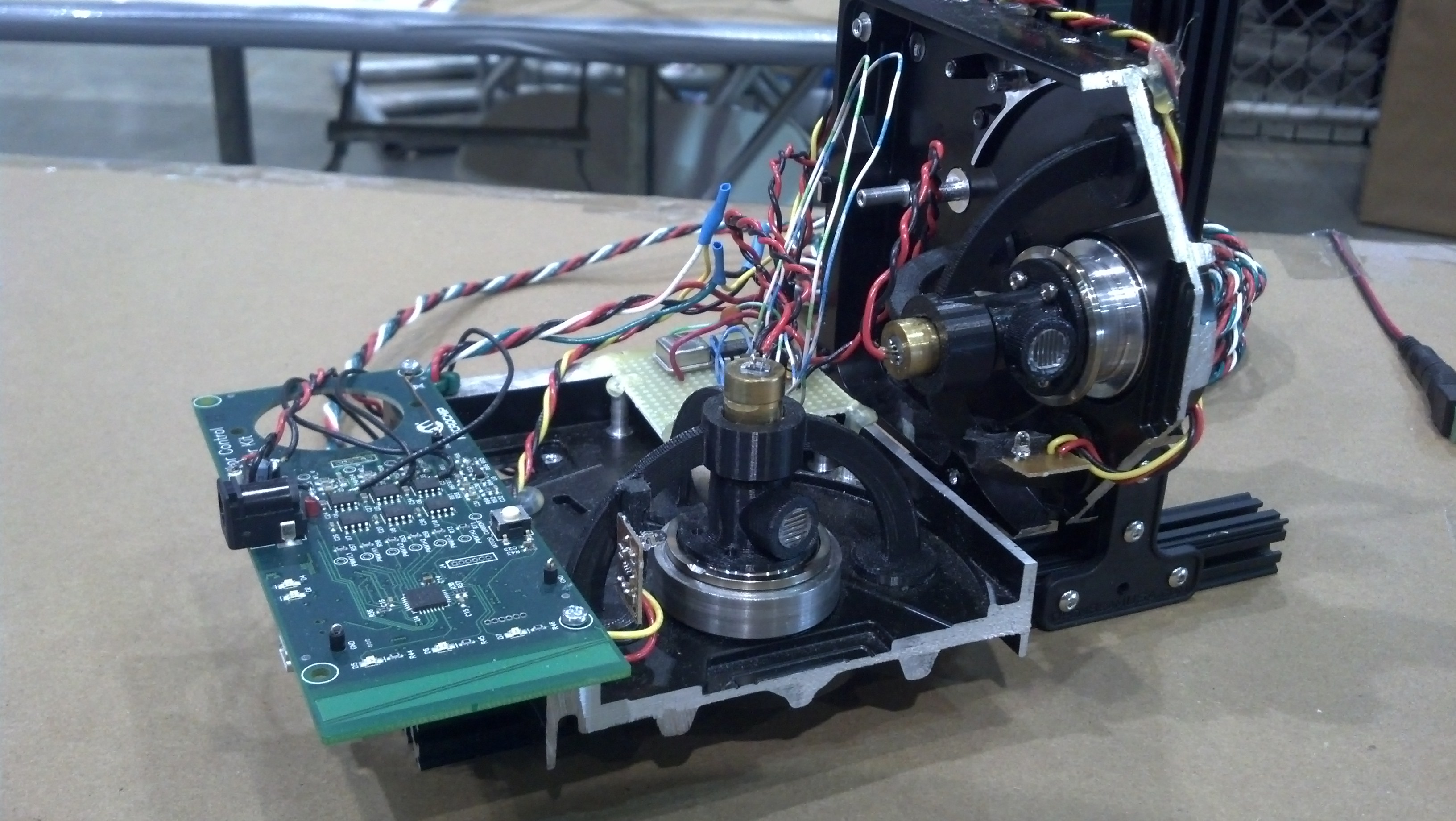

Spread across the surface below are circuit boards and photodiodes (light-sensitive detectors), along with a wireless controller framed in 3D-printed plastic. Next to those items is a handheld prototype, an early proof of concept, with a tiny screen displaying continuously changing numbers. That device would eventually lead to what is arguably the premier input solution for the virtual reality systems about to reach consumers’ hands, and it was just sitting there waiting to be understood.

The person behind the exhibition booth was Alan Yates, Valve’s “Chief Pharologist,” which is a fancy title for a brilliant maker, developer, and electrical engineer all wrapped in one. Because this is not a VR-focused convention, the conversation centered on the non-VR applications the Lighthouse system could serve. According to Yates, this tracking solution has the potential to be used in robotics and micro-flight, such as indoor drones.

Initially, the basestations will reach the virtual reality market, where the system will track VR headsets and wireless controllers. This is an obvious application, as demand for the medium is finally skyrocketing. However, the platform is highly scalable and could be integrated into just about anything, including robots and drones. Interestingly, the Lighthouse can also be used outdoors. Ambient light can cause interference, and the system’s range is relatively short for outdoor use, but it can run outside nonetheless.

Another interesting tidbit is that the basestations don’t need to be networked together; they can run asynchronously. Also, only one Lighthouse is required. Adding more to an area helps with occlusion problems that may arise depending on the user’s movements. As long as five photodiode sensors detect the infrared lasers sweeping across the environment, the device can determine its orientation in 3D space. The sensors also pick up modulated light emitted by the cluster of LEDs on the front of each basestation.
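The timing idea behind a swept-laser tracker can be illustrated with a short sketch. It assumes the LED flash marks the start of each sweep and that each rotor spins at a constant 60 Hz; both are illustrative assumptions rather than Valve’s published specification. Under those assumptions, the delay between the flash and the moment a photodiode sees the laser maps linearly to a rotor angle. The names `ROTOR_HZ`, `sweep_angle_deg`, `angles_for_hits`, and `enough_sensors_hit` are hypothetical, and the five-sensor threshold is simply the figure Yates quoted.

```python
from typing import Dict

# ASSUMPTION: each rotor completes one revolution every 1/60 s (60 Hz).
ROTOR_HZ = 60.0
# Minimum number of photodiodes that must see a sweep (figure quoted in the article).
MIN_SENSORS = 5


def sweep_angle_deg(t_sync_s: float, t_hit_s: float, rotor_hz: float = ROTOR_HZ) -> float:
    """Convert the delay between the sync flash and a laser hit into a rotor angle.

    The rotor turns 360 degrees per period, so the elapsed fraction of one
    period maps linearly onto an angle in [0, 360).
    """
    dt = t_hit_s - t_sync_s
    if dt < 0:
        raise ValueError("the laser hit must come after the sync flash")
    return (dt * rotor_hz * 360.0) % 360.0


def angles_for_hits(t_sync_s: float, hits: Dict[str, float]) -> Dict[str, float]:
    """Map each sensor ID to the sweep angle at which the laser crossed it."""
    return {sensor: sweep_angle_deg(t_sync_s, t) for sensor, t in hits.items()}


def enough_sensors_hit(hits: Dict[str, float], minimum: int = MIN_SENSORS) -> bool:
    """True if at least `minimum` photodiodes saw this sweep."""
    return len(hits) >= minimum


if __name__ == "__main__":
    # Hypothetical hit times (seconds after the sync flash) for five sensors.
    hits = {"s1": 0.0010, "s2": 0.0012, "s3": 0.0015, "s4": 0.0020, "s5": 0.0031}
    print(angles_for_hits(0.0, hits))
    print(enough_sensors_hit(hits))
```

A real basestation has two rotors (the two cylindrical chambers), so each sensor would collect one angle per rotor; combining those angles across several sensors is what lets the device solve for its orientation.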

One more nugget of information surfaced at the Maker Faire: the Lighthouse basestations can detect disturbances. If one is picked up, knocked over, or adjusted in any way, the system senses the movement. Internal algorithms can then compensate, keeping the experience as stable and smooth as possible.

Valve even has software in the works for designing custom controllers and other devices. It can analyze a CAD model of an object exported to an STL file and tell the user where the optimal places to add sensors are. That makes it much easier to start prototyping units, with a testing phase following shortly after.
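The article does not describe how Valve’s tool chooses sensor locations, so the following is only a naive stand-in to show the general shape of such a workflow. It reads an ASCII STL file, computes the centroid of every triangular facet as a candidate site, and then uses greedy farthest-point sampling to pick sites that are spread out over the surface. A real tool would presumably also account for which sensors each basestation can actually see; this sketch does not. The function names `parse_ascii_stl` and `pick_sensor_sites` are hypothetical.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float, float]
Triangle = Tuple[Vec, Vec, Vec]


def parse_ascii_stl(text: str) -> List[Triangle]:
    """Collect the three vertices of every facet in an ASCII STL string."""
    triangles: List[Triangle] = []
    current: List[Vec] = []
    for line in text.splitlines():
        parts = line.split()
        if parts and parts[0] == "vertex":
            current.append((float(parts[1]), float(parts[2]), float(parts[3])))
            if len(current) == 3:
                triangles.append((current[0], current[1], current[2]))
                current = []
    return triangles


def centroid(tri: Triangle) -> Vec:
    """Average of a facet's three vertices."""
    xs, ys, zs = zip(*tri)
    return (sum(xs) / 3.0, sum(ys) / 3.0, sum(zs) / 3.0)


def pick_sensor_sites(triangles: List[Triangle], k: int) -> List[Vec]:
    """Greedy farthest-point sampling over facet centroids (a simple heuristic,
    NOT Valve's placement algorithm)."""
    candidates = [centroid(t) for t in triangles]
    if not candidates or k <= 0:
        return []
    chosen = [candidates[0]]
    while len(chosen) < min(k, len(candidates)):
        # Take the candidate whose nearest already-chosen site is farthest away.
        best = max(candidates, key=lambda c: min(math.dist(c, s) for s in chosen))
        chosen.append(best)
    return chosen
```

Farthest-point sampling was chosen here only because it is short and spreads points evenly; it ignores occlusion, mounting constraints, and basestation line of sight.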

As long as developers meet the licensing standards for the Lighthouse system, they can build pretty much anything they can imagine. It will be interesting to see what developers do with this system next. Personally, I want VR wands, swords, and gloves, but the possibilities are endless. If you have ideas, let us know in the comments what you might make!
