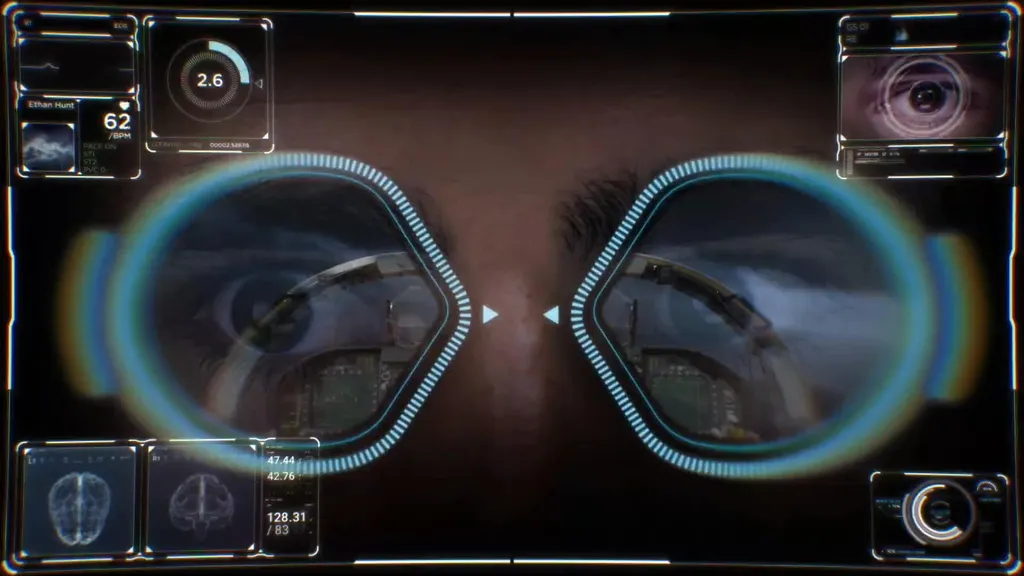

With 4K resolution, eye tracking, face tracking, and Valve audio, HP’s Reverb G2 Omnicept Edition will be a headset like no other. We spoke to HP & Tobii about making it a reality, and how each sees the future of VR.

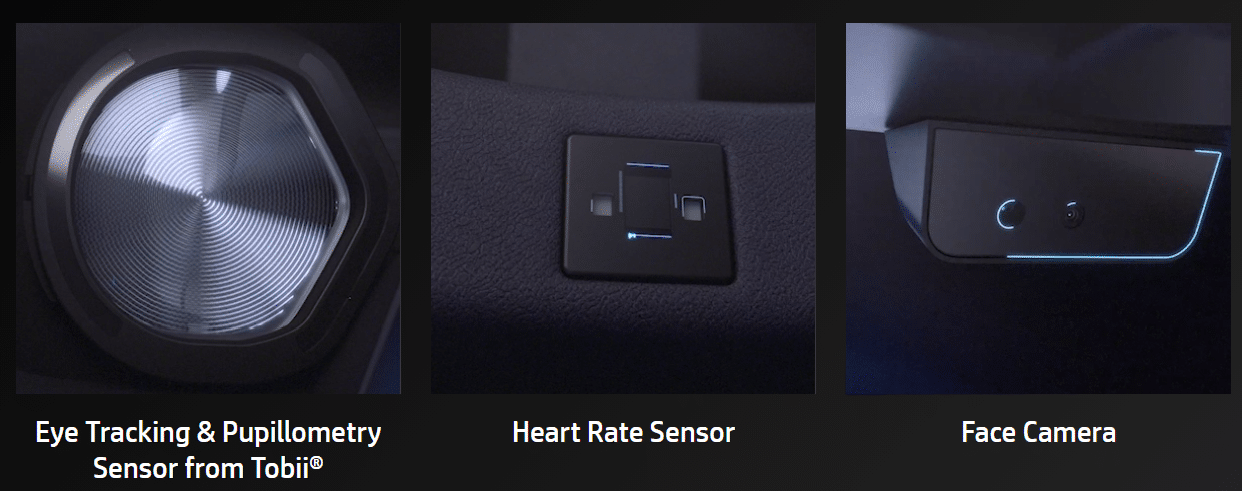

Omnicept Edition was announced back in September. It’s got the same comfortable design, best-in-class resolution, near-off-ear speakers, and precise lens adjustment as the consumer-focused Reverb G2. But it adds three sensor suites: eye tracking, lower face tracking, and a heart-rate sensor.

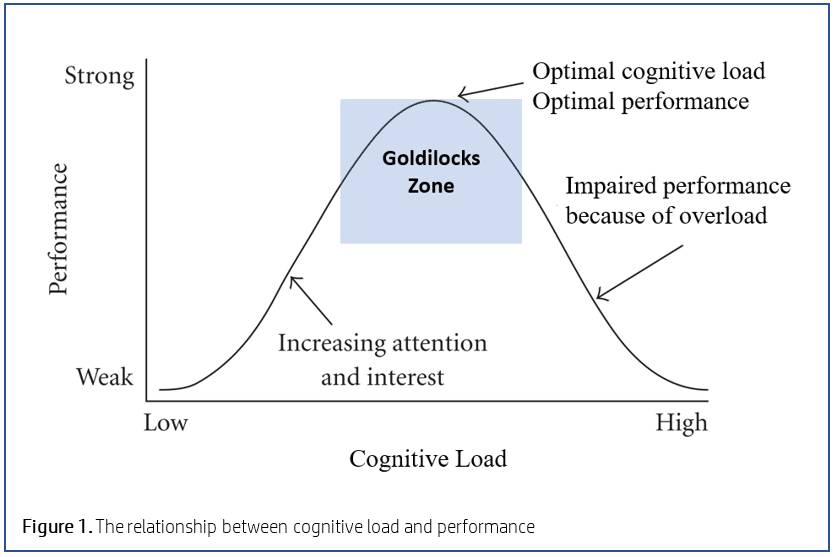

Omnicept is targeted at developers and businesses. The extra sensors, paired with HP’s machine-learning-powered SDK, let clients assess a user’s “cognitive load” – useful for training and evaluation.

HP’s Head of XR On Omnicept

We spoke to Peter Peterson, Head of XR Software & Solutions at HP.

What do enterprise customers and their developers tell you they care about?

“It needs to be extremely easy to integrate. Difficulty integrating APIs sours the entire developer experience when you’re trying something new. You just want to be able to bring it into your application and turn it on. We made that extremely simple – bringing in the plugins, being able to run through and get it connected. Omnicept is a B2D – business to developer – play for us.”

How does the horsepower of being PC-based fit into your strategy?

“We can deliver fidelity in the experiences on PC. The commercial use cases for specific things in the training space are about realism. In some cases life-saving skills. And the more real you can make that, the more it increases the training efficacy.

If you look at the audio on Reverb G2 too, it’s about total immersion in the scenario and making sure we don’t break that immersion.”

Are you seeing many companies start using VR at this stage, or is it existing companies upgrading?

“A lot of interest has come from companies that already have VR software products in development or already publicly released, and they looked at the features of Omnicept and recognized that it could augment their products in positive ways.

There are some we’ve talked to doing ground-up development today, and Omnicept has triggered some interesting thinking for their use cases, so they’re developing product alongside.”

What do the sensors on Omnicept open up for virtual meetings? Does HP meet in VR yet?

“With eye tracking, I can see where your eyes are really looking. I can see if you’re blinking. And by combining that with the sensor looking at the lower part of the face, now we don’t need to use sound processing to drive the lips, which could make the interaction strange.

The thing with social VR is that the two ends need to have a similar device to have a similar experience. I might be dating myself a little, but it’s like when there were AT&T videophones before cellphones. So we expect that for social VR applications we’d need a device that democratizes the playing field.”

Right now these sensors use your HP SDK. Do you see this being built into OpenXR in future?

“Myself and my architect actually attend most of the OpenXR meetings. Obviously being a standards body it’s going to move a little bit slower than the technology but that’s OK.

We need to see the runtimes pick up OpenXR, which we see going on. We need to see the game engines pick up OpenXR, which is happening now, and then there’s going to be very specific things that are going to be really important to bring to OpenXR, either a standardized interface or specific vendor extensions.

We want to see eye tracking as part of OpenXR, absolutely. Eye tracking is going to have more and more use cases, not just commercial, and we’ll see other manufacturers implement eye tracking. But for some other specific things, it might not be clear that we would want it to be a part of OpenXR initially.”

How far off is wireless?

“Wireless is difficult because one of the key things about VR is performance – motion to photon latency. While all of our HMDs today are wired, there is still latency involved.

So if you add a wireless connection you’ll have increased latency.

Now I’m not saying these are insurmountable challenges, it’s just you have to weigh the trade-offs of the wireless technology against what you expect. Do you want short range, but increased performance? Do you want long range and decreased performance? Do you want to increase latency if it can be in a frequency band that doesn’t require line of sight?

You’re probably aware of the early sort of wireless adapters that ended up on HTC Vive – those were extremely line of sight based because they were 60 GHz. We’re not in the office today, but eventually we’ll probably go back to the office. They all have a WiFi mesh network. It’s eventually going to be WiFi 6. But that also has trade-offs when talking about high bandwidth low latency connections required for VR. So really it’s going to be about finding the sweet spot without needing to deploy new infrastructure.”

Is hand tracking something you’re exploring?

“I can’t say anything about what’s going on with hand tracking. Personally, I think hand tracking is an important modality for input. I think that we’re not ready for hand tracking as the only input.”

What’s your approach to privacy?

“HP has a great internal privacy office that we consulted before even the first line of code was written. We thought about: how do we make sure any of the data that’s going through the API is well cared for and privacy-compliant? Our device doesn’t record any data. There’s nothing stored on the device. It’s passing those to the PC, the PC is processing that.”

What’s more important: VR getting cheaper, or better?

“You’ve got to have both. There’s going to be levels and tiers that are going to start as technology progresses: Good, Better, and Best.

What happens in the ‘Good’ space is we’re going to see more units, and commodity prices will fall, and we’ll be able to get VR into more spaces. I think there is a tier of individuals that would like to use VR but it’s expensive. Not just from the device standpoint, also the PC you have to use in order to get a high fidelity experience. Getting a high end graphics card is not an easy ordeal right now, especially when you sometimes can’t even buy them, but that’s a whole other discussion!

I think there’ll always be a market for high-end VR to continue to drive immersion.”

Tobii’s Head of XR On Eye Tracking

We also spoke to Johan Hellqvist, Head of XR at Tobii, about the use of eye tracking in XR headsets.

Tobii is a Swedish company providing eye tracking tech for HTC Vive Pro Eye, Pico Neo 2 Eye (the first standalone headset with the feature), and soon the HP Reverb G2 Omnicept Edition.

Eye tracking is an added expense for a headset. What justifies it?

“You have a couple of challenges in XR in that the specs keep getting better – wider field of view, higher resolution, more horsepower needed to run the device. Foveation is a way to utilize your resources in a better way.

Another challenge eye tracking can help with is the alignment of the lenses. That can give nausea if you don’t do it properly. And if you have a multi-focal display so you don’t only have one focal plane, you can use eye tracking for that as well.

If it’s a standalone device where you want to tether to a PC or to the cloud, one challenging aspect will be the bandwidth. In this case you could use foveation for the compression.

From a total systems perspective, eye tracking can actually save cost. Right now we see that eye tracking is basically fundamental in all enterprise headsets.”

How can eye tracking assist with interaction design for spatial interfaces?

“When you know where the user is looking, you can combine that with gestures to understand their actual intent.

One example is throwing. It’s very difficult in a virtual environment, but if you have gaze then it’s easy to guide it. Ultimately, if you don’t have eye tracking on a device, it will just not behave as you would want it to.”
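To make the throwing example concrete, here is a minimal sketch of one way gaze could guide a throw: keep the speed of the hand's release velocity, but blend its direction toward the point the user is looking at. This is an illustration only, not Tobii's method or any SDK's API. The function name `assist_throw`, the `assist` weight, and the choice to ignore gravity are all assumptions made for the example.

```python
import math

def _length(v):
    return math.sqrt(sum(c * c for c in v))

def assist_throw(hand_velocity, hand_pos, gaze_target, assist=0.5):
    """Hypothetical gaze-assisted throw: steer the release direction toward the gaze target.

    assist=0.0 leaves the throw untouched; assist=1.0 aims straight at the gaze target.
    Release speed is preserved. Gravity and arc compensation are deliberately ignored.
    """
    speed = _length(hand_velocity)
    to_target = tuple(t - p for t, p in zip(gaze_target, hand_pos))
    dist = _length(to_target)
    if speed == 0.0 or dist == 0.0:
        return tuple(hand_velocity)  # nothing to steer, or no usable gaze direction

    throw_dir = tuple(c / speed for c in hand_velocity)
    target_dir = tuple(c / dist for c in to_target)

    # Linear blend of the two unit directions, then renormalize.
    blended = tuple((1.0 - assist) * a + assist * b
                    for a, b in zip(throw_dir, target_dir))
    blended_len = _length(blended)
    if blended_len == 0.0:
        return tuple(hand_velocity)  # directions cancel out; fall back to the raw throw

    return tuple(speed * c / blended_len for c in blended)

if __name__ == "__main__":
    # A throw that is slightly off to the right of where the user is looking.
    print(assist_throw((1.0, 0.5, 4.0), (0.0, 1.5, 0.0), (0.0, 1.5, 5.0), assist=0.6))
```

A real application would likely tune the assist weight per interaction and only apply it when the gaze point is a plausible target.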

Is foveated rendering an on-off thing, or does better tech allow for better outcomes?

“Technically the better the eye tracking, the smaller the region can be, and the bigger the savings. That said, it depends on what your target group is. If you know the target group you can optimize towards it, but what we’re emphasizing is supporting a full population, because each person’s eyes are slightly different. So we design for 95%-98% coverage.

But if you have a situation, on tethered headsets for example, where you’re already changing graphics settings, you could run foveation with more or less benefit depending on who you are – but our guidelines are for as much of the population as possible.

The biggest factor is the latency. It can to some extent be compensated by a bigger area, but if you have too much latency then it doesn’t make sense. For foveation to work you need a good eye tracker with low latency – less than 50 milliseconds end-to-end.”
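The trade-off described above, where latency can be offset by a bigger full-detail region up to the point where the savings disappear, can be sketched with some simple arithmetic. This is a rough illustration under stated assumptions, not Tobii's model: the full-detail radius is padded by the tracker's angular error plus how far the eye could move during the end-to-end latency, and the savings are approximated as the share of a flat, square field of view left outside that disc. The function names and every number used (base radius, tracker error, eye speed, field of view) are hypothetical.

```python
import math

def foveal_radius_deg(base_radius_deg, tracker_error_deg, latency_ms, eye_speed_deg_per_s):
    """Radius of the full-detail region, padded for tracker error and for
    eye movement that can happen during the end-to-end latency."""
    drift_deg = eye_speed_deg_per_s * (latency_ms / 1000.0)
    return base_radius_deg + tracker_error_deg + drift_deg

def full_detail_fraction(radius_deg, fov_deg):
    """Approximate share of a square field of view covered by the full-detail
    disc (flat approximation), capped at 1.0 when the disc covers everything."""
    disc_area = math.pi * radius_deg ** 2
    return min(1.0, disc_area / (fov_deg ** 2))

if __name__ == "__main__":
    # All values below are illustrative assumptions, not measured figures.
    for latency_ms in (10, 50, 100, 200):
        radius = foveal_radius_deg(
            base_radius_deg=5.0,
            tracker_error_deg=1.0,
            latency_ms=latency_ms,
            eye_speed_deg_per_s=100.0,
        )
        fraction = full_detail_fraction(radius, fov_deg=100.0)
        print(f"{latency_ms} ms latency: radius {radius:.1f} deg, "
              f"full-detail share {fraction:.1%}")
```

Under this simplified model, a more accurate tracker shrinks the error term and a faster one shrinks the drift term, and both reduce the area that has to be rendered at full detail.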

What are the barriers to getting foveation for that remaining 2-5% of people?

“There’s always outliers, if you have an eye disease or other anomalies in the eye. For example, Stephen Hawking’s cornea was not even, it was a dented sphere – he was extremely difficult to track. Some conditions are unfortunately impossible to solve.

Apart from the medical conditions, the main complexity when it comes to performance is thick glasses. The frames can block the illuminators or imagery. In AR ultimately we think the lenses will be RX adjustable or RX individualized. In VR we’re seeing the trend of pancake lenses where you’re much closer and can accommodate the lenses rather than the eyewear.”

Will calibration be a required step for all eye tracking systems, or will that step eventually go away?

“You do it once, just like when you do fingerprint or face recognition setup on mobile. We require calibration to work with good performance. We can run without calibration, but the performance would be much worse.

You could potentially have no calibration step with much more compute. But we’ve found that the balance of having optimized algorithms with calibration is the better trade-off, at least right now.”

Why do you think wireless headsets powered by foveated transport haven’t emerged yet?

“You need to be able to decompress in a smart way on the headset. And the early tethered headsets were not very intelligent at all. So they just used a very simple PC display chip. As we evolve, for example to standalone devices like a Quest or a Pico, you have enough power in the headset to do more advanced decompression. XR2 of course would be major overkill to only do decompression & warping, but XR1 is maybe more relevant because it could also decompress.

Eye tracking in these devices is relatively new, even if the concepts are not. I would assume that most headsets eventually will have enough capacity to do a little bit more advanced decompression, and we’re big believers they’ll use eye tracking to do that even better.”

Eye tracking data can be seen as personal. What’s your approach to privacy?

“It’s one of our most important aspects. Eye tracking is so powerful because intent is semi-conscious. And if you use that in the wrong way it can have huge privacy impacts.

Whenever someone buys an eye tracker from us they need to adhere to our privacy policy, which is that if you as a developer or manufacturer want to store eye tracking data you need to communicate that with the user and get their acknowledgement. That’s in all our contracts. From our perspective, privacy is extremely important. We are spending a lot of time & effort on this, and I know the OEMs are too.”

You can read Tobii’s Eye Tracking Data Transparency Policy here.