In a post on the Intel Labs blog today, author Daniel Pohl outlined a method for rendering virtual reality games and experiences more efficiently. Consumers want a display where the pixels are undetectable, and according to Michael Abrash, Chief Scientist at Oculus, that will only come with a display that is at least 8K per eye. This means that systems will need to render for displays roughly 16 times more pixel-dense than those currently available, and that requires considerably more power from the hardware.

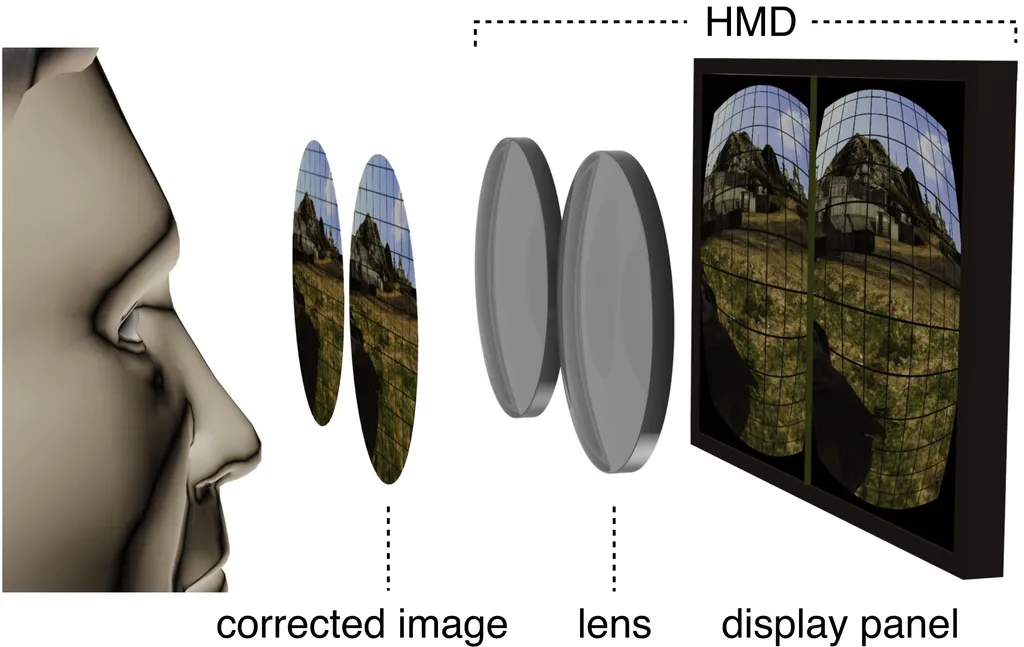

To render on such high-resolution displays, developers will need to find as many ways as possible to reduce rendering times. The optimization outlined by Intel exploits a phenomenon known as distortion astigmatism: the loss of quality toward the edges of images viewed through the lenses of HMDs such as the Oculus Rift. When we view something in the Rift, only the center of the image is very sharp; the edges are distorted and blurrier. That observation led to the research question: “As the center area can be seen best and the outer areas become blurred, why should we render the edges of the image with the same level of detail as the center?”

That question led the team to devise a method that lowers rendering quality for pixels farther from the center of the gaze. The method relies on ray tracing rather than rasterization, which is what games typically use. Ray tracing creates an image by tracing the paths of light rays through pixels. To achieve a higher-quality image, more than one ray can be traced for each pixel. The Intel team used “sampling maps” to tell the renderer how many rays to cast for each point on the screen. The sampling map told the renderer to cast four rays in the center, resulting in a high-quality render in that area, and reduced the count to one toward the outside, so the more distorted part of the image could be rendered faster (but with lower quality).
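To make the sampling-map idea concrete, here is a minimal sketch in Python. It is not Intel's implementation: the function names and the linear falloff are assumptions for illustration, and the only values taken from the article are four rays at the center and one at the edge.

```python
import math

def build_sampling_map(width, height, max_rays=4, min_rays=1):
    """Return a height x width grid of rays-per-pixel counts.

    Hypothetical falloff: linear in the normalized distance from the
    image center. The article only states 4 rays at the center and 1
    toward the outside; the shape of the falloff is an assumption.
    """
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    max_dist = math.hypot(cx, cy) or 1.0  # center-to-corner distance
    rows = []
    for y in range(height):
        row = []
        for x in range(width):
            d = math.hypot(x - cx, y - cy) / max_dist  # 0 at center, 1 at corner
            rays = round(max_rays - d * (max_rays - min_rays))
            row.append(max(min_rays, min(max_rays, rays)))
        rows.append(row)
    return rows

def total_rays(sampling_map):
    """Total number of rays the renderer would cast for one frame."""
    return sum(sum(row) for row in sampling_map)

sampling_map = build_sampling_map(9, 9)
uniform_rays = 9 * 9 * 4  # cost of casting 4 rays for every pixel
```

Comparing `total_rays(sampling_map)` with `uniform_rays` shows how the map reduces the number of rays per frame while keeping the full four rays at the center.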

One final improvement the researchers tried was to use low-quality rasterization (typically faster than ray tracing) for the outside of the image and ray tracing with the sampling map for the center. This hybrid approach yielded an additional performance improvement.
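The hybrid split can be sketched as a per-pixel choice between the two renderers. The snippet below is an illustration under stated assumptions: `choose_renderer` and the `ray_radius` cutoff are hypothetical, since the article does not say where the boundary between ray tracing and rasterization lies.

```python
import math

def choose_renderer(x, y, width, height, ray_radius=0.5):
    """Pick a renderer for pixel (x, y).

    ray_radius is a hypothetical cutoff, expressed as a fraction of the
    center-to-corner distance; the article gives no actual threshold.
    """
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    max_dist = math.hypot(cx, cy) or 1.0
    d = math.hypot(x - cx, y - cy) / max_dist
    return "raytrace" if d <= ray_radius else "raster"

def raytraced_fraction(width, height, ray_radius=0.5):
    """Share of pixels that would be ray traced under this split."""
    traced = sum(
        choose_renderer(x, y, width, height, ray_radius) == "raytrace"
        for y in range(height)
        for x in range(width)
    )
    return traced / (width * height)
```

A smaller `ray_radius` hands more of the frame to the cheaper rasterizer, at the cost of shrinking the high-quality region.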

Ultimately, the optimizations raised the frame rate of the scene from 14 FPS to 54 FPS, nearly a fourfold speedup, while maintaining full image quality at the center of the image. A further optimization could come from an eye tracking system like the one in FOVE, which would allow the developer to render areas where the user is not looking at a lower level of detail. That way, even the center of the image could be rendered faster when the user is not looking at it.
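With eye tracking, the high-quality region would follow the gaze instead of staying fixed at the image center. A rough sketch of that idea, assuming a hypothetical `rays_for_pixel` helper, a gaze position in pixel coordinates, and the same four-to-one ray range mentioned above:

```python
import math

def rays_for_pixel(x, y, gaze_x, gaze_y, falloff_radius, max_rays=4, min_rays=1):
    """Rays to cast for pixel (x, y) given the tracked gaze point.

    falloff_radius (in pixels) is an assumed tuning parameter: beyond it,
    every pixel gets min_rays. Neither it nor the linear falloff comes
    from the article.
    """
    d = min(math.hypot(x - gaze_x, y - gaze_y) / falloff_radius, 1.0)
    rays = round(max_rays - d * (max_rays - min_rays))
    return max(min_rays, min(max_rays, rays))

# The user looks at the upper-left area of a 1920x1080 frame.
at_gaze = rays_for_pixel(100, 100, gaze_x=100, gaze_y=100, falloff_radius=200)
at_center = rays_for_pixel(960, 540, gaze_x=100, gaze_y=100, falloff_radius=200)
```

Here the pixel under the gaze gets the full ray count, while the screen center, which the user is not looking at, drops to the minimum.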

Exploiting the limitations of lenses, the human eye, and the human mind will allow developers to render VR images faster. The combination of these optimizations and hardware improvements will allow us to reach our goal of rendering on ultra-high resolution displays at sufficient framerates.

Intel says it will provide more details about this method at IEEE VR 2015, at the end of March in Provence, France, in a presentation titled “Using Astigmatism in Wide Angle HMDs to Improve Rendering.”