“So when can I try a demo?” asked the Pixar employee standing next to me.

Nvidia had just announced Iray for VR, a tool for rendering photorealistic graphics in realtime. Rather than computing lighting and shadows at the demanding 90 frames per second (FPS) that VR requires, Iray lets you pre-render multiple viewpoints, which are then combined depending on where your eyes are in the scene. Wherever you stand, you see a photorealistic view that would otherwise be impossible to render in realtime.
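To make the idea of combining pre-rendered viewpoints concrete, here is a minimal Python sketch. It is not Nvidia's algorithm, which was not described at the announcement; it assumes a simple inverse-distance blend of the nearest pre-rendered viewpoints, and the names `blend_weights` and `blend_pixel` are hypothetical.

```python
import math

# Time available per frame at VR's 90 FPS target, in milliseconds (about 11.1 ms).
FRAME_BUDGET_MS = 1000 / 90


def blend_weights(eye, viewpoints, k=2, eps=1e-9):
    """Return {index: weight} for the k pre-rendered viewpoints nearest the eye.

    Assumption for illustration only: weights are proportional to the inverse
    of the distance between the eye and each viewpoint, normalized to sum to 1.
    """
    nearest = sorted((math.dist(eye, vp), i) for i, vp in enumerate(viewpoints))[:k]
    # If the eye sits exactly on a pre-rendered viewpoint, use that render alone.
    if nearest[0][0] < eps:
        return {nearest[0][1]: 1.0}
    inverse = {i: 1.0 / d for d, i in nearest}
    total = sum(inverse.values())
    return {i: w / total for i, w in inverse.items()}


def blend_pixel(eye, viewpoints, colors, k=2):
    """Blend one RGB sample from each nearby pre-rendered viewpoint."""
    weights = blend_weights(eye, viewpoints, k)
    return tuple(
        sum(w * colors[i][channel] for i, w in weights.items())
        for channel in range(3)
    )


# Three pre-rendered viewpoints along a line, each contributing one sample color.
viewpoints = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
colors = [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 1.0, 0.0)]
pixel = blend_pixel((0.5, 0.0, 0.0), viewpoints, colors)
```

The expensive lighting work happens once per viewpoint ahead of time, so the per-frame cost is only a lookup and a weighted sum, which is what would have to fit inside the roughly 11 ms frame budget.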

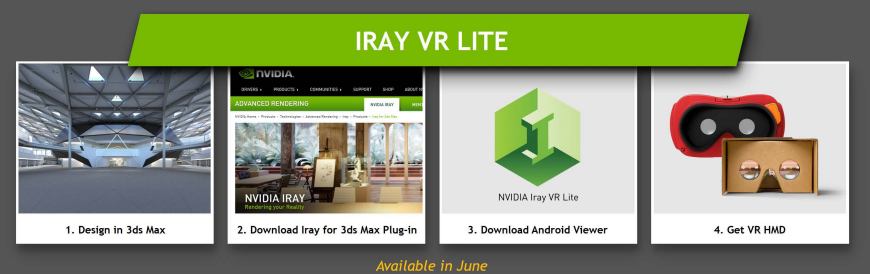

At least, in theory. My demo of the technology on an HTC Vive ran at about 5 frames per second and was very broken, so I cannot confirm from direct experience that it works. According to Nvidia representatives, the VR implementation is still very early; when working properly, it performs as described, delivering some of the highest levels of photorealism yet seen in VR. Iray has actually existed for a while as a plugin for 3ds Max, Maya, and Cinema 4D, used mainly for rendering lighting. Nvidia decided the tool could be perfect for VR, and is now in the early stages of getting it to work under VR's significantly more demanding requirements.

The result is that to do realtime rendering at Pixar level and above, you might need a $50,000 computer with 8-24 cores. During the Nvidia GTC keynote, Iray was shown working smoothly in realtime with an HTC Vive (90 FPS). I was not able to obtain details on the keynote’s exact setup.

Nvidia also created Iray Lite, which allows you to pre-render a scene from a single viewpoint, enabling you to create photorealistic scenes for viewing in Gear VR or Google Cardboard. Regardless of its early implementation, I think pre-rendered photorealistic 3D will be hugely important for the future of VR, and Iray VR seems to be a viable path to get there.

We’ll have a chance to try Iray for a longer session later this month, and will let you know whether it’s as amazing as it sounds or a long way from implementation.