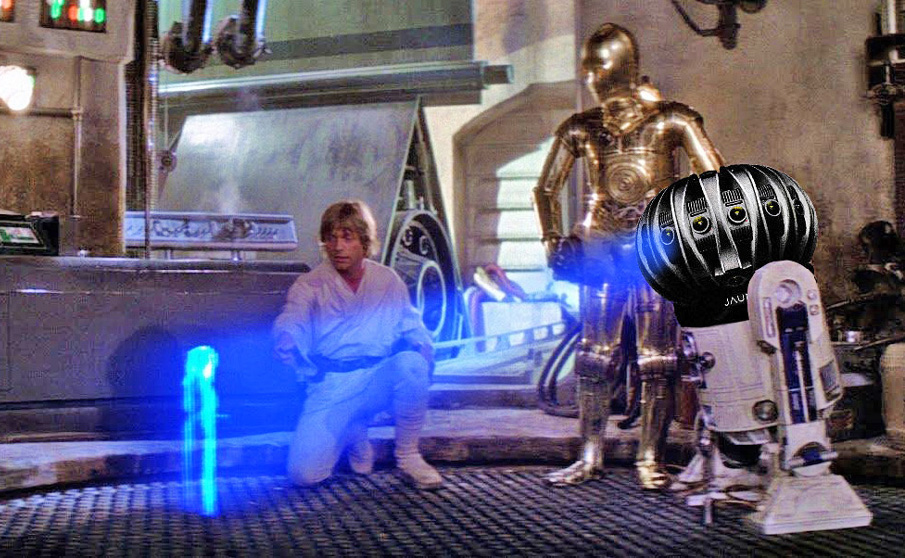

An astronaut steps out from the lunar lander in front of me, beginning the familiar “one small step for man” line before a voice shouts “cut!” from behind me. The lights come on and the scene opens up to a full studio – I’m on the set of the famed urban legend of Kubrick faking the lunar landing. At first glance it appears fairly similar to any 360 video – something that some would argue may not even really be “VR” – but there’s one key difference with this video: I can lean in and around the scene. I’m witnessing one of the first-ever videos to come out of Lytro’s upcoming Immerge lightfield camera.

Ever since announcing the Immerge camera in November of last year, Lytro has remained fairly quiet outside of teasing the Lytro Cinema camera at the NAB conference this April. But after months of silence, the company has finally given us a glimpse of what it means to experience a true “lightfield” video in virtual reality, and while it is not quite ready for prime time yet, it offers a promising look at what is to come.

First, a quick primer on lightfields, which can be a tough topic to wrap one’s head around. When you look at an object, you aren’t seeing the object itself, but rather the rays of light that bounce off of it and into your eyes. As you shift position, the angles at which that light hits your eyes change, resulting in the subtle changes in reflections and shadows that give objects depth and realism. A lightfield camera aims to capture every ray of light as it passes through an area or volume, acting much like an array of image sensors. When rendered properly, this produces an effect that allows one to view a live-action scene from multiple perspectives with true depth.

Lightfield is quite a buzzword in the industry these days, with everything from cameras to displays being billed as “lightfield” devices. What Lytro means when they say they’re producing a 360° lightfield camera is that the video you’ll watch is effectively a capture of thousands of different perspectives within a given radius. In practice, that means you’ll be able to move your head and change your perspective within the 360° video.

The effect felt quite natural, displaying an impressive amount of depth and parallax to the left and the right. Even the audio was spatialized, and I could locate sounds quite well within the scene without having to look at them. However, while there were a lot of positives to the progress of the tech, it wasn’t perfect. I noticed visible artifacts around the live-action cast, resulting in less-than-crisp outlines of the figures in the scene. That said, it wasn’t enough to be tremendously distracting, and a more recent demo (the Moon scene was six months old, according to Lytro) showed significant improvement in that regard.

According to Tim Milliron, Lytro’s VP of Engineering, the scene blended rendered lightfields with the live-action lightfield captured by the camera. This means the CG objects reflect light differently from every perspective you watch from. Watching the light flickering off of the astronaut’s helmet match the movements of my head throughout the scene was a nice touch of detail that adds a great deal to the realism.

The real meat and potatoes of Lytro goes well beyond the camera hardware that they’re developing. In addition to the camera itself, the team is also building the lightfield processing and the software tools for creating truly immersive 3D videos. The development plugin works with Nuke, which is a standard tool within the film industry.

The rig that was used to create this video was Lytro’s first iteration of their Immerge camera. This setup was built from 60 cameras positioned in a staggered hexagonal pattern to form a flat plane, called a “wedge”. The entire array is 0.75 m high and 0.75 m wide, and is connected to a big server rack that processes all of the video feeds. By rotating the rig and shooting the scene five times from five different rotational positions, they created a 360° video scene from essentially 300 camera perspectives. It’s common practice to shoot 360° videos in portions over multiple takes, so that the production crew doesn’t have to hide every time the director yells, “Action!”, so this fits into the current pipeline.
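The arithmetic behind that “300 perspectives” figure is simple enough to sketch. The snippet below is only an illustration using the numbers quoted above; the function names are made up for this example, and the even spacing of the five rig positions around the circle is an assumption, not something Lytro confirmed.

```python
# Figures quoted in the article.
CAMERAS_PER_WEDGE = 60   # cameras in the flat "wedge" array
ROTATIONS = 5            # rotational positions the rig was shot from


def total_perspectives(cameras_per_wedge: int, rotations: int) -> int:
    """Number of camera viewpoints captured across all rig positions."""
    return cameras_per_wedge * rotations


def degrees_per_rotation(rotations: int) -> float:
    """Angular step between rig positions.

    Assumption: the positions are evenly spaced around a full circle.
    """
    return 360.0 / rotations


print(total_perspectives(CAMERAS_PER_WEDGE, ROTATIONS))  # 300
print(degrees_per_rotation(ROTATIONS))                   # 72.0
```

Under that even-spacing assumption, each take would be responsible for a 72° slice of the final 360° scene.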

Due to the nature of lightfield video captured in this way, the volume the viewer can move within is restricted to the physical volume of the camera. In the case of this prototype, the camera offers a sphere about half a meter in diameter, though Milliron tells us that they are expanding that for the next iteration. Hypothetically, one could continue adding more cameras to the rig – increasing its volume potentially limitlessly – something that Milliron says speaks to the rig’s configurability.

Lightfield video is still in its earliest stages, despite the promise this demo showcased. The file sizes for these videos are still astronomical, requiring an entire server cabinet to record. The 30-second clip I was shown was 50 GB on its own, and required an extremely powerful computer to run at 90 fps. Milliron says they plan to optimize the compression for these videos quite a bit (lightfield video is actually highly compressible) and are targeting a 5 GB file size for the same video by the end of the year. That size alone will restrict its ability to scale in the short term, but Lytro says it is a solvable problem.
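To put those file sizes in perspective, here is a rough back-of-the-envelope calculation based only on the figures quoted above. It assumes decimal gigabytes (1 GB = 8 gigabits) and a constant data rate across the clip, neither of which Lytro specified, and the helper names are purely illustrative.

```python
# Figures quoted in the article.
CLIP_SECONDS = 30      # length of the demo clip
CURRENT_SIZE_GB = 50   # size of the clip as shown
TARGET_SIZE_GB = 5     # Lytro's stated target for the same clip


def rate_gb_per_s(size_gb: float, seconds: float) -> float:
    """Average data rate in GB/s, assuming a constant rate over the clip."""
    return size_gb / seconds


def compression_factor(current_gb: float, target_gb: float) -> float:
    """How many times smaller the target file is than the current one."""
    return current_gb / target_gb


current_rate = rate_gb_per_s(CURRENT_SIZE_GB, CLIP_SECONDS)
target_rate = rate_gb_per_s(TARGET_SIZE_GB, CLIP_SECONDS)

print(f"current: {current_rate:.2f} GB/s")            # current: 1.67 GB/s
print(f"target:  {target_rate:.2f} GB/s")             # target:  0.17 GB/s
print(f"target:  {target_rate * 8:.2f} Gbit/s")       # target:  1.33 Gbit/s
print(f"reduction: {compression_factor(CURRENT_SIZE_GB, TARGET_SIZE_GB):.0f}x")  # reduction: 10x
```

Even at the 5 GB target, the clip would average well over a gigabit per second under these assumptions, which is why file size remains a limit on scale in the short term.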

The company is in talks with a number of “major content creators” about applying its technology to their content, with plans to start shooting more within the year. For now, however, this tech demo will remain just that – a tech demo. There is no word yet on when Lytro plans to release the demo to the public; the current plan is to showcase it only at events that the company will announce at a later date.

Note: The feature image is a rendering of Lytro’s Immerge camera, not the final product.

Additional reporting by Will Mason