ARKit is almost here, and new demos of Apple’s Augmented Reality toolset appear every day. The latest comes from Swedish developer ManoMotion: an add-on SDK that lets developers quickly integrate hand-gesture controls into their AR apps.

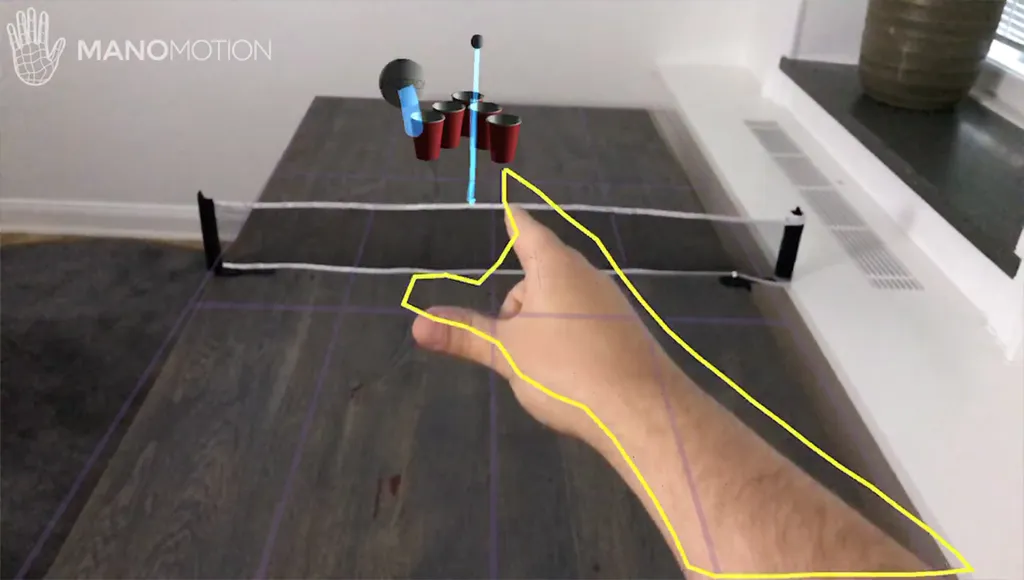

Due out within a few weeks, ManoMotion’s tech uses the iPhone’s camera to provide accurate hand tracking with 27 degrees of freedom. It recognizes a variety of gestures, including swipes, clicking, tapping, grabbing, and releasing. The video accompanying the announcement shows an AR take on beer pong: the developer pinches to summon a ball out of thin air into his hand, then releases to throw it into a set of computer-generated red Solo cups.

The company says the tech adds only minimal battery, CPU, and memory overhead. It also tracks both left and right hands and ships with a set of predefined gestures for developers to integrate into their apps.

For now, the gesture technology remains limited to Apple’s platform, but ManoMotion promises that ARCore integration for Android will arrive in the future. The SDK will roll out for Unity on iOS first, followed by native iOS 11 support at a later date. Interested developers can sign up to view ManoMotion’s documentation, and the company will also support its new SDK via email and its own forums.