Meta researchers have shown an update to their AI-powered full body pose estimation.

Out of the box, current VR systems only track the position of your head and hands. The positions of your elbows, torso, and legs can be estimated using a class of algorithms called inverse kinematics (IK), but this is only sometimes accurate for elbows and rarely correct for legs. There are simply too many possible body poses for any given set of head and hand positions. Given these limitations, some VR apps today show only your hands, and many give you only an upper body.
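To make that ambiguity concrete, here is a minimal sketch of textbook analytic two-bone IK for a single arm. It assumes a known shoulder position and fixed upper-arm and forearm lengths; the function name, bone lengths, and coordinates are hypothetical and chosen only for illustration, and this is not Meta's method. Even with the hand pinned exactly, every "swivel" angle around the shoulder-to-hand axis yields a valid elbow, so the solver has to guess. Legs, anchored only indirectly through the head, are far less constrained still.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def _scale(a, k):
    return tuple(x * k for x in a)


def _norm(a):
    return math.sqrt(sum(x * x for x in a))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def elbow_candidates(shoulder, hand, upper_len, fore_len, n=8):
    """Return n elbow positions that all satisfy the same shoulder and
    hand positions for a two-bone arm (hypothetical helper)."""
    axis = _sub(hand, shoulder)
    d = _norm(axis)
    if d == 0 or d > upper_len + fore_len or d < abs(upper_len - fore_len):
        raise ValueError("hand is unreachable with these bone lengths")
    u = _scale(axis, 1 / d)  # unit vector from shoulder towards hand
    # Law of cosines: distance along the axis to the centre of the elbow circle.
    x = (upper_len ** 2 - fore_len ** 2 + d ** 2) / (2 * d)
    r = math.sqrt(max(upper_len ** 2 - x ** 2, 0.0))  # radius of that circle
    centre = _add(shoulder, _scale(u, x))
    # Two unit vectors spanning the plane perpendicular to the axis.
    helper = (0.0, 0.0, 1.0) if abs(u[2]) < 0.9 else (1.0, 0.0, 0.0)
    v = _cross(u, helper)
    v = _scale(v, 1 / _norm(v))
    w = _cross(u, v)
    # Every swivel angle around the axis gives an equally valid elbow.
    out = []
    for i in range(n):
        theta = 2 * math.pi * i / n
        offset = _add(_scale(v, r * math.cos(theta)), _scale(w, r * math.sin(theta)))
        out.append(_add(centre, offset))
    return out


# Hypothetical example: shoulder at the origin, hand roughly 0.46 m away.
for elbow in elbow_candidates((0.0, 0.0, 0.0), (0.4, -0.2, 0.1), 0.30, 0.28):
    print(tuple(round(c, 3) for c in elbow))
```

All eight printed elbows keep both bone lengths and the hand position exactly, yet they trace a circle of quite different arm poses, which is why IK alone so often looks wrong.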

Smartphone apps can now provide VR body tracking using computer vision, but this requires mounting your phone in a corner of the room, and it only works properly when you're facing it. PC headsets using SteamVR tracking support higher-quality body tracking via extra worn trackers such as HTC’s Vive Tracker, but buying enough of them for body tracking costs hundreds of dollars, and each one has to be charged.

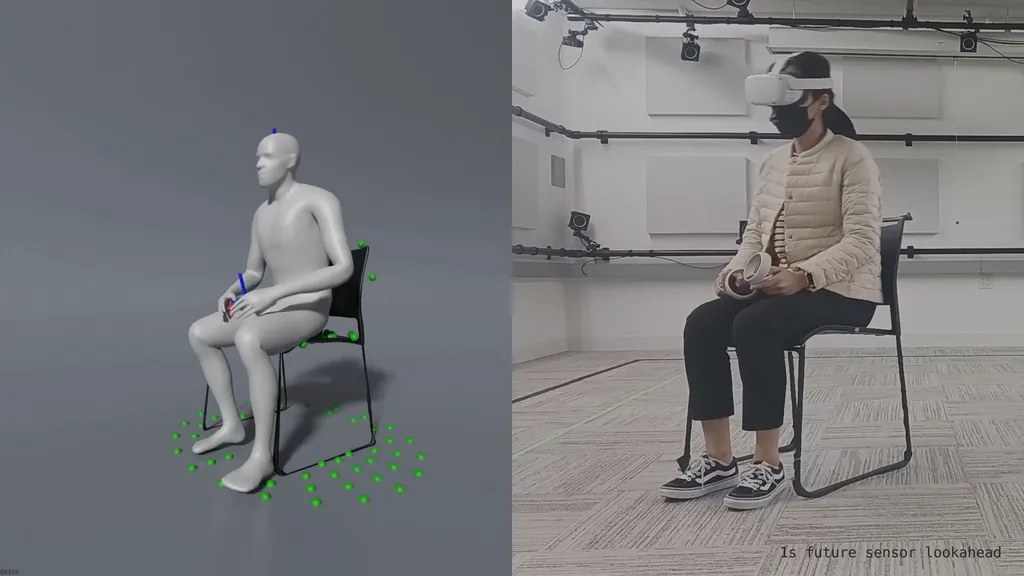

Last year, three Meta researchers showed off QuestSim, a reinforcement learning model that can estimate a plausible full body pose using only the tracking data from Quest 2 and its controllers. No extra trackers or external sensors are needed, and the resulting avatar motion matches the user’s real motion fairly closely. The researchers even claimed its accuracy and jitter were superior to those of worn IMU trackers, devices with only an accelerometer and gyroscope, such as Sony's Mocopi.

But one edge case where QuestSim failed is when the user interacts with the real world, such as sitting down on a chair or couch. Handling this, especially the transition between sitting and standing, is crucial for plausible full bodies in social VR.

In a new paper called QuestEnvSim, the same three researchers and two others present an updated set of models, still using the same reinforcement learning approach, that take furniture and other objects in the environment into account:

As you can see in the video, the results are fairly impressive. However, don't expect QuestEnvSim to be running on your Quest 2 any time soon. As with the original QuestSim, there are several important caveats.

Firstly, the paper doesn’t mention the runtime performance of the system it describes. Systems in machine learning research papers tend to run on powerful PC GPUs, often at relatively low framerates, so it may be years before this can run performantly in real time.

Secondly, the sparse depth map of furniture and objects was manually positioned in the virtual environment. While Quest 3 may be capable of automatically scanning in furniture via its depth sensor, Quest 2 and Quest Pro lack that crucial sensor data.

Finally, these systems are designed to produce a plausible overall full body pose, not to match the exact position of your hands. The system’s latency is also equivalent to many frames in VR. These approaches therefore wouldn't work well for looking down at your own body in VR, even if they could run in real time.
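One way to see why latency matters is to convert it into displayed frames. Quest 2 can run at 72, 90, or 120 Hz, so each frame lasts only about 8 to 14 ms. The small sketch below does that conversion; the 100 ms latency it uses is a hypothetical value for illustration only, not a figure reported by the researchers.

```python
def frame_budget_ms(refresh_hz):
    """Time available to render one frame at a given display refresh rate."""
    return 1000.0 / refresh_hz


def latency_in_frames(latency_ms, refresh_hz):
    """How many displayed frames elapse during a given latency."""
    return latency_ms / frame_budget_ms(refresh_hz)


# Hypothetical latency value, for illustration only (not from the paper).
EXAMPLE_LATENCY_MS = 100.0

for hz in (72, 90, 120):
    print(f"{hz} Hz: {frame_budget_ms(hz):.1f} ms per frame, "
          f"{EXAMPLE_LATENCY_MS:.0f} ms latency = "
          f"{latency_in_frames(EXAMPLE_LATENCY_MS, hz):.1f} frames")
```

At higher refresh rates the same delay spans more frames, which is why even modest lag becomes noticeable when you look directly at your own virtual body.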

Still, assuming the system could eventually be optimized, seeing the full body motion of other people’s avatars would be far preferable to the often-criticized legless upper bodies of Meta’s current avatars. Last year, Meta CTO Andrew Bosworth seemed to hint that this would be Meta's eventual approach. When asked about leg tracking in an Instagram "ask me anything" session, Bosworth responded:

“Having legs on your own avatar that don’t match your real legs is very disconcerting to people. But of course we can put legs on other people, that you can see, and it doesn’t bother you at all.

So we are working on legs that look natural to somebody who is a bystander – because they don’t know how your real legs are actually positioned – but probably you when you look at your own legs will continue to see nothing. That’s our current strategy.”