Meta researchers built a custom chip to make photoreal avatars possible on standalone headsets.

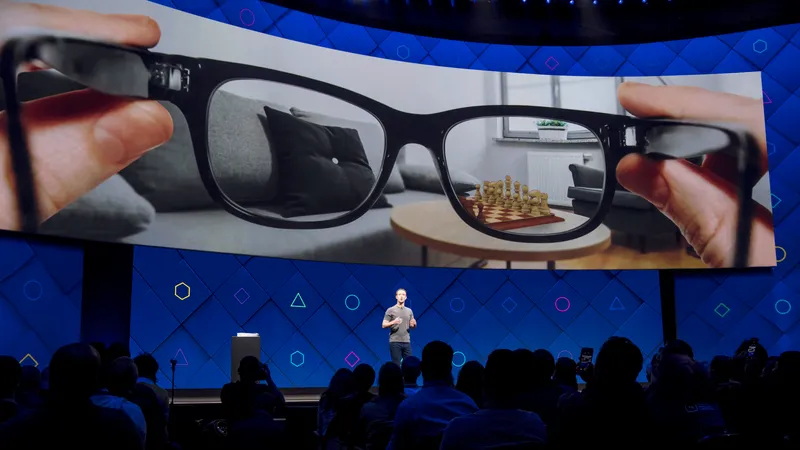

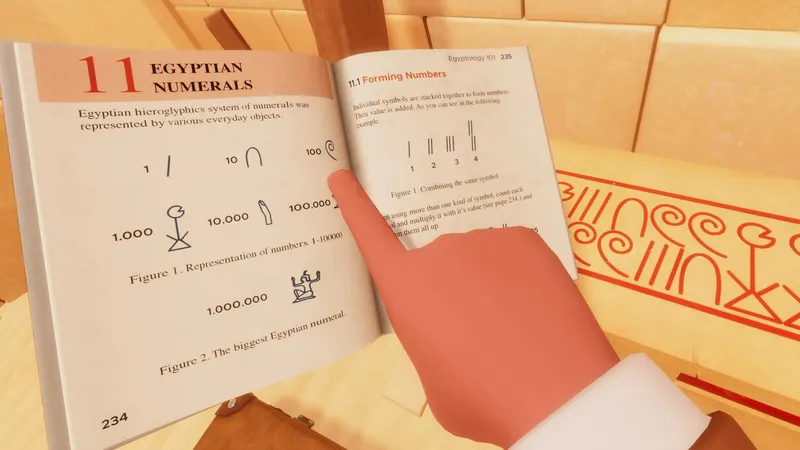

Facebook first showed off work on ‘Codec Avatars’ back in March 2019. Powered by multiple neural networks, the avatars are generated using a specialized capture rig with 171 cameras. Once generated, they can be driven in real time by a prototype VR headset with five cameras: two internal ones, each viewing one eye, and three external ones viewing the lower face. Since then the researchers have shown off several evolutions of the system, such as more realistic eyes, a version requiring only eye tracking and microphone input, and most recently a 2.0 version that approaches complete realism.

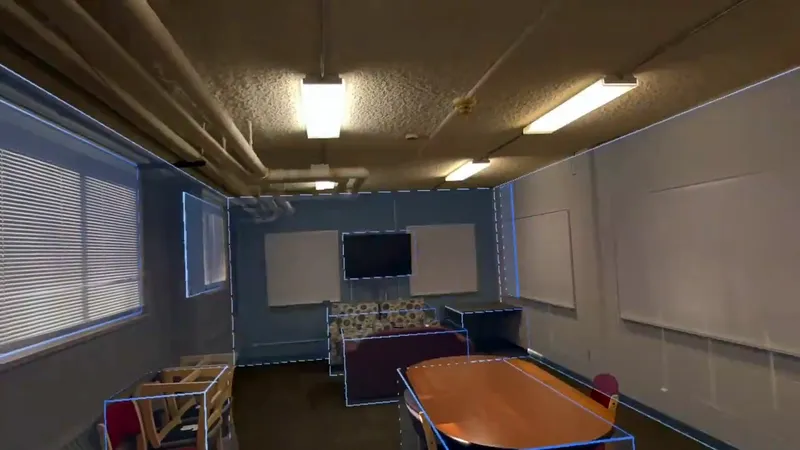

But driving photorealistic avatars on the mobile chipsets used in standalone headsets like Quest is no easy feat. Meta’s newest and most efficient model can render (decode) only five avatars at 50 frames per second on a Quest 2, and even that figure doesn’t include the other side of the equation: converting (encoding) the eye tracking and face tracking inputs into a Codec Avatar face state.
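The 50 frames per second figure implies a tight time budget. The back-of-the-envelope sketch below turns it into milliseconds, assuming (hypothetically, for illustration only) that the five avatars are decoded one after another within each frame and that nothing else competes for that time. The function names are made up for this example.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def per_avatar_budget_ms(fps: float, avatars: int) -> float:
    """Average decode time per avatar, ASSUMING (hypothetically) that all
    avatars are decoded sequentially inside a single frame."""
    return frame_budget_ms(fps) / avatars


fps = 50      # decode rate quoted for Quest 2
avatars = 5   # number of avatars decoded at that rate

print(frame_budget_ms(fps))                # 20.0 ms per frame
print(per_avatar_budget_ms(fps, avatars))  # 4.0 ms per avatar on average
print(fps * avatars)                       # 250 avatar decodes per second
```

If encoding the tracking inputs ran on the same chipset, it would have to squeeze into that same 20 ms window alongside decoding, which is the kind of pressure a dedicated accelerator is meant to relieve.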

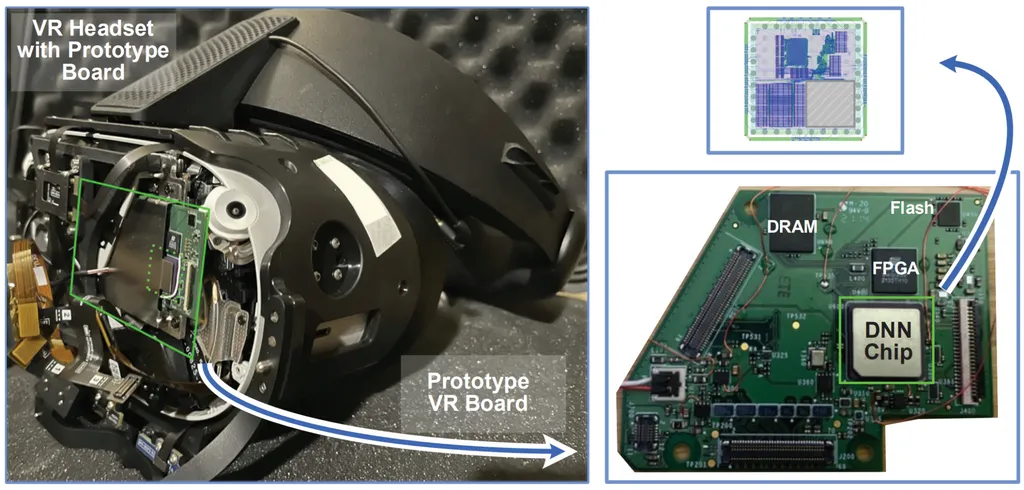

In a paper published for the 2022 IEEE Custom Integrated Circuits Conference, Meta researchers present a prototype of a neural network accelerator chip “that achieves energy efficient performance for running eye gaze extraction of the Codec Avatar model”.

Transferring data between components on mobile devices requires significant energy. To minimize this, the custom chip sits near the eye tracking camera inputs on the main board and handles converting those camera inputs into a Codec Avatar state. The researchers point out this could also benefit privacy and security, since the general-purpose processors would never have access to the raw camera data.

To be clear, this chip is just an experiment. It handles only one small part of the Codec Avatars workload; it’s not a general solution for making the system viable on near-term standalone headsets. The regular chipset (e.g. Snapdragon XR2) still handles all other tasks.

What this demonstrates is the viability of using custom silicon to solve problems like avatars. In theory, the idea could be dramatically expanded in the future to handle more VR-specific tasks.