Meta is reportedly downgrading key specs of its in-development AR glasses to achieve a lower cost.

Meta has been working on AR glasses for eight years now, spending tens of billions of dollars on what Mark Zuckerberg hopes will one day be an iPhone moment.

Last year The Verge's Alex Heath reported that Meta no longer plans to release its first AR glasses, codenamed Orion, to consumers. Instead, it will reportedly distribute them to select developers in 2024 and also use them as a demonstration of the future of AR.

A report today from The Information's Wayne Ma echoes Heath's reporting on Orion. But Ma also makes specific claims that the first AR glasses Meta plans to sell to the public, starting in 2027, will have downgraded specs and features compared to Orion.

These downgrades reflect the wider industry's struggle to bring transparent AR glasses out of the realm of science fiction and into real products. Apple reportedly postponed its full AR glasses “indefinitely” earlier this year, and Google reportedly killed its internal glasses project this year in favor of making software for third parties.

MicroLED Downgraded To LCoS

The Orion developer/demonstration glasses will reportedly use microLED displays from Plessey, the supplier whose entire future output Facebook secured in 2019.

MicroLED is a truly new display technology, not a variant of LCD like “Mini LED” or “QLED”. Like OLED, microLED is self-emissive, meaning each pixel emits its own colored light and no backlight is needed, but it is more power efficient and can reach much higher brightness. This makes microLED uniquely suited to consumer AR glasses, which need to be usable even on sunny days while running on a small, light battery. While most major electronics companies, including Samsung, Sony, and Apple, are actively researching microLED, no company has yet figured out how to affordably mass manufacture it for consumer products.

But Ma reports that "Plessey’s technology has stalled", with the companies unable to make the microLED displays bright enough and struggling with low yields caused by manufacturing defects. So the consumer AR glasses will reportedly use LCoS instead.

LCoS (liquid crystal on silicon) displays are essentially LCD microdisplays, but they form an image by reflecting light rather than transmitting it. LCoS isn't a new technology; it has been used in movie projectors since the 90s.

LCoS microdisplays were used in HoloLens 1 and are used today in Magic Leap 2. They are less power efficient and less bright than microLED has the potential to be, but much less expensive in the short term.

Field Of View Reduced

The Orion developer/demonstration glasses will reportedly use a silicon carbide waveguide, providing a larger field of view than the glass waveguides used in existing transparent AR systems.

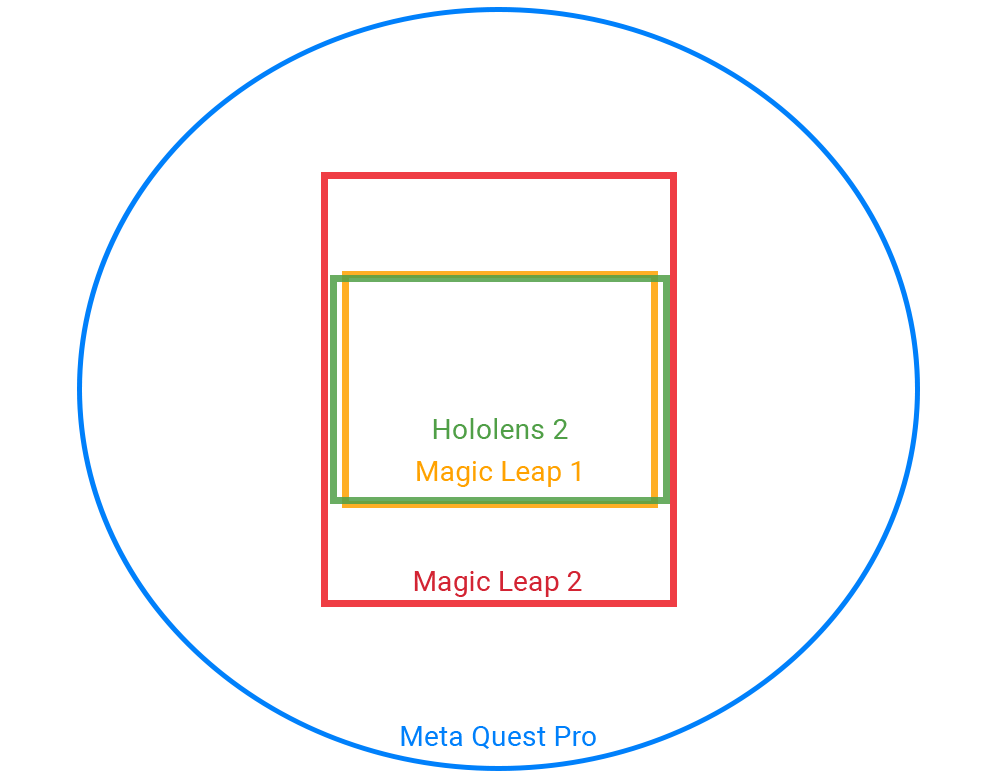

Ma reports Orion will have a field of view of around 70° diagonal, slightly larger than the 66° of the bulkier Magic Leap 2 and much larger than the 52° of HoloLens 2 and Nreal Light.

But silicon carbide waveguides are incredibly expensive, so Meta's consumer AR glasses will reportedly use glass waveguides instead and have a field of view of only around 50° diagonal, similar to HoloLens 2 and Nreal Light.

We harshly criticized the field of view of both HoloLens 2 and Nreal Light in our reviews of each product.

For comparison, opaque headsets that use camera passthrough have fields of view well in excess of 100° diagonal.

Compute Puck Features Cut

Ma also reports that Meta is cutting features from the oval-shaped wireless puck that handles most of the computing tasks.

The puck will still reportedly feature a 5G modem for connectivity and a touchpad for input, as well as a Qualcomm chipset for compute and a full-color camera.

But earlier prototypes of the puck reportedly also included a LiDAR depth sensor and a "projector for displaying images on surfaces", which Ma says have been cut from the final design, potentially for cost reasons.

Earlier reports suggested Meta's AR glasses will ship with a neural input wristband based on technology from CTRL-Labs, the startup Facebook acquired in 2019. The wristband reads the neural signals passing through your arm from your brain to your fingers, so it could sense incredibly subtle finger movements that people nearby couldn't clearly perceive. Ma's report makes no mention of the wristband, though.

Cheaper Non-AR Glasses Coming Sooner

Ray-Ban Stories shipped in 2021 as the result of a collaboration between Meta and Luxottica. The current Stories are essentially camera glasses for taking hands-free, first-person photos and videos. They also have speakers and a microphone for music and phone calls, but no display of any sort. Snapchat has been selling successive generations of a similar product, Spectacles, since 2017.

In a March roadmap presentation leaked to The Verge, Meta’s VP of AR Alex Himel reportedly told staff the company plans to ship a third generation of Ray-Ban Stories in 2025 with a heads-up display (HUD) and the neural wristband.

Called the “viewfinder”, this heads-up display will reportedly be used to show notifications and translate real-world text in real time.

To be clear: this wouldn’t be true AR, just a small floating contextual display. But smart glasses like this will be possible and affordable long before AR glasses, and their use cases shouldn't be as heavily affected by the limitations of near-term hardware.