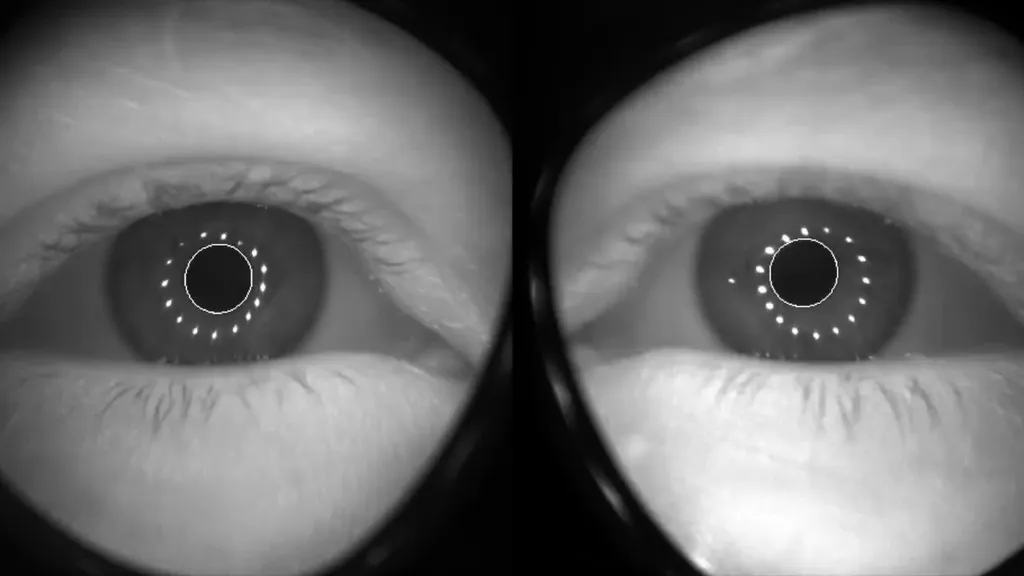

Meta claims it improved the accuracy of eye tracking on Quest Pro.

The extended release notes for the version 55 Quest software update read:

"We’ve improved eye tracking on Quest Pro to make it more accurate across a broader field of view and during expressions like winking."

Quest Pro was the first consumer standalone VR headset to ship with integrated eye tracking. But unlike Apple Vision Pro, which will use eye tracking to target interface elements as part of its primary input system, eye tracking currently plays a far less important role on Meta's headset.

Eye tracking isn't even enabled by default on Quest Pro, a choice Meta made to signal that it cares about privacy. If you do enable it, the system uses it to guide you in setting the correct lens separation for your interpupillary distance (IPD) and positioning the headset properly on your head.

Apps can also use eye tracking via Meta's SDK. The most common use case is to control the eye movements of virtual avatars in social applications, such as Meta's Horizon Worlds and Horizon Workrooms.

It can also be used for eye-tracked foveated rendering. If you're not familiar with the term, it's a technique where only the region of the display you're currently looking at is rendered at full resolution; rendering the rest at lower resolution frees up performance. That extra performance can be used to improve the graphical fidelity of apps, or simply to render at a higher resolution.
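To make the idea concrete, here is a minimal sketch of how a renderer might pick a resolution scale for each screen tile based on its angular distance from the gaze point. It is not Meta's implementation or an API from Meta's SDK: the tile grid, the thresholds `FOVEA_DEG` and `MID_DEG`, and the functions `resolution_scale` and `pixel_cost` are all hypothetical, and a real renderer would make this decision on the GPU rather than in Python.

```python
import math

# Hypothetical thresholds, in degrees of visual angle from the gaze point.
FOVEA_DEG = 10.0
MID_DEG = 25.0

def resolution_scale(tile_center_deg, gaze_deg):
    """Return the fraction of full resolution to render a tile at.

    Both arguments are (horizontal, vertical) angles in degrees,
    measured from the center of the display.
    """
    dx = tile_center_deg[0] - gaze_deg[0]
    dy = tile_center_deg[1] - gaze_deg[1]
    eccentricity = math.hypot(dx, dy)  # approximate angular distance from gaze
    if eccentricity <= FOVEA_DEG:
        return 1.0   # full resolution where the user is looking
    if eccentricity <= MID_DEG:
        return 0.5   # half resolution in the near periphery
    return 0.25      # quarter resolution in the far periphery

def pixel_cost(tiles, gaze_deg, full_pixels_per_tile):
    """Total pixels shaded across all tiles for a given gaze direction."""
    total = 0.0
    for tile in tiles:
        scale = resolution_scale(tile, gaze_deg)
        # Scaling both axes by `scale` shades scale**2 of the tile's pixels.
        total += full_pixels_per_tile * scale ** 2
    return total

# Hypothetical 9x9 grid of tiles spanning -40 to +40 degrees on each axis.
tiles = [(x, y) for x in range(-40, 41, 10) for y in range(-40, 41, 10)]
full = len(tiles) * 1000
foveated = pixel_cost(tiles, (0.0, 0.0), 1000)
print(f"{foveated / full:.0%} of full-resolution pixel work")
```

With the gaze at the center of this made-up grid, the sketch shades about 16% of the pixels a full-resolution frame would. Changing `gaze_deg` moves the full-resolution region along with the eyes, which is why this technique depends on the eye tracker knowing accurately where you are looking.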

Core tech updates like this are generally months or years in the making, but it's still notable that Meta would release an improvement to its headset's eye tracking accuracy just two weeks after Apple revealed Vision Pro. Could Meta be planning to experiment with eye tracking for interaction too?

Since Quest 3 doesn't have eye tracking and the direct successor to Quest Pro was reportedly canceled, Meta may not want to spend time building an interaction system that only a tiny fraction of the Quest user base could use.