Microsoft Research, in partnership with Cornell University, has developed a range of techniques to make virtual reality accessible to the visually impaired.

VR is a heavily visual medium. Most VR apps and games assume the user has full visual ability. But just as in real life, some users of virtual environments are visually impaired. In the real world a range of measures is taken to accommodate such users, but in VR little comparable effort has been made so far.

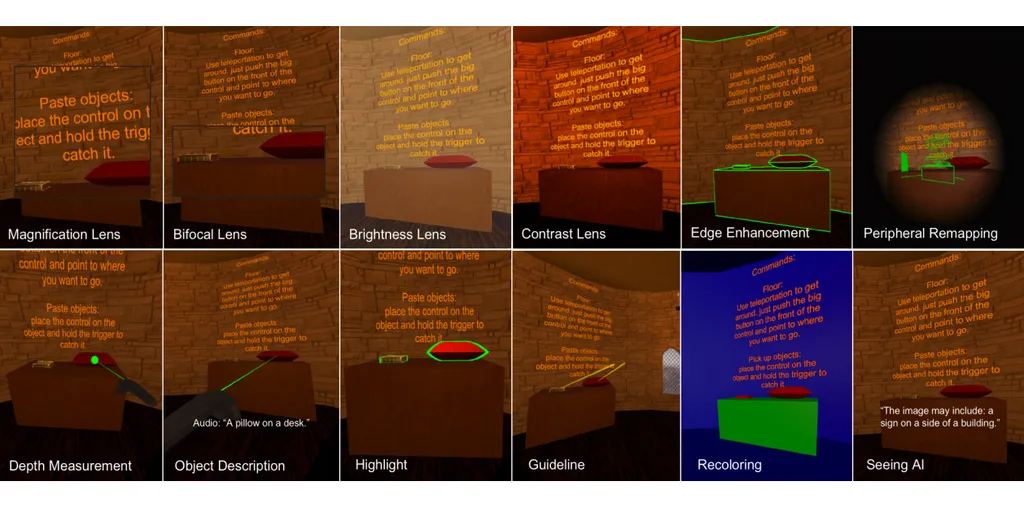

The researchers came up with 14 specific tools to tackle this problem. They are delivered as engine plugins for Unity. Of these tools, 9 do not require specific developer effort. For the remaining 5, the developer of each app needs to undertake some effort to support them.

It’s estimated that around 200 million people worldwide are visually impaired. If Microsoft releases these tools as engine plugins, it could make a huge difference to these users’ ability to use virtual reality. For VR to succeed as a medium it must accommodate everyone.

Automatic Tools

Magnification Lens: Mimicking the most common Windows OS visual accessibility tool, the magnification lens magnifies around half of the user’s field of view by 10x.

Bifocal Lens: Much the same as bifocal glasses in the real world, this tool adds a smaller but persistent magnification near the bottom of the user’s vision. This allows for constant spatial awareness while still enabling reading at a distance.

Brightness Lens: People differ in their sensitivity to brightness, so this tool lets the user adjust the brightness of the image anywhere from 50% to 500% to make out details.
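
To make the idea concrete, here is a minimal sketch of how such a brightness adjustment could work on a single pixel. It is not the researchers' Unity implementation; the function name `apply_brightness` and the simple per-channel scaling are assumptions made for illustration. Only the 50% to 500% range comes from the description above.

```python
def apply_brightness(pixel, percent):
    """Scale an RGB pixel (floats in 0..1) by a brightness percentage.

    Hypothetical helper for illustration only; the real tool runs
    inside a game engine and may use a different formula.
    """
    # Clamp the setting to the 50%-500% range described in the article.
    percent = max(50.0, min(500.0, percent))
    gain = percent / 100.0
    # Scale each channel, then clip so the result stays displayable.
    return tuple(min(1.0, channel * gain) for channel in pixel)


if __name__ == "__main__":
    # A dark grey pixel brightened to 300%.
    print(apply_brightness((0.1, 0.1, 0.1), 300))
```

Clipping at 1.0 means very bright areas lose detail as the setting goes up, which is the usual trade-off of a simple gain like this one.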

Contrast Lens: Similar to the Brightness Lens, this tool lets the user modify the contrast so that low contrast details can be made out. It is an adjustable scale from 1 to 10.
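
A contrast adjustment can be sketched in a similar way: push each colour channel away from mid-grey by some factor. The article only says the scale runs from 1 to 10, so the mapping used here (level 1 leaves the image unchanged, level 10 multiplies contrast by ten) is an assumption, as is the name `apply_contrast`.

```python
def apply_contrast(pixel, level):
    """Stretch an RGB pixel (floats in 0..1) away from mid-grey.

    Hypothetical helper: the 1-10 scale is from the article, but the
    choice of using the level directly as the contrast factor is an
    assumption made for this sketch.
    """
    # Keep the setting inside the 1-10 scale.
    level = max(1, min(10, level))
    factor = float(level)
    # Move each channel away from 0.5 and clip to the valid range.
    return tuple(
        max(0.0, min(1.0, (channel - 0.5) * factor + 0.5))
        for channel in pixel
    )


if __name__ == "__main__":
    # Two similar greys become clearly different at level 4.
    print(apply_contrast((0.45, 0.45, 0.45), 4))
    print(apply_contrast((0.55, 0.55, 0.55), 4))
```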

Edge Enhancement: A more sophisticated way to achieve the goal of the Contrast Lens, this tool detects visible edges based on depth and outlines them.
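
Depth-based edge detection can be illustrated with a small sketch: wherever the distance to the scene jumps sharply between neighbouring pixels, there is probably an object boundary. The function name `depth_edges`, the neighbour comparison, and the threshold value are all assumptions for illustration; the researchers' actual detector is not described here.

```python
def depth_edges(depth, threshold=0.5):
    """Mark pixels where depth jumps sharply to the right or below.

    `depth` is a 2D list of distances (for example, in metres).
    Hypothetical sketch; the threshold of 0.5 is an arbitrary choice.
    """
    rows, cols = len(depth), len(depth[0])
    edges = [[False] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            # Compare with the right-hand neighbour, if there is one.
            if x + 1 < cols and abs(depth[y][x] - depth[y][x + 1]) > threshold:
                edges[y][x] = True
            # Compare with the neighbour below, if there is one.
            if y + 1 < rows and abs(depth[y][x] - depth[y + 1][x]) > threshold:
                edges[y][x] = True
    return edges


if __name__ == "__main__":
    # A near object (1 m) in front of a far wall (5 m).
    scene = [
        [5.0, 5.0, 5.0, 5.0],
        [5.0, 1.0, 1.0, 5.0],
        [5.0, 1.0, 1.0, 5.0],
    ]
    for row in depth_edges(scene):
        print("".join("#" if edge else "." for edge in row))
```

Because it relies on depth rather than colour, an approach like this can outline an object even when it is almost the same colour as its background.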

Peripheral Remapping: This tool is for people without peripheral vision. It uses the same edge detection technique as Edge Enhancement but shows the edges as an overlay in the center of the user’s field of view, giving them spatial awareness.

Text Augmentation: This tool automatically changes all text to white or black (whichever is most appropriate) and changes the font to Arial. The researchers claim Arial is proven to be more readable. The user can also change the text to bold or increase the size.
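
The article does not say how the tool decides between white and black. One plausible approach, sketched below purely as an assumption, is to estimate how bright the background behind the text is and pick the opposite. The name `pick_text_color` and the 0.5 cut-off are hypothetical; the channel weights are the standard Rec. 709 luma coefficients, applied directly to the colour values as a simplification.

```python
def pick_text_color(background):
    """Return "black" or "white" for text on an RGB background (0..1).

    Hypothetical helper: this is one common heuristic, not necessarily
    the method the researchers use.
    """
    r, g, b = background
    # Weighted brightness using Rec. 709 coefficients (simplified:
    # applied without converting to linear light first).
    luminance = 0.2126 * r + 0.7152 * g + 0.0722 * b
    # Bright backgrounds get black text, dark ones get white text.
    return "black" if luminance > 0.5 else "white"


if __name__ == "__main__":
    print(pick_text_color((1.0, 1.0, 0.0)))  # yellow sign
    print(pick_text_color((0.0, 0.0, 1.0)))  # blue panel
```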

Text to Speech: This tool gives the user a virtual laser pointer. Whichever text they point at will be read aloud using speech synthesis technology.

Depth Measurement: For people with depth perception issues, this tool adds a ball to the end of the laser pointer, which lets them easily see the distance they are pointing to.

Tools Requiring Developer Effort

Object Recognition: Just like “alt text” on images on the 2D web, this tool reads aloud the description of virtual objects the user is pointing at (using speech synthesis).

Highlight: Users with vision issues may struggle to find the relevant objects in a game scene. By outlining those objects in the same way as Edge Enhancement, this tool helps those users find their way in games.

Guideline: This tool works alongside Highlight. When the user isn’t looking at the relevant objects, Guideline draws arrows pointing towards them.
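
The logic behind Guideline can be sketched as an angle check: if the target lies outside a cone around the direction the user is looking, show an arrow towards it. Everything in this sketch is an assumption for illustration, including the name `guideline_hint`, the 30 degree cone, the left/right-only arrows, and the Unity-style axes (x to the right, y up, z forward).

```python
import math


def guideline_hint(head_pos, gaze_dir, target_pos, cone_deg=30.0):
    """Return None if the target is in view, else "left" or "right".

    Hypothetical sketch using only the horizontal plane (x and z).
    Assumes x points right, y up, and z forward.
    """
    # Vector from the user's head to the target.
    tx = target_pos[0] - head_pos[0]
    tz = target_pos[2] - head_pos[2]
    gx, gz = gaze_dir[0], gaze_dir[2]
    # Signed horizontal angle from gaze to target; positive is right.
    angle = math.degrees(math.atan2(gz * tx - gx * tz, gx * tx + gz * tz))
    if abs(angle) <= cone_deg / 2:
        return None  # Target is roughly straight ahead: no arrow.
    return "right" if angle > 0 else "left"


if __name__ == "__main__":
    head = (0.0, 1.7, 0.0)
    looking_forward = (0.0, 0.0, 1.0)
    print(guideline_hint(head, looking_forward, (3.0, 1.0, 0.5)))
```

A full implementation would also need to handle targets above or below the user, which this horizontal-only version ignores.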

Recoloring: For users with very serious vision problems, this tool recolors the entire scene to simple colors.