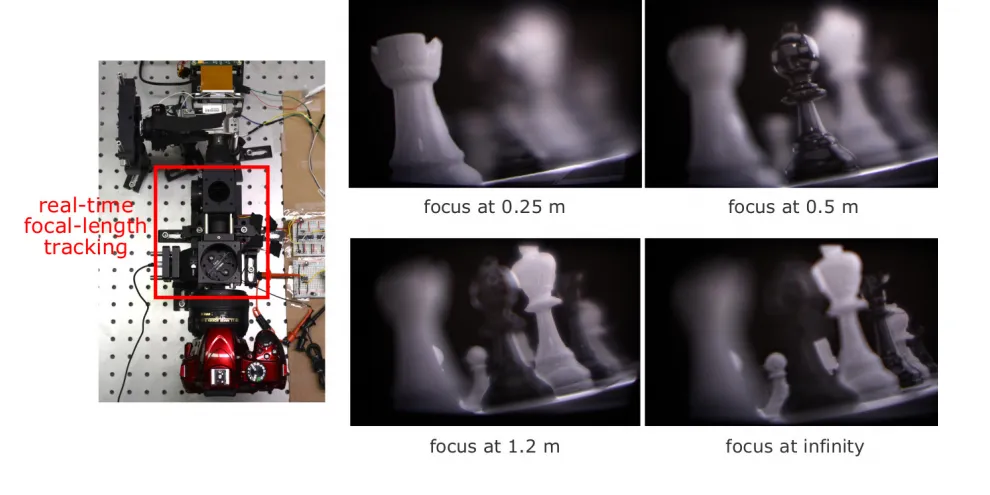

Researchers from Carnegie Mellon University built a multifocal display with 40 unique planes. The system involves a 1600Hz screen and a focus-tunable lens.

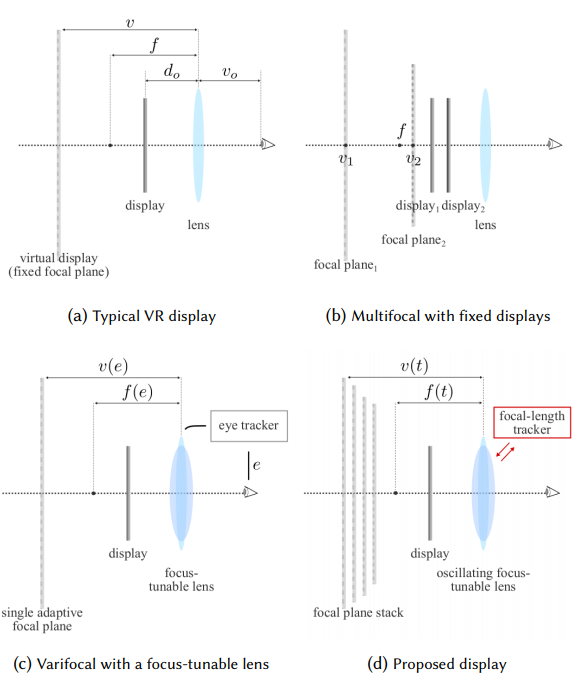

All VR headsets on the market today are fixed focus. Each eye is given a separate image, but the screen is focused at a fixed distance from the lenses. This means that your eyes point (verge) at the virtual distance of whatever you’re looking at, but focus (accommodate) at the fixed focal distance of the display. This is called the vergence-accommodation conflict. It causes eye strain and headaches, and it also makes near objects look blurry.

Image from Oculus Research
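To put a rough number on the conflict, the gap between where the eyes converge and where they focus can be expressed in diopters (1 divided by the distance in metres). The sketch below is only an illustration: the 2 m display focus is a hypothetical value chosen for the example, not a figure from any particular headset, and the function names are made up here.

```python
def diopters(distance_m: float) -> float:
    """Optical power needed to focus at a given distance, in diopters (1 / metres)."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m


def va_mismatch(virtual_distance_m: float, display_focus_m: float) -> float:
    """Absolute gap, in diopters, between vergence distance and focus distance."""
    return abs(diopters(virtual_distance_m) - diopters(display_focus_m))


# Hypothetical fixed focus distance, assumed for illustration only.
ASSUMED_DISPLAY_FOCUS_M = 2.0

near_mismatch = va_mismatch(0.5, ASSUMED_DISPLAY_FOCUS_M)   # object held close
far_mismatch = va_mismatch(10.0, ASSUMED_DISPLAY_FOCUS_M)   # object across the room

print(f"near object: {near_mismatch:.2f} D, far object: {far_mismatch:.2f} D")
```

Under that assumption the mismatch is much larger for the near object than for the far one, which fits the point above that near objects are the ones that suffer.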

One approach to solving this is to build a headset with multiple layered screens, each at a different focal distance. This is called a multifocal display. The problem, however, is that the researchers say 41 focal planes would be needed to truly solve the vergence-accommodation conflict. If this were done in hardware, with one screen per plane, it would increase cost and weight to the point of impracticality.

Their new multifocal display instead uses a lens which adapts its focus based on the voltage it receives. This is known as a focus-tunable or “liquid” lens. A single display panel is run at 1600Hz, and the lens is cycled through its full range of focus at 40Hz. As the focus changes, the image on the display is changed to show what should be seen at the new focal distance. Thus the virtual world runs at 40FPS, and each frame is displayed at 40 different focal distances.

Diagram from Carnegie Mellon paper
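The timing described above can be sketched in a few lines. The 1600Hz and 40Hz figures come from the article; everything else is an assumption made for illustration, including the 0 to 4 diopter focus range, the even spacing of planes, and the one-directional sweep. The real system's lens drive and plane spacing may differ.

```python
PANEL_HZ = 1600  # display refresh rate, from the article
SWEEP_HZ = 40    # lens focus sweeps per second, from the article


def planes_per_sweep(panel_hz: int, sweep_hz: int) -> int:
    """Number of display refreshes, and so focal planes, in one lens sweep."""
    if panel_hz % sweep_hz != 0:
        raise ValueError("panel rate must be a whole multiple of the sweep rate")
    return panel_hz // sweep_hz


def sweep_schedule(panel_hz: int, sweep_hz: int,
                   far_diopters: float = 0.0, near_diopters: float = 4.0):
    """Return (time offset in seconds, focus in diopters) for each plane in a sweep.

    The focus range and the linear spacing are assumptions, not values from the paper.
    """
    count = planes_per_sweep(panel_hz, sweep_hz)
    slot_s = 1.0 / panel_hz                              # time each plane is shown
    step = (near_diopters - far_diopters) / (count - 1)  # diopters between planes
    return [(i * slot_s, far_diopters + i * step) for i in range(count)]


schedule = sweep_schedule(PANEL_HZ, SWEEP_HZ)
print(len(schedule), "planes per sweep")
```

Each entry pairs a moment within the sweep with the focus the lens is assumed to have at that moment, which is the image the panel would need to show in that slot.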

Prospects And Limitations

Unlike varifocal displays, this 40-plane multifocal display doesn’t use or require eye tracking. Additionally, since each focal plane is rendered independently, it doesn’t require the resource-intensive approximation of natural blur to look real.

The requirement to render 1600 frames per second is the main limitation of this approach. Each group of 40 is just a set of different planes of the same frame, of course, so it’s not quite as bad as 1600 true frames. Facebook are hard at work on reducing the GPU requirements of their varifocal blur, so it will be interesting to see which becomes practical for the consumer market first.
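For a sense of scale, the arithmetic behind that rendering load is worked through below. The rates are the ones quoted in the article; the function name and the returned fields are hypothetical, and the figures are simple time budgets rather than measurements of any real GPU workload.

```python
def render_budget(panel_hz: int, scene_fps: int) -> dict:
    """Time budgets implied by a panel refresh rate and a scene update rate."""
    return {
        "plane_images_per_second": panel_hz,
        "planes_per_scene_frame": panel_hz // scene_fps,
        "ms_per_plane_image": 1000 / panel_hz,   # time to produce one focal plane
        "ms_per_scene_frame": 1000 / scene_fps,  # time for all planes of one frame
    }


budget = render_budget(panel_hz=1600, scene_fps=40)
for name, value in budget.items():
    print(f"{name}: {value}")
```

With these rates, each plane image has well under a millisecond, while the scene itself only needs to be updated once every 25 milliseconds.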