A new demo made by Daniel Beauchamp allows for greater social expression in VR by bringing the tracked hands of people wearing Oculus Quest headsets into the same virtual room.

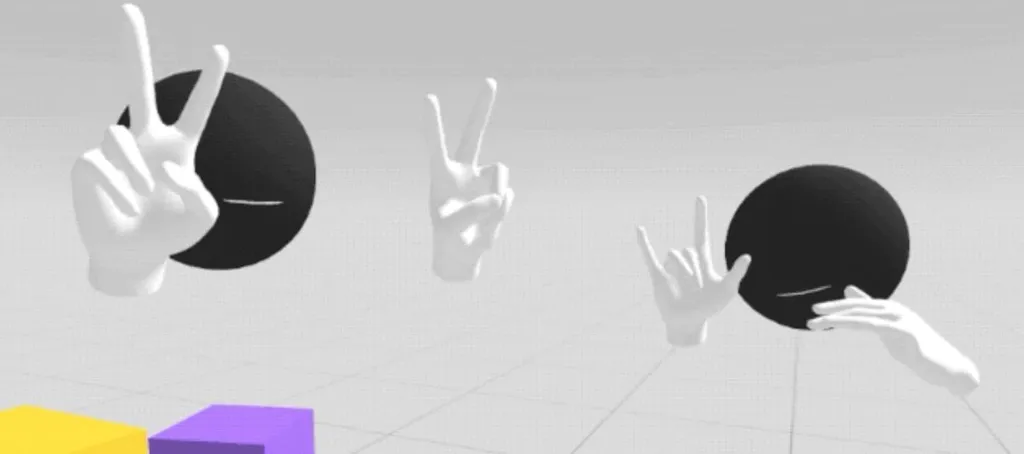

I recently met with Beauchamp (aka viral sensation @pushmatrix) in an otherwise empty virtual world he made with a new version of the Normcore networking software development kit. We had some of the simplest avatars possible: a sphere for a head and glove-like hands. The resulting interaction between me in Southern California and Beauchamp in Toronto pointed to a clear future for VR interactions that are much easier to grasp than those funneled through tracked controllers.

Look Ma, No Controllers

The sideloaded software bypasses an Oculus Store restriction under which apps show your hands only when the tracking system is confident about their shape and position. In other words, Beauchamp's software showed me my hands even when the Quest headset wasn't absolutely certain of their position or shape. When tracked movements rendered in VR don't closely match those of your physical body, the result can be confusion and, in some cases, nausea. This is likely part of the reason Half-Life: Alyx doesn't show players their elbows, and Facebook's earliest VR avatar systems leave out the same details. Software built for Oculus Touch or Valve Index controllers can safely assume your hands are gripping the tracked controllers, but inferring much beyond that risks a mismatch and discomfort. As a result, it's generally (but not always) more comfortable to show users their bodies only when what they're seeing makes sense to them.

The current Oculus Quest hand tracking system, then, strains a bit during complex interactions in VR, like those seen in Waltz of the Wizard and The Curious Tale of the Stolen Pets. This may change with future VR hardware, but the current Quest's camera system relies on ambient light to see the shape of your hand and can only sample the environment at a limited rate to determine its position. Future headsets might emit an infrared pattern to identify hand movements, or feature cameras that sample the environment at a higher rate, to provide more robust hand tracking.

Defying Distance

In the case of Beauchamp's demonstration, I was more focused on expressing myself and carrying on a conversation with him than, say, interacting with a menu or grabbing virtual objects by pinching them. And I found the demonstration simply astounding: the clearest example yet of just how compelling future VR interactions could be when natural hand gestures are used to emphasize what you're saying or convey personality. It remains difficult, even in 2020, for some people to understand exactly how VR works to "defy distance," and Beauchamp's software starts to make that end goal clear to a broader audience.

Facebook started accepting Oculus Store submissions for hand tracking apps just last month, and Quest's latest software improves tracking considerably compared with earlier versions. We know the company is investigating methods for typing quickly in VR, and both future hardware and further software updates could make the technology better still.