Newport Beach-based NextVR will start using its cameras to capture reality in a way that lets viewers in VR move around the scene in any direction.

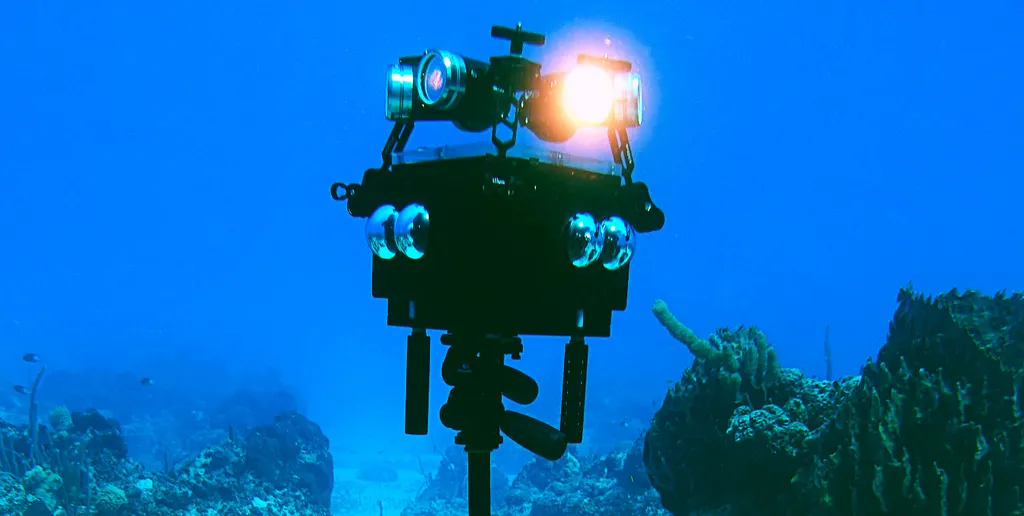

NextVR was one of the early startups developing camera rigs and streaming technology to capture reality and deliver it live to VR headsets anywhere in the world. Where others struggled with a number of quality-control issues, NextVR captured a picture that was both crisp and stereoscopic, so you could look around and people or objects seemed to stand out in 3D, just as they do in the real world.

The startup worked out first-of-its-kind partnerships for VR broadcasts of live sporting events like NBA and NFL games, and the company even broadcast presidential debates directly to headsets. NextVR’s experience capturing a wide range of events could be a huge benefit as the company embarks on its next phase, which involves improving its capture pipeline so that people wearing PlayStation VR or Windows-based headsets can lean or move around to see the action from different angles.

Over the last few years, Facebook and Adobe have started experimenting with approaches that would enable similar freedom to move around a captured reality in any direction. Those efforts are still at a very early stage. Other companies, like Microsoft and Lytro, also have systems that can capture performers from any angle, but those solutions are often limited in the types of content they can capture. A singer or actor can be captured and inserted into a scene with other elements, but capturing an NBA game and broadcasting it live is quite different.

NextVR is planning a gradual roll-out of new content captured with six degrees of freedom, starting in 2018 and continuing from there. It will start with on-demand content, with the plan to eventually bring the feature to live streams.

The biggest obstacle to these efforts is occlusion, where something nearer to you blocks the view of something farther away. Picture yourself sitting right on the sidelines of a football game as a wide receiver goes out for a big catch. Unfortunately, one of the game's referees steps between you and the receiver just as the ball is about to be caught. In the real world, you'd instinctively move your head to the right or left to see around the referee and catch the action. Until now, that movement generally hasn't been possible in VR with captured scenes, because the cameras doing the capturing can't see around the referee. So you can turn your head and look around, but the moment you lean, the illusion is broken.

NextVR and others are using information from other cameras on their rigs to fill in occluded gaps. As NextVR co-founder David Cole explained it, if the end goal is a Holodeck, the first step is to free people to move around any way they want, and the second step is to fill in more and more of those occluded gaps. The challenge, it seems, grows the closer an object is to the camera, since a nearby object blocks more of the background. This means you can expect a careful roll-out of NextVR's full freedom-of-movement captures as the startup figures out how to maximize the volume in which people can move around without seeing gaps where the cameras couldn't see what was going on.

2018 is shaping up to be a big year for NextVR. The arrival of standalone headsets and a larger viewer base could be a major boon to the company. Cole also said NextVR is making progress with social viewing — so you can watch an NBA game with friends instead of alone — but details would be forthcoming at a later date.

We'll be trying out NextVR's latest at CES, so check back for a hands-on report.