Today, NVIDIA is opening up its GameWorks VR SDK to more developers. For those unfamiliar with the platform, it is a comprehensive set of APIs, libraries and new features that enable headset and game developers to deliver amazing VR titles. When integrated into an application, it reduces latency, increases performance, and makes for a more seamless virtual reality experience. Now, it has reached version 2.

General Manager at NVIDIA, Jason Paul, answers questions about GameWorks VR

Since the alpha release of the GameWorks VR SDK in May 2015, NVIDIA has received a lot of feedback from developers and has incorporated much of it into the beta release. While the alpha release was limited to a few select developer partners, NVIDIA is now expanding the release with this beta to game developers everywhere.

Virtual reality can be extremely demanding in terms of rendering performance, as NVIDIA Developer Technology Engineer Nathan Reed explained in a recent presentation. For example, both the Oculus Rift and HTC Vive headsets require rendering at 90 Hz, a very high framerate compared to gaming on a monitor. On top of that, the current pipeline from input to display is relatively long, which adds latency and leaves much to be desired.
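To put the 90 Hz figure in perspective, the short Python sketch below converts a refresh rate into a per-frame time budget. The 60 Hz comparison point is an assumption standing in for a typical monitor; it is not taken from the presentation.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to produce each frame at a given display refresh rate."""
    return 1000.0 / refresh_hz


for hz in (60, 90):  # 60 Hz: assumed typical monitor; 90 Hz: Rift and Vive
    print(f"{hz} Hz -> {frame_budget_ms(hz):.1f} ms per frame")
```

At 90 Hz, each frame has to be ready in roughly 11.1 ms, compared with about 16.7 ms at 60 Hz.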

First, input must be processed and a new frame submitted from the CPU. Then the image has to be rendered by the GPU, and finally scanned out to the display. In addition, a VR headset needs a separate view for each eye to produce the stereo image that gives VR worlds a sense of depth, so the scene has to be rendered twice. This roughly doubles the amount of work needed to render each frame, on both the CPU and the GPU. That comes with a steep performance cost, which virtual reality developers must address.
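The serial pipeline and the stereo doubling can be modeled as a simple sum of stage times. This is a minimal sketch: the `Stage` type and every duration in it are hypothetical values chosen for illustration, and treating scan-out as one full 90 Hz refresh interval is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    ms: float  # hypothetical duration of this stage, in milliseconds


def stereo_cost_ms(per_eye_cpu_ms: float, per_eye_gpu_ms: float) -> tuple[float, float]:
    """Naive stereo: each eye repeats the same CPU and GPU work, doubling both."""
    return 2 * per_eye_cpu_ms, 2 * per_eye_gpu_ms


def pipeline_latency_ms(stages: list[Stage]) -> float:
    """The stages run one after another, so end-to-end latency is their sum."""
    return sum(stage.ms for stage in stages)


cpu_ms, gpu_ms = stereo_cost_ms(per_eye_cpu_ms=2.5, per_eye_gpu_ms=4.0)
pipeline = [
    Stage("process input, submit frame (CPU)", cpu_ms),
    Stage("render both eyes (GPU)", gpu_ms),
    Stage("scan out to display", 1000.0 / 90),  # assumed: one 90 Hz refresh interval
]
for stage in pipeline:
    print(f"{stage.name:<36}{stage.ms:5.1f} ms")
print(f"{'total':<36}{pipeline_latency_ms(pipeline):5.1f} ms")
```

Shrinking any stage, or avoiding the duplicated per-eye work, directly shortens the total; the features below target exactly these costs.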

The GameWorks VR SDK provides five primary features, all aimed at reducing latency and accelerating stereo rendering performance. Each gives developers a different tool for optimizing their virtual reality projects, resulting in a more comfortable experience for end users. A quick rundown is found below:

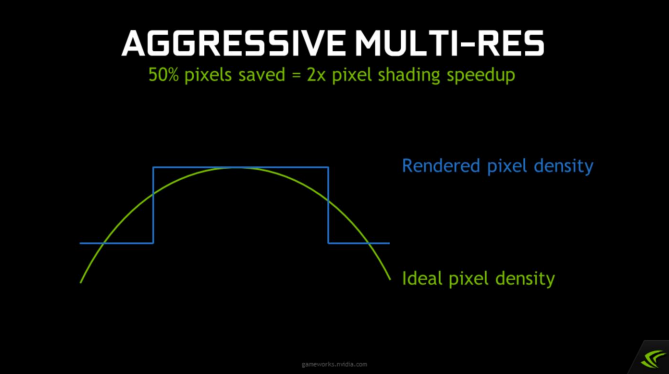

Multi-Resolution Shading

To be viewed in a VR headset, an image has to be warped to counteract the distortion of the headset's lenses. The trouble is that GPUs can't natively render into a distorted view like this, so a post-processing pass is required to resample the rendered image into the correct VR view.

Although the distortion pass produces a correctly shaped image, it compresses the edges, so many of the pixels shaded there never actually make it out to the display (overshading). That is wasted work, and it slows the process down. The idea of multi-resolution shading is to split the image into multiple viewports, keeping the center viewport at full resolution while scaling down the ones around the edges. How much faster this makes rendering depends on how aggressively the developer scales down the outer viewports.

This technology uses the multi-projection architecture of Maxwell-based GPUs such as the GeForce GTX 980 Ti to render multiple viewports in a single pass. The result: substantial performance improvements for VR games.
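A rough way to estimate what multi-resolution shading saves is to count shaded pixels. The sketch below assumes a symmetric 3x3 viewport split in which the center viewport covers the same fraction of the width and the height, and the outer viewports are scaled by a single factor per axis; the function name and the 60% / 0.5 settings are hypothetical. Because such a split is separable, the shaded fraction is the square of the per-axis fraction.

```python
def multires_shaded_fraction(center: float, outer_scale: float) -> float:
    """Fraction of full-resolution pixels shaded with a symmetric 3x3 multi-res split.

    center:      fraction of the width (and height) covered by the full-res center viewport.
    outer_scale: resolution scale applied along each axis to the outer viewports.
    """
    if not (0.0 < center <= 1.0 and 0.0 < outer_scale <= 1.0):
        raise ValueError("center and outer_scale must be in (0, 1]")
    # Along one axis: the center strip at full res plus both edge strips scaled down.
    per_axis = center + (1.0 - center) * outer_scale
    # Each of the 9 viewports is (column share x row share), so the total factorizes.
    return per_axis ** 2


# Hypothetical settings: center covers 60% per axis, edges shaded at half resolution.
f = multires_shaded_fraction(center=0.6, outer_scale=0.5)
print(f"shaded {f:.0%} of the pixels")
```

With these settings only about 64% of the full-resolution pixel count is shaded; a smaller `outer_scale` saves more work but lowers image quality at the edges.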

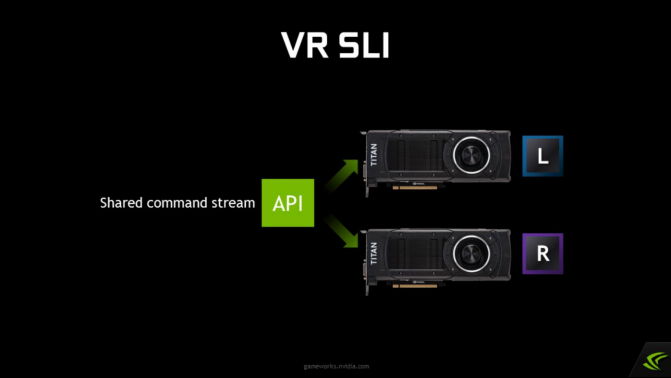

Virtual Reality Scalable Link Interface (VR SLI)

SLI is a multi-GPU technology developed by NVIDIA for linking two or more video cards together to produce a single output. It is a parallel-processing approach to computer graphics, meant to increase the processing power available for rendering.

With two GPUs, SLI typically uses alternate-frame rendering: one GPU renders the even frames and the other renders the odd frames. The GPUs' start times are staggered half a frame apart to try to maintain regular frame delivery to the display. For the most part, this works reasonably well to increase framerate relative to a single-GPU system, but it does not help latency, because each frame still takes just as long to render. A better model is needed specifically for VR.
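The staggered alternate-frame schedule can be sketched as follows. The 20 ms frame time is hypothetical, and the model assumes each GPU takes exactly the same time for every frame. Frames are delivered twice as often as on one GPU, but each frame's start-to-finish latency is unchanged.

```python
def afr_schedule(frame_ms: float, num_frames: int) -> list[tuple[int, float, float]]:
    """Alternate-frame rendering on two GPUs with start times staggered by half a frame.

    Returns (gpu, start_ms, finish_ms) for each frame. Each GPU still needs the
    full frame_ms to render a frame; only the delivery rate doubles.
    """
    schedule = []
    for i in range(num_frames):
        gpu = i % 2                   # even frames on GPU 0, odd frames on GPU 1
        start = i * frame_ms / 2      # a new frame begins every half frame
        schedule.append((gpu, start, start + frame_ms))
    return schedule


for gpu, start, finish in afr_schedule(frame_ms=20.0, num_frames=4):
    print(f"frame on GPU{gpu}: start {start:4.1f} ms, done {finish:4.1f} ms, "
          f"latency {finish - start:.1f} ms")
```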

One option is to split the work of drawing a single frame across the two GPUs, rendering each eye on its own GPU. This improves both framerate and latency relative to a single-GPU system, dramatically accelerating stereo rendering. With the GPU affinity API provided by the GameWorks VR SDK, VR SLI allows scaling on PCs with two or more GPUs while keeping the GPUs in sync.
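The latency difference between the two approaches can be captured in an idealized model. This sketch assumes perfect scaling (each eye costs exactly half of a stereo frame) and adds a hypothetical `transfer_ms` term for copying the second GPU's eye image to the GPU driving the headset; real VR SLI scaling will depend on the workload.

```python
def stereo_latency_ms(stereo_frame_ms: float, mode: str, transfer_ms: float = 0.0) -> float:
    """Idealized render latency of one stereo frame under different GPU setups.

    stereo_frame_ms: time for a single GPU to render both eyes.
    mode: "single" (one GPU), "afr" (alternate frames), or "vr_sli" (one eye per GPU).
    transfer_ms: hypothetical cost of copying the second GPU's eye to the display GPU.
    """
    if mode in ("single", "afr"):
        # AFR raises throughput, but one GPU still renders both eyes of each frame.
        return stereo_frame_ms
    if mode == "vr_sli":
        # Both eyes render in parallel, each assumed to cost exactly half the frame.
        return stereo_frame_ms / 2 + transfer_ms
    raise ValueError(f"unknown mode: {mode!r}")


for mode in ("single", "afr", "vr_sli"):
    print(f"{mode:>6}: {stereo_latency_ms(20.0, mode, transfer_ms=1.0):.1f} ms")
```

Under these assumptions AFR leaves latency at 20 ms, while the per-eye split roughly halves it, minus whatever the copy between GPUs costs.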

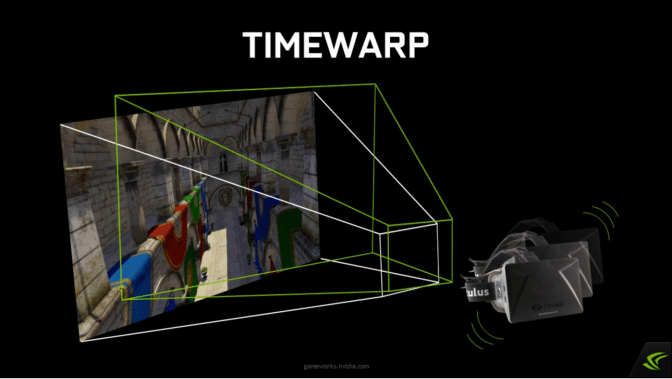

Context Priority

Context priority is a low-level feature that NVIDIA provides to enable VR platform vendors to implement asynchronous timewarp. Timewarp, a feature implemented in the Oculus SDK, renders an image and then applies a post-process that adjusts it for head motion that occurred while it was being rendered. Asynchronous timewarp goes a step further by running that adjustment independently of the application, so it does not have to wait for the app to finish rendering its next frame.
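The "asynchronous" part can be illustrated with a small scheduling simulation: at every display refresh, the compositor picks the newest frame the app has finished and would reproject it with the latest head pose. The function below is a hypothetical sketch that only models which frame is chosen, not the warp itself; it uses Python's `fractions.Fraction` so the vsync arithmetic is exact.

```python
from fractions import Fraction


def frames_shown(refresh_hz: int, app_frame_ms: Fraction, num_vsyncs: int) -> list[int]:
    """Index of the app frame an asynchronous compositor would warp at each vsync.

    The app is assumed to finish frame k at time (k + 1) * app_frame_ms. At every
    vsync the compositor takes the newest finished frame (-1 means none yet), even
    if it was already shown, so no refresh goes without an image.
    """
    vsync_ms = Fraction(1000, refresh_hz)
    shown = []
    for v in range(1, num_vsyncs + 1):
        t = v * vsync_ms
        finished = t // app_frame_ms  # frames completed by time t (exact arithmetic)
        shown.append(int(finished) - 1)
    return shown


# Hypothetical app that needs 15 ms per frame, too slow for a 90 Hz display.
print(frames_shown(refresh_hz=90, app_frame_ms=Fraction(15), num_vsyncs=6))
```

This prints `[-1, 0, 1, 1, 2, 3]`: frame 1 is used at two consecutive refreshes, each time warped to a fresher head pose, instead of the display stalling while the app catches up.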

NVIDIA enables asynchronous timewarp by exposing support in its driver for a feature called a high-priority graphics context, available through the GameWorks VR SDK. This is a separate graphics context that takes over the entire GPU whenever work is submitted to it, pre-empting any other rendering that may already be taking place.
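The benefit of pre-emption can be shown with a toy single-GPU timeline. The sketch assumes the app's rendering starts at t = 0, that pre-emption is instantaneous, and uses hypothetical timings; the function name is illustrative and not part of any NVIDIA API.

```python
def timewarp_finish_ms(app_work_ms: float, submit_ms: float, warp_ms: float,
                       preempt: bool) -> float:
    """Return when a timewarp job submitted mid-frame finishes on a single GPU.

    app_work_ms: length of the app's in-flight rendering, which starts at t = 0.
    submit_ms:   time at which the timewarp work is submitted.
    warp_ms:     GPU time the timewarp pass needs.
    preempt:     True if the warp runs on a high-priority context that pre-empts
                 the app (modeled as instantaneous); False if it must queue.
    """
    if preempt:
        # The high-priority context takes over the GPU as soon as work arrives.
        return submit_ms + warp_ms
    # Otherwise the warp cannot start until the app's rendering is done.
    return max(submit_ms, app_work_ms) + warp_ms


VSYNC_MS = 1000.0 / 90  # next refresh deadline at 90 Hz, about 11.1 ms

for preempt in (True, False):
    done = timewarp_finish_ms(app_work_ms=14.0, submit_ms=9.0, warp_ms=1.0, preempt=preempt)
    status = "makes" if done <= VSYNC_MS else "misses"
    print(f"preempt={preempt}: warp done at {done:.1f} ms, {status} vsync")
```

Here the app needs 14 ms, more than one 90 Hz refresh interval. With pre-emption the warp finishes at 10 ms, ahead of the vsync; without it, the warp waits behind the app and finishes at 15 ms, missing the refresh.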

Direct Mode

Direct mode is another feature targeted at VR headset vendors. It enables NVIDIA drivers to recognize when a plugged-in display is a VR headset rather than a monitor, and to hide it from the operating system so that the desktop is not extended onto the headset. The VR platform service can then take over the display and render to it directly. In general, this makes for a better user experience when working with VR.

Front Buffer Rendering

Direct mode also enables front buffer rendering, which is normally not possible in D3D11. VR headset vendors can make use of this for certain low-level latency optimizations.

Note: Most of the information in this article was pulled from the NVIDIA presentation linked above. Additional information about the platform can be found there.