A new tool from NVIDIA for benchmarking VR hardware and experiences should make it easier for buyers to figure out how systems perform in real-world scenarios.

Head-mounted displays like the Oculus Rift and HTC Vive put heavy demands on the PCs that power them. Graphics cards from companies like NVIDIA and AMD are often among the most expensive components in a PC that runs VR. That leaves buyers interested in getting into VR in a tough spot: should they buy the most expensive computer available with the most powerful graphics card, or can they settle for something less and still have a great VR experience?

Complicating matters is the rise of VR-capable laptops, which are more convenient because they are easier to move from one room to another. Laptops, however, have battery life and heat constraints that might affect how a machine performs, and thus how a virtual world looks and feels to the person wearing the headset.

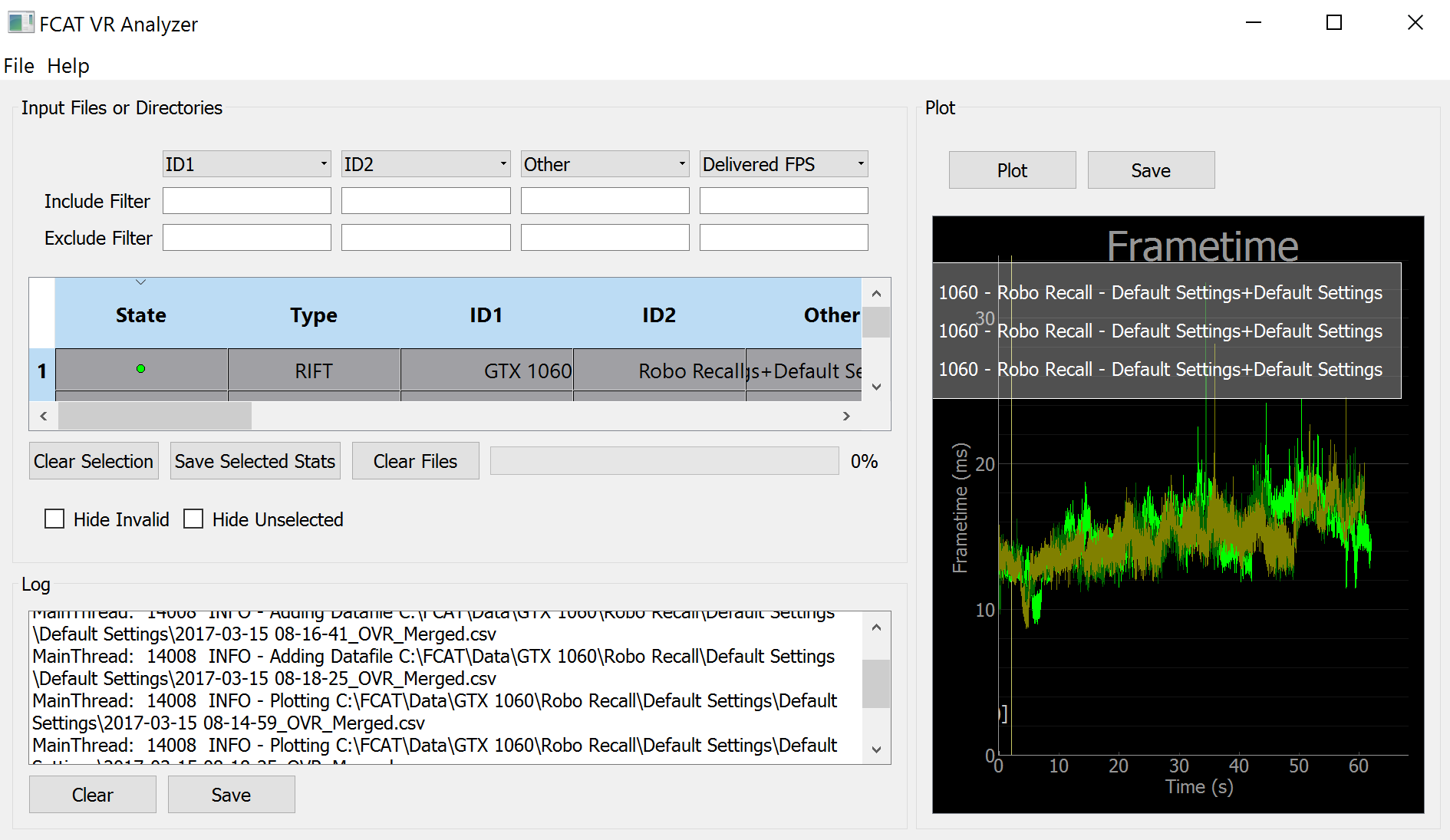

The newest version of NVIDIA’s FCAT software, called FCAT VR (analyzer interface pictured above), allows people to test the performance of virtual worlds and the hardware drawing those worlds inside the Oculus Rift and HTC Vive. NVIDIA has made previous versions of the software modifiable and redistributable, and FCAT VR will follow the same path.

We’ve gotten access to early versions of the FCAT tool from NVIDIA and run some initial tests on a laptop powered by NVIDIA’s GTX 1060. In its most recent tested state, the software still requires limited use of the command line for setup and several steps to visualize the captured data. Even then, drawing conclusions that will help buyers will take some time. We’ve barely scratched the surface of what the software can do, so expect updates in the future.

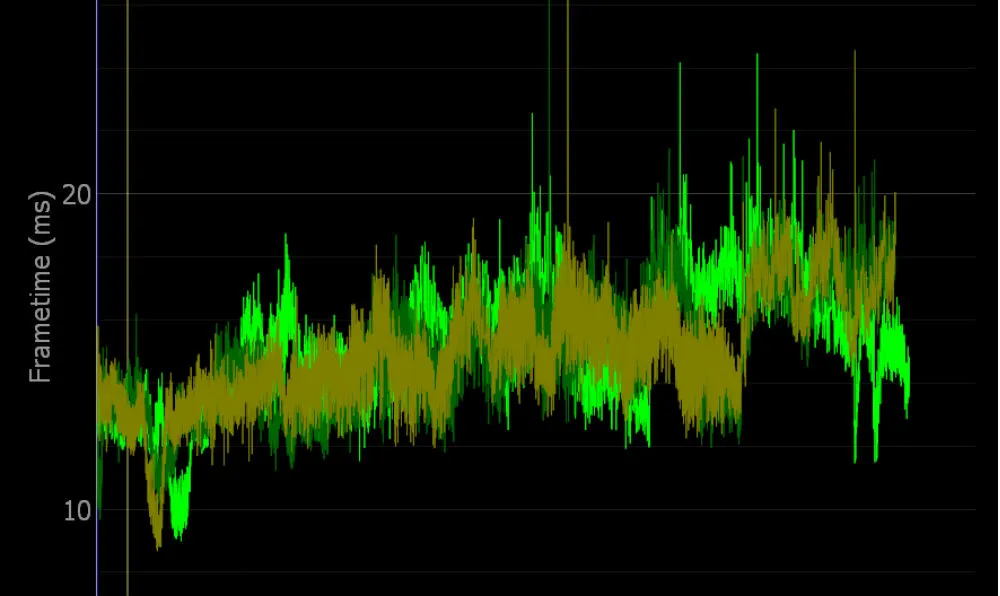

The featured image at top shows data charted by FCAT VR over a minute from three consecutive tests of Robo Recall on an Alienware GTX 1060 laptop. You can see the raw data charted below, which should give more technically minded readers an idea of the data the new toolset provides. Performing additional tests, visualizing the data in a useful way, and drawing meaningful conclusions will take us some time.

If you have any specific requests about hardware, software, or settings you’d like to see tested as we spend more time with this software, please let us know in the comments.