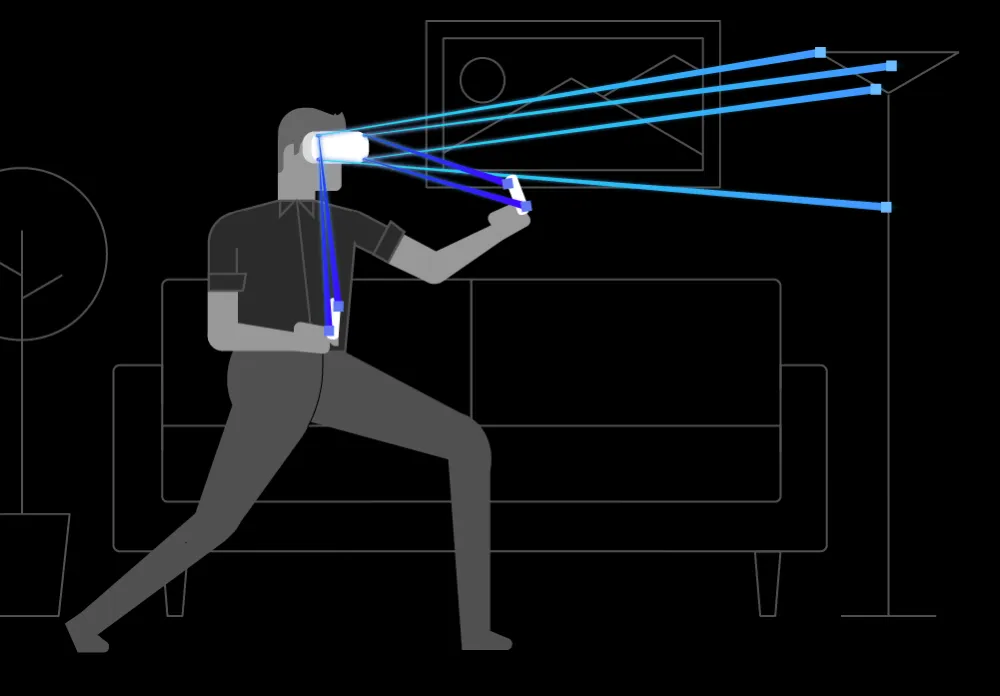

Facebook has provided background on the development of Oculus Insight, the inside-out positional tracking technology that enables both Oculus Quest and Rift S to operate without any external cameras.

A pair of blog posts published today by Facebook explain how a team spread across the company’s VR development labs in Zurich, Menlo Park, and Seattle built the technology.

According to Facebook, the company used OptiTrack motion-capture cameras as a reference, and “by comparing the measurements recorded with the OptiTrack cameras with the data from Oculus Insight, the engineers were able to fine-tune the system’s computer vision algorithms so they would be accurate within a millimeter.”
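To make that comparison concrete, here is a minimal sketch of how a tracker's estimates might be scored against motion-capture ground truth. It is not Facebook's code: the function names and sample positions are hypothetical, and it assumes both trajectories are already time-synchronized, expressed in the same coordinate frame, and measured in millimeters.

```python
import math


def position_errors(ground_truth, estimated):
    """Per-sample Euclidean distance between paired 3D positions."""
    if len(ground_truth) != len(estimated):
        raise ValueError("trajectories must have the same number of samples")
    return [math.dist(gt, est) for gt, est in zip(ground_truth, estimated)]


def rmse(errors):
    """Root-mean-square of a list of per-sample errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


# Hypothetical positions in millimeters (illustration only, not real data).
mocap = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0), (100.0, 50.0, 0.0)]
tracker = [(0.3, 0.0, 0.4), (100.0, 0.6, 0.8), (100.0, 50.0, 0.0)]

errors = position_errors(mocap, tracker)
worst = max(errors)
overall = rmse(errors)
print(f"worst-case error: {worst:.2f} mm, RMSE: {overall:.2f} mm")
```

A summary number like the worst-case error or the RMSE is the kind of figure that could be checked against a target such as "within a millimeter" after each round of tuning.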

Employees tested the cameras in their own workspaces and homes to recreate the variety of conditions in which a headset like Quest might be used.

Here’s how Facebook described the process:

“The OptiTrack systems would track the illuminators placed on participants’ HMDs and controllers and throughout each testing environment. This allowed us to compute the exact ground-truth 3D position of the Quest and Rift S users and then compare those measurements to where Oculus Insight’s positional tracking algorithm thought they were. We then tuned that algorithm based on the potential discrepancies in motion-capture and positional data, improving the system by testing in hundreds of environments that featured different lighting, decorations, and room sizes, all of which can impact the accuracy of Oculus Insight.”

“In addition to using these physical testing environments, we also developed automated systems that replayed thousands of hours of recorded video data and flagged any changes in the system performance while viewing a given video sequence. And because Quest uses a mobile chipset, we built a model that simulates the performance of mobile devices while running on a general server computer, such as the machines in Facebook data centers. This enabled us to conduct large-scale replays with results that were representative of Quest’s actual performance, improving Insight’s algorithms within the constraints of the HMD it would have to operate on.”
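The automated replay checks Facebook describes could work roughly like the sketch below, which compares a per-sequence error metric from a baseline build against a candidate build and flags any sequence that changed by more than a tolerance. Everything here is an assumption for illustration: the sequence names, the error values, the 0.1 mm tolerance, and the `flag_changes` helper are hypothetical, and Facebook has not published how its system decides what to flag.

```python
def flag_changes(baseline, candidate, tolerance_mm=0.1):
    """Return IDs of sequences whose error metric moved by more than the tolerance.

    A sequence missing from the candidate results is flagged as well,
    since a missing result is also a change in behavior.
    """
    flagged = []
    for seq_id, base_err in sorted(baseline.items()):
        new_err = candidate.get(seq_id)
        if new_err is None or abs(new_err - base_err) > tolerance_mm:
            flagged.append(seq_id)
    return flagged


# Hypothetical per-sequence tracking error in millimeters (not real data).
baseline = {"kitchen_dim": 0.82, "office_bright": 0.64, "hall_large": 0.91}
candidate = {"kitchen_dim": 0.80, "office_bright": 0.95, "hall_large": 0.93}

flagged = flag_changes(baseline, candidate)
print(flagged)  # ['office_bright']
```

Flagging changes in either direction, rather than only regressions, matches the quoted description of catching "any changes in the system performance" on a given sequence.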

Facebook says that to get this same simultaneous localization and mapping (SLAM) technology to work inside slimmer AR glasses, it will have to figure out how to reduce latency further while “cutting power consumption down to as little as 2 percent of what’s needed for SLAM on an HMD.”

Here’s a video detailing how the technology works: