NOTE: this article was originally published September 20.

For the last half-decade, at each Oculus Connect, Facebook’s top VR researcher has presented an annual look at the future of the technology.

The research-focused talk by Michael Abrash is a highlight of the annual conference hosted by Facebook, and we hope to see a similar update during Oculus Connect 6. Hired from Valve, Abrash built up Facebook’s long-term VR research efforts, first at Oculus Research and then under its new name, Facebook Reality Labs.

You can watch everything he said about the future of the technology during his presentations from 2014 to 2018 in the video below.

Oculus Connect (2014)

Abrash offers an overview of Oculus Research and tries to answer why, given VR’s past failures to reach mass adoption, it is going to be different this time.

“In a very real sense it’s the final platform,” he says. “The one which wraps our senses and will ultimately be able to deliver any experience that we’re capable of having.”

He says Oculus Research is the first well-funded VR research team in 20 years and that its job is to do the “deep, long-term work” of advancing “the VR platform.” He points to a series of key areas the team plans to pursue, including eye tracking, which enables foveated rendering. The idea behind foveated rendering is that if you can track the eye’s movements quickly and reliably enough, you could build a VR headset that draws the most detailed parts of a scene only where you are looking. He also described the fixed focal depth of modern VR headsets as “not perceptually ideal,” admitting it can “cause discomfort” or “may make VR subtly less real,” while hinting at “several possible ways” of addressing the problem that would require new hardware and changes to the rendering model.

“This is what it looks like when opportunity knocks,” he says.

Oculus Connect 2 (2015)

In late 2015 Abrash outlines a more specific series of advances required to drive the human senses with VR technology. He says he’s fine leaving the sense of taste to future VR researchers, and that both touch and smell require breakthroughs in delivery techniques. He also discusses the vestibular system, which he describes as our internal accelerometer and gyroscope for sensing changes in orientation and acceleration, and notes that “conflict between our vestibular sense and what you see is a key cause of discomfort.”

“Right now there’s no traction on the problem,” he says.

For hearing, though, there’s a “clear path to doing it almost perfectly,” he says. “Clear doesn’t mean easy though.” He breaks audio simulation into three elements, Synthesis (“the creation of source sounds”), Propagation (“how sound moves around a space”) and Spatialization (“the direction of incoming sound”), and describes the difficulty of doing all three well.

“We understand the equations that govern sound but we’re orders of magnitude short of being able to run a full simulation in real time even for a single room with a few moving sound sources and objects,” he says.

He predicts that in 20 years you’ll be able to hear a virtual pin drop “and it will sound right — the interesting question is how close we’ll be able to get in five years.”

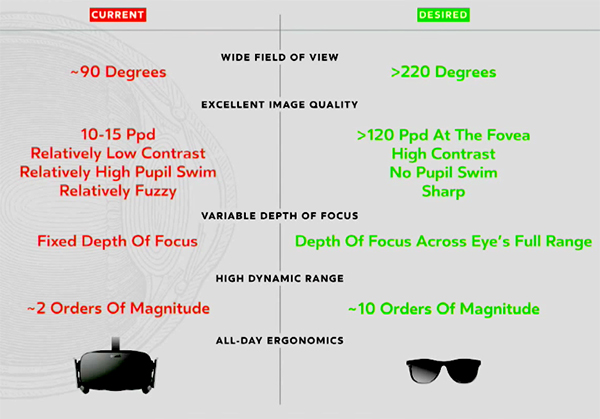

Future Vision

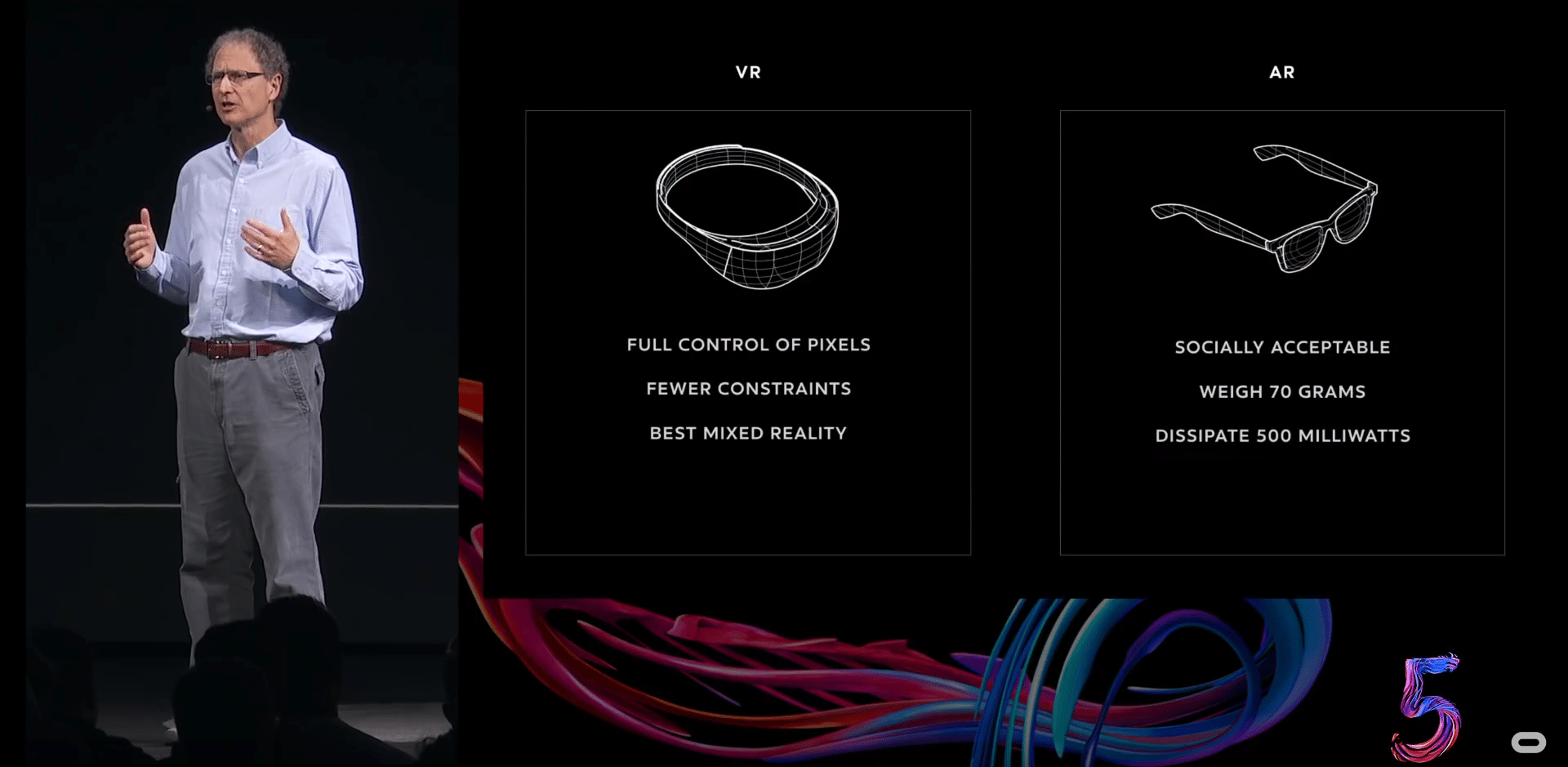

He describes “photon delivery systems” as needing five attributes that often conflict with one another: field of view, image quality, depth of focus, high dynamic range and all-day ergonomics. Improving one typically means trading off another.

“All currently known trade-offs are a long way from real-world vision,” he says.

He provides a chart comparing the current market standard with the “desired” attributes of a future VR vision system.

Reconstructing Reality And Interaction

Abrash names scene reconstruction, body tracking and human reconstruction as other areas of intense interest for Facebook’s VR research. Interaction, and in particular dexterous finger control in VR, is an especially difficult problem to solve, he adds.

“There’s no feasible way to fully reproduce real-world kinematics,” he says. “Put another way, when you put your hand down on a virtual table there’s no known or prospective consumer technology that would keep your hand from going right through it.”

He says it is very early days and the “first haptic VR interface that really works will be world-changing magic on par with the first mouse-based windowing systems.”

Oculus Connect 3 (2016)

Facebook’s PC-powered Oculus Rift VR headset launched in 2016, but Facebook hadn’t yet shipped the Oculus Touch controllers for it. Echoing the comments he made in 2015, Abrash says “haptic and kinematic” technology “that isn’t even on the distant horizon” is needed to enable the use of your hands as “direct physical manipulators.” As a result, he says, “Touch-like controllers will still be the dominant mode for sophisticated VR interactions” in five years.

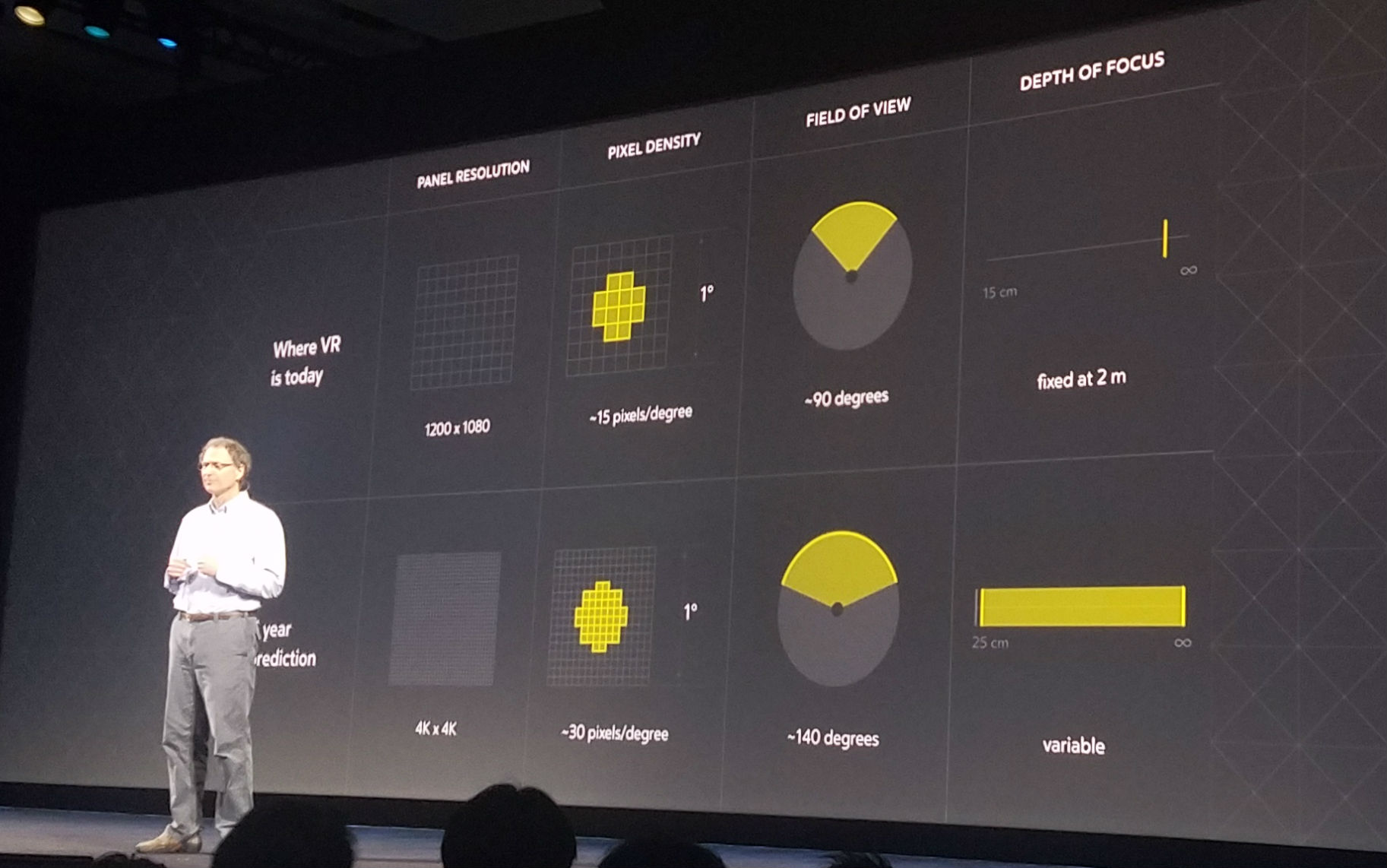

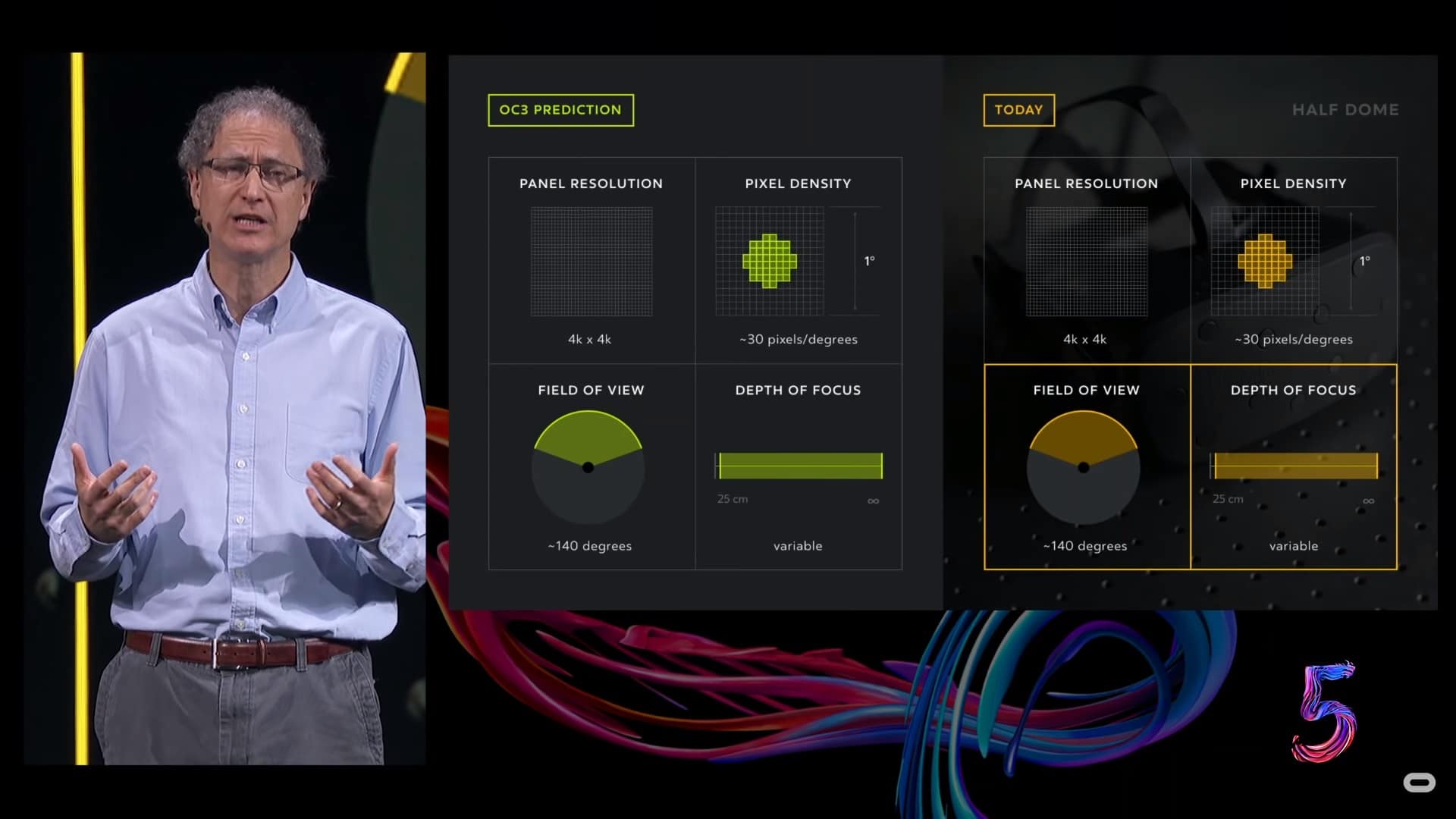

The prediction was one of a series in which Abrash outlined what a new PC-powered Rift made in 2021 might be able to achieve. The biggest risk to many of his predictions, though, is that the eye-tracking quality required for many advances in VR displays is not yet a solved problem. It is “central to the future of VR,” he says. He suggests foveated rendering is even key to making a wireless PC VR headset work.

“Eliminating the tether will allow you to move freely about the real world while in VR yet still have access to the processing power of a PC,” he said.

He said he believes virtual humans will still be stuck in the uncanny valley in five years, and that “convincingly human” avatars are more than five years away.

Oculus Connect 4 (2017)

At Oculus Connect 4 in 2017, Facebook changed the Abrash update into a conversational format. Among the questions put to Abrash was how his research teams contribute to VR products at the company.

“There’s nothing in the current generation that has come from us,” he said. “But there is certainly a number of things that we could see over the next few years.”

While this session says little about the future, the following comment helps explain what he sees as the purpose of Facebook’s VR and AR research:

“How do we get photons into your eyes better, how do we give you better computer vision for self-presence, for other people’s presence, for the surroundings around you. How do we do audio better, how do we let you interact with the world better — it is a whole package and each piece can move forward some on its own but in the long run you really want all the pieces to come together. And one really good example is suppose that we magically let you use your hands perfectly in VR, right? You just reach out, you grab virtual objects — well remember that thing I said about where you’re focused? Everything within hand’s length wouldn’t actually be very sharp and well focused right. So you really [need] to solve that problem too. And it just goes on and on like that where you need all these pieces to come together in the right system and platform.”

Oculus Connect 5 (2018)

At last year’s Oculus Connect conference Abrash updated some of his predictions from 2016. While they were originally slated for arrival in high-end VR headsets in 2021, this time he says he thinks they’ll likely be in consumer hands by 2022.

He suggests in this presentation that the rate of advancement in VR is ramping up faster than he predicted thanks to the parallel development of AR technology.

He suggests new lenses and waveguide technology might have a huge impact on future display systems. He also says that foveated rendering and great eye tracking still represent a risk to his predictions, but now he’s comfortable committing to a prediction that “highly reliable” eye tracking and foveated rendering “should be doable” by 2022.

“Audio presence is a real thing,” Abrash said of Facebook’s sound research. “It may take longer than I thought.”

Abrash showed Facebook’s work on “codec avatars” for convincing reconstructions of human avatars and suggested they might arrive on the same time frame as his other predictions: 2022.

He also made his longest-term prediction, saying he believes that by 2028 we’ll have “useful haptic hands in some form.”