Steven Spielberg’s adaptation of Ernest Cline’s Ready Player One debuts March 29. The foundation of a real Oasis, a social metaverse where anything is possible, is still under construction. However, the influence of the book on the VR community and now general culture should not be underestimated and might transform the technology landscape.

Previously, I wrote “The Social Matrix: How the Cloud Can Activate A Billion Virtual Reality Users,” a first exploration of cloud VR. In this new two-part series, I explore the technology path toward an Oasis, focusing first on the technology and infrastructure and then, in the following piece, on the software and social experience.

Ernest was right: the metaverse is coming. One year before the Oculus Rift Kickstarter began, he published Ready Player One, and a movie deal was already in place. The book inspired the most recent generation of geeks, who started companies with duct-tape, wood and wire prototypes that became billion-dollar enterprises. Now that vision of the future will be broadcast to millions in stunning cinematic form, thanks to Steven Spielberg, against a backdrop of tangible VR technology.

While many question the film’s prospects, many others, myself included, believe it will be a blockbuster and might catalyze popular interest in the metaverse. The effect on how we think about technology could be profound. The advent of streaming virtual reality over upcoming 5G networks is being taken seriously by every major wireless network provider, and the technology necessary to do it is gathering strength.

In the Ready Player One future, the real world has taken a dystopian turn, pushing almost everyone into the escapist metaverse of the Oasis. Kids get wireless, retinal projection visors to attend school in the Oasis, and the more adventurous start to build a virtual identity in an expansive universe. Getting into the Oasis is free, and electronic credits are earned and spent in the Oasis to travel to different worlds, unlocking the experience and transacting a significant percentage of the real-world economy. The Oasis is based on a massive game engine able to manage millions of simultaneous users. The virtual world does not run locally; instead, it is housed on the greatest cloud computer system ever constructed and streamed to everyone. Peripherals including haptic-enhanced tracked gloves, body-tracking haptic suits, fully immersive displays and full-range locomotion gymnasiums are all available for purchase if you can afford real-world upgrades. Hardware, software, and the entire technology landscape have been shifted toward powering the metaverse, one digital experience that rules them all. Does our future look so different?

In our real-life future, fueled by a developing 5G wireless network infrastructure, we could buy HMDs from wireless providers or unlocked on Amazon, cheap and subsidized. The “use case” for these headsets will be everything we do on smartphones today, but fully immersed; when we are not immersed, powerful cameras will show the outside world almost as well as our own eyes. You can always take off the fully immersive headset and wear an augmented reality one, and the distinction will be negligible: you will be able to see the world around you, if you care to, and use this same ecosystem through world-morphing lenses. The future is the metaverse, the network is wireless, and the business model is access to everything imaginable as if you are there.

The VR headset is not a solved problem; however, the engineers building headsets like the Rift and Vive are making design tradeoffs and reducing engineering risks, with confidence in their product roadmaps and their ability to surprise with innovation.

The new technology frontier is behind the headset experience, up the data path extending to the cloud. The disruptive question is when, not if, the cloud and upcoming wireless networks and compute infrastructure – 5G and beyond – can be crafted to beam VR directly to the headset, bypassing the existing PC and mobile ecosystems to create a new platform, beyond Windows, iOS and Android and potentially bigger and more important than all of them combined. The architecture of this streaming VR network is the subject of a new white paper from TIRIAS Research which describes the technology of streaming virtual reality over upcoming high speed 5G networks. The graphics rendering pipeline will evolve to deliver low latency video streams that connect the resources of the cloud to VR headsets.

Running the metaverse will require virtualized game engines able to run with minimum latency – servers placed on the network’s edge with high-bandwidth, low-latency connectivity to a billion mobile and home users. The pathway is dizzying – your head moves, sensors detect and transmit that movement, head position is sent up to the cloud, the cloud runs a multiplayer experience much like your desktop PC does today, a GPU renders the corresponding scene, and then it must be compressed, transmitted over the network, decoded and output to a display – all in 20 milliseconds or less. It’s the American Ninja Challenge of computing, a heterogeneous computing race against time, where the red button at the end of the race is your eyeball. If the computers fail, a billion humans get simulation sickness from visuals that are poorly correlated to movement in the virtual world.
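To make that 20 millisecond race concrete, here is a minimal Python sketch of a latency budget. The stage names follow the pathway described above, but every per-stage number is a hypothetical placeholder chosen for illustration, not a measurement from any real system, and the function names are my own.

```python
# Motion-to-photon budget quoted in the article: 20 ms or less.
BUDGET_MS = 20.0

# Hypothetical per-stage latencies in milliseconds (illustrative only).
EXAMPLE_STAGES_MS = {
    "sensor_sampling": 1.0,   # headset detects head movement
    "uplink": 2.0,            # head position sent up to the edge server
    "simulation": 3.0,        # multiplayer experience advances one step
    "gpu_render": 5.0,        # GPU renders the corresponding scene
    "encode": 2.0,            # frame is compressed
    "downlink": 2.0,          # compressed frame crosses the network
    "decode": 2.0,            # headset decompresses the frame
    "display_scanout": 2.0,   # pixels reach the display
}


def total_latency_ms(stages):
    """Sum the latency of every stage in the pipeline."""
    return sum(stages.values())


def headroom_ms(stages, budget_ms=BUDGET_MS):
    """Time left in the budget; a negative value means the budget is blown."""
    return budget_ms - total_latency_ms(stages)


def slowest_stage(stages):
    """Name of the stage that consumes the most time."""
    return max(stages, key=stages.get)


if __name__ == "__main__":
    print("total:", total_latency_ms(EXAMPLE_STAGES_MS), "ms")
    print("headroom:", headroom_ms(EXAMPLE_STAGES_MS), "ms")
    print("slowest stage:", slowest_stage(EXAMPLE_STAGES_MS))
```

With these made-up figures the pipeline totals 19 ms and leaves only 1 ms of headroom, which is the point: any single stage that slips by a couple of milliseconds breaks the whole chain.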

The 5G Metaverse Backbone

Major network providers are betting that 5G is that future network, able to serve home and mobile users with extremely low latency. Quality of service will need to be high, or users will need to stay at home, where connections are potentially more robust and network connectivity is generally more predictable. Latency over 4G networks is theoretically 10ms but runs over 30ms in practice; for VR, it needs to be less than 5ms. The theory is that 5G will deliver the combination of low latency and high bandwidth required for VR.
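A quick back-of-the-envelope check, sketched in Python, shows why those figures matter. It assumes the quoted network latencies count directly against the 20 millisecond budget mentioned earlier, which is a simplification, and the names used here are illustrative rather than taken from any standard.

```python
# Figures quoted in the article, in milliseconds.
BUDGET_MS = 20.0             # end-to-end budget
VR_NETWORK_TARGET_MS = 5.0   # network latency VR is said to need to stay under
NETWORK_LATENCY_MS = {
    "4G (theoretical)": 10.0,
    "4G (in practice)": 30.0,  # the article says "over 30ms"; 30 is the floor
}


def remaining_for_compute_ms(network_ms, budget_ms=BUDGET_MS):
    """Budget left for rendering, encoding, decoding and display."""
    return budget_ms - network_ms


def meets_vr_target(network_ms, target_ms=VR_NETWORK_TARGET_MS):
    """True when the network latency is below the VR target."""
    return network_ms < target_ms


if __name__ == "__main__":
    for name, latency in NETWORK_LATENCY_MS.items():
        left = remaining_for_compute_ms(latency)
        print(f"{name}: {left:+.0f} ms left, meets target: {meets_vr_target(latency)}")
```

Under this simplification, real-world 4G consumes more than the entire budget before a single frame is rendered, while a network under 5ms would leave more than 15ms for everything else.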

The computing architecture behind the wireless network needs to be designed for VR if imagined contextual user experiences like AR and smart notifications are to materialize. At Huawei, Ericsson, Deutsche Telekom, and AT&T, teams are investigating how to construct the network and the potentially massive computing infrastructure that must be available on demand to deliver these services. At Google, Facebook, Apple, Amazon, Tencent, Baidu, and Alibaba, teams are trying to figure out if an arrangement with network providers is possible, or whether they will need to drive or acquire their own network.

Streaming the Metaverse

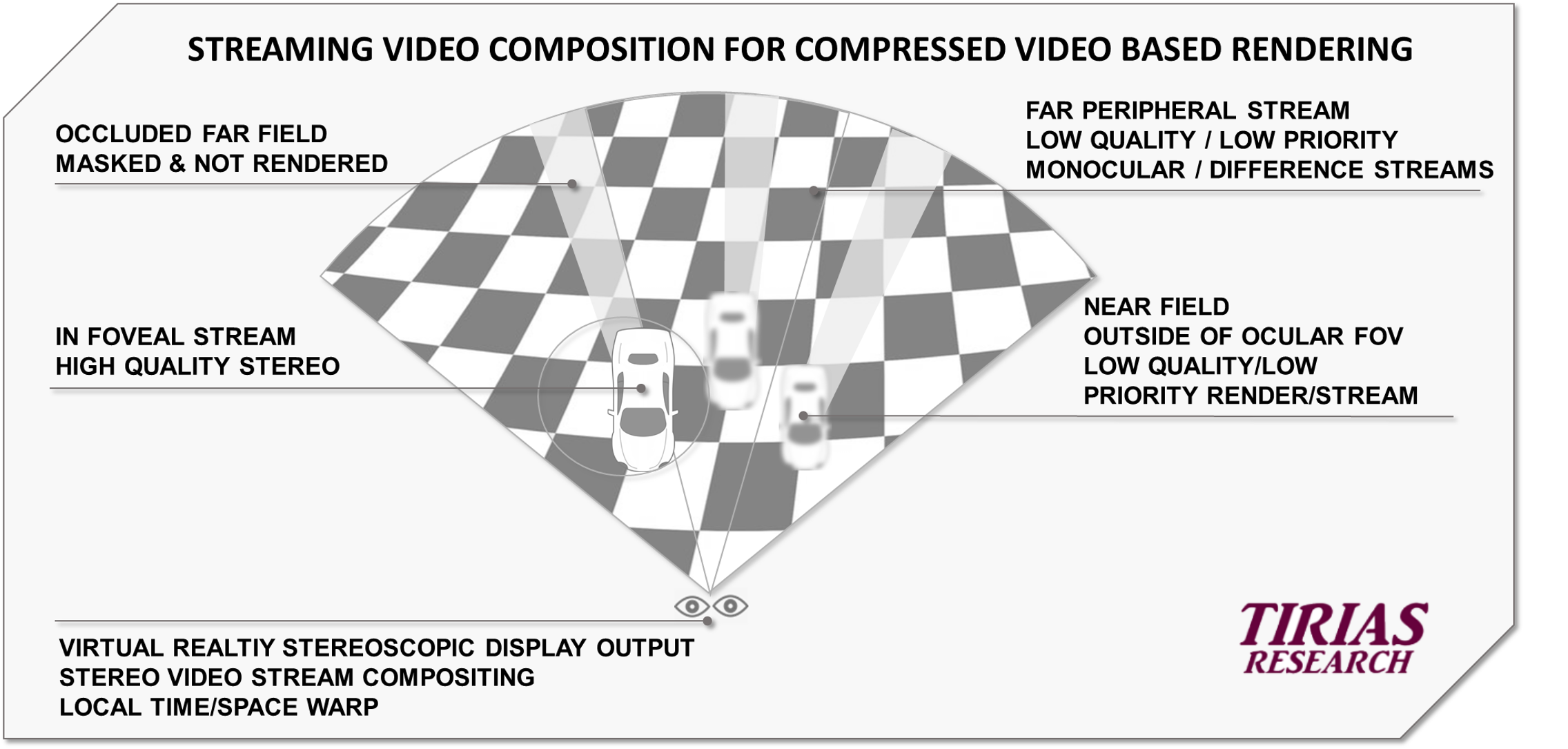

Cloud streaming of VR content is likely to include full or partial use of remotely rendered content delivered as prioritized and layered video streams. A new rendering path is required, one that can transmit VR over the wireless network, compressing and decompressing video in a fraction of the sub-20 millisecond motion-to-photon latency budget.

NGCodec demonstrated VR streaming for the second year at CES, showing sub-10ms encoding and decoding latency to an HTC VIVE. Powered by a low-latency H.265 video codec implementation on fast programmable FPGA processors, NGCodec claims the fastest, highest-performance encoder on earth. When strapped to the similarly low-latency 5G network, this technology is designed to enable cheap mobile headsets to deliver desktop-grade VR.

Another company, TPCast, also talked about streaming VR straight to wireless headsets, and even mentioned a collaboration with Huawei to field a proof-of-concept system using, you guessed it, FPGA-based video compression. NGCodec claims its encoding and decoding latency can drop further and is preparing to deliver 8K streams for VR over 5G networks. These arguments and early demonstrations are compelling and are winning the attention of 5G network providers and the companies leading VR.

The backbone is a two-way street with upstream and downstream network demands. Delivering a strong user experience will also involve a host of contextual upstream data – sensor data for environmental mapping, object tracking, eye tracking, sophisticated motion and hand tracking, voice recognition and more. Augmented reality will require pose estimation, object tracking, and local scene reconstruction – locally and in the cloud – to create fully interactive augmented spaces.

Rendering the Metaverse

The rendering path will have to evolve to treat compressed video as something inherently linked to the rendering engine. Content in the current focus of users can be delivered as a high-priority video stream to maximize frame rates and ensure lowest-latency delivery of frames. Mobile VR headsets will be able to skip the heavy lifting and focus on performing last-minute corrections, tweaking the stream or selecting the best from multiple potential frames.
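One way to picture “selecting the best from multiple potential frames” is the small Python sketch below. It assumes the cloud renders a handful of frames for different predicted head orientations and the headset picks the one closest to where the head actually ended up. The `CandidateFrame` type, the yaw and pitch representation, and the small-angle error metric are all assumptions made for illustration, not a description of any shipping system.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateFrame:
    """A frame rendered in the cloud for one predicted head orientation."""
    frame_id: str
    yaw_deg: float
    pitch_deg: float


def angular_error_deg(frame, yaw_deg, pitch_deg):
    """Small-angle approximation of how far a frame's pose is from the real one."""
    # Wrap the yaw difference into [-180, 180) so 359 and 1 degrees are 2 apart.
    dyaw = (frame.yaw_deg - yaw_deg + 180.0) % 360.0 - 180.0
    dpitch = frame.pitch_deg - pitch_deg
    return math.hypot(dyaw, dpitch)


def select_best_frame(candidates, yaw_deg, pitch_deg):
    """Pick the candidate whose predicted pose best matches the measured pose."""
    if not candidates:
        raise ValueError("at least one candidate frame is required")
    return min(candidates, key=lambda f: angular_error_deg(f, yaw_deg, pitch_deg))


if __name__ == "__main__":
    frames = [
        CandidateFrame("left", -2.0, 0.0),
        CandidateFrame("center", 0.0, 0.0),
        CandidateFrame("right", 2.0, 0.0),
    ]
    # The head ended up 1.6 degrees to the right of the prediction.
    print(select_best_frame(frames, 1.6, 0.0).frame_id)
```

In a real headset the chosen frame would still receive a final correction for the remaining error; that step is left out here to keep the sketch short.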

The new cloud rendering pipeline defines the requirements of edge servers that power the streamed metaverse. Networks and their computing backbone will be custom designed and optimized for a rendering pipeline, ready for streaming over 5G networks and beyond. VR session virtualization will permit the remote execution of VR experiences and subsequent graphics rendering on “headless” servers. Mobile eye tracking will reduce the field of view for high-fidelity rendering, substantially reducing bandwidth requirements and conserving cloud GPU resources. Tracking-aware video compression will provide predictive logic for high-quality and highly compressed video streams. Foveal prioritization will provide decoding and frame priority to content in the foveal range. And light field rendering will create cinematic visuals on a modest data budget.
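How much could eye tracking save? The Python sketch below gives a rough estimate under a deliberately crude model: the display has uniform pixels per degree, the high-fidelity region is a square window around the gaze point, and everything outside it is rendered at a reduced linear scale. The model and all of the example values are assumptions for illustration; real foveated renderers use more gradual falloff.

```python
def foveated_pixel_fraction(fov_deg, foveal_deg, periphery_scale):
    """Fraction of full-resolution pixels rendered with a foveated layout.

    fov_deg:         total field of view along one axis, in degrees
    foveal_deg:      width of the high-fidelity window, in degrees
    periphery_scale: linear resolution scale outside the window (0 < s <= 1)
    """
    if not 0 < foveal_deg <= fov_deg:
        raise ValueError("foveal_deg must be in (0, fov_deg]")
    if not 0 < periphery_scale <= 1:
        raise ValueError("periphery_scale must be in (0, 1]")
    foveal_area = (foveal_deg / fov_deg) ** 2   # share of the image kept sharp
    periphery_area = 1.0 - foveal_area          # share rendered at lower detail
    # Pixel count scales with the square of the linear resolution scale.
    return foveal_area + periphery_area * periphery_scale ** 2


if __name__ == "__main__":
    # Hypothetical headset: 100 degree field of view, 20 degree sharp window,
    # periphery rendered at half linear resolution.
    fraction = foveated_pixel_fraction(100.0, 20.0, 0.5)
    print(f"pixels rendered: {fraction:.0%} of full resolution")
```

With those hypothetical values, roughly 28 percent of the full-resolution pixels need to be rendered and encoded, which suggests why eye tracking is expected to ease both bandwidth and cloud GPU load.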

The engineering consensus, and soon the popular one, is that the metaverse is going to happen. Does the existing set of roles suit the metaverse, or is the metaverse going to reshape the landscape of technology? For example, Google certainly can build their own 5G network on their Google Fiber infrastructure, strapped to their own custom-designed compute architecture, and avoid the intricacies of dealing with network providers. Control over the entire cloud-to-user loop brings experience control to an entirely new level. And it enables streaming VR, IoT, augmented reality, not to mention self-driving cars and so on. But if Google has already built out their fiber network to penetrate large cities and is just waiting on 5G for the last mile, unlocking this wealth of metaverse-as-a-service opportunities, where does that leave Apple, Facebook, and Amazon? Will Apple need to acquire their own network to keep up and deliver these emerging services? Will Amazon need to acquire their own network to keep their cloud relevant? Will Facebook need to have their own network to deliver VR and social in the new paradigm? While we cannot predict what each of these corporate players will do, we can predict that a fast-changing landscape will compel action. The promise of the streamed metaverse will create pressure to tightly integrate technology from the cloud to the end user.

Simon Solotko is an accomplished VR and AR pioneer who manages the AR/VR Service for TIRIAS Research and serves as an adviser to technology innovators. You can follow or message him on Twitter at @solotko or connect on LinkedIn. To read more on the migration of VR to the cloud and the intersection between VR and AI, check out http://www.tiriasresearch.com/pages/arvr/. Simon actively works with many of the companies mentioned including NGCodec which sponsored the white paper mentioned herein. This is a guest post not produced by the UploadVR staff. No compensation was exchanged for the creation of this content.