Meta just released an Interaction SDK for hand and controller input and a Tracked Keyboard SDK for its Quest VR headsets.

Interaction SDK

There are already interaction frameworks available on the Unity Asset Store, but Meta is today releasing its own free alternative as an “experimental” SDK. That “experimental” descriptor usually means developers can’t ship the SDK in Store or App Lab builds, but Interaction SDK is an exception.

Interaction SDK supports both hands and controllers. The goal is to let developers easily add high-quality hand interactions to VR apps instead of reinventing the wheel.

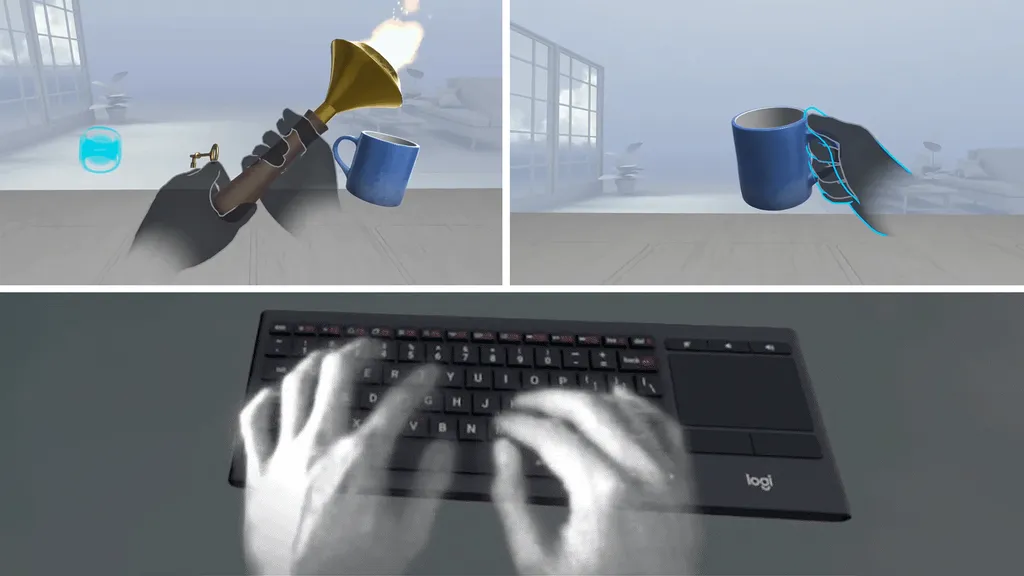

The SDK supports:

- Direct grabbing or distance grabbing of virtual objects, including “constrained objects like levers”. Objects can be resized or passed from hand to hand.

- Custom grab poses so hands can be made to conform to the shape of virtual objects, including tooling that “makes it easy for you to build poses which can often be a labor intensive effort”.

- Gesture detection including custom gestures based on finger curl and flexion.

- 2D UI elements for near-field floating interfaces and virtual touchscreens.

- Pinch scrolling and selection for far-field interfaces similar to the Quest home interface.
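To illustrate the idea behind gesture detection based on finger curl, here is a minimal Python sketch. It is not the Interaction SDK's API: the `FingerRule` class, the `matches_gesture` function, the normalized 0 to 1 curl scale and the threshold values are all assumptions made up for this example. It only shows the general technique of matching per-finger curl values against a set of rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FingerRule:
    """Hypothetical rule: the allowed curl range for one finger.

    Curl is assumed to be normalized: 0.0 = fully extended, 1.0 = fully curled.
    """
    min_curl: float = 0.0
    max_curl: float = 1.0

    def matches(self, curl: float) -> bool:
        return self.min_curl <= curl <= self.max_curl


# Illustrative thresholds only; real values would need tuning per device.
EXTENDED = FingerRule(max_curl=0.25)
CURLED = FingerRule(min_curl=0.7)

# A custom gesture is a mapping from finger name to the rule it must satisfy.
# Fingers left out of the mapping are ignored.
THUMBS_UP = {
    "thumb": EXTENDED,
    "index": CURLED,
    "middle": CURLED,
    "ring": CURLED,
    "pinky": CURLED,
}
POINT = {
    "index": EXTENDED,
    "middle": CURLED,
    "ring": CURLED,
    "pinky": CURLED,
}


def matches_gesture(curls: dict, gesture: dict) -> bool:
    """Return True if every finger named in the gesture satisfies its rule."""
    return all(rule.matches(curls[finger]) for finger, rule in gesture.items())


# Example hand state, as a tracking system might report it each frame.
hand = {"thumb": 0.1, "index": 0.9, "middle": 0.85, "ring": 0.9, "pinky": 0.95}
is_thumbs_up = matches_gesture(hand, THUMBS_UP)
is_point = matches_gesture(hand, POINT)
```

Describing a gesture as data rather than hard-coded logic is one way a developer could define custom gestures without writing new detection code for each one.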

Meta says Interaction SDK is already being used in Chess Club VR and ForeVR Darts, and claims the SDK “is more flexible than a traditional interaction framework—you can use just the pieces you need, and integrate them into your existing architecture”.

Tracked Keyboard SDK

Quest 2 is capable of tracking two keyboard models: Logitech K830 (US English) and Apple Magic Keyboard (US English). If you pair either keyboard using Bluetooth, it will show up as a 3D model in the home environment for 2D apps like Oculus Browser.

We've been excited for this feature for a long time. Watch out for an update next week supporting a live-tracked @Logitech K830 in our app @vspatial!

Available on: @MetaQuestVR pic.twitter.com/guU5qbOqst

— vSpatial (@vspatial) February 1, 2022

Tracked Keyboard SDK allows developers to bring this functionality to their own Unity apps or custom engines. Virtual keyboards are slower to type on and produce more errors than physical ones, so this could open up new productivity use cases by making text input in VR practical.

The SDK was made available early to vSpatial, and has been used by Meta’s own Horizon Workrooms for months now.