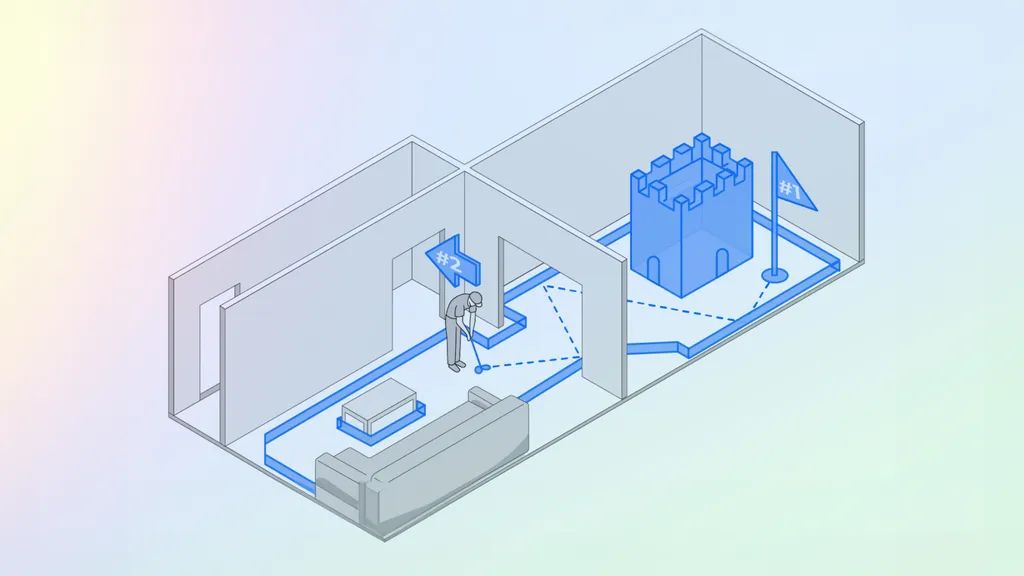

Developers can now build Quest 3 mixed reality experiences that span multiple rooms in your home.

Earlier this month we reported that v66 of the Meta XR Core SDK lets developers disable the annoying VR-centric safety boundary in mixed reality. But what we didn't report was that the previous v65 release increased the accuracy and range of Spatial Anchors and let developers access the scanned scene meshes from multiple rooms.

Combined, these v65 and v66 SDK improvements now enable developers to build environment-aware mixed reality apps that let you walk through your entire home, not just a single room.

Spatial Anchors let developers lock virtual content to a precise real-world position, independent of the safety boundary or room mesh, and the headset can even remember them between sessions. Spatial Anchors have been available for years, but before v65 the feature was designed for use in a single room. Meta claims that with v65 of the SDK, Spatial Anchors will stay accurate in spaces up to 200 m², for example a 20×10 m (66×33 ft) home.
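As a quick sanity check on those figures, here is a minimal sketch that works out the area and the rounded imperial dimensions of the 20×10 m example. The function name and constant are purely illustrative and are not part of any Meta SDK.

```python
# Illustrative arithmetic only; nothing here is Meta SDK code.
METERS_TO_FEET = 3.28084  # standard conversion factor


def footprint(length_m, width_m):
    """Return the floor area in square meters and each side rounded to whole feet."""
    return {
        "area_m2": length_m * width_m,
        "length_ft": round(length_m * METERS_TO_FEET),
        "width_ft": round(width_m * METERS_TO_FEET),
    }


home = footprint(20, 10)
print(home)
```

The result matches the figures quoted above: a 200 m² footprint measuring roughly 66×33 ft.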

What makes mixed reality on Quest 3 uniquely compelling compared to older Meta headsets, though, is its ability to capture a 3D mesh of your environment, called the Scene Mesh. The Scene Mesh enables virtual objects to be positioned on, bounce off, or otherwise interact with your walls, ceiling, floor, and furniture.

At launch, Quest 3 could only store one Scene Mesh at a time, but since the v62 update in February it has been able to store up to 15. Until the v65 SDK, developers were only given the currently loaded Scene Mesh, meaning the one for the room you're in. Now, with a new Discovery API, the system will provide developers with the Scene Mesh for other captured rooms too.
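To make the change concrete, here is a toy model of the behavior described above. It is a sketch under stated assumptions: `SceneStore`, `capture`, and `visible_meshes` are hypothetical names invented for illustration and do not correspond to the real Meta XR Core SDK or its Discovery API. Only the 15-room limit and the before/after v65 behavior come from the reporting above.

```python
# Toy model only: all names are hypothetical, not the Meta XR Core SDK.
from typing import Dict, Optional

MAX_STORED_ROOMS = 15  # storage limit cited since the v62 update


class SceneStore:
    """Tracks captured room meshes and which room is currently loaded."""

    def __init__(self) -> None:
        self.rooms: Dict[str, str] = {}  # room name -> placeholder for mesh data
        self.current_room: Optional[str] = None

    def capture(self, name: str, mesh: str) -> None:
        """Store a room's mesh and mark that room as the current one."""
        if name not in self.rooms and len(self.rooms) >= MAX_STORED_ROOMS:
            raise ValueError("room limit reached")
        self.rooms[name] = mesh
        self.current_room = name

    def visible_meshes(self, sdk_version: int) -> Dict[str, str]:
        """Before v65: only the current room. From v65: every captured room."""
        if sdk_version >= 65:
            return dict(self.rooms)
        if self.current_room is None:
            return {}
        return {self.current_room: self.rooms[self.current_room]}


store = SceneStore()
store.capture("living room", "mesh-A")
store.capture("kitchen", "mesh-B")  # the user is now in the kitchen

before = store.visible_meshes(sdk_version=64)
after = store.visible_meshes(sdk_version=65)
print(sorted(before), sorted(after))
```

In this model an app built against the older SDK sees only the kitchen, while one built against v65 sees both rooms, which is what allows virtual content to interact with surfaces in a room the user has not yet walked into.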

The ability for developers to disable the safety boundary in v66 is the key enabler that makes these Spatial Anchor and Scene Mesh improvements truly useful, since the user can now walk around their home freely, no longer confined to that boundary.

A major remaining limitation of multi-room mixed reality on Quest, however, is the need to have captured a Scene Mesh for each room in the first place. In contrast, Apple Vision Pro continuously scans your surroundings to keep its scene mesh up to date, with no setup process required. That approach also handles furniture being moved and avoids baking moving objects like people and pets into the mesh. Given all these advantages, I imagine continuous scanning must simply be too performance- and energy-intensive for Quest 3, hence Meta's static capture approach.