Quest Pro doesn’t have a depth sensor, despite Zuckerberg saying it would.

Quest Pro was previously referred to as “Project Cambria”, the codename it carried when it was teased at last year’s Connect. In May, Zuckerberg told Protocol that Cambria would “ship later this year” with an IR projector for active depth sensing. Referring to Quest 2’s camera-based depth reconstruction, he said “it’s kind of just hacked”, adding that “on Cambria, we actually have a depth sensor”. Hardware-level depth sensing is also “more optimized towards hands”, he said.

From the moment I saw Quest Pro in person, I looked for the depth sensor where the leaked schematics suggested it would be. But I saw nothing, even when I shone my phone’s flashlight directly at the spot. So I asked Meta for clarification. A company representative responded with the following statement:

> Meta Quest Pro uses forward facing cameras to measure depth in your environment. This works with 3D reconstruction algorithms to render your physical environment inside your headset via Passthrough.
>
> Mark was referring to a different depth sensing system included in a previous prototype version of the device.
>
> For context, we’re constantly testing and refining our products before launch and it’s common for hardware changes to happen between versions of the device as we build and develop.

Interestingly, though, in my brief time with Quest Pro, hand tracking worked better than I’m used to on Quest 2. That’s probably down to its forward-facing cameras having four times as many pixels. Meta has significantly improved hand tracking even on Quest 2 thanks to recent advances in neural networks, so it’s possible these advances mean a depth sensor is no longer strictly necessary for high-quality hand tracking.

But a depth sensor would probably have brought significant benefits to one of Quest Pro’s key use cases: mixed reality. Devices with depth sensors, like HoloLens 2, iPhone Pro, and iPad Pro, can automatically scan your room to generate a mesh of its walls and furniture, so that virtual objects can appear behind or on top of real ones. This is crucial for convincing mixed or augmented reality.

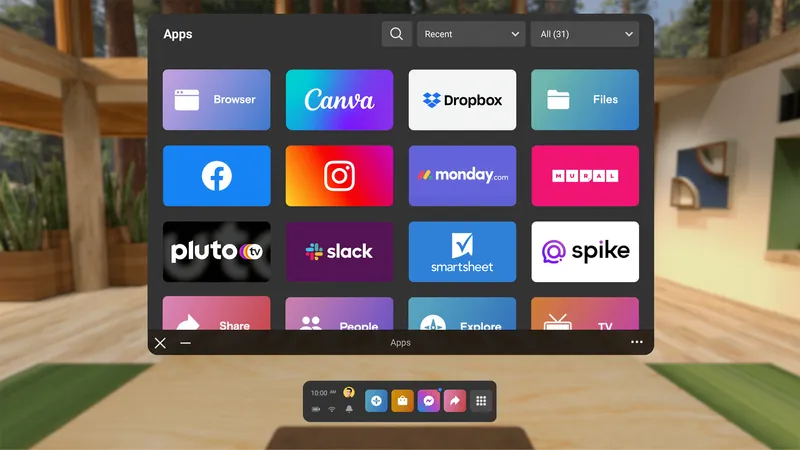

Right now, mixed reality on Quest Pro requires manually marking out your walls, ceiling, and furniture, just as it does on Quest 2. This is an arduous process with imperfect results, adding significant friction to mixed reality.

Meta told us it’s looking into automatic plane detection (recognizing flat surfaces like walls and tables), but said it won’t be available at Quest Pro’s launch.