As we walked around the Los Angeles Convention Center this week recording what we saw at SIGGRAPH, I realized the computer graphics conference was looking back at us.

Around every corner, cameras, computers, and TVs broadcast their real-time interpretation and tracking of human movement. A startup called Wrnch, for example, found a spot across from the Oculus Medium booth, where people were sculpting future physical objects in Facebook’s VR art application. Wrnch pointed a standard webcam at the stream of people walking between the two booths. The company says it can track the movements and gestures of more than 30 people at once, all powered by a small box sitting on the ground nearby. The system recognizes each person’s pose as well as some simple gestures, like a thumbs-up.

In our 39-minute video walkthrough of the SIGGRAPH show floor below, you can see multiple companies demonstrating similar technologies.

Human Activity Capture

Several SIGGRAPH exhibitors strapped flexible booth workers into tracked suits to demonstrate more robust performance capture: the kind accurate enough that the movements can be incorporated into movies, TV shows, video games, and virtual worlds.

More than two dozen OptiTrack cameras tracked five people in real time, all of them wearing suits while performing gymnastic-style movements. Rokoko, by contrast, fine-tunes the sensors in its suit to track body movement without any external cameras. Its SIGGRAPH demo showed how an iPhone can be mounted to the suit for simultaneous facial capture. We’ve seen this content development pipeline demonstrated before, but SIGGRAPH 2019 showed that a number of companies are now incorporating the world’s most popular phone into performance capture.

New Appendages And Displays

A forward-looking area of the conference focuses on exploratory research, like a robotic tail. The device offers a sense of how our balance might be augmented by a new appendage that follows us everywhere. Even this tail, though, would likely need to understand an individual’s body movements in incredible detail to provide any real benefit.

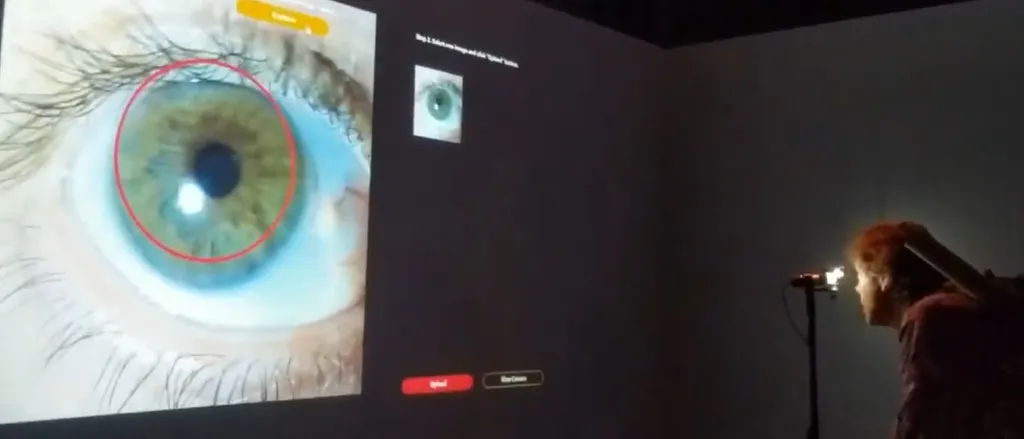

Sony’s display technology at SIGGRAPH needs to know your head’s position to show you a fairly convincing holographic image. Meanwhile, display research from NVIDIA showed the possibilities of a new kind of optics for AR systems. The system is meant to detect an eye’s movement and then reposition a high-resolution display so quickly that your brain can’t even register what happened right in front of your eyes.

And of course, there’s no better example of the falling cost and growing availability of complex computer vision systems than the Oculus Quest. We saw developers pull the $400 headset out of their bags and put it on people wherever they stood. Facebook uses the headset’s four outward-facing cameras to determine the position of the head and hands at any given moment.

Artistic Visions

A corner of the conference set aside for art exhibits offered some of the most thoughtful explorations of this brave new world.

Artist Neil Mendoza used voice recognition to turn words spoken by attendees into projectiles. You step up to the microphone and say something, then watch your words get launched across a 2D screen, only to be kicked around and added to the pile. The installation, called “The Robotic Voice Activated Word Kicking Machine,” then speaks the word back out into our world. That utterance, though, is distorted so that it sounds nothing like the original voice.

That seems a fitting exploration of technology, given the way the Internet has been used in recent years. Now that your intimate, individualized body movements can be recorded, saved, and reproduced over the Internet in incredible detail on far less expensive hardware, I hope more people will ponder this piece as we look toward SIGGRAPH 2020 and beyond.