To understand what a Light Field Volume is, we first need to grasp that a captured Light Field consists of rays of light traveling in every direction, each described by its brightness, its color, and its path (the ray's direction and position). To learn more about what a Light Field is, here's Ryan Damm's previous article on the subject.
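One way to picture a single Light Field sample is as a small record holding exactly those quantities: a position and a direction (together, the ray's path), plus color and brightness. The sketch below is purely illustrative; the `LightRay` class and its field names are hypothetical and do not describe any Lytro data format.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class LightRay:
    """One illustrative light-field sample (hypothetical layout)."""
    position: tuple[float, float, float]   # a point the ray passes through
    direction: tuple[float, float, float]  # direction of travel
    color: tuple[float, float, float]      # RGB in [0, 1]
    brightness: float                      # relative intensity

    def __post_init__(self):
        # Store every direction as a unit vector so rays are comparable.
        dx, dy, dz = self.direction
        norm = math.sqrt(dx * dx + dy * dy + dz * dz)
        if norm == 0:
            raise ValueError("direction must be non-zero")
        object.__setattr__(self, "direction", (dx / norm, dy / norm, dz / norm))

    def point_at(self, t: float) -> tuple[float, float, float]:
        """Position along the ray's path after traveling distance t."""
        px, py, pz = self.position
        dx, dy, dz = self.direction
        return (px + t * dx, py + t * dy, pz + t * dz)
```

Position plus direction is what distinguishes a Light Field from an ordinary photograph, which keeps only color and brightness per pixel and discards where each ray came from.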

When light illuminates a scene, light rays bounce off objects, reflecting in every direction and mixing the colors of each surface they hit. With each bounce some of the light is absorbed, so the rays gradually lose their energy. Some rays are occluded (blocked), creating shadows, while others bounce at different intensities and appear to viewers as reflections or highlights.

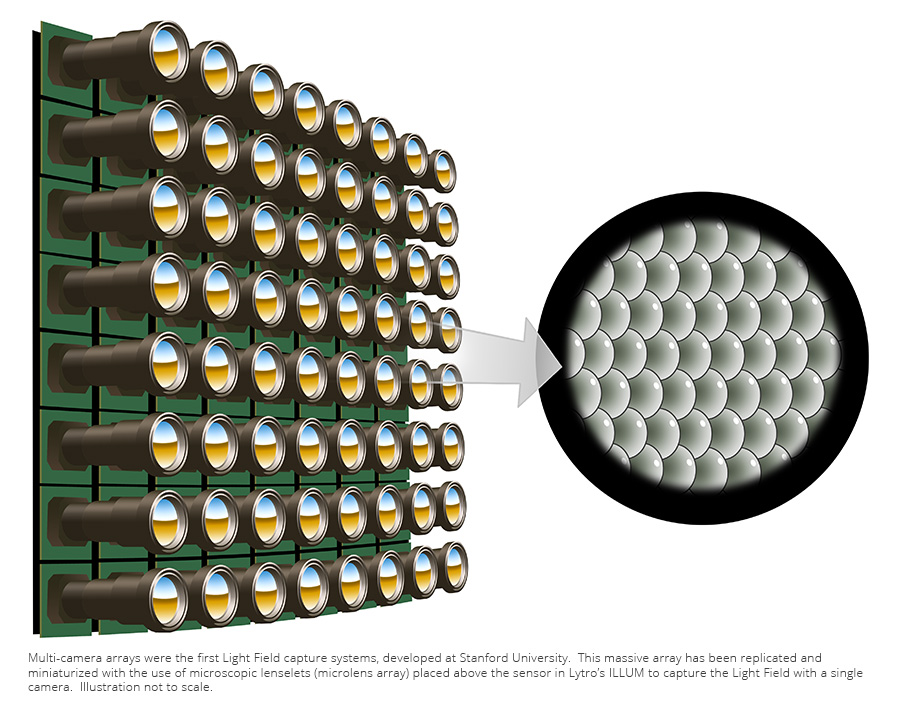

A Light Field can be captured either with an array of multiple cameras or with a plenoptic device such as the Lytro ILLUM, which places an array of microlenses across its sensor. In both cases the core principle is the same: the capture system must record the paths of light rays from multiple viewpoints.
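For the microlens case, a common textbook way to see how one sensor records multiple viewpoints: each microlens samples a position, and the pixels beneath it sample the directions of the rays arriving there. Taking the same pixel from under every microlens yields one viewpoint (a "sub-aperture" image). This sketch assumes an idealized sensor where each microlens covers a square `lens_px` × `lens_px` block of pixels; the function names are hypothetical, and a real device such as the ILLUM needs calibration and resampling that are not shown.

```python
def decode_plenoptic(raw, lens_px):
    """Split an idealized plenoptic raw image into a 4D light field.

    raw: 2D list (rows x cols) of pixel values, where each microlens is
    assumed to cover a lens_px x lens_px block on a square grid.
    Returns lf[s][t][u][v]: (s, t) selects the microlens (spatial sample),
    (u, v) selects the pixel under it (angular sample, i.e. ray direction).
    """
    rows, cols = len(raw), len(raw[0])
    if rows % lens_px or cols % lens_px:
        raise ValueError("image size must be a multiple of lens_px")
    n_s, n_t = rows // lens_px, cols // lens_px
    return [
        [
            [
                [raw[s * lens_px + u][t * lens_px + v] for v in range(lens_px)]
                for u in range(lens_px)
            ]
            for t in range(n_t)
        ]
        for s in range(n_s)
    ]


def sub_aperture_view(lf, u, v):
    """Gather pixel (u, v) from under every microlens: one viewpoint."""
    return [[lens[u][v] for lens in row] for row in lf]
```

Shifting `(u, v)` moves the virtual viewpoint slightly across the main lens aperture, which is the small-scale version of what a camera array does with physically separate cameras.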

With the recently announced Lytro Immerge Light Field camera, the light rays' paths are captured by a densely packed spherical array of proprietary camera hardware combined with computational technology. In this spherical configuration, a sufficient set of Light Field data is captured from the light rays that intersect the camera's surface. From that data, the Lytro Immerge system mathematically reconstructs a spherical Light Field Volume roughly the same physical size as the camera.
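Why is the surface enough? In free space, any ray that reaches the interior of a sphere must first cross its surface, so recording each crossing ray by *where* it crosses (two angles on the sphere) and *which way* it travels (two more angles) gives a 4D description of all light entering the volume. The sketch below shows that illustrative parametrization for a sphere centred at the origin; it is a generic construction, not Lytro's internal format, and the function names are hypothetical.

```python
import math


def to_angles(x, y, z):
    """Unit vector -> (polar angle theta, azimuth phi) in radians."""
    theta = math.acos(max(-1.0, min(1.0, z)))
    phi = math.atan2(y, x)
    return theta, phi


def ray_sphere_entry(origin, direction, radius):
    """First point where a ray from outside enters the sphere, or None."""
    ox, oy, oz = origin
    n = math.sqrt(sum(comp * comp for comp in direction))
    dx, dy, dz = (comp / n for comp in direction)
    # |o + t d|^2 = r^2 with unit d  ->  t^2 + 2 b t + cq = 0
    b = ox * dx + oy * dy + oz * dz
    cq = ox * ox + oy * oy + oz * oz - radius * radius
    disc = b * b - cq
    if disc < 0:
        return None  # the ray misses the sphere
    t = -b - math.sqrt(disc)  # smaller root = entry point
    if t < 0:
        return None  # origin is inside the sphere, or the sphere is behind it
    return (ox + t * dx, oy + t * dy, oz + t * dz), (dx, dy, dz)


def spherical_ray_coords(origin, direction, radius):
    """4D coordinates (theta_p, phi_p, theta_d, phi_d) of a captured ray."""
    hit = ray_sphere_entry(origin, direction, radius)
    if hit is None:
        return None
    (px, py, pz), d = hit
    theta_p, phi_p = to_angles(px / radius, py / radius, pz / radius)
    theta_d, phi_d = to_angles(*d)
    return theta_p, phi_p, theta_d, phi_d
```

For example, a ray starting at `(0, 0, 5)` and heading straight down the z axis enters a unit sphere at its north pole (`theta_p = 0`) traveling in direction `theta_d = π`.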

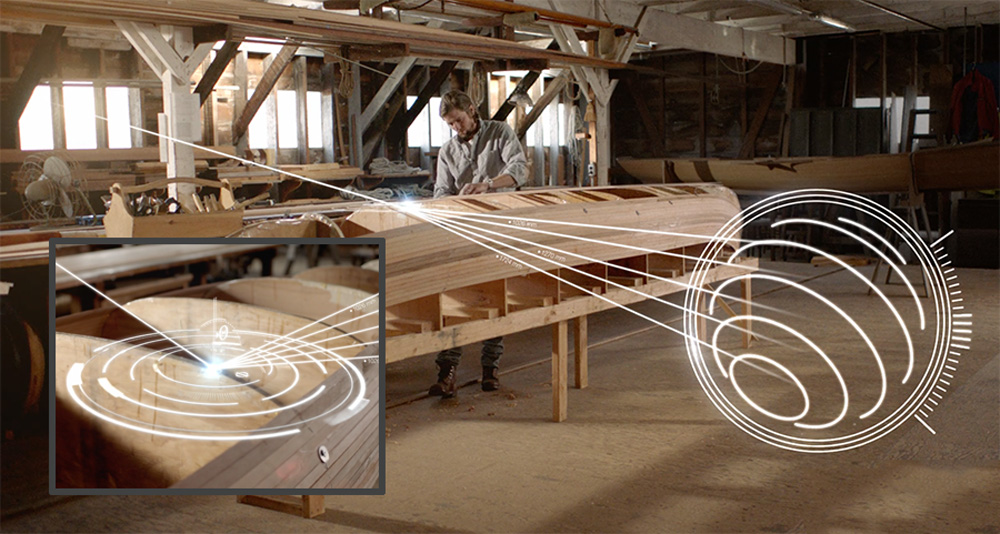

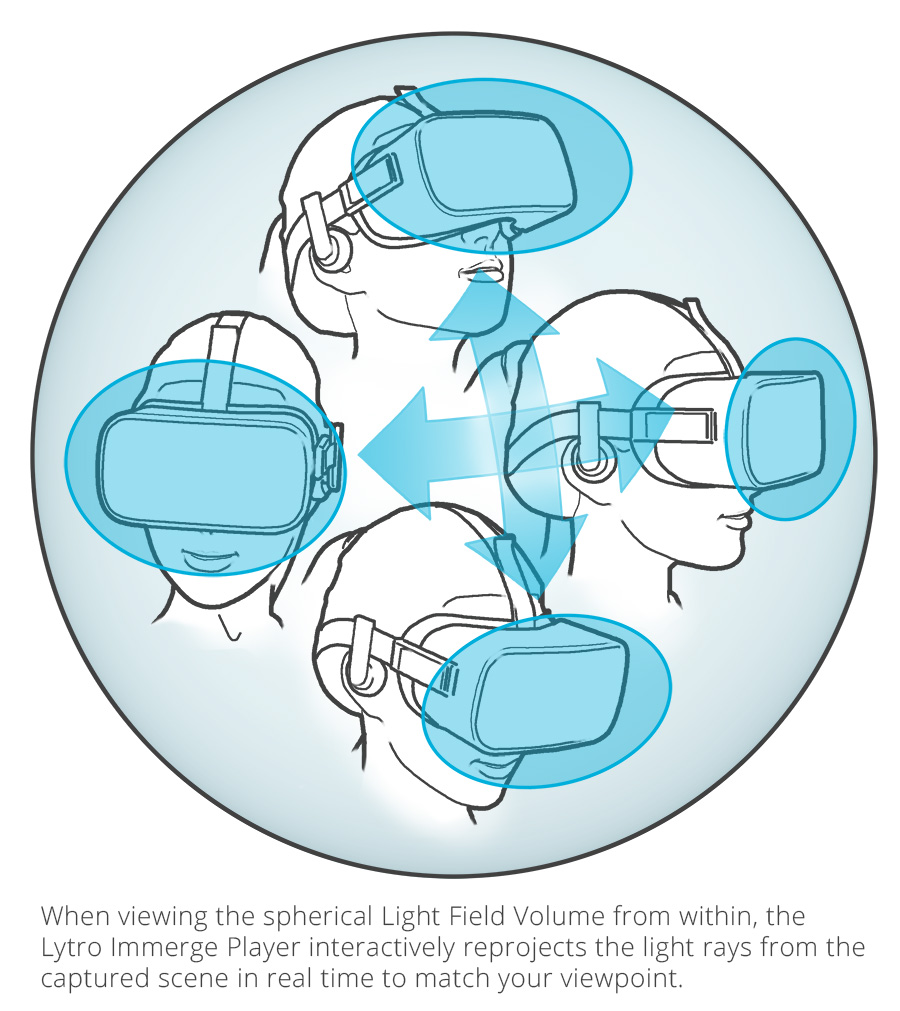

From any location within that spherical Light Field Volume, every viewpoint of the surrounding 360º scene can be recreated virtually, from furthest left to furthest right, top to bottom and front to back, in every direction and at every angle. The Lytro Immerge Player uses the Light Field Volume data to reproject the captured light rays and render the scene virtually, preserving each ray's color, brightness, and path. The Lytro Immerge Player automatically performs this critical last step, reconstructing the correct flow of light in the scene to deliver full parallax movement, natural reflections and shadows, all without any stitching artifacts.
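The general principle behind reprojection: in empty space a ray keeps its color and brightness along its path, so the light an eye inside the volume sees in a given direction is the light that crossed the capture sphere along that same line. A minimal sketch of that lookup, assuming a sphere centred at the origin with nothing inside it, and using a hypothetical `lookup` callback in place of a real query into captured data. This illustrates generic light field rendering, not the Lytro Immerge Player's internals; a production renderer would also interpolate between nearby captured rays.

```python
import math


def exit_point(eye, view_dir, radius):
    """Where the line of sight from an eye inside the sphere meets its surface."""
    n = math.sqrt(sum(c * c for c in view_dir))
    v = [c / n for c in view_dir]
    b = sum(e * d for e, d in zip(eye, v))
    cq = sum(e * e for e in eye) - radius * radius
    if cq > 0:
        raise ValueError("eye must be inside the capture sphere")
    t = -b + math.sqrt(b * b - cq)  # larger root: the crossing in front of the eye
    return tuple(e + t * d for e, d in zip(eye, v)), tuple(v)


def reproject(eye, view_dir, radius, lookup):
    """Color seen from `eye` along `view_dir`, read from captured surface rays.

    `lookup(point, direction)` is a hypothetical stand-in for a query into
    the captured light field, not a real player API.
    """
    q, v = exit_point(eye, view_dir, radius)
    incoming = tuple(-d for d in v)  # the light travels back toward the eye
    return lookup(q, incoming)
```

Moving the eye changes which surface point each viewing direction maps to, which is why parallax falls out of this lookup without any stitching.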

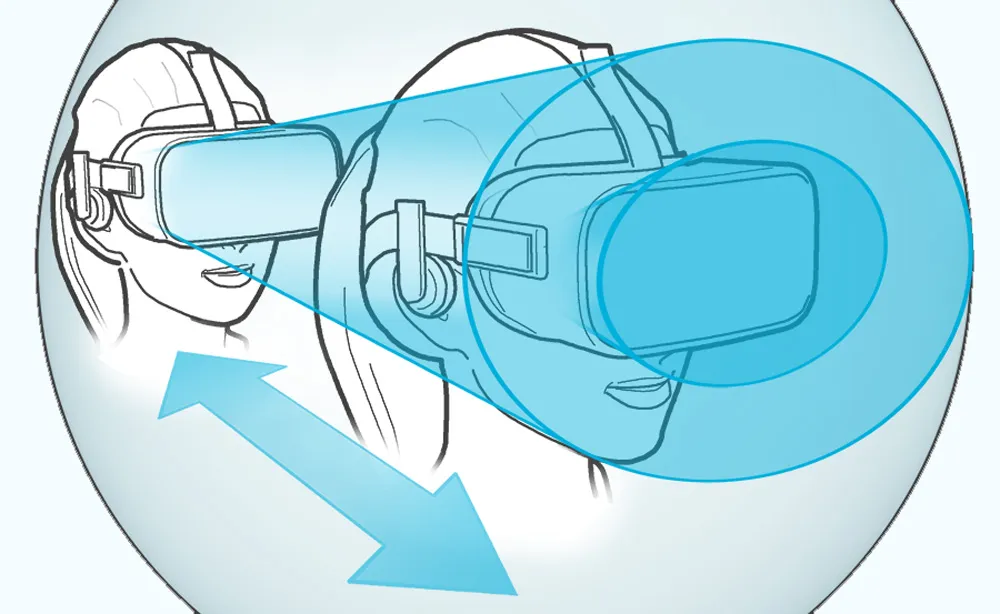

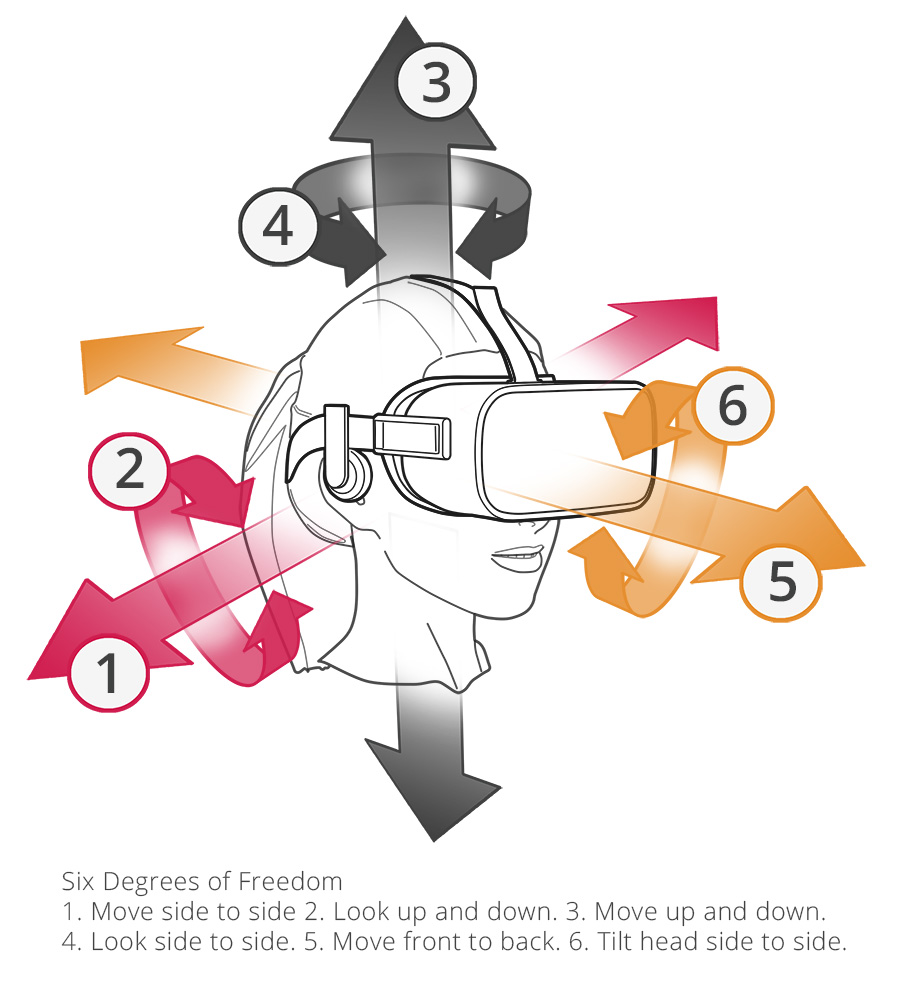

When viewed through a VR Head Mounted Display, with the viewer placed virtually inside the captured Light Field Volume by the Lytro Immerge Player, the reconstructed scene is displayed as perfect left/right stereo views throughout the entire volume, at whatever interpupillary distance (IPD, the distance between the viewer's eyes) gives the best viewing experience. Viewing a Light Field Volume from within, the viewer enjoys a truly lifelike, immersive sense of virtual presence, with full Six Degrees of Freedom and uncompromised stereo perception at any IPD. Whether moving forward, moving side to side, or turning one's head, the scene shows the same natural parallax as if the viewer were looking at the original scene: objects closer to your point of view shift naturally as you move side to side or back and forth.
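Because any point inside the volume can serve as a viewpoint, stereo at an arbitrary IPD amounts to computing two eye positions from the tracked head pose and the chosen IPD, then reprojecting once per eye. A minimal sketch, assuming a hypothetical coordinate convention (y up, yaw = 0 looking along -z) and yaw-only head rotation; `eye_positions` is an illustrative name, not a player API.

```python
import math


def eye_positions(head, yaw, ipd):
    """Left/right eye centres for a head at `head` (x, y, z) turned by `yaw` radians.

    Assumed convention: y is up, yaw = 0 looks along -z, and the eyes sit
    ipd / 2 to either side along the head's right vector.
    """
    right = (math.cos(yaw), 0.0, -math.sin(yaw))  # forward x up for this convention
    half = ipd / 2.0
    left_eye = tuple(h - half * r for h, r in zip(head, right))
    right_eye = tuple(h + half * r for h, r in zip(head, right))
    return left_eye, right_eye
```

Since the two eyes are always exactly `ipd` apart along the head's own right vector, the stereo baseline turns with the head instead of staying fixed in the scene.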

In comparison, with typical stereo spherical 2D capture rigs (a cluster of 2D cameras arranged as a ring or sphere), the individual videos from each camera must be laboriously stitched into a pair of spherical 2D video streams. These two streams are recorded at a best-guess IPD, pre-determined and locked in space when the distance between each pair of cameras was set. As a result, the stereo depth perception in the VR video is only correct in a single direction and the stereo effect degrades as the viewer’s head turns.
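A simplified way to see why a locked-in camera spacing is only correct in one direction: consider a single fixed camera pair. Only the part of its baseline perpendicular to the viewing direction produces left/right disparity, so that part shrinks by a factor of |cos(yaw)| as the viewer turns away from the direction the pair faces. Under a pinhole model, disparity is proportional to baseline, so the mismatch with the viewer's own IPD follows directly. Real stitched rigs blend many camera pairs, so their behavior is more complicated; this sketch, with hypothetical function names, captures only the single-pair intuition.

```python
import math


def effective_baseline(baseline, yaw):
    """Stereo baseline a fixed camera pair still provides when the viewer
    turns `yaw` radians away from the direction the pair faces."""
    # Only the component perpendicular to the view direction yields disparity.
    return baseline * abs(math.cos(yaw))


def disparity_error(baseline, viewer_ipd, yaw):
    """Relative error of the recorded disparity versus the viewer's own eyes
    (0.0 means correct; -1.0 means no stereo at all)."""
    return effective_baseline(baseline, yaw) / viewer_ipd - 1.0
```

With the pair's spacing matched to the viewer's IPD, the error is zero when looking straight ahead, about -50% at 60º of yaw, and stereo vanishes entirely at 90º.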

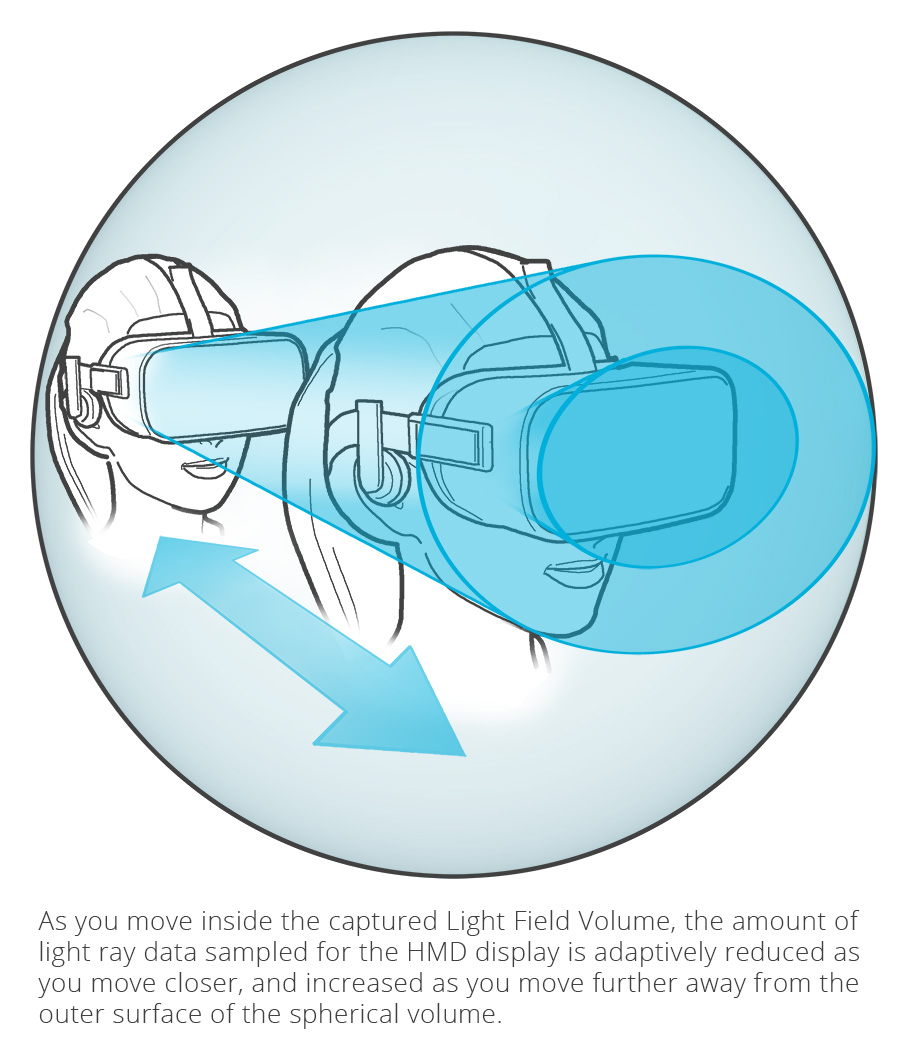

Conversely, as you look around within the Light Field Volume using the Lytro Immerge Player, the reprojected scene responds interactively to your head's motion, in real time, to precisely match your point of view. Move closer to the edge of the volume and a smaller area of light ray data is used. Pull your head back and more light ray data is included in the reconstruction. As you pan and twist, the player reprojects the correct Light Field rays for your exact viewpoint. The size and shape of the capture rig directly define the region in which a viewer can physically move while exploring a reconstructed Light Field Volume. In the case of the Lytro Immerge, the navigable Light Field Volume is slightly smaller than the camera itself.
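Because the navigable region is bounded by the rig, a player needs some policy for tracked positions that fall outside it. The article does not say what the Lytro Immerge Player does at the boundary; the sketch below shows one simple, hypothetical policy, clamping the eye back onto a spherical navigable volume centred at the origin. The `margin` parameter is an assumption standing in for "slightly smaller than the camera", not a published figure.

```python
import math


def clamp_to_volume(eye, capture_radius, margin):
    """Keep a tracked eye position inside a spherical navigable volume.

    The navigable radius is assumed to be capture_radius - margin, where
    `margin` is a hypothetical parameter. Returns (position, was_clamped).
    """
    limit = capture_radius - margin
    if limit <= 0:
        raise ValueError("margin must be smaller than the capture radius")
    dist = math.sqrt(sum(c * c for c in eye))
    if dist <= limit:
        return tuple(eye), False
    scale = limit / dist
    return tuple(c * scale for c in eye), True  # pulled back onto the boundary
```

Clamping keeps every reprojected line of sight starting inside the captured sphere; a real player might instead fade or warn the viewer, which is a design choice this sketch does not address.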

Lytro Immerge is designed to be configurable so it can meet the requirements of various productions and cinematic projects. In the case of a 360º scene, light ray information from every direction is captured in the Light Field Volume. Other scenes may need only 180º views, or even planar views, and the Lytro Immerge can be configured to capture a Light Field Volume that suits the needs of each story. The Light Field Volume can even be combined with CG elements, for example to stage a musical performance on a 3D set or to create a live-action backdrop for CG characters. Content creators can also merge captured volumes in post-production to create larger or differently shaped virtual scenes. In future blog posts in this series, we'll cover how Light Field Volumes of various shapes, along with compositing techniques, can play a part in cinematic live-action content creation for VR.

Steve Cooper is the Director of Product Marketing at Lytro and has been involved with 3D illustration, animation and character animation tools since the mid-1990s.