EDITOR’S NOTE: This article is a sponsored post by Intel. It was written by one of our New York correspondents, James Dorman, and edited by our Editor-in-Chief, Will Mason.

In a space where I constantly hear about sensors such as Leap Motion and Microsoft’s Kinect, Intel is getting the word out about its own “natural user interface” technology appropriately named RealSense.

One of the ways in which they are doing this is through “educate-and-excite” hackathons. I recently had a chance to experience one firsthand in New York City. After a presentation introducing the second version of the company’s SDK, developers familiar with the space were asked to create something over the course of three hours. While they were busy experimenting, I went around and asked the developers what they would want to use this technology for. Their answers ranged from something as simple as scrolling down a page to creating a physics simulation lab.

RealSense is aiming to become a Swiss Army knife of sorts for this space: Intel has been loading it with a ton of different features while keeping its size minimal.

Features include:

- Hand and finger tracking

- Speech recognition

- Segmentation (background removal)

- 3D scanning

- Augmented Reality

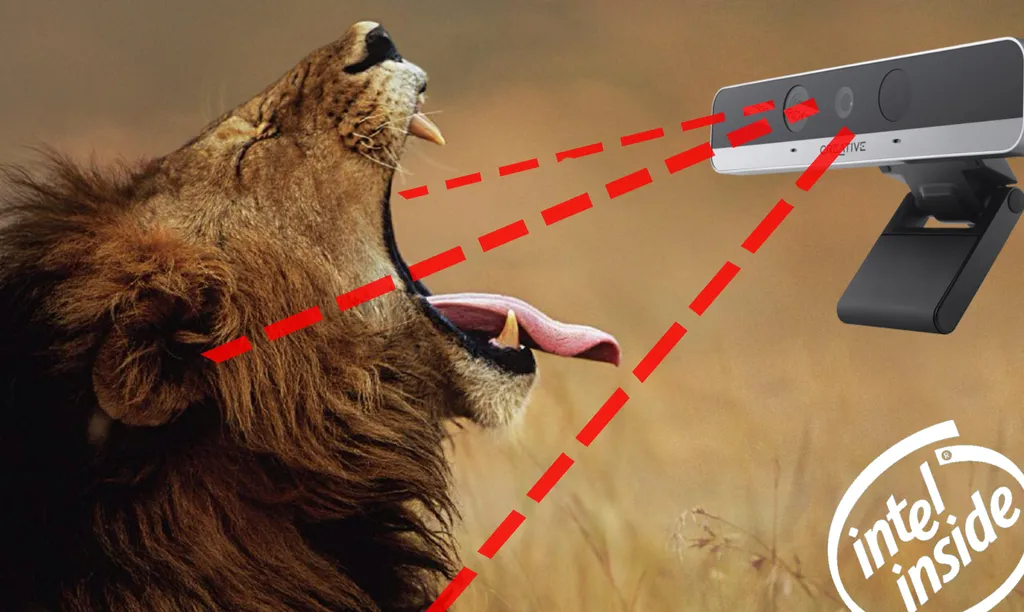

However, the most interesting feature I found was RealSense’s detailed facial recognition. RealSense is designed for close-range interactions (20 cm to 120 cm). The company said it wants to make it even better, but as it stands now it is a notable improvement when compared to its peers.

RealSense has what the company calls “emotion tracking” and can support six primary emotions. Dennis Roberts won the hackathon’s “expression game,” which uses facial expressions as controls to move a ball around in space. Having used it myself, I found it fun to notice how smoothly the ball moves one way when you stick your tongue out and then another way when you raise your eyebrows. The idea of the game was to navigate a ball within a set environment and absorb other balls. Dennis said that the game teaches self-control and body awareness. After you succeed in making the ball go one way, you tend to get excited and smile, which then moves the ball in another direction. The trick, he says, is “to stay calm and aware.”

A more practical application came up during the presentation, when they gave an example of how RealSense could help make reading fun for a child. Let’s assume the child is reading the part in Jack and the Beanstalk where the stalk grows and grows. While he or she is reading, a real-time animation shows the stalk growing. If the child stops reading, however, the animation stops. This interaction can give the child a fun incentive to keep reading in order to see what will happen next. Who wouldn’t want to see that stalk keep growing?

For those of us who have graduated from elementary school, there are other exciting applications to note. How about using your face as a password for your computer? Yes, Intel is working on fine-tuning the technology for that as well. RealSense even has the ability to calculate your pulse using computer imaging technology – which is pretty darn cool if you ask me.

It is clear that gesture control and more natural interfaces are going to advance rapidly as the VR and AR market grows. Intel has already made plans for future growth with the R-200 version of RealSense, which will be coming out later in the year.

Some of the features of the R-200 will include:

- Larger range (a couple of meters)

- Made for both indoor and outdoor use

- Ability to scan rooms

- Option to 3D scan and print

Other future modifications that are being discussed include:

- Voice recognition

- Ability to recognize a greater number of emotions

Things can get a bit slow when using RealSense to navigate the web. Having to swipe your hands three times to scroll a webpage once takes a bit of the “natural” out of “natural user interface.” However, the developers and I found that things really smooth out in comparison once you use Unity. Intel has made it its mission to integrate this technology into laptops, so it will be interesting to see how people use it at a larger scale.

While I was making my rounds, one developer summed things up quite well, stating, “I like the idea of small devices doing awesome things.” With the exception of a few needed improvements, I agree.