Great news! OTOY has just demonstrated light field capture for VR, for real, for the first time! The images look pretty amazing. This isn’t just light field for depth mapping … this is full, accurate, correct look-around for head tracking in live action VR.

(UPDATED: we erroneously reported the rig uses two cameras; the second camera is simply there as a counterweight. Don’t believe everything you see.)

Well, sort of. The action is only somewhat live, it’s more like a still life. Check out the rig they used here:

Confused? Check it out in motion. It’s scanning all around, taking a few minutes to capture the light field with a single camera (the second body on the arm is just a counterweight).

Obviously, this doesn’t work with moving subject matter, so we now have light field for still life. We could reasonably use this approach for stop motion animation, but not shooting traditional live-action VR.

But take nothing away from OTOY, this is still a super clever approach. Live action VR is hard, and an array that could capture light field in real time would be harder (you know, physics and all). At a minimum, this prototype is a tech demo to show off the power of OTOY’s light field render engine. And it’s an impressive one, which will matter more when someone, anyone, creates a real time light field camera (you paying attention yet, Lytro?).

Also, small aside: this could be an important part of capturing static backgrounds; imagine shooting live action VR on a greenscreen and comping in a light field background captured by this scanning rig … apart from the challenges of a hybrid approach, it seems like a reasonable way to generate live action VR. It might also work well with fully CG subject matter, providing a live action backdrop. So we’ll have to see how this is used, or if it’s just an incremental step along the way to full, real time live action light field VR (that’s a VR mouthful).

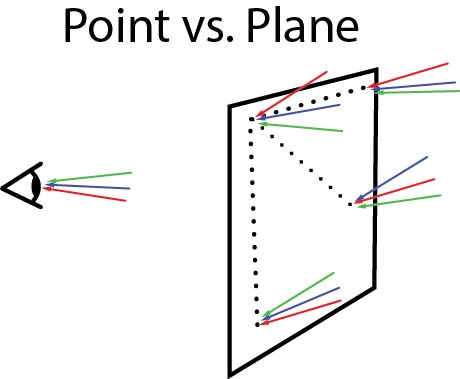

But back to the tech itself: how does it work? Let’s do some ‘splaining. Quick review: a light field is the set of all light rays that enter a surface or volume. We looked at this when talking about Lytro’s fund raise, but here’s the image again:

In my wild, Lytro-fueled speculation, I imagined using a dense microlens array to capture a real world light field. OTOY took a completely different approach: two (different) lenses, twirled and spun in a circle. They’re creating a virtual lens array by sampling the image across time. It’s a virtual camera array for virtual reality — fitting.

[UPDATE: So yeah, as noted earlier, the rig only uses a single camera, and a single lens (the ubiquitous Canon 8-15mm). The second camera visible in the still image, sans L-series glass, is simply there as a counterweight. Hat tip to Marko Vukovic for pointing this out. (This also explains OTOY CEO Jules Urbach’s confusion when I asked him about a ‘second lens.’) I was originally imagining the second lens might provide a different FOV for some clever super-sampling technique — wide lens for entire phi, theta range, longer lens for higher accuracy. But no, it’s just a camera see-saw. My bad.]

And the reason the virtual array works is the light field it’s sampling isn’t time-varying; it’s a still life. So if you take a photo, move the camera slightly to the right and take another photo, you’ve got two substantially similar photos. But the differences are the tiny variations in the light field from position to position.
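To make the "virtual array" idea concrete, here is a minimal sketch. It assumes a simplified rig: one camera on a single arm sweeping one circle, which is not necessarily how OTOY's two-axis spinner actually moves. The function names, the 0.3 m arm length, and the sample counts are all made up for illustration. Because the scene is static, every shutter click along the sweep stands in for one camera in an array, so taking more clicks per revolution gives a denser array.

```python
import math

def virtual_array_positions(radius_m, num_samples):
    """Camera centers for one revolution of a hypothetical single-axis arm.

    Each (x, y) pair is where the one real camera sits for one exposure.
    With a static scene, these act like num_samples separate cameras.
    """
    positions = []
    for i in range(num_samples):
        angle = 2.0 * math.pi * i / num_samples
        positions.append((radius_m * math.cos(angle),
                          radius_m * math.sin(angle)))
    return positions

def neighbor_spacing(positions):
    """Straight-line distance between two adjacent virtual cameras."""
    (x0, y0), (x1, y1) = positions[0], positions[1]
    return math.hypot(x1 - x0, y1 - y0)

# Assumed numbers: a 0.3 m arm, a 20-camera "physical style" ring,
# and a 2000-exposure sweep from the spinning rig.
sparse_ring = virtual_array_positions(radius_m=0.3, num_samples=20)
dense_sweep = virtual_array_positions(radius_m=0.3, num_samples=2000)

print("sparse spacing (m):", neighbor_spacing(sparse_ring))
print("dense spacing (m): ", neighbor_spacing(dense_sweep))
```

The point is only the ratio: the same arm with 100 times more exposures puts neighboring viewpoints roughly 100 times closer together, and it costs capture time rather than extra cameras.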

So, it’s a virtual camera array. Big deal, you say: some companies like Jaunt are using physical camera arrays, not virtual. Why are two Canon DSLRs (UPDATE: one plus a dummy) on a merry-go-round any kind of significant advance? Because of subsampling and aliasing. A real-world light field is mostly composed of low-spatial-frequency data: you move your head a little to the right or left, and the scenery doesn’t change significantly. (It changes enough that your two-eye view of the world provides stereoscopy, though.) But there are high frequency components too: the reflections off of shiny surfaces, glints and sparkles. You simply can’t capture those components of the image without a dense light field capture. Even high end sparse capture (like Jaunt’s) or inferred depth (like NextVR’s light-field-for-bump-mapping approach) will necessarily miss those tiny sparkles. In their press release, OTOY correctly points out how this affects our perception of materials:

“Reflections off of jewels, marble or hardwood flooring move accordingly as one changes perspective. Every photon of light is accurate to where one is in the scene and the materials that light is interacting with.”

So let’s take their cue, and instead of talking about frequency domain analysis, let’s take a concrete example. You’re a live-action cinematographer, and part of your scene is a glass of water (it’s a heart-stopping, thrill-a-minute show, no doubt). If you move around the glass of water, you see sparkles and glints reflected and refracted by the glass. You see a strange, distorted scene through the glass, one that changes quickly as you move around it. (Sound trivial? This is exactly how your brain understands that this thing is glass. It’s a stunning amount of implicit processing going on in our heads.) Small but bright glints of light (the sun refracted through the glass?) appear then disappear as you move around the scene.

Now, if you’ve got a sparse array, you might completely miss that glint because the rays of light are in a narrow bundle (that’s why it disappears when you move your head). You’d miss it if the rays of light landed between the lenses in your array. Worse, if it does happen to hit a lens in your array, you have no real way of knowing that it’s only a small glint — you can’t find the start and end of it — so you’re stuck smearing that glint out across a wider space. This is what’s called ‘aliasing,’ when you have image components that are higher frequency than your capture frequency. (DPs, remember the 5D mark II? Remember thin lines on shirts or chain link fences exploding into psychedelic color shows? That’s aliasing; the mark II’s pixel binning only sampled the image every few pixel columns, and the result was a capture frequency well below the frequencies present in the image.) The punch line is, whether you catch that glint or don’t, you’re reproducing a significantly lower frequency image if you’re doing sparse sampling. The result may feel plastic, dull, or dead.
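Here is a toy version of the two failure modes described above, as a sketch under loud assumptions: viewpoints live on a 1D line from 0 to 1, the glint is a narrow Gaussian bump in brightness along that line, and in-between views are rebuilt by plain linear interpolation between neighboring cameras. None of this is OTOY's (or anyone's) actual reconstruction; the names and numbers are invented to show the miss-or-smear effect.

```python
import math

def glint(x, center, width=0.004):
    """Brightness of a narrow glint seen from viewpoint x (toy Gaussian model)."""
    return math.exp(-0.5 * ((x - center) / width) ** 2)

def capture(num_cameras, center):
    """Sample the glint with evenly spaced cameras across viewpoints 0..1."""
    xs = [i / (num_cameras - 1) for i in range(num_cameras)]
    return xs, [glint(x, center) for x in xs]

def reconstruct(xs, values, x):
    """Rebuild the view at x by linear interpolation between neighbor cameras."""
    step = xs[1] - xs[0]
    i = min(int(x / step), len(xs) - 2)
    t = (x - xs[i]) / step
    return (1 - t) * values[i] + t * values[i + 1]

def lit_width(xs, values, threshold=0.1, probes=10001):
    """Fraction of viewpoints where the rebuilt glint is brighter than threshold."""
    lit = sum(1 for k in range(probes)
              if reconstruct(xs, values, k / (probes - 1)) > threshold)
    return lit / (probes - 1)

# Case 1: the glint lands between two cameras of a 20-camera array.
between = 0.537
_, sparse_vals = capture(20, between)
_, dense_vals = capture(2001, between)
missed_peak = max(sparse_vals)   # sparse array barely registers the glint
dense_peak = max(dense_vals)     # dense sweep sees it at full brightness

# Case 2: the glint lands exactly on a camera of the 20-camera array.
on_camera = 10 / 19
sparse_xs, sparse_hit = capture(20, on_camera)
dense_xs, dense_hit = capture(2001, on_camera)
smeared_width = lit_width(sparse_xs, sparse_hit)  # stretched toward the neighbors
true_width = lit_width(dense_xs, dense_hit)       # close to the glint's real extent

print("peak seen, sparse vs dense:", missed_peak, dense_peak)
print("lit width, sparse vs dense:", smeared_width, true_width)
```

In case 1 the sparse array records almost nothing, so the sparkle is simply gone. In case 2 it records the sparkle but has no way to know how narrow it was, so the rebuilt glint hangs around across far more head positions than the real one would.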

OTOY’s whirligig, though, will catch that glint, from the beginning to the end (well, within reason: it’s still a discrete system, so there is an inherent sampling frequency … but judging from the demo video, it’s a lot higher than what you’d get from 20 or 50 cameras). The result should be a significantly more realistic representation. (I say ‘should’ because I haven’t had a hands-on yet. Go follow the links and you’ll know more or less exactly what I know. We’ll update you with more details when we get access, hopefully later today.)

[UPDATE: hands-on is here.]

That said, we’re still not capturing motion with this prototype. It’s still life only, furniture and bowls of fruit. You can get the reflections off of jewels, but not if those jewels are attached to an actor or actress. (Unless the actor is heavily sedated.) But that’s okay, this is just another step in the right direction. Hopefully the effect is profound enough to spur more advances on the capture side. And until then, the bar for live action VR — especially with head-tracking — has been raised yet again.

(Also, we’re getting closer to doing light fields justice. And that gives me warm fuzzies.)