I’ve always prized creativity. As a developer, writer and musician, it’s what drives me. But as much as I value creativity in my work and free time, I have to confess I’ve never really felt compelled to make visual art. That’s not to say I don’t apply a design eye in my web work or that I don’t have opinions about style — it’s just that my creativity manifests in other ways. I considered this a blind spot and never felt inspired to develop those skills.

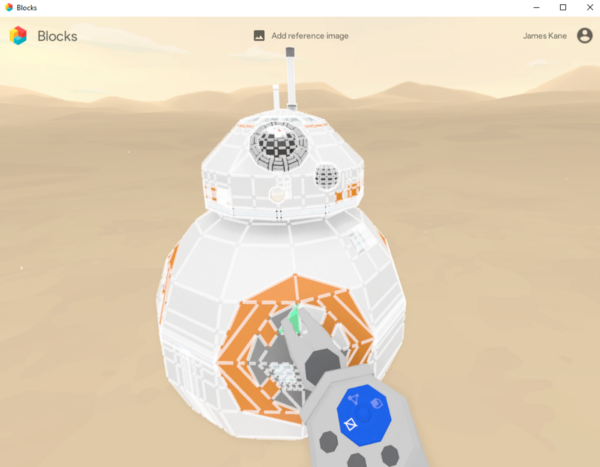

That was true until a few weeks ago, when I got my hands on a new class of virtual reality 3D creation tools including Google Blocks, Google Tilt Brush, Oculus Medium, Oculus Quill, et al. Each in its own way, these apps try to fundamentally change the process of 3D art and asset creation — modeling, sculpting, painting, etc. — moving away from two-dimensional computer screens and into room-scale three-dimensional VR space. Even in their infancy, these tools have already inspired me to think and work and create in entirely new ways.

The Old Way & The New

To contrast, think about 3D content creation happening on a standard 2D computer monitor. The simplest task, like rotating your subject, requires knowledge of a labyrinthine software suite and its many unique series of hotkeys, scrolls, mouse clicks, drags and so on (to say nothing of more complex modeling and sculpting tasks/techniques).

In new VR creation tools like my personal favorites, Google’s Blocks and Tilt Brush, you can literally step around what you’re working on, or reach out and grab it, physically manipulating and scaling objects effortlessly in real-time. It’s a revelation — a quick trailer:

https://www.youtube.com/watch?v=12dB1IeJW_I

There are true masters of the old way, for sure. But the barrier to entry is super high, and that comes on top of the already-Herculean task of having to be artistically inspired. Why shouldn’t this process be more natural, spatial and physical?

The work already being done with this technology by artists and developers at the highest levels is astounding:

Hey there! I’m Jarlan and I make robots, mechs and anything #scifi! New bot posted every Wednesday!! #gamedev #VirtualReality pic.twitter.com/x5lFKdfph5

— Jarlan Perez (@JarlanPerez) July 31, 2017

Can I get a “yaaaaay!” for magnets! #MadeWithBlocks #MadeWithUnity pic.twitter.com/Zd5PqHIowm

— naam (@_naam) July 31, 2017

I’m STILL flabbergasted that @Google invited me to be a VR Artist in Residence. Thx for EVERYTHING!❤️ Blog feature: https://t.co/dmLA8kiBkx pic.twitter.com/q4vVUjMPhS

— Estella Tse (@estellatse) July 18, 2017

My Process

So, what could I possibly do with it? Aside from a couple Blender tutorials, I’ve got no experience making 3D models (just pushing them around in Unity) and no real visual arts experience, period.

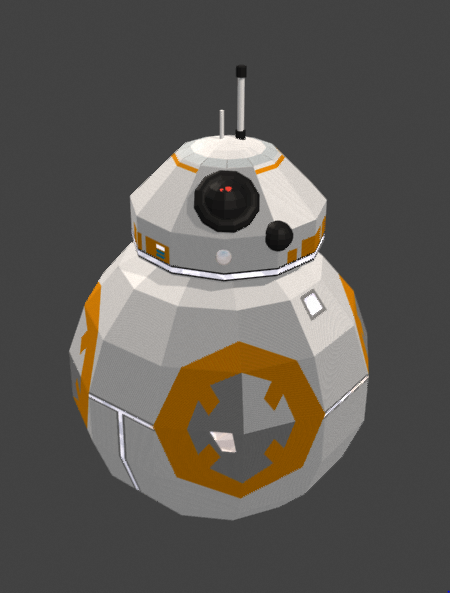

I’m an amateur, but as soon as I picked up the palette and drawing hand in Google Blocks, it just made sense. Compared with any 2D interface I’ve encountered, it’s incredibly intuitive, fun and compelling to model characters, objects and scenes in real space. While you can import models from Google’s crowd-sourced, public repository to construct a scene, I wanted to try my hand at some totally, completely original work.

Another option using VR creation tools would be to bring my unpainted model into another app for freehand painting with a wider array of brushes, effects and color options. But my drawing is lousy and I preferred the symmetrical simplicity of the low-poly patterns for this design; for this exercise, I wanted to experiment solely in Blocks.

I spent two-to-four hours (time flies) on my first-ever 3D model. It was a truly exciting learning experience. Considering this is a brand new tool exploring brand new UX parameters, I admire what the Google team has already accomplished and look forward to continued improvements.

So, that’s it, right? I made a model — what now? Ah-ha, but you can publish your models to Google’s public repository so they can be downloaded and reused in other people’s scenes. Check out my full BB-8 model here which you can inspect, manipulate, download and remix yourself.

Even more powerfully, you can export Blocks models and scenes in .obj format — with materials included in .mtl — for use elsewhere, including other creation tools, 3D engines, VR/AR and 3D printing. For me, this is where Unity comes in.

For the uninitiated, Unity is the game engine that powered last year’s smash hit Pokémon Go and many thousands of indie and high-end titles besides. It’s extremely easy to use while also being spectacularly powerful and customizable. I started learning Unity in C# two years ago (despite or perhaps because of my years in web development, I steered clear of the JavaScript API) and I’m still regularly blown away by the amount of free, amazing resources available to Unity developers.

I’m using an Oculus Rift, so my path of least resistance in Unity is to prototype a scene using the Oculus Sample Framework from the Unity Asset Store (another Unity strength, that asset dispensary). This package provides many of the core prefabs and scripts you’ll need to put together a totally custom Oculus app. It’ll give you access to an API to program responses to HMD and Touch controller input data, as well as standard models to visualize HMD and controller tracking in real-time.

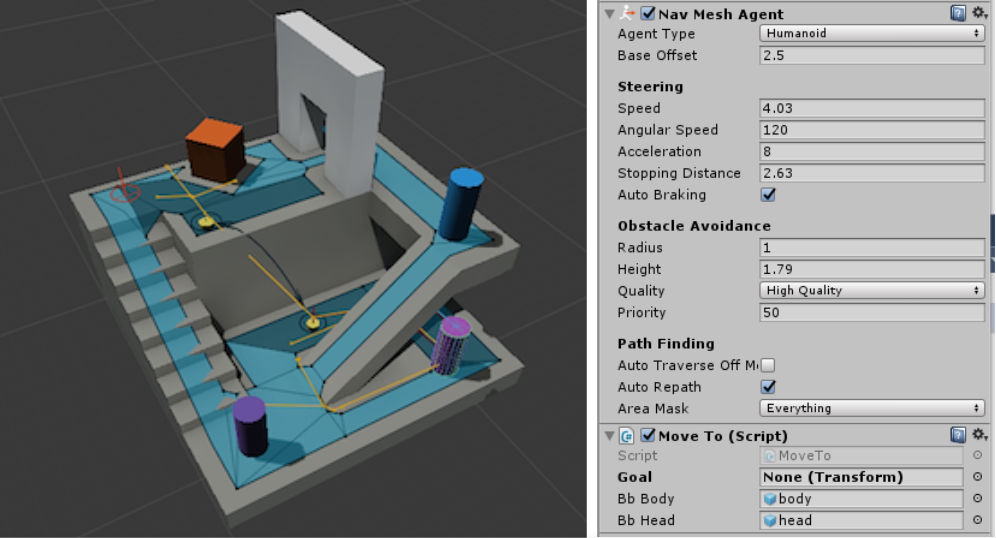

Once those controls were in place, my initial game logic goal was simple: BB-8 should roll to wherever I’m pointing.

Again, Unity is so powerful and these use cases so well-trodden that certain aspects of this are practically free to implement. By establishing a NavMesh on the game floor and adding a NavMeshAgent component to BB-8, he instantly knows how to move about the terrain once given a goal destination, navigating obstacles and even coordinating with other moving NavMeshAgents in real time.

Since his goal destination should be wherever I’m pointing to, I just need to do the following:

- detect when the pointing gesture is being made

- when that happens, get the transform value at the end of the raycast extending X units (arbitrary) from the pointer finger. If the raycast hits the ground, get the transform of the collision point

- set BB-8’s NavMeshAgent goal destination to that transform

- apply some rotation animation to BB-8’s ball-body while he’s moving, maybe with a little dust trail effect at his base

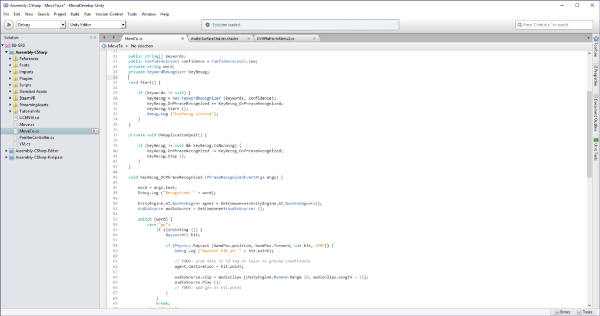

Interestingly enough, I don’t think there are direct API methods to detect common Touch controller hand gestures (like making a fist or pointing). Instead, I had to monitor several inputs at once for the magic combination that makes up the gesture, as done in a rudimentary way below:

    // Rudimentary pointing check: index finger lifted off the trigger
    // while the thumb is resting on the thumb rest.
    private bool IsPointing () {
        // if (!OVRInput.Get(OVRInput.Touch.PrimaryIndexTrigger)) { Debug.Log("No PrimaryIndexTrigger touch detected, could be pointing!"); }
        // if (OVRInput.Get(OVRInput.Touch.PrimaryThumbRest)) { Debug.Log("PrimaryThumbRest is being touched!"); }
        return !OVRInput.Get(OVRInput.Touch.PrimaryIndexTrigger) && OVRInput.Get(OVRInput.Touch.PrimaryThumbRest);
    }

So I can call this in Update() and, if it returns true, ask BB-8 to roll to wherever I’m pointing. To get him to the goal destination, I just have to access his NavMeshAgent component and set its destination to the position of a transform I assign in the inspector.

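    // in our declarations:

    // stores transform data for BB-8's goal destination
    public Transform goalDestination;

    // allows us to access BB-8's body for rotation control
    public GameObject bbBody;

    // rotation multiplier; used below but never declared in the original snippet,
    // so this default is only a placeholder to tune in the inspector
    public float bbRot = 100f;

    void Update () {
        UnityEngine.AI.NavMeshAgent agent = GetComponent<UnityEngine.AI.NavMeshAgent>();

        // while pointing, send BB-8 toward the goal transform
        if (IsPointing()) {
            agent.destination = goalDestination.position;
        }

        // roll the body forward while BB-8 is still on his way
        if (agent.remainingDistance > 0.25f) {
            bbBody.transform.Rotate (agent.velocity.magnitude * bbRot * Time.deltaTime, 0f, 0f);
        }
    }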

The last conditional is a primitive rotation for BB-8’s body (but not his head, which I’ve separated out as another GameObject in my hierarchy), which will cause it to roll forward on its X axis whenever BB-8 has a goal destination but hasn’t arrived there (i.e. the NavMeshAgent is moving). The amount of rotation will be roughly determined by the velocity BB-8 is moving at, which we can easily set in the editor via NavMeshAgent (some braking speed is also conveniently baked in).
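The snippet above reads the goal from a transform set in the inspector, so the raycast step from the earlier list isn't shown. A minimal sketch of that step might look like the following. It is not the original project's code: the `PointerGoal` class, the `pointer`, `maxDistance` and `groundMask` fields, and the 2-unit sampling radius are all assumptions made for illustration. It relies only on Unity's standard `Physics.Raycast`, `NavMesh.SamplePosition` and `NavMeshAgent.SetDestination` calls.

```csharp
using UnityEngine;
using UnityEngine.AI;

// Hypothetical helper, not from the original project. Attach it to BB-8
// (the object with the NavMeshAgent) and assign a fingertip transform
// to `pointer` in the inspector.
public class PointerGoal : MonoBehaviour
{
    public Transform pointer;          // assumed: sits at the index finger, forward = pointing direction
    public float maxDistance = 10f;    // the arbitrary "X units" the ray extends
    public LayerMask groundMask = ~0;  // layers the ray may hit; defaults to everything

    private NavMeshAgent agent;

    void Awake()
    {
        // Cache the agent once instead of calling GetComponent every frame.
        agent = GetComponent<NavMeshAgent>();
    }

    // Call this while the pointing gesture is detected,
    // e.g. from Update() when IsPointing() returns true.
    public void SetGoalFromPointer()
    {
        Ray ray = new Ray(pointer.position, pointer.forward);
        RaycastHit hit;

        // Use the collision point if the ray hits something,
        // otherwise fall back to the far end of the ray.
        Vector3 target = Physics.Raycast(ray, out hit, maxDistance, groundMask)
            ? hit.point
            : ray.GetPoint(maxDistance);

        // Snap the target onto the baked NavMesh (within an assumed 2 units)
        // so the agent is never handed an unreachable point in mid-air.
        NavMeshHit navHit;
        if (NavMesh.SamplePosition(target, out navHit, 2f, NavMesh.AllAreas))
        {
            agent.SetDestination(navHit.position);
        }
    }
}
```

Snapping to the NavMesh is a design choice rather than a requirement: when the ray ends above the floor, it keeps BB-8 heading for the nearest walkable spot instead of ignoring the command.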

That roughly takes care of my simple logic goals. Like, it’s probably wise not to test the notoriously litigious Lucasfilm organization further, right?

Oh, what the hell, I can’t resist. This is a great opportunity to experiment with another new Unity feature: Windows 10 Voice Recognition functionality!

Unity now offers a native integration of Microsoft’s speech recognition tech, which can run locally with no internet connection. That’s in contrast to Apple’s Siri, Amazon’s Echo or Unity’s other native voice recognition APIs, which offshore your vocal input for processing in high-powered, highly-optimized language processing machines in the cloud.

It’s an impressive advancement. In my early experiments, I’ve had to allow for a fairly low confidence threshold and I’m unsure about the total load this locally-driven processing puts on your machine (no time to run tests), but I really enjoyed being able to plug keywords in and immediately start coding game logic for them. I set up custom responses to “stop” and “go” so that BB-8 will only move to where I’m pointing if I give him the vocal command and added “return” logic to make him come back to the player’s position.
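For a rough idea of what that keyword setup can look like, here is a minimal sketch built on Unity's `KeywordRecognizer` from `UnityEngine.Windows.Speech`. It is not the project's actual script: the `VoiceCommands` class and the `agent`, `player` and `canMove` fields are hypothetical names, and the recognizer only works on Windows 10 platforms with speech recognition enabled.

```csharp
using UnityEngine;
using UnityEngine.AI;
using UnityEngine.Windows.Speech;

// Hypothetical component, not the original script: listens for
// "stop", "go" and "return" and steers BB-8's NavMeshAgent.
public class VoiceCommands : MonoBehaviour
{
    public NavMeshAgent agent;  // BB-8's agent, assigned in the inspector
    public Transform player;    // where "return" should send him
    public bool canMove;        // pointer logic can check this before setting a goal

    private KeywordRecognizer recognizer;
    private readonly string[] keywords = { "stop", "go", "return" };

    void Start()
    {
        // ConfidenceLevel.Low mirrors the fairly low threshold mentioned above.
        recognizer = new KeywordRecognizer(keywords, ConfidenceLevel.Low);
        recognizer.OnPhraseRecognized += OnPhraseRecognized;
        recognizer.Start();
    }

    private void OnPhraseRecognized(PhraseRecognizedEventArgs args)
    {
        switch (args.text)
        {
            case "go":
                canMove = true;
                agent.isStopped = false;
                break;
            case "stop":
                canMove = false;
                agent.isStopped = true;
                break;
            case "return":
                agent.isStopped = false;
                agent.SetDestination(player.position);
                break;
        }
    }

    void OnDestroy()
    {
        // Stop listening and release the recognizer when the object goes away.
        if (recognizer != null)
        {
            if (recognizer.IsRunning) recognizer.Stop();
            recognizer.Dispose();
        }
    }
}
```

Raising the second constructor argument to `ConfidenceLevel.Medium` or `ConfidenceLevel.High` trades missed commands for fewer false triggers.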

Since BB-8 now has to listen to me, it’s only fair that I let him get a word in edgewise. To the Google Machine we go! Predictably, I found a big cache of questionably-sourced Star Wars sounds, downloaded them, and did not think twice about it because BB-8 deserves a voice. “Hi/hey/sup/thanks” now all prompt vocal responses from BB-8, as do the movement commands.

Instagram-Ready

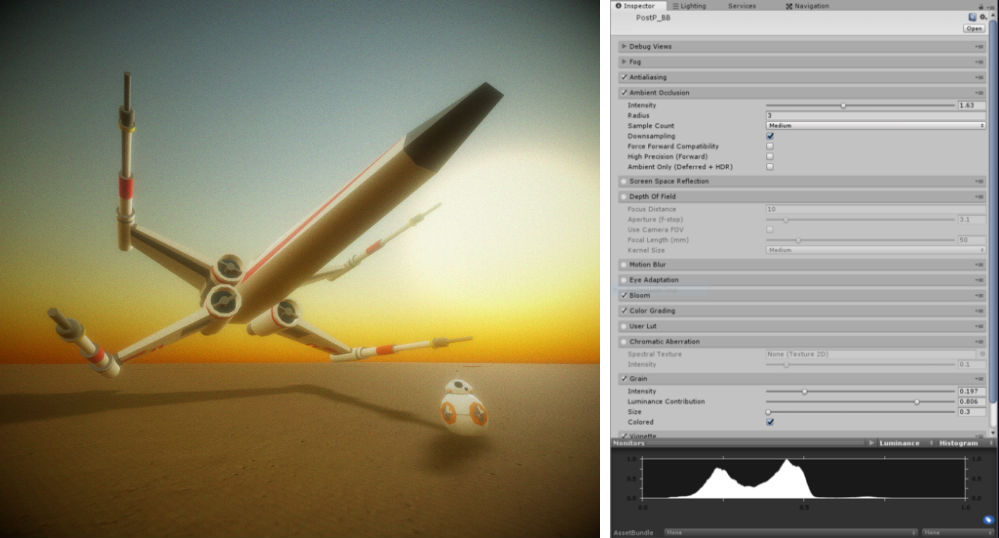

Game logic aside, BB-8 could use some sprucing up. I started by adding some colored spotlights around his various LEDs, positioning them in relative space above the model. But the real magic happened when I worked through Danny Bittman’s great video tutorial on how to render and light Blocks models in Unity. He took BB-8 from cute-but-clunky to camera-ready in about 15 minutes with post-processing and settings tweaks — tricks I will continue to use in other projects! Thanks, Danny!

Back to Reality, Temporarily

At this point, BB-8 is alive (the big reveal in a moment) and we’re best friends. I’ve spent hours staring into his bulbous black visual sensors, up close and personal. We’ve had lengthy conversations, discussing this and that. He’s as real as I could possibly have made him without knowledge of advanced robotics.

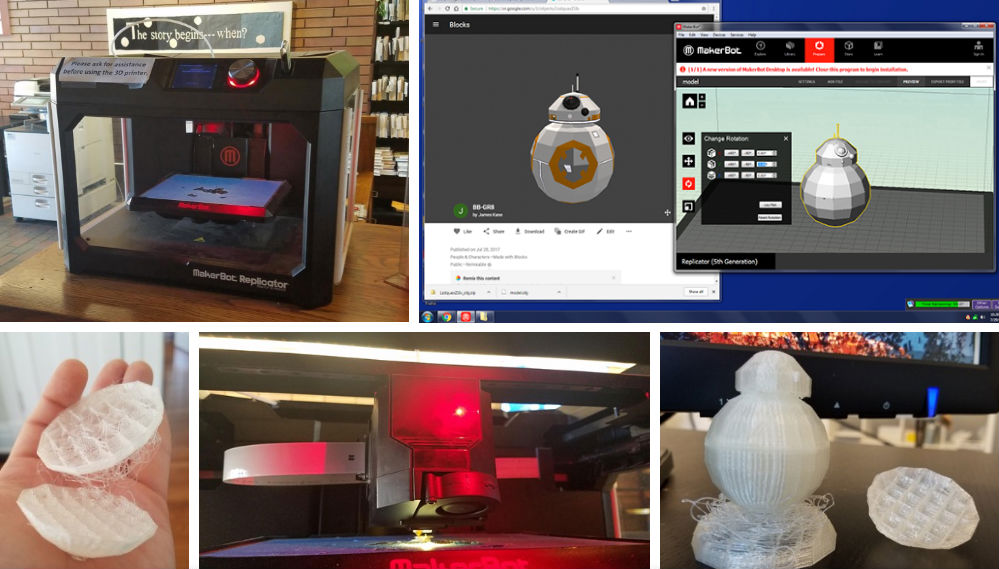

But something more may yet be done! I feel BB-8 needs a physical manifestation of some kind, and it just so happens that right down the street is the University City Public Library. They have a 3D printer available on-demand for the fine sum of one US dollar per hour (yes, $1/hr). It’s a Makerbot Replicator Mk5, and while it’s not exactly top-tier as 3D printers go, it’s available to every man, woman and child in the community for a super low cost. As these creation tools, VR experiences and 3D printing technologies mature alongside one another, publicly-available tech resources like this will be so critical. Behold, BB-3DP!

The Promised Big Reveal

What’s all this been building to? Well, I’ve become an evangelist for these technologies in my personal life and work, and like any good evangelist, I need to spread the word.

I’m now hosting monthly XR office hours in the studio at my workplace to allow co-workers to experience high-end VR, many of them for the first time. Last week at the inaugural event, I demoed these creation tools and my BB-8 mini-game process alongside other Oculus experiences — plus live 4k mixed reality camera tracking, mobile VR, and samples of Apple’s upcoming ARKit functionality. I’m pleased to say it was a big hit and has already started generating a lot of client-facing ideas for the company. Even BB-3DP showed up to watch the curtains pulled back.

Well, let’s have it then:

Conclusions

To be able to work with Google Blocks at such an early stage feels like a privilege. I can see this tool and others like it really coming into their own in the next few years and completely redefining 3D creation workflow as we’ve known it.

Masters of the old way (3D workflow on 2D screens) will be loath to give up their finely tuned hotkey combos, and that’s fine. But for coming generations of XR-native 3D designers, artists and developers, I truly believe this more spatial, more physical creation process will become industry standard as tools and best practices mature with time. For now, there are things I wish Blocks could manage that it can’t, and a few suggestions are appended to this section.

What it can’t do (yet), others are attempting. More creation tools are pouring into the marketplace daily, with Gravity Sketch being the latest entrant (now available in early release on Steam). UK-based developer Tim Johnson is working on his own creation tool in his spare time, and it already does a few nifty tricks Blocks can’t yet handle.

VR modelling so far, in summary:

✅Face/edge/vert movement

✅Face extrusion

✅Undo/redo

✅Face/edge/vert collapse

✅Face/edge/vert delete pic.twitter.com/W0ipNUkAOa

— Tim Johnson (@marmalade_tim) August 3, 2017

Ultimately, while standalone 3D asset creation apps are great, to truly unlock the power of your work, to make it do something, you still have to export it, right?

That’s why, to me, Blocks- and Tilt Brush-like functionality would be most powerful as native Unity or Unreal 4 feature-sets — an API or plugin for use directly within these editors, where I can really make magic happen as a developer.

I actually think Unity just needs to add a Blocks-like plugin/API for live in-scene model/object editing and composition, right? Why not?

— jameskanestl (@jameskanestl) August 3, 2017

Perhaps there’s room for Google and Unity to partner on this — or perhaps I’m hopelessly naive for even suggesting such a thing? No idea. Regardless of feasibility, bringing all the functionality of Blocks/Tilt Brush directly into Unity itself would be an amazing achievement and one I’m starting to ponder the how-to’s of.

My questions for the Google teams would be around goals for Blocks and Tilt Brush. Are these fun tools/toys or is this just the start of a grander vision, some kind of unified VR editing suite that can totally redefine 3D creation best practices as we know them? Their blog post detailing new VR animation tools built for Blocks during a recent hackathon shows the team is thinking creatively — but what is the long-term strategy?

Here are a few suggestions from an outsider’s POV. Maybe these are unrealistic goals for reasons I don’t understand, and maybe I am using poor terminology, but fwiw:

- Shape/object grouping hierarchy UI: create a panel to manage shape/object grouping, a la the layers menu in Photoshop

- Symmetrical extrusion: select multiple faces & extrude simultaneously

- Subdivision deletion: ability to delete subdivisions (besides undo). How about… while the pliers tool is selecting a specific subdivision, hitting undo deletes it? Or an option of the eraser that switches to subdivision deletion instead of shape deletion?

- More snap-to options: ability to toggle through a few different snap-to guesses. Sometimes it’s intuitive, sometimes uncooperative

- Vert & face welding: demonstrated in Tim Johnson’s work, among other things

- General 2D Windows app frame insertion into VR workflow (beyond “Add reference image”)

- Voice commands: if 3D modeling is becoming more physical, why not involve speech? Stuff like “rotate object 180 degrees” while selecting something… maybe

Postscript: Thanks to the Community

I have to give a shout out to the Twitter and web/blog communities surrounding VR/AR. Working on XR solo at a small company in the Midwest, I haven’t had a lot of local resources to lean on while trying to shift from web/app development (though that is starting to change). But thanks to Twitter and the incredibly supportive community there, I still feel super connected to what’s going on in XR on a daily basis. Everyone is so excited and energized by this technology and so eager to share their knowledge. You can really feel the common sense of purpose: spread the word about XR.

Special thanks to the project manager working on Blocks, Brit Menutti (@britmenutti). Just the fact that someone on this team, let alone the PM, was willing to respond to FAQ tweets from a pleb like me was inspiring. The videos from @_naam on Twitter are some of the best work I’ve seen using Blocks/Cinema 4D/Unity. Mind-blowing. 3Donimus’ Blocks models are… impossibly complex to me. A true artist. Jarlan Perez is also in that category. Thanks again to Danny Bittman and Tim Johnson. Stephanie Hurlburt works on shader stuff I don’t yet understand but is super positive and encouraging to people looking to break into the tech industry.

I went to Unity’s Vision VR/AR Summit in Hollywood, CA, this past May and saw a lot of speakers who similarly inspired me to keep at this (even before I had a real HMD in my hands). Some of my favorites were Kat Harris and Estella Tse, and Unity’s evangelist from Australia, John Sietsma, ended up providing me with a great jump-start on my 360-degree video work.

There are many others, but I won’t belabor the point. Thanks to you all.

James C. Kane is a developer (web, apps, XR), writer and musician out of St. Louis who’s working to win hearts and minds for VR and AR. @jameskanestl on Twitter. This post originally appeared on Medium.