There’s something slightly broken about the Gear VR and Oculus DK2, and Oculus Chief Scientist Michael Abrash hinted at it in his F8 keynote last month. It’s related to the Pulfrich illusion he demonstrated here, which you can only really appreciate if you’ve got a dark lens you can stick over your left eye (sunglasses work well).

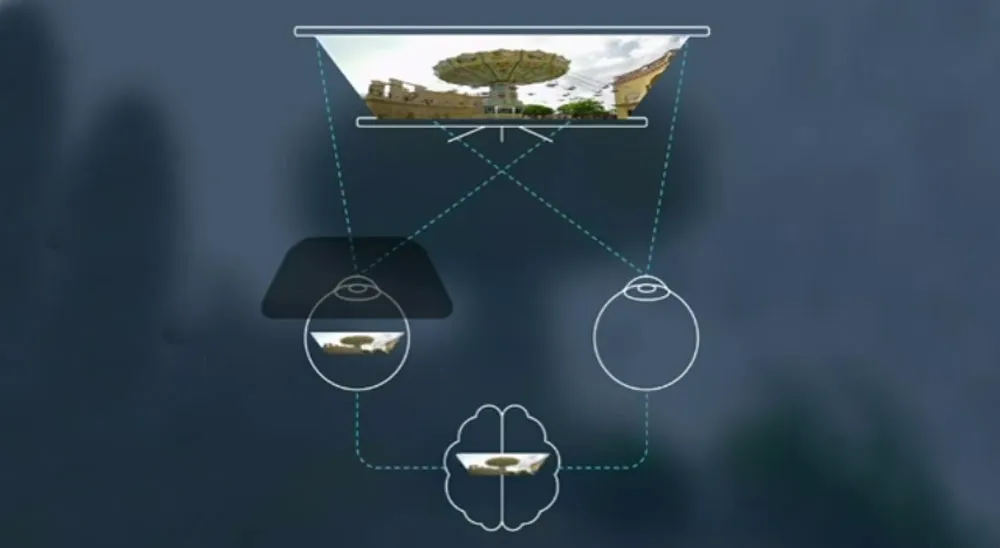

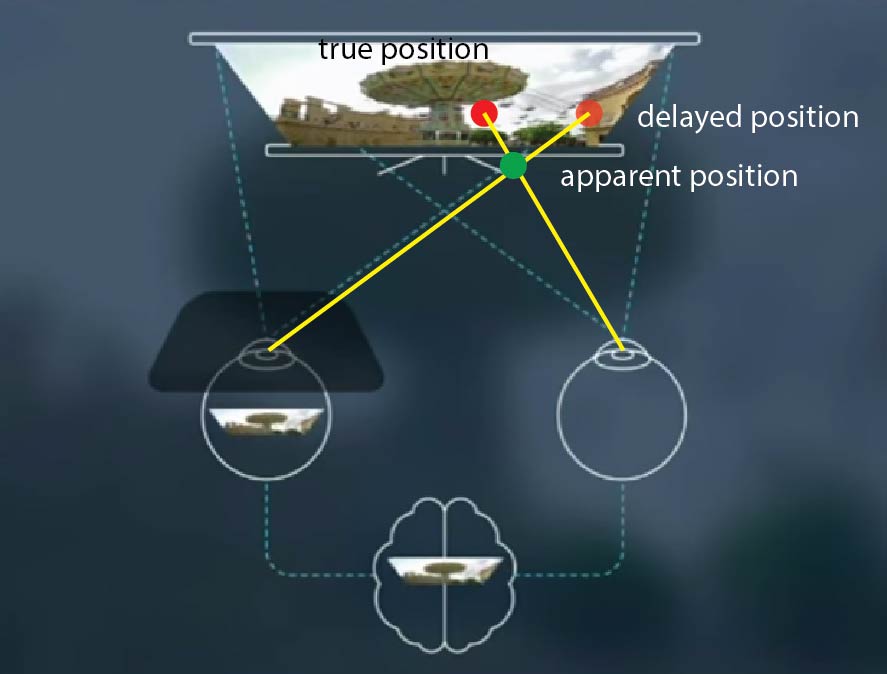

Briefly, the Pulfrich illusion creates the appearance of stereo depth in moving objects because of a time delay between the two eyes’ signals. The dark lens makes your left eye take longer to integrate the image: it blocks some photons, so the integration time increases and the signal to the brain is delayed relative to the right eye.

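So when the brain combines the images from each eye, your left eye is actually reporting where the object was slightly earlier. For a moving object, that means the two eyes see it at slightly different horizontal positions, which the brain reads as binocular disparity, so the object’s apparent position is displaced in depth from its actual, 2D position.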
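To put the geometry in concrete terms, here’s a minimal Python sketch (an illustration, not anything from Oculus or the original demo). It assumes a flat screen, real or virtual, at distance D, an interpupillary distance I of 63 mm (a typical adult value, picked for illustration), and an object moving sideways at constant speed. The delayed eye sees the object where it was Δt ago, so the two views are offset by speed × Δt, and similar triangles turn that offset s into an apparent distance Z = I·D / (I − s). The function names are hypothetical.

```python
import math


def pulfrich_disparity_m(lateral_speed_m_s: float, delay_s: float,
                         delayed_eye: str = "left") -> float:
    """Horizontal offset between the two eyes' views of a laterally moving object.

    The delayed eye reports where the object was delay_s seconds ago, so the two
    views differ by speed * delay. Sign convention: positive means the right-eye
    image sits to the right of the left-eye image (uncrossed disparity, read as
    "farther"); negative is crossed ("nearer"). Positive speed = left to right.
    """
    if delayed_eye == "left":
        return lateral_speed_m_s * delay_s
    if delayed_eye == "right":
        return -lateral_speed_m_s * delay_s
    raise ValueError("delayed_eye must be 'left' or 'right'")


def perceived_distance_m(screen_distance_m: float, disparity_m: float,
                         ipd_m: float = 0.063) -> float:
    """Distance where the two eyes' lines of sight cross (similar triangles).

    Z = I * D / (I - s): zero disparity leaves the object on the screen plane,
    uncrossed (s > 0) pushes it behind, crossed (s < 0) pulls it in front.
    """
    if disparity_m >= ipd_m:
        return math.inf  # lines of sight are parallel or diverge
    return ipd_m * screen_distance_m / (ipd_m - disparity_m)


# Hypothetical example: object 2 m away moving at 1 m/s, 10 ms left-eye delay.
print(perceived_distance_m(2.0, pulfrich_disparity_m(+1.0, 0.010)))  # left to right: farther than 2 m
print(perceived_distance_m(2.0, pulfrich_disparity_m(-1.0, 0.010)))  # right to left: nearer than 2 m
```

Passing `delayed_eye="right"` flips the sign of the disparity, so the near and far directions swap.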

With the dark lens over your left eye, the effect makes an object moving right to left appear closer to you, and an object moving left to right appear farther away. The directions are reversed if your right eye’s image is the delayed one … and that’s important, as I’ll explain in a moment. So, if the images from the two eyes aren’t delivered to the brain at the same time, you get this false sense of depth with lateral motion. It turns out the Gear VR and DK2 do the same thing, but the delay is in hardware, not squishy brainware. Check out this video, shot at 240 frames per second:

I made this video with a simple color strobe; each frame is a different color in sequence (R, G, B, W, K). What you’re seeing is the rolling update of the OLED panel in the Gear VR: the panel isn’t redrawn all at once but swept across line by line. (I actually filmed it out of the headset, but we have somewhat less-legible video proof that the effect is still there in the headset.) Why does this matter? Because the phone sits sideways in the headset, that sweep runs horizontally across the screen, so one eye’s half of the display is refreshed a fraction of a frame after the other’s. That’s functionally identical to the Pulfrich effect we dissected earlier: your stereo perception gets hacked by the rolling update. The effect is highly motion-dependent, so still objects won’t show the false depth, and faster objects show it more than slow ones. (Nerd note: the slight tilt to the border between colors is actually caused by the rolling shutter of my camera, a GoPro HERO4 Black, and rolling shutter is sort of the obverse of this effect.)
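Here’s an idealized model of how a rolling update turns into an interocular delay, as a Python sketch. The assumptions are mine, not measurements: the sweep crosses the full width of the landscape-mounted screen at a constant rate once per refresh, there’s no blanking interval, and the left eye gets the left half of the panel. Under that model, matching pixels in the two halves are always half a refresh period apart, about 8.3 ms at 60 Hz; real panels will differ in scan direction and timing details. The function names and the example resolutions are illustrative.

```python
def column_update_time_s(x_px: int, width_px: int, refresh_hz: float,
                         scan_left_to_right: bool = True) -> float:
    """Seconds after the start of a refresh at which pixel column x_px is drawn.

    Idealized model: the sweep crosses the whole width of the landscape screen
    at a constant rate once per refresh period, with no blanking interval.
    """
    if not 0 <= x_px < width_px:
        raise ValueError("column out of range")
    steps = x_px if scan_left_to_right else (width_px - 1 - x_px)
    return steps / width_px / refresh_hz


def interocular_delay_s(width_px: int, refresh_hz: float,
                        scan_left_to_right: bool = True, x_px: int = 0) -> float:
    """Time between drawing column x_px of the left-eye half and the matching
    column of the right-eye half. Positive means the right eye is drawn later."""
    half = width_px // 2
    left = column_update_time_s(x_px, width_px, refresh_hz, scan_left_to_right)
    right = column_update_time_s(x_px + half, width_px, refresh_hz, scan_left_to_right)
    return right - left


# Illustrative panel: 2560 px wide in landscape, refreshing at 60 Hz.
print(interocular_delay_s(2560, 60.0))
print(interocular_delay_s(2560, 60.0, scan_left_to_right=False))
```

The sign only tells you which eye lags; the magnitude, and therefore the strength of the false depth, comes from the refresh rate.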

This means that for video content, your depth cues may be incorrect for fast lateral motion. (More on game engines in a second.) Here’s a horribly contrived example: the standard Pulfrich pendulum, a white circle swinging back and forth on a black background. Download this file and watch it on your Gear VR or DK2 (or Cardboard, depending on the phone model), and instead of a flat swing you should see the ball loop around in depth, passing in front of the screen on one stroke and behind it on the other.
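The loop can be sketched numerically. This Python snippet is a toy model, not how the demo file was made: it swings a ball sinusoidally on a virtual screen 2 m away, lets the left eye’s view lag by 8 ms (roughly half a 60 Hz frame), and intersects the two lines of sight. All parameters, including the 63 mm interpupillary distance, are illustrative assumptions. Because the false disparity tracks the ball’s velocity, it peaks mid-swing and vanishes at the turnarounds, so the perceived path is an approximately elliptical loop.

```python
import math


def pulfrich_pendulum_path(amplitude_m=0.3, freq_hz=0.5, delay_s=0.008,
                           screen_distance_m=2.0, ipd_m=0.063, samples=200):
    """Perceived (t, x, z) of a swinging ball over one period when the left
    eye's view lags the right eye's by delay_s.

    Both eyes see the same flat video; only the timing differs. Units are
    metres on a virtual screen at screen_distance_m; x is lateral position,
    z is distance from the viewer.
    """
    omega = 2 * math.pi * freq_hz
    period_s = 1.0 / freq_hz
    path = []
    for i in range(samples):
        t = i * period_s / samples
        x_right = amplitude_m * math.sin(omega * t)             # up-to-date eye
        x_left = amplitude_m * math.sin(omega * (t - delay_s))  # delayed eye
        s = x_right - x_left                                     # signed disparity
        z = ipd_m * screen_distance_m / (ipd_m - s)              # similar triangles
        # Lateral position where the two lines of sight intersect.
        x = (x_left + ipd_m / 2) * z / screen_distance_m - ipd_m / 2
        path.append((t, x, z))
    return path


for t, x, z in pulfrich_pendulum_path()[::25]:
    print(f"t={t:.2f} s  x={x:+.3f} m  z={z:.3f} m")
```

With the left eye lagging, the left-to-right stroke lands behind the screen and the return stroke in front of it; delay the right eye instead and the loop runs the other way.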

What about games? Well, whether the effect is noticeable depends a bit on whether the game engine ‘races the beam’ or not. Here’s a super-detailed post about the idea from Michael Abrash during his days at Valve (warning: it’s a long, dense read … but very good). In a nutshell, a game engine can anticipate the latency of a display and partially compensate for it, at the cost of some complexity and some other possible artifacts. Abrash mostly discusses head motion, though, and I doubt beam racing is being used to compensate for subject motion on rolling displays right now, especially because doing so would require some data-flow acrobatics within the game engine. And of course, for video content you’re stuck with whatever subject motion is baked into your footage.
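As a hypothetical illustration of what racing the beam for subject motion might involve, here’s a Python sketch that extrapolates a moving object to the moment each eye’s half of the panel actually lights up. This is not the Oculus SDK or Abrash’s implementation; `MovingObject`, `per_eye_positions`, and the scanout timestamps are invented for the example, and the engine would need to know those timestamps, which is part of the data-flow acrobatics mentioned above.

```python
from dataclasses import dataclass


@dataclass
class MovingObject:
    """Hypothetical game-object state sampled at render time."""
    x_m: float     # lateral position at render time
    vx_m_s: float  # lateral velocity (positive = left to right)


def per_eye_positions(obj: MovingObject, render_time_s: float,
                      left_scanout_s: float, right_scanout_s: float):
    """Extrapolate the object linearly to the moment each eye's pixels are lit.

    Without this, both eyes are drawn with obj.x_m even though one eye's half
    of the panel lights up later, which is the Pulfrich-style mismatch.
    """
    left_x = obj.x_m + obj.vx_m_s * (left_scanout_s - render_time_s)
    right_x = obj.x_m + obj.vx_m_s * (right_scanout_s - render_time_s)
    return left_x, right_x


# Illustrative timing: left half lit 10 ms after render, right half 1/120 s later.
ball = MovingObject(x_m=0.0, vx_m_s=1.0)
print(per_eye_positions(ball, 0.0, 0.010, 0.010 + 1 / 120))
```

Linear extrapolation is the simplest possible predictor; it overshoots when objects accelerate or change direction, which is the kind of trade-off that comes with any prediction scheme.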

So yeah, wow, Oculus is pretty broken! Stereo is so important, how could they let this happen? Well, before the haters hate, let me say this: our brains are incredibly complex, and can cut through a lot of impossible or conflicting signals to create an acceptably accurate model of the world. When signals about depth conflict, the brain often lets the most likely signals override the other ones, so the Zuckerfrich effect will only be visible in some circumstances — I’ve found it gets completely overridden by accurate occlusion and parallax, provided the motion isn’t too fast.
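One textbook way vision researchers model this kind of cue conflict is reliability-weighted averaging: each cue’s depth estimate is weighted by the inverse of its variance, so a precise cue dominates. It’s a simplification (an ordinal cue like occlusion doesn’t really produce a distance estimate), and the numbers below are made up for illustration, but it shows why a noisy false-disparity cue can nearly vanish next to solid parallax, and why it pulls harder as faster motion pushes its estimate further off. Python sketch:

```python
def combine_depth_cues(cues):
    """Reliability-weighted average of depth estimates.

    cues: iterable of (depth_estimate, variance) pairs. Each cue is weighted by
    1 / variance, so a precise cue dominates and a noisy one barely moves the
    result.
    """
    cues = list(cues)
    if not cues:
        raise ValueError("need at least one cue")
    if any(var <= 0 for _, var in cues):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in cues]
    return sum(w * d for w, (d, _) in zip(weights, cues)) / sum(weights)


# Made-up numbers: parallax says 2.0 m (precise); the false disparity says 2.3 m (noisy).
print(combine_depth_cues([(2.0, 0.01), (2.3, 1.0)]))
```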

In fact, I first suspected this effect might exist when an astute ad exec asked if some 2D demo footage I had was 3D … I thought he was just picking up on the parallax cues from the motion, but he was probably experiencing the positive form of the Zuckerfrich effect, when the ‘false’ depth cues match the parallax in the subject matter, effectively creating stereo 3D from 2D footage. (This has been tried for 2D video; search ‘Pulfrich effect’ on YouTube and you get tons of videos shot solely to exploit subject matter motion to create a 3D effect … which is of course limited by the fact that it only works in one direction.)

Of course, later in my footage, cars speeding by at 60 miles per hour look either weirdly close or strangely punched out of the background, almost Escher-esque, depending on their direction. And this breaks the stereo effect completely if it conflicts with foreground objects — so there are limits, and it’s worth being aware of them. Now, this can’t be a huge effect, otherwise tons of people would’ve noticed it by now. (Though frankly, there isn’t a lot of good high-motion VR content out there, so perhaps we just aren’t watching the right stuff yet.) But I think it’s important to know the exact limits of the medium when you’re creating content, so this isn’t pure theory. And of course, you’ve read this far, so you must agree. QED.
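For a rough sense of scale on those cars, here’s a back-of-the-envelope Python sketch. The assumptions are mine, not measurements: stereo footage that places a car crossing the line of sight 10 m from the camera at its true distance, viewed at roughly natural angular scale, and a delay of half a 60 Hz frame (1/120 s). At closest approach the car’s angular speed is speed ÷ distance, and multiplying by the delay gives about 0.022 rad, or roughly 1.3 degrees of false disparity. The helper names are hypothetical.

```python
import math

MPH_TO_M_S = 0.44704  # 1 mile = 1609.344 m exactly


def false_disparity_rad(speed_m_s: float, distance_m: float, delay_s: float) -> float:
    """Angular disparity from an interocular delay, for an object crossing the
    line of sight at its closest approach (angular speed = speed / distance)."""
    return (speed_m_s / distance_m) * delay_s


def distance_from_disparity_m(distance_m: float, disparity_rad: float,
                              ipd_m: float = 0.063) -> float:
    """Distance implied by the object's true vergence angle minus the disparity.

    Positive disparity = uncrossed (pushes the object away); negative = crossed
    (pulls it closer). Returns inf when the implied vergence is zero or
    negative, i.e. the lines of sight no longer cross in front of the viewer.
    """
    vergence = 2 * math.atan(ipd_m / (2 * distance_m))
    implied = vergence - disparity_rad
    if implied <= 0:
        return math.inf
    return ipd_m / (2 * math.tan(implied / 2))


d = false_disparity_rad(60 * MPH_TO_M_S, 10.0, 1 / 120)
print(math.degrees(d))                      # false disparity in degrees
print(distance_from_disparity_m(10.0, -d))  # crossed direction: pulled closer
print(distance_from_disparity_m(10.0, +d))  # uncrossed direction: no crossing point
```

Under these assumptions the crossed direction pulls the car from 10 m to roughly 2.2 m, while the uncrossed direction asks for a vergence past parallel. Disparities this large may not fuse comfortably at all, so read this as how hard the geometry is being pushed rather than as a prediction of exactly what you’ll see.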

So for you devs, animators, and live-action content producers out there: know that some VR headsets may display slightly incorrect depth information for moving objects. Test your content in the headset, and consider avoiding fast lateral motion in objects whose other depth cues conflict with the false depth, or you may get an inconsistent depth effect.
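If you want a cheap first pass before testing in the headset, a Python sketch like this flags objects whose delay-induced disparity exceeds a budget you choose. Everything here is a hypothetical placeholder: the per-object angular speeds would come from your own tracking or annotation, the 1/120 s delay assumes half a 60 Hz frame, and the 0.25° budget is arbitrary, not a perceptual threshold. It also ignores whether other depth cues conflict, which is the part that actually matters, so treat the output as a list of shots to check by eye.

```python
from typing import Iterable, List, Tuple


def flag_fast_movers(objects: Iterable[Tuple[str, float]], delay_s: float,
                     budget_deg: float) -> List[Tuple[str, float]]:
    """Return (name, false_disparity_deg) for every object whose
    delay-induced disparity exceeds budget_deg.

    objects: (name, lateral angular speed in deg/s as seen by the viewer).
    Direction doesn't matter for the check, so the speed's sign is ignored.
    """
    flagged = []
    for name, speed_deg_s in objects:
        disparity_deg = abs(speed_deg_s) * delay_s
        if disparity_deg > budget_deg:
            flagged.append((name, disparity_deg))
    return flagged


# Hypothetical shot with hand-annotated angular speeds.
shot = [("pedestrian", 10.0), ("car", 150.0), ("title card", 0.0)]
print(flag_fast_movers(shot, delay_s=1 / 120, budget_deg=0.25))
```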

For you viewers and gamers, sit back and appreciate how magical and awesome your brain is, that it can paper over the idiosyncrasies of our still-maturing display technology and not completely barf. Provided the devs take care of your brain properly.

Disclaimers: as this goes to press, we haven’t tested any other headsets, so this should not be interpreted as a knock against either of these HMDs; it’s more a quirk of using certain rolling-update display panels in VR. Also, Mark Zuckerberg probably had nothing to do with the technology decisions causing this effect, but ‘Abrashfrich’ sounds frankly horrible, so Zuckerfrich it is:

Yes, he’s quietly laughing at you. Here’s the download if you want to try it in your headset. It’s strangely mesmerizing. And to complete the rogues’ gallery, I present to you a smorgasbord of Frichs:

Download Luckeyfrich here.

Download Carmackfrich here.

Download Abrashfrich here.

Download Iribefrich here. (Note his vertical hair causes some compression problems at this low bitrate. Iribe is not optimized for web delivery.)