Researchers from Meta and ETH Zurich have developed a software system called TouchInsight, which they say solves the problem of turning any surface into a virtual keyboard.

Text input in VR and AR today is cumbersome, and significantly slower than on PCs and smartphones. Floating virtual keyboards require awkwardly holding your hands up and slowly pressing one key at a time, providing no haptic feedback and preventing you from resting your wrists. Quest headsets support pairing a Bluetooth keyboard for full-speed typing, but this means you have to carry around a separate device larger than the headset itself.

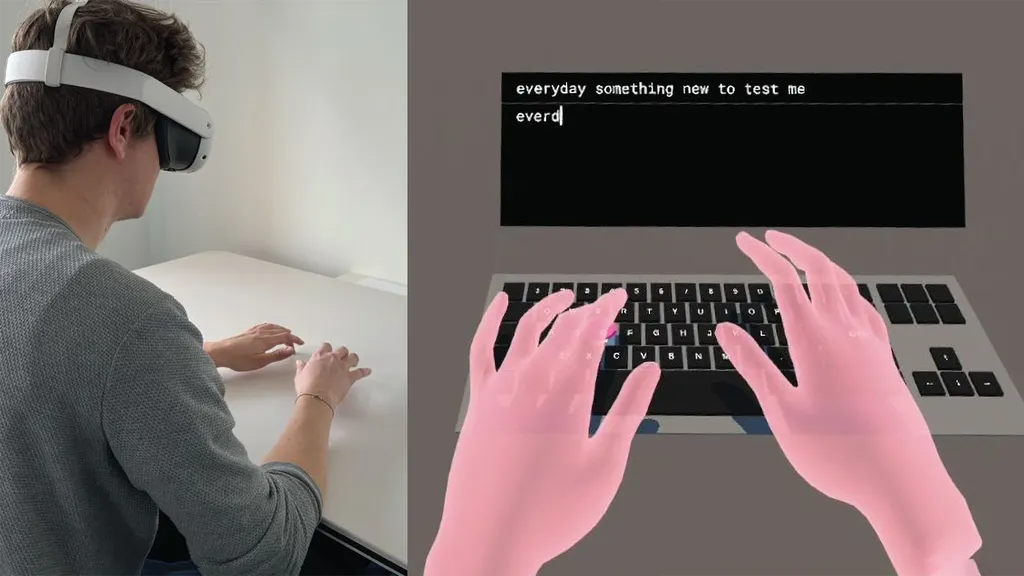

If headsets could turn any flat surface into a virtual keyboard, it would bring partial haptic feedback and allow you to rest your wrists, without the need to carry around a physical keyboard. Developers can technically already build surface-locked virtual keyboards on Quest today, by using hand tracking and getting the user to tap the surface to calibrate its position. But in practice, even the slightest deviation of the virtual surface height from the real surface results in an unacceptable error rate.
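To illustrate why calibration error matters so much, here is a minimal sketch of the naive approach described above: fit a plane through three calibration taps, then count a touch whenever the tracked fingertip is within a small threshold of that plane. Everything here is hypothetical (the y-up coordinate system in meters, the 3 mm threshold, the helper names); it is not Meta's or TouchInsight's code. It shows that a calibration error of just 5 mm either misses a real touch or registers a hovering finger as a press.

```python
from dataclasses import dataclass

# Hypothetical sketch of a naive surface-locked keyboard touch test.
# Assumes a y-up coordinate system in meters; not Meta's or TouchInsight's code.
Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class Plane:
    point: Vec3   # any point on the calibrated surface
    normal: Vec3  # unit normal, oriented to point up (+y)


def plane_from_taps(p0: Vec3, p1: Vec3, p2: Vec3) -> Plane:
    """Fit a plane through three fingertip positions recorded during calibration taps."""
    n = cross(sub(p1, p0), sub(p2, p0))
    length = dot(n, n) ** 0.5
    n = (n[0] / length, n[1] / length, n[2] / length)
    if n[1] < 0:  # flip so the normal points up, away from the table
        n = (-n[0], -n[1], -n[2])
    return Plane(p0, n)


def is_touch(plane: Plane, fingertip: Vec3, threshold_m: float = 0.003) -> bool:
    """Count a touch when the fingertip is at most threshold_m above the plane (or below it)."""
    height = dot(sub(fingertip, plane.point), plane.normal)
    return height <= threshold_m


# The real table top is at y = 0.750 m.
low_plane = plane_from_taps((0.0, 0.745, 0.0), (0.3, 0.745, 0.0), (0.0, 0.745, 0.2))   # 5 mm too low
high_plane = plane_from_taps((0.0, 0.755, 0.0), (0.3, 0.755, 0.0), (0.0, 0.755, 0.2))  # 5 mm too high
good_plane = plane_from_taps((0.0, 0.750, 0.0), (0.3, 0.750, 0.0), (0.0, 0.750, 0.2))  # accurate

# A finger resting on the real table is missed: it appears 5 mm above the low plane.
print(is_touch(low_plane, (0.1, 0.750, 0.1)))
# A finger hovering 2 mm above the real table is falsely registered by the high plane.
print(is_touch(high_plane, (0.1, 0.752, 0.1)))
```

Because the fingertip's height above the surface is only a few millimeters at most, any fixed threshold leaves almost no margin for calibration drift or hand-tracking noise.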

Meta showed off research toward this goal last year, with its CTO Andrew Bosworth saying he achieved almost 120 words per minute (WPM). But last year's solution seemed to require a series of fiducial markers on the table, which may have been acting as a robust dynamic calibration system. Plus, Meta didn't reveal the error rate of that system.

In the TouchInsight paper, the six researchers report that their new solution reaches a maximum of just over 70 WPM, with an average of 37 WPM. The average person types at around 40 WPM on a traditional keyboard, whereas professional typists reach between 70 and 120 WPM depending on their skill level.

But crucially, TouchInsight apparently works with only a standard Quest 3 headset, on any table, with no markers or external hardware of any kind. And while Meta didn't disclose the error rate for last year's system, the researchers say the average uncorrected error rate of TouchInsight is just 2.9%.

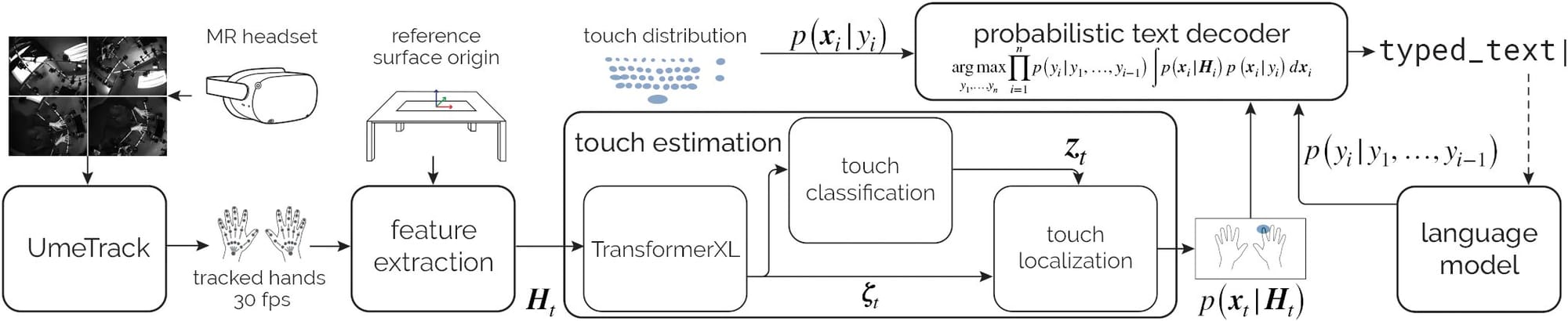

"In this paper, we present a real-time pipeline that detects touch input from all ten fingers on any physical surface, purely based on egocentric hand tracking.

"Our method TouchInsight comprises a neural network to predict the moment of a touch event, the finger making contact, and the touch location. TouchInsight represents locations through a bivariate Gaussian distribution to account for uncertainties due to sensing inaccuracies, which we resolve through contextual priors to accurately infer intended user input."
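The general idea of resolving an uncertain touch location with a contextual prior can be sketched as Bayesian decoding: each key's score is the bivariate Gaussian density of the touch evaluated at the key's center, multiplied by the prior probability of that key given what was typed so far. This is a generic illustration, not TouchInsight's actual decoder. The key layout, the Gaussian's parameters (assumed here to come from the network), and the prior values standing in for a language model are all hypothetical.

```python
import math

# Hypothetical sketch: decode an uncertain touch into a key using a bivariate
# Gaussian likelihood and a contextual prior. Layout, covariance, and prior
# values are made up for illustration; this is not TouchInsight's decoder.

# Key centers in millimeters (one row, 19 mm pitch).
KEYS = {"w": (19.0, 0.0), "e": (38.0, 0.0), "r": (57.0, 0.0), "t": (76.0, 0.0)}


def gaussian_2d(x, y, mean, sx, sy, rho):
    """Bivariate normal density with std devs sx, sy and correlation rho."""
    dx = (x - mean[0]) / sx
    dy = (y - mean[1]) / sy
    q = (dx * dx - 2 * rho * dx * dy + dy * dy) / (1 - rho * rho)
    return math.exp(-q / 2) / (2 * math.pi * sx * sy * math.sqrt(1 - rho * rho))


def decode(touch, sx, sy, rho, prior):
    """Return (best_key, posterior), with posterior[k] proportional to likelihood * prior."""
    # The Gaussian is centered on the predicted touch location and evaluated
    # at each key center (equivalent to the reverse, since it is symmetric).
    scores = {
        k: gaussian_2d(cx, cy, touch, sx, sy, rho) * prior.get(k, 0.0)
        for k, (cx, cy) in KEYS.items()
    }
    total = sum(scores.values())
    posterior = {k: s / total for k, s in scores.items()}
    return max(posterior, key=posterior.get), posterior


# Predicted touch lands between "e" and "r", slightly closer to "r".
touch = (48.0, 2.0)
uniform = {k: 0.25 for k in KEYS}
# Stand-in for a language model after the user has typed "th": "e" is far more likely.
after_th = {"w": 0.05, "e": 0.80, "r": 0.10, "t": 0.05}

print(decode(touch, 8.0, 6.0, 0.0, uniform)[0])
print(decode(touch, 8.0, 6.0, 0.0, after_th)[0])
```

With a uniform prior the nearest key ("r") wins, but once context makes "e" much more probable, the same ambiguous touch is decoded as "e". The wider the Gaussian, the more weight the prior carries.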

In comparison, according to the paper, the built-in floating virtual keyboard of Horizon OS has an average uncorrected error rate of 8% and an average typing speed of just 20 WPM. That makes TouchInsight 85% faster, with an uncorrected error rate nearly three times lower.

As another point of comparison, a 2019 study suggests the average smartphone typing speed is 36 WPM, with an uncorrected error rate of 2.3%, meaning typing with TouchInsight is as fast as using your phone, and only slightly less accurate.
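The comparisons above come down to simple ratios of the reported averages. This short sketch recomputes them from the figures quoted in this article; the dictionary and function names are ours, for illustration only.

```python
# Average figures as reported in the article; names are illustrative.
TOUCHINSIGHT = {"wpm": 37, "uncorrected_error_pct": 2.9}
HORIZON_OS_KEYBOARD = {"wpm": 20, "uncorrected_error_pct": 8.0}
SMARTPHONE_2019 = {"wpm": 36, "uncorrected_error_pct": 2.3}


def speedup_pct(a, b):
    """How much faster a types than b, in percent."""
    return (a["wpm"] / b["wpm"] - 1) * 100


def error_ratio(a, b):
    """How many times higher b's uncorrected error rate is than a's."""
    return b["uncorrected_error_pct"] / a["uncorrected_error_pct"]


print(f"vs Horizon OS keyboard: {speedup_pct(TOUCHINSIGHT, HORIZON_OS_KEYBOARD):.0f}% faster, "
      f"{error_ratio(TOUCHINSIGHT, HORIZON_OS_KEYBOARD):.1f}x lower error rate")
print(f"vs smartphone (2019): {speedup_pct(TOUCHINSIGHT, SMARTPHONE_2019):.1f}% faster, "
      f"{TOUCHINSIGHT['uncorrected_error_pct'] - SMARTPHONE_2019['uncorrected_error_pct']:.1f} "
      "percentage points more errors")
```

The 8% versus 2.9% error rates work out to a ratio of about 2.8, while the gap to smartphone typing is under 3% in speed and 0.6 percentage points in uncorrected errors.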

TouchInsight could also be used for general detection of finger presses on physical surfaces beyond just typing. For example, the researchers say, it could enable a mixed reality Whac-A-Mole game where you use your fingers to squish tiny moles.

The researchers say they'll demo TouchInsight at the ACM Symposium on User Interface Software and Technology in Pittsburgh next week.

It's unclear whether Meta plans to integrate TouchInsight into Horizon OS any time soon, and if not, what the specific barriers to doing so are.