You have probably heard about Sphericam 2, which has (at this time) 22 days left on its Kickstarter campaign and has already attracted over $150k in orders and counting. It’s been written up all over the place, including right here by our own Will Mason, who noted when Sphericam 2 hit its funding goal yesterday morning. He covers the high points, so read that piece first if you haven’t.

Now, caveat fundor: it’s a Kickstarter campaign, so dates and specs could easily slide or shift. But Sphericam founder Jeffrey Martin has done this dance before — there’s a reason it’s Sphericam 2, after all — so he’s much more likely to make his dates and projections than a newbie.

And what do his projections suggest? First of all, 4k, live-streamed, spherical video, in a tiny package. What’s going on under the hood, and how will this compare to, say, GoPro arrays? Quite favorably, I think, and Will’s correct that this might be the killer rig for ‘prosumer,’ if not professional, productions. Start with price. You can get a Sphericam 2 for less than four GoPro Blacks, and only slightly more than four of the GoPro ‘Session’ camera announced today. You need at least six GoPro-class cameras to cover a sphere, so there’s an immediate price advantage for the Sphericam, never mind the complexity of rigging GoPros and trying to sync them.

Right, about that sync. It’s not currently possible to synchronize multiple GoPro 4s, period. (No idea about the Session, but it’s got even fewer interface options, so I’m doubtful they’re sync-able.) So at best you’re within about a quarter to half a frame of sync on average with a GoPro array using a remote or slate-sync. Worse, if you’re moving, or your subject matter is in motion, the GoPro’s rolling shutter comes into play: because it exposes the sensor sequentially, row by row, you don’t really even have proper sync across a single GoPro frame (images can stretch or behave strangely if you move too fast relative to your frame rate).
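To put rough numbers on both problems, here is a back-of-the-envelope sketch. The frame rate, pan speed, sensor readout time, field of view, and resolution are all illustrative assumptions of mine, not measured GoPro figures, and the function names are made up for this example.

```python
def sync_offset_ms(fps: float, frame_fraction: float) -> float:
    """Timing error between two unsynced cameras, given as a fraction of one frame."""
    return 1000.0 / fps * frame_fraction


def rolling_shutter_skew_px(pan_deg_per_s: float, readout_s: float,
                            fov_deg: float, width_px: int) -> float:
    """Horizontal shift between the first and last sensor row during a pan.

    Assumes pixels are spread evenly across the field of view, which is only
    an approximation for a real wide-angle lens.
    """
    degrees_during_readout = pan_deg_per_s * readout_s
    return degrees_during_readout * (width_px / fov_deg)


# All numbers below are illustrative assumptions, not GoPro specs.
print(sync_offset_ms(fps=30, frame_fraction=0.5))   # half a frame at 30 fps
print(rolling_shutter_skew_px(pan_deg_per_s=90, readout_s=1 / 60,
                              fov_deg=120, width_px=1920))
```

With those assumed numbers, half a frame of slop works out to roughly 17 ms between cameras, and a moderate pan shifts the bottom of a single frame roughly 24 pixels relative to the top. Either one is enough to make a seam visible.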

This phenomenon is often known as ‘jello,’ because if you pan a rolling shutter camera fast enough, the entire image appears to wobble like a Bill Cosby alibi. Jello-cam isn’t great for regular video, but it’s absolute poison in VR: your seams won’t match up, and you’ll see a strange tearing effect at the boundary between cameras when the rolling shutter artifacts become too large. And you don’t have to be going terribly fast to see the seams, though it’s more obvious the faster you’re going (check out this skiing video on VRideo to see what I mean). This is especially bad because our vision system is optimized to see peripheral motion, and the seam-tearing creates a powerful illusion of motion, which is extremely distracting even when it’s not front-and-center.

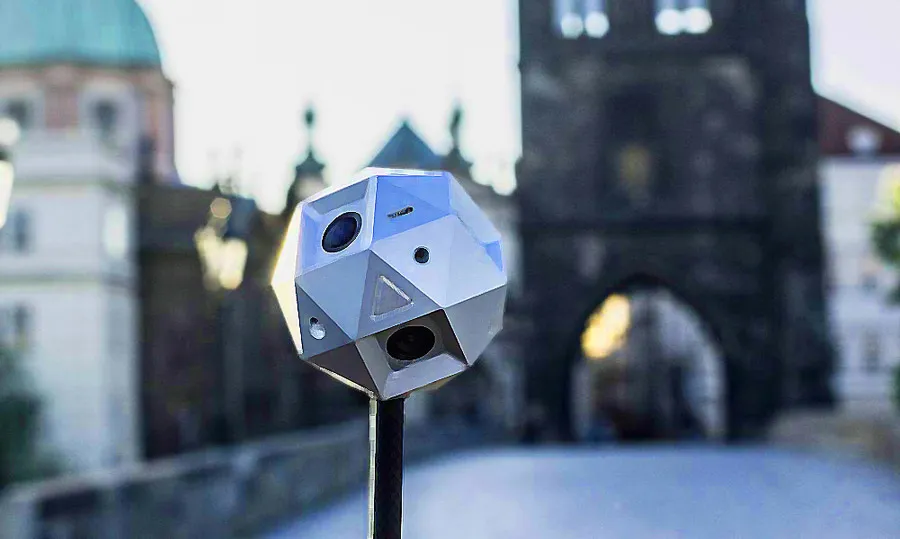

In contrast, Sphericam 2 has a global shutter, and it synchronizes all the sensors, so you shouldn’t have any motion-based image tearing. You still have some parallax stitching issues, but the entire package is only about 2.5″ across, so the stitching should be pretty reasonable. (The lenses are about as far apart as your eyes, so to see the parallax you have to deal with, just wink alternate eyes — the amount of parallax you see between near-field and far-field objects is what has to be ‘fudged’ in software.)

Now, monoscopic spherical video isn’t as impossible as stereo spherical video; using optical tricks like mirrors you can theoretically produce seam-free images for most of a monoscopic sphere, like this Fraunhofer camera array. But for a useable camera system, small is beautiful. And Sphericam 2 is definitely small.

Oh, and about stitching: the camera can do stitching on the fly. There’s no way it’s doing image analysis at that speed, so the live stitching is presumably using a precomputed calibration map to create the stitch. (This is how Immersive Media’s IM Broadcast system works, by the way, at a slightly different price point.) This inevitably means that objects close to the camera will show some seams, but objects at infinity should look correct. Because the lenses are so close together, the parallax effect should be fairly small.
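For the curious, this is the general idea of map-based stitching in miniature. It is a toy sketch and not Sphericam’s or Immersive Media’s actual method: the "calibration" here just cuts the panorama into equal vertical strips, one per camera, where a real map would come from measuring the lenses. All names are hypothetical.

```python
def build_calibration_map(out_w, out_h, n_cams, cam_w, cam_h):
    """Build the lookup table once, ahead of time.

    Each entry says which camera, and which pixel in that camera's frame,
    feeds a given output pixel. Toy geometry: equal vertical strips.
    """
    strip_w = out_w / n_cams
    table = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            cam = min(int(x / strip_w), n_cams - 1)
            u = (x - cam * strip_w) / strip_w   # 0..1 across the strip
            v = y / max(out_h - 1, 1)           # 0..1 down the frame
            src_x = int(u * (cam_w - 1) + 0.5)
            src_y = int(v * (cam_h - 1) + 0.5)
            row.append((cam, src_y, src_x))
        table.append(row)
    return table


def stitch(frames, table):
    """Per-frame work is a pure lookup: no feature matching, no image analysis."""
    return [[frames[cam][sy][sx] for (cam, sy, sx) in row] for row in table]


# Two fake 4x4 "cameras", filled with 0s and 1s so the seam is easy to spot.
frames = [[[i] * 4 for _ in range(4)] for i in range(2)]
table = build_calibration_map(out_w=8, out_h=4, n_cams=2, cam_w=4, cam_h=4)
panorama = stitch(frames, table)
print(panorama[0])
```

The catch is the same one described above: the table is fixed, so it can only be right for one scene distance. Anything much closer than that distance lands on the wrong output pixel, and that is your seam.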

Finally, image quality. According to the published specs, this camera spits out some high-quality streams: Cinema DNG, 10- or 12-bit color depth, 4k at 30 or 60 fps. What does that mean in layperson’s terms? Well, it should have good color; the bit depth means you shouldn’t have banding in the sky, like you’d get with the 8-bit files you get off a GoPro. Capture bit depth is important, particularly if you intend to do any color grading on the footage in post, but note that how the color information gets packed into the file matters at least as much. For example, a 10-bit log file can hold more usable shadow detail than a 16-bit linear file, because a log curve spends its code values more evenly across the stops, so you can’t just go on numbers alone.
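Here is a rough way to see the log-versus-linear point. It is a sketch under two simplifying assumptions of mine: the log curve is an idealized pure log spread evenly over 12 stops (real camera log curves differ), and the linear file gives half its remaining code values to each successive stop below clipping.

```python
def linear_codes_in_stop(bits: int, stops_below_clip: int) -> float:
    """Code values a linear encoding spends on one stop.

    stops_below_clip=1 is the brightest stop, which gets half of all codes;
    every stop below that gets half as many again.
    """
    return 2 ** bits / 2 ** stops_below_clip


def log_codes_per_stop(bits: int, total_stops: int) -> float:
    """Code values per stop for an idealized pure-log curve (an assumption)."""
    return 2 ** bits / total_stops


for stop in (1, 6, 12):
    print(stop,
          linear_codes_in_stop(16, stop),
          round(log_codes_per_stop(10, 12), 1))
```

Under those assumptions, the 16-bit linear file lavishes 32,768 code values on the brightest stop but has only 16 left for the twelfth stop down, while the 10-bit log file still has about 85 for every stop.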

(“Bit depth” just refers to how many bits are applied to each color channel in an RGB image. Most consumer cameras are 8 bit, which means you have 256 different values each for red, green, and blue, or 16 million different color values. Your monitor is 8-bit or lower; the extra bits in a professional video or still image are useful for capturing higher dynamic range, and for adjusting the image in post without it showing artifacts… so you have more creative and technical control over the final image.)
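If you want to check the arithmetic for the other bit depths, it is a one-liner. This is only a sketch of the counting, with function names I made up:

```python
def levels_per_channel(bits: int) -> int:
    """Distinct values one color channel can take at a given bit depth."""
    return 2 ** bits


def total_colors(bits: int, channels: int = 3) -> int:
    """Distinct RGB combinations at a given per-channel bit depth."""
    return levels_per_channel(bits) ** channels


for bits in (8, 10, 12):
    print(bits, levels_per_channel(bits), total_colors(bits))
```

So 10-bit gives 1,024 levels per channel and 12-bit gives 4,096, versus 256 for 8-bit.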

Now, exactly how the camera captures those bits matters a lot; not all bits are created equal. For example, the quality of the A/D converter (and its bit depth, which is often higher than the final, captured bit depth) matters, as do the circuitry and algorithms that mathematically squish and squash the voltage into the bits of a recorded file. In other words, we can’t know until we see it in action. So naturally, we speculate: Martin declined to say much about the sensors, except that they’re global shutter. But there are some test videos up.

Granted, they’re test images from a prototype. Even so, they look somewhat flat (which is good, actually!), though the sky blows out rather badly, going cyan around the clipped highlights, which suggests clipping in the blue channel. I’d expect the same from a GoPro, though, and it’s hard to tell what the file might look like when graded (most GoPro settings actually apply a grade before recording the file, FYI); I suspect there’s more color information there than the videos suggest, and because the rest of the image is so flat it’s clear there hasn’t been color work done on it yet.

Alternatively, we can work backwards from some other sensor specs: Martin mentioned a native ISO of ‘up to 3200,’ and given the 4k pixels are distributed across the entire sphere, this suggests each sensor is probably no higher resolution than 1920 x 1080 or so. (Or 1920 x 1440 if it’s 4:3.) This, combined with the relatively high ISO, suggests large-ish pixel wells, which tend to correlate with lower noise and higher dynamic range… probably not the ~5 micron pixels of professional cameras, but probably larger than the GoPro’s ~1.6 micron pixels. (Your choice of sensor size and available optics are pretty limited: camera manufacturers are often ordering off of a menu for these things, so they’re typically pretty standard. In other words, this speculation isn’t quite as wild as it seems.)

The camera can also do high dynamic range imaging (HDR) by exposing each frame more than once. For a double-exposure, this halves the effective frame rate, since the camera is recording two frames for each output frame — one that’s exposed for shadows, and the other for highlights. This can mean the highlights and shadows may not be perfectly in sync for fast-moving subjects, by the way, so be wary of motion artifacts when using sequential-exposure HDR like Sphericam 2’s. (Red’s Epic/Scarlet class cameras have the same feature, and the Red site has a good explanation of the effect and its artifacts here.) Incidentally, Sphericam also claims higher HDR ratios by stacking even more exposures if you want a timelapse effect (because you’re effectively cutting your frame rate by the same multiple).
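The frame-rate cost and the motion problem are both simple arithmetic. In the sketch below, the 60 fps capture rate comes from the published specs, but the subject speed is an assumption of mine and the function names are invented for the example.

```python
def hdr_output_fps(capture_fps: float, exposures_per_frame: int) -> float:
    """Output frame rate when each output frame is built from several captures."""
    return capture_fps / exposures_per_frame


def exposure_gap_ms(capture_fps: float) -> float:
    """Time between the starts of two consecutive exposures, in milliseconds."""
    return 1000.0 / capture_fps


def motion_between_exposures_px(speed_px_per_s: float, capture_fps: float) -> float:
    """How far a moving subject travels between the shadow and highlight exposures."""
    return speed_px_per_s / capture_fps


print(hdr_output_fps(60, 2))                  # double exposure at 60 fps
print(exposure_gap_ms(60))
print(motion_between_exposures_px(600, 60))   # assumed subject speed: 600 px/s
```

At 60 fps capture, a double exposure leaves you with 30 fps output, and a subject crossing the frame at the assumed 600 pixels per second moves about 10 pixels between the two exposures. Stack four exposures and you are down to 15 fps, which is where the timelapse caveat comes from.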

In addition to bit depth, the camera can capture at a pretty impressive bitrate: it records to six microSD cards simultaneously, and if you want to max it out, it can do a theoretical 2.4 Gbit/s. We thought the Jump array created a lot of data, at ~430 GB/hour, but the Sphericam 2 could theoretically record roughly 1 TB/hour. Better go invest in hard drives, I guess. There aren’t specific specs for an editing computer, though Martin notes that “a reasonably modern GPU that is OpenCL compatible will be helpful.” Like Google Jump, the cost of postproduction could easily outpace the cost of the camera. Pro tip: don’t blow your whole budget on a camera, you’ve got to be able to do something with the footage, too.
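The storage math is easy to check yourself. This sketch assumes the 2.4 Gbit figure is per second, uses decimal units (1 GB = 8 Gbit, 1 TB = 1,000 GB), and assumes the load is split evenly across the six cards; the function names are mine.

```python
def gb_per_hour(gbit_per_s: float) -> float:
    """Convert a bitrate in gigabits per second to gigabytes per hour."""
    return gbit_per_s / 8 * 3600


def mb_per_s_per_card(gbit_per_s: float, cards: int) -> float:
    """Sustained write speed each card needs, assuming an even split."""
    return gbit_per_s * 1000 / 8 / cards


print(gb_per_hour(2.4))             # whole camera, per hour
print(mb_per_s_per_card(2.4, 6))    # per microSD card
print(gb_per_hour(2.4) / 430)       # versus the ~430 GB/hour Jump figure
```

That comes to about 1,080 GB per hour at the theoretical maximum, or roughly two and a half times the Jump figure, with each card needing to sustain around 50 MB/s of writes.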

Finally, a video on the Sphericam website hints at sensors for image stabilization. Martin confirmed they’re working on it, combining gyros, accelerometers, and image-based analysis to stabilize the image. (The sample videos have not used this technology, so there are some jitters visible.) This would be a huge deal, because unstable VR video can be sickening.

So, global synchronized shutters, live stitching, high-quality color and high-bitrate capture, and possibly stabilization, all for a relatively affordable price of $1,500 or so for pre-orders (post-crowdfunding price TBA). The only downside? You have to wait until December, or October if you’re feeling spendy and want to spring for the $2,500 ‘pioneer’ package.

Then again, if you’re really feeling spendy, you can always just get it gold-plated: