In many virtual reality settings, true-to-life realism isn’t a baseline requirement; Convrge is a fantastic example of how extremely simple avatars can be incredibly effective. However, when it comes to a more authentic presence in virtual worlds, especially in professional settings, it is important that your avatar actually looks like you.

That’s what companies like xxArray are trying to achieve. Using an array of 90 Nikon cameras, xxArray can capture your likeness in full 3D in less than one second. You simply stand in the center of the array and stretch your arms out, the flash goes off, and the capture is complete. This isn’t quite magic yet: producing the final model still takes a bit of post-production work, but the results are pretty incredible.

What xxArray is doing is not a new process; it has been used professionally in the movie industry for years. But the technology has finally reached the point where it can be brought to consumers through installations (imagine, for example, going to an xxArray photo booth at the mall to get yourself scanned).

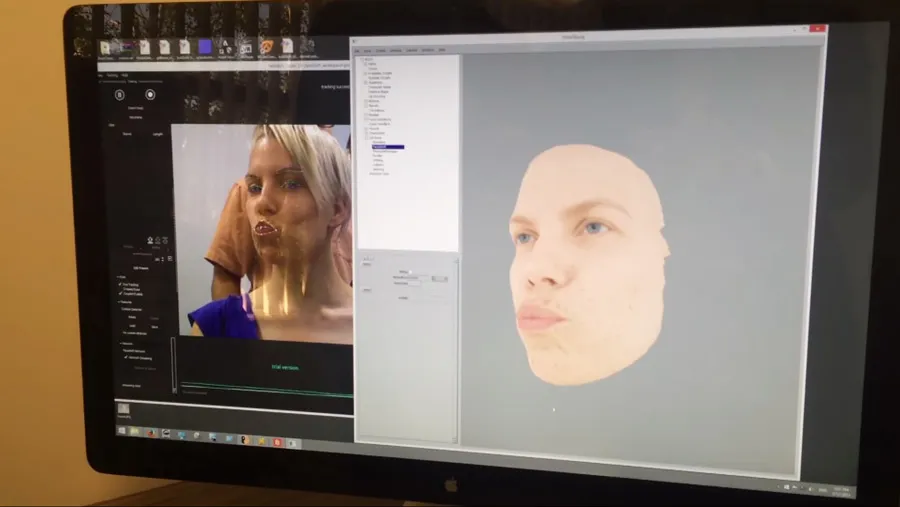

These avatars will also have to move accurately, not just look photorealistic. That’s where technologies like FaceShift and SmartBody, an Army-funded research project out of USC’s Institute for Creative Technologies, come into play. Using FaceShift’s markerless motion capture, which relies on depth cameras such as the Kinect, SmartBody translates the captured movement onto the model by mixing the model’s blend shapes together (along with some fancy shaders), producing realistic-looking facial motion. The result is the video above, which is still a work in progress.
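For readers curious what "mixing blend shapes" means in practice, here is a minimal sketch of the common linear (delta) blend shape technique: each shape is a full copy of the face mesh in one expression, and the final face is the neutral mesh plus a weighted sum of each shape's offset from neutral. This is an illustration under assumptions, not SmartBody’s or FaceShift’s actual code; the function name `blend_shapes`, the toy two-vertex mesh, and the shape names `jaw_open` and `smile` are hypothetical, and the weights stand in for the kind of per-frame values a face tracker might output.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def blend_shapes(neutral: Sequence[Vec3],
                 targets: Sequence[Sequence[Vec3]],
                 weights: Sequence[float]) -> List[Vec3]:
    """Hypothetical helper: linear (delta) blend shape mix.

    neutral: rest-pose vertex positions.
    targets: one full mesh per blend shape, same vertex order as neutral.
    weights: one weight per blend shape (assumed to come from a tracker).
    """
    if len(targets) != len(weights):
        raise ValueError("need exactly one weight per blend shape")
    result = []
    for v, base in enumerate(neutral):
        x, y, z = base
        for target, w in zip(targets, weights):
            tx, ty, tz = target[v]
            # Add this shape's offset from the neutral pose, scaled by its weight
            x += w * (tx - base[0])
            y += w * (ty - base[1])
            z += w * (tz - base[2])
        result.append((x, y, z))
    return result


# Toy example: a two-vertex "face" with two hypothetical shapes.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
jaw_open = [(0.0, -1.0, 0.0), (1.0, 0.0, 0.0)]  # moves vertex 0 down
smile = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0)]      # moves vertex 1 up
print(blend_shapes(neutral, [jaw_open, smile], [0.5, 1.0]))
# prints [(0.0, -0.5, 0.0), (1.0, 1.0, 0.0)]
```

Because the mix is linear, a tracker only has to send a small vector of weights each frame rather than the whole mesh; the shaders then handle the look of skin on top of the deformed geometry.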


There are still issues to solve when it comes to capturing facial movement inside an HMD, but it is an active area of research. One project in particular out of USC, in collaboration with Oculus, shows some pretty promising results.

The project uses a short-range RGB-depth camera mounted on the front of the headset to track the movements of the mouth. The system also uses a set of capacitive sensors embedded in the foam padding to detect muscle movement around the eyes. Apart from the lack of eye tracking, it appears to be a fairly effective solution, although I doubt the general public is down with the anglerfish look.

We may not yet be at the point where all of these technologies converge at consumer scale, and not just for design reasons, but we are getting very close. Depth cameras are beginning to be integrated into laptops, phones aren’t far behind, and Oculus and its bedfellow Microsoft are both doing a lot of advanced computer vision research. With the social push Facebook and Oculus are bound to make in the coming years, it would be surprising not to see this kind of technology reach consumers in the not-too-distant future. Consumer expectations are set high, but technology like this gives us reason to think they can be met.